Paddle Reinforcement Learning: From Beginner to Practice (Day 1)

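Introduction to Reinforcement Learning

Definition: Reinforcement learning (RL) is a field of machine learning concerned with how an agent should act in an environment so as to maximize its expected cumulative reward.

Core idea: an agent learns inside an environment. Based on the environment's state (or the observation it perceives), the agent takes an action, and the reward fed back by the environment guides it toward better actions. This loop can be summarized by the figure below:

Note: what the agent receives from the environment is sometimes called the state and sometimes the observation. Strictly speaking, the state describes the full, global situation, while an observation is a partial, local view. The distinction matters in multi-agent environments, but the environments we meet when starting out are not that complex, so for now the two terms can be treated as equivalent.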
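To make the loop concrete, here is a minimal sketch of one episode of agent-environment interaction. ToyEnv, run_episode and the two policies are hypothetical names invented for illustration (they are not part of gym or PARL); reset()/step() only mimic the classic gym convention in which step() returns (observation, reward, done, info).

```python
import random


class ToyEnv:
    """Hypothetical 1-D environment (illustration only, not from gym/PARL).

    The agent starts at position 0 and must reach `goal`. Action 1 moves
    right, action 0 moves left. Every step costs -1 reward except the
    step that reaches the goal (reward 0). The episode also ends after
    `max_steps` steps.
    """

    def __init__(self, goal=3, max_steps=20):
        self.goal = goal
        self.max_steps = max_steps

    def reset(self):
        self.pos = 0
        self.steps = 0
        return self.pos  # initial state/observation

    def step(self, action):
        self.pos += 1 if action == 1 else -1
        self.steps += 1
        reached = self.pos == self.goal
        reward = 0.0 if reached else -1.0
        done = reached or self.steps >= self.max_steps
        return self.pos, reward, done, {}  # classic gym-style 4-tuple


def run_episode(env, policy):
    """One episode of the loop: observe the state, act, receive a reward."""
    obs = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(obs)                     # agent chooses an action
        obs, reward, done, _ = env.step(action)  # environment responds
        total_reward += reward                   # feedback used for learning
    return total_reward


def always_right(obs):
    return 1


def random_policy(obs):
    return random.choice([0, 1])


print(run_episode(ToyEnv(), always_right))  # -2.0
print(run_episode(ToyEnv(), random_policy))
```

A learning agent would use the collected rewards to improve its policy; the CartPole demo later in this post does exactly that with a neural-network policy.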

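Reinforcement Learning vs. Supervised Learning

- Reinforcement learning, supervised learning and unsupervised learning are three distinct areas of machine learning, and each of them overlaps with deep learning.

- Supervised learning learns a mapping from inputs to outputs, e.g. classification and regression problems.

- Unsupervised learning mainly looks for hidden structure in data, e.g. clustering problems.

- Reinforcement learning learns, through interaction with an environment, to find the best decision-making strategy.

- Supervised learning addresses perception (recognition) problems; reinforcement learning addresses decision-making problems.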

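Introduction to Environment Tools

Common reinforcement learning tools

OpenAI: Gym, Baselines, mujoco-py, Retro

Gym project: https://github.com/openai/gym (a collection of RL environments behind a common interface)

Baselines project: https://github.com/openai/baselines (implementations of standard RL algorithms)

mujoco-py project: https://github.com/openai/mujoco-py (Python bindings for the MuJoCo physics engine, used to simulate robotics, biomechanics, graphics and animation)

Retro project: https://github.com/openai/retro (wraps classic video games, with their rendered screens, as RL environments)

DeepMind: pysc2, Lab

pysc2 project: https://github.com/deepmind/pysc2 (an environment for training RL agents on StarCraft II)

Lab project: https://github.com/deepmind/lab (a suite of 3D game-like environments)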

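Introduction to the PARL Library

Project: https://github.com/PaddlePaddle/PARL  Documentation: https://parl.readthedocs.io/en/latest/index.html

Reproducibility: we provide high-quality implementations of mainstream reinforcement learning algorithms that strictly reproduce the metrics reported in the corresponding papers.

Large-scale parallelism: the framework supports concurrent computation on up to tens of thousands of CPUs, as well as training RL models on multiple GPUs.

Reusability: users do not need to reimplement algorithms themselves; by reusing the algorithms the framework provides, classic RL algorithms can easily be applied to concrete scenarios.

Extensibility: when researching a new algorithm, users can quickly implement it by inheriting from the base classes we provide.

Simple, easy-to-use interfaces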

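Model interface

```python
import copy

import parl


class Policy(parl.Model):
    def __init__(self):
        # One fully connected layer with a 12-way softmax output
        self.fc = parl.layers.fc(size=12, act='softmax')

    def policy(self, obs):
        out = self.fc(obs)
        return out


policy = Policy()
# A Model can be deep-copied (e.g. to build a target network for DQN)
copied_policy = copy.deepcopy(policy)
```

Algorithm interface

```python
# Model stands for any user-defined parl.Model subclass
model = Model()
dqn = parl.algorithms.DQN(model, lr=1e-3)
```

Agent interface

```python
class MyAgent(parl.Agent):
    def __init__(self, algorithm, act_dim):
        super(MyAgent, self).__init__(algorithm)
        self.act_dim = act_dim
```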

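Now let's look at a simple demo: a policy-gradient agent trained on CartPole, written with the PaddlePaddle 1.x fluid dygraph (imperative) API and the classic gym API.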

```python
import numpy as np
import gym
import paddle.fluid as fluid
import paddle.fluid.layers as layers
from parl.utils import logger
```

```python
# Algorithm: policy gradient
class PolicyGradient(object):
    def __init__(self, model, lr):
        self.model = model
        # Use Adam as the optimizer
        self.optimizer = fluid.optimizer.Adam(learning_rate=lr)

    def predict(self, obs):
        # Feed the observation into the model to get action probabilities
        obs = fluid.dygraph.to_variable(obs)
        obs = layers.cast(obs, dtype='float32')
        return self.model(obs)

    def learn(self, obs, action, reward):
        obs = fluid.dygraph.to_variable(obs)
        obs = layers.cast(obs, dtype='float32')
        # Probability of each action under the current policy
        act_prob = self.model(obs)
        action = fluid.dygraph.to_variable(action)
        reward = fluid.dygraph.to_variable(reward)
        # Rewards come from numpy as float64; match the model's float32
        reward = layers.cast(reward, dtype='float32')
        # cross_entropy with the taken action as label gives -log pi(a|s)
        neg_log_prob = layers.cross_entropy(act_prob, action)
        # Weight -log pi(a|s) by the reward-to-go and average over the episode
        cost = layers.reduce_mean(neg_log_prob * reward)
        # Backpropagate, update the model, then clear the gradients
        cost.backward()
        self.optimizer.minimize(cost)
        self.model.clear_gradients()
        return cost
```
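The loss built in learn() is the REINFORCE surrogate loss L = -(1/N) Σ_t log π(a_t|s_t) · G_t, where G_t is the reward-to-go: cross_entropy with the taken action as the label yields -log π(a_t|s_t), which is then weighted by G_t and averaged. Below is a NumPy sketch of the same computation, with no Paddle dependency; the function name policy_gradient_loss and the sample numbers are hypothetical.

```python
import numpy as np


def policy_gradient_loss(act_prob, actions, returns):
    """REINFORCE surrogate loss, computed like PolicyGradient.learn:
    cross_entropy(act_prob, action) * reward, then the mean.

    act_prob: (N, act_dim) softmax outputs of the policy network
    actions:  (N,) integer actions that were actually taken
    returns:  (N,) reward-to-go G_t for each step
    """
    # Cross-entropy with a hard label is -log pi(a_t | s_t)
    neg_log_prob = -np.log(act_prob[np.arange(len(actions)), actions])
    return np.mean(neg_log_prob * returns)


act_prob = np.array([[0.5, 0.5],
                     [0.8, 0.2]])
actions = np.array([0, 1])
returns = np.array([2.0, 1.0])
print(policy_gradient_loss(act_prob, actions, returns))
```

Minimizing this loss pushes up the probability of actions followed by large returns: if the policy assigned 0.9 and 0.8 to the taken actions instead, the loss would be much smaller.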

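```python
# Model: a simple two-layer fully connected network (DNN)
class CartpoleModel(fluid.dygraph.Layer):
    def __init__(self, name_scope, act_dim):
        # name_scope is used as a prefix for this layer's parameter names
        super(CartpoleModel, self).__init__(name_scope)
        hid1_size = act_dim * 10
        self.fc1 = fluid.dygraph.FC('fc1', hid1_size, act='tanh')
        # Softmax output: one probability per action
        self.fc2 = fluid.dygraph.FC('fc2', act_dim, act='softmax')

    def forward(self, obs):
        out = self.fc1(obs)
        out = self.fc2(out)
        return out
```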
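To see the shapes flowing through CartpoleModel, here is a NumPy re-implementation of its forward pass: 4 observation values, 20 tanh hidden units (act_dim * 10), and 2 softmax outputs. The random weights are placeholders, not trained parameters, and the function name cartpole_forward is hypothetical.

```python
import numpy as np


def softmax(x):
    # Subtract the row max for numerical stability
    z = np.exp(x - x.max(axis=-1, keepdims=True))
    return z / z.sum(axis=-1, keepdims=True)


def cartpole_forward(obs, w1, b1, w2, b2):
    """NumPy mirror of CartpoleModel.forward: fc1 (tanh) -> fc2 (softmax)."""
    hidden = np.tanh(obs @ w1 + b1)   # (N, hid1_size)
    return softmax(hidden @ w2 + b2)  # (N, act_dim)


OBS_DIM, ACT_DIM = 4, 2
HID1_SIZE = ACT_DIM * 10  # same sizing rule as CartpoleModel

rng = np.random.default_rng(0)
w1 = rng.normal(scale=0.1, size=(OBS_DIM, HID1_SIZE))
b1 = np.zeros(HID1_SIZE)
w2 = rng.normal(scale=0.1, size=(HID1_SIZE, ACT_DIM))
b2 = np.zeros(ACT_DIM)

obs = rng.normal(size=(3, OBS_DIM))  # a batch of 3 fake observations
probs = cartpole_forward(obs, w1, b1, w2, b2)
print(probs.shape, probs.sum(axis=1))
```

Each row of probs is a valid probability distribution over the two actions, which is what the agent's sample() and predict() consume.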

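```python
# Agent: wraps the algorithm and converts between numpy data and the model
class CartpoleAgent(object):
    def __init__(self, alg, obs_dim, act_dim):
        self.alg = alg          # learning algorithm (PolicyGradient)
        self.obs_dim = obs_dim  # dimension of the observation vector
        self.act_dim = act_dim  # number of discrete actions

    def sample(self, obs):
        # Add a batch dimension: (obs_dim,) -> (1, obs_dim)
        obs = np.expand_dims(obs, axis=0)
        act_prob = self.alg.predict(obs).numpy()
        act_prob = np.squeeze(act_prob, axis=0)
        # Sample an action according to its probability (used in training)
        act = np.random.choice(self.act_dim, p=act_prob)
        return act

    def predict(self, obs):
        obs = np.expand_dims(obs, axis=0)
        act_prob = self.alg.predict(obs).numpy()
        act_prob = np.squeeze(act_prob, axis=0)
        # Take the most probable action for this observation (used in testing)
        act = np.argmax(act_prob)
        return act

    def learn(self, obs, act, reward):
        # Reshape to (N, 1); cross_entropy expects int64 labels
        act = np.expand_dims(act, axis=-1).astype('int64')
        reward = np.expand_dims(reward, axis=-1)
        # Re-optimize the model using the rewards of the sampled actions
        cost = self.alg.learn(obs, act, reward)
        return cost
```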
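The difference between sample() and predict() is exploration versus exploitation: during training the agent draws actions in proportion to their probabilities, while at test time it greedily takes the most probable one. A quick NumPy check with a made-up probability vector [0.7, 0.3] (in CartPole, action 0 pushes the cart left); it uses NumPy's Generator.choice, which behaves like the np.random.choice call in sample():

```python
import numpy as np

# Hypothetical policy output for one observation: P(action 0)=0.7, P(action 1)=0.3
act_prob = np.array([0.7, 0.3])

rng = np.random.default_rng(42)
# Like sample(): draw actions according to their probabilities (exploration)
samples = rng.choice(len(act_prob), size=10000, p=act_prob)
# Like predict(): always take the most probable action (exploitation)
greedy = int(np.argmax(act_prob))

print("fraction of sampled action 0:", np.mean(samples == 0))  # close to 0.7
print("greedy action:", greedy)  # 0
```

Sampling keeps the less likely action in play so the policy can still discover that it is better; the greedy choice is only used once the policy is being evaluated.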

```python
# Training
OBS_DIM = 4  # CartPole: cart position, cart velocity, pole angle, pole angular velocity
ACT_DIM = 2  # push the cart left or right
LEARNING_RATE = 1e-3


def run_episode(env, agent, train_or_test='train'):
    obs_list, action_list, reward_list = [], [], []
    obs = env.reset()  # reset the environment (classic gym API, before 0.26)
    while True:
        # Record the observation, action and reward of every step
        obs_list.append(obs)
        if train_or_test == 'train':
            action = agent.sample(obs)
        else:
            action = agent.predict(obs)
        action_list.append(action)
        obs, reward, done, _ = env.step(action)
        reward_list.append(reward)
        if done:
            break
    return obs_list, action_list, reward_list
```

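```python
def calc_reward_to_go(reward_list):
    # Undiscounted reward-to-go G_t = r_t + r_{t+1} + ... + r_T,
    # accumulated from the end of the episode and written back in place
    for i in range(len(reward_list) - 2, -1, -1):
        reward_list[i] += reward_list[i + 1]
    return np.array(reward_list)
```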
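calc_reward_to_go walks backwards through the episode and overwrites reward_list in place. Below is a non-mutating sketch of the same computation. The gamma parameter is an addition that the demo does not use (gamma=1.0 reproduces calc_reward_to_go exactly); it only illustrates the common discounted variant G_t = r_t + γ·G_{t+1}. The name reward_to_go is hypothetical.

```python
import numpy as np


def reward_to_go(rewards, gamma=1.0):
    """Return G_t = sum_{k>=t} gamma**(k-t) * r_k for every step t.

    With gamma=1.0 this matches calc_reward_to_go, but the input list
    is left unchanged.
    """
    returns = np.zeros(len(rewards), dtype=np.float64)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


print(reward_to_go([1.0, 1.0, 1.0]))             # [3. 2. 1.]
print(reward_to_go([1.0, 1.0, 1.0], gamma=0.9))  # discounted variant
```

In CartPole every step yields reward 1, so the undiscounted G_t is simply the number of steps remaining in the episode: early actions in a long episode get the largest weight.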

```python
def main():
    # Create the environment
    env = gym.make('CartPole-v0')

    # Create the dygraph layers and the optimizer inside the guard
    with fluid.dygraph.guard():
        # The three parts: model, algorithm, agent
        model = CartpoleModel(name_scope='CartpoleModel', act_dim=ACT_DIM)
        alg = PolicyGradient(model, LEARNING_RATE)
        agent = CartpoleAgent(alg, OBS_DIM, ACT_DIM)

        for i in range(1000):  # 1000 training episodes
            # Collect one episode with the current policy
            obs_list, action_list, reward_list = run_episode(env, agent)
            if i % 10 == 0:
                logger.info("Episode {}, Reward Sum {}.".format(
                    i, sum(reward_list)))

            # Turn the episode into a training batch and take one update step
            batch_obs = np.array(obs_list)
            batch_action = np.array(action_list)
            batch_reward = calc_reward_to_go(reward_list)
            agent.learn(batch_obs, batch_action, batch_reward)

            # Evaluate the greedy policy every 100 episodes
            if (i + 1) % 100 == 0:
                _, _, reward_list = run_episode(
                    env, agent, train_or_test='test')
                total_reward = np.sum(reward_list)
                logger.info('Test reward: {}'.format(total_reward))


if __name__ == '__main__':
    main()
```

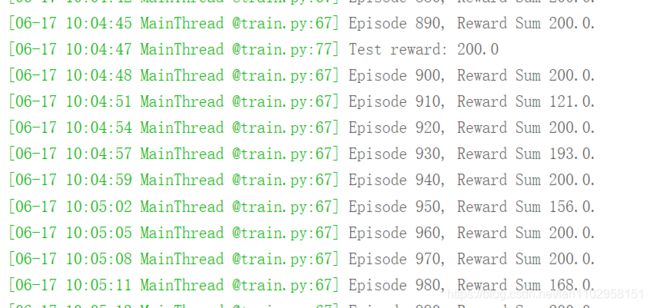

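The reward eventually converges to 200, the maximum possible return in CartPole-v0, whose episodes are capped at 200 steps.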