concepts:

kubectl/dashboard/client.go/client.py, apiserver, kube controller manager, informer, reflector, work queue, resource, garbage collector, graph builder, node

An interactive outline diagram is available at the link below:

https://www.processon.com/out...

apiserver process flow:

pre:

GenericAPIServer.InstallAPIGroup

|

APIGroupVersion.InstallREST

|

APIInstaller.Install

|

registerResourceHandlers

|

actions = appendIf(actions, action{"DELETE", itemPath, nameParams, namer, false}, isGracefulDeleter)

|

discovery.NewAPIVersionHandler.AddToWebService
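
The appendIf step in the pre flow registers the DELETE route only when the storage supports graceful deletion. A minimal sketch of that conditional registration follows; the two-field action struct and the sample path are simplifications assumed for illustration, not the installer's real types.

```go
package main

import "fmt"

// action is a hypothetical, trimmed-down stand-in for the installer's
// route description (the real one carries params, a namer, and more).
type action struct {
	Verb string
	Path string
}

// appendIf appends a to actions only when shouldAppend is true.
func appendIf(actions []action, a action, shouldAppend bool) []action {
	if shouldAppend {
		actions = append(actions, a)
	}
	return actions
}

func main() {
	// Illustrative item path; assumed, not copied from the apiserver.
	itemPath := "namespaces/{namespace}/pods/{name}"

	isGetter := true
	isGracefulDeleter := true
	isPatcher := false // assumption: this storage does not support PATCH

	var actions []action
	actions = appendIf(actions, action{Verb: "GET", Path: itemPath}, isGetter)
	actions = appendIf(actions, action{Verb: "DELETE", Path: itemPath}, isGracefulDeleter)
	actions = appendIf(actions, action{Verb: "PATCH", Path: itemPath}, isPatcher)

	for _, a := range actions {
		fmt.Println(a.Verb, a.Path)
	}
}
```

Only the verbs whose capability flag is true end up as routes, which is why a resource without delete support never reaches the DELETE handler chain.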

main flow:

endpoints.installer.restfulDeleteResource

|

handlers.DeleteResource

|

GracefulDeleter.Delete

|

generic.registry.store.Delete

|

rest.delete.BeforeDelete

|

objectMeta.SetDeletionTimestamp

|

objectMeta.SetDeletionGracePeriodSeconds

|

deletionFinalizersForGarbageCollection (add deletion finalizers)

|

updateForGracefulDeletionAndFinalizers

|

store.Storage.GuaranteedUpdate(deletionTimestamp, finalizers)

|

if deleteImmediately

|

e.Storage.Delete (delete from etcd)

|

finalizeDelete (runs the after-delete hook)

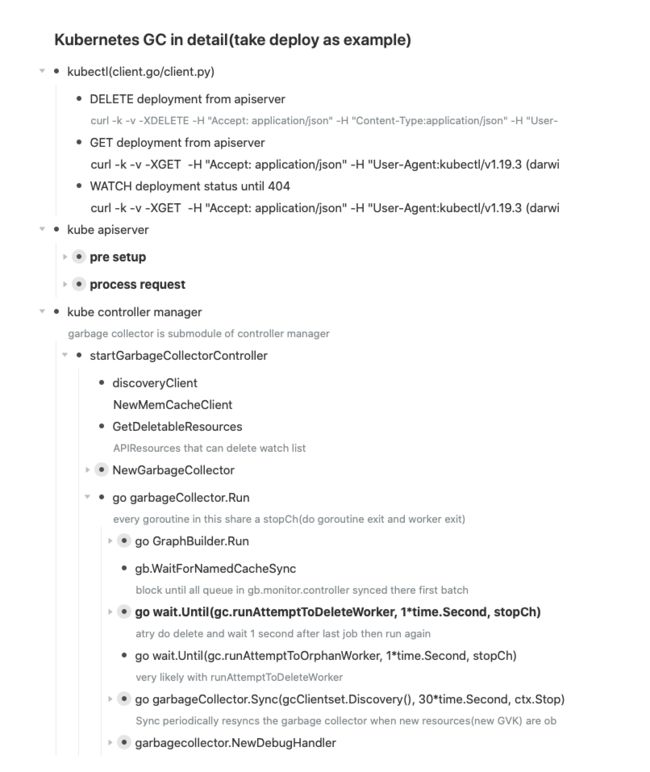

garbage collector process flow:

startGarbageCollectorController

|

discoveryClient — NewMemCacheClient

|

GetDeletableResources (API resources that support the delete, list, and watch verbs)

|

NewGarbageCollector

|

attemptToDelete (NewNamedRateLimitingQueue: per-item retry delay grows exponentially from 10 ms to 1000 s; the queue as a whole is limited by a token bucket at 10 QPS with a bucket size of 100)

attemptToOrphan(NewNamedRateLimitingQueue)

|

absentOwnerCache (LRU cache of UIDs with a maximum size of 500; changed to objectReference after 1.16.18)

|

gc(GarbageCollector)

|

gb(GraphBuilder)

|

graphChanges(NewNamedRateLimitingQueue)

|

gb.syncMonitors (attempts to create a monitor for each resource; called when gb starts or stops)

|

go garbageCollector.Run

|

stopCh (signals the goroutines and workers to exit)

|

go GraphBuilder.Run(stopCh)

|

startMonitors (start the informers)

|

gb.sharedInformers.Start(gb.stopCh)

|

go monitor.Run (start ListAndWatch on the resource)

|

gb.runProcessGraphChanges (a single-threaded worker)

|

processGraphChanges (PR: the return value of this function is unused; the "doesn't exist in the graph" Info log should be a warning)

|

gb.graphChanges.Get()

|

gb.uidToNode.Read (check if node exists)

|

if found

|

node.markObserved

|

switch !found and (event == add or event == update):

|

gb.insertNode(newNode)

|

addDependentToOwners: register the current node as a dependent of each owner listed in its ownerReferences

|

if the owner does not exist

|

attemptToDelete: verify whether the owner is a deleted node

|

gb.processTransitions: check whether the object is being deleted, because the reflector may combine the create and delete events into one event (PR: maybe these events should not be combined)

switch found and (event == add or event == update):

|

referencesDiffs — PR TODO: profile this function to see if a naive N^2 algorithm performs better when the number of references is small.

switch event == delete

|

removeNode

|

uidToNode.Delete

|

removeDependentFromOwners(from uidToNode)

|

gb.attemptToDelete.Add(dependents — children)

|

gb.attemptToDelete.Add(ownerNodes) (PR: checking and updating the owner node's dependents directly, rather than putting the owner into the attemptToDelete queue, may perform better and be more reasonable)

|

gb.WaitForNamedCacheSync (block until every queue in gb.monitor.controller has synced its first batch)

|

go wait.Until(gc.runAttemptToDeleteWorker, 1*time.Second, stopCh)

|

gc.attemptToDelete.Get()

|

gc.workerLock.RLock()

|

gc.attemptToDeleteItem

|

if item beingDeleted and has no dependents(children)

|

pop: just drop the item, since its owners were already processed before this node was enqueued

|

getObject from apiserver

|

if not found or uid does not match

|

dependencyGraphBuilder.enqueueVirtualDeleteEvent

|

if the item is deleting its dependents

|

attemptToDelete blockingDependents

|

removeFinalizer if no blockingDependents

|

classifyReferences

|

if solid owners exist, patch ownerReferences to remove the dangling and waitingForDependentsDeletion references

|

detect circular references

|

perform the actual deleteObject

|

if err or not observed

|

gc.attemptToDelete.AddRateLimited (put the item back on the queue with rate limiting)

|

go wait.Until(gc.runAttemptToOrphanWorker, 1*time.Second, stopCh)

|

runAttemptToOrphanWorker

|

orphanDependents (remove the owner from its dependents' ownerReferences)

|

go garbageCollector.Sync(gcClientset.Discovery(), 30*time.Second, ctx.Stop)

|

garbagecollector.NewDebugHandler(TODO)
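
The graph bookkeeping in the flow above (insertNode, addDependentToOwners, removeNode, and the enqueueing into attemptToDelete) can be illustrated with a small in-memory sketch. All types below are assumptions made for illustration: the real GraphBuilder uses a concurrent map, richer node state, and a rate-limited work queue rather than a slice.

```go
package main

import "fmt"

type uid string

// node is a hypothetical, simplified graph node.
type node struct {
	id         uid
	owners     []uid
	dependents map[uid]*node
	virtual    bool // true when the node was created only because a dependent referenced it
}

// graph is a hypothetical stand-in for the graph builder's state.
type graph struct {
	uidToNode       map[uid]*node
	attemptToDelete []uid // stands in for the rate-limited work queue
}

func newGraph() *graph {
	return &graph{uidToNode: map[uid]*node{}}
}

// insertNode stores the node and adds it as a dependent of each of its owners.
// An owner that has not been observed gets a virtual node and is queued so
// that a worker can verify whether it still exists.
func (g *graph) insertNode(id uid, owners ...uid) {
	n := &node{id: id, owners: owners, dependents: map[uid]*node{}}
	g.uidToNode[id] = n
	for _, o := range owners {
		owner, ok := g.uidToNode[o]
		if !ok {
			owner = &node{id: o, dependents: map[uid]*node{}, virtual: true}
			g.uidToNode[o] = owner
			g.attemptToDelete = append(g.attemptToDelete, o)
		}
		owner.dependents[id] = n
	}
}

// removeNode drops the node, detaches it from its owners, and queues both
// its dependents and its owners for the attemptToDelete worker.
func (g *graph) removeNode(id uid) {
	n, ok := g.uidToNode[id]
	if !ok {
		return
	}
	delete(g.uidToNode, id)
	for _, o := range n.owners {
		if owner, ok := g.uidToNode[o]; ok {
			delete(owner.dependents, id)
		}
	}
	for depID := range n.dependents {
		g.attemptToDelete = append(g.attemptToDelete, depID)
	}
	g.attemptToDelete = append(g.attemptToDelete, n.owners...)
}

func main() {
	g := newGraph()
	g.insertNode("deployment")
	g.insertNode("replicaset", "deployment")
	g.insertNode("pod", "replicaset")

	g.removeNode("replicaset")
	fmt.Println("queued for attemptToDelete:", g.attemptToDelete)
}
```

Queueing the dependents lets the worker notice that their owner is gone, and queueing the owners lets a foreground-deleting owner re-check whether it is still blocked by dependents.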

possible PRs:

processGraphChanges (PR: the return value of this function is unused; the "doesn't exist in the graph" Info log should be a warning)

gb.processTransitions: check whether the object is being deleted, because the reflector may combine the create and delete events into one event (PR: maybe these events should not be combined)

referencesDiffs — PR TODO: profile this function to see if a naive N^2 algorithm performs better when the number of references is small.

gb.attemptToDelete.Add(ownerNodes) (PR: checking and updating the owner node's dependents directly, rather than putting the owner into the attemptToDelete queue, may perform better and be more reasonable)

// TODO: attemptToOrphanWorker() routine is similar. Consider merging

TODO: It's only necessary to talk to the API server if the owner node