[Crawler in Practice] 8. Basic Python Web Crawling: Targeted Stock Data Crawler (MOOC Study Notes)

Targeted Stock Data Crawler

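- 1. Introduction to the "Targeted Stock Data Crawler" example
  - (1) Functional description
  - (2) Understanding the site selection process
  - (3) Program structure design
- 2. Writing the targeted stock data crawler
- 3. Summary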

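1. Introduction to the "Targeted Stock Data Crawler" example

(1) Functional description

- Goal: obtain the names and trading information of all stocks listed on the Shanghai Stock Exchange and the Shenzhen Stock Exchange
- Output: save the results to a file

Technical approach: requests-bs4-re

Candidate data sites:

- Sina Stock (新浪股票): http://finance.sina.com.cn/stock/
- Baidu Stock (百度股票): https://gupiao.baidu.com/stock/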

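(2) Understanding the site selection process

Selection criteria: the stock information must be present statically in the HTML page rather than generated by JavaScript, and the site must not restrict crawling through the Robots protocol.

Selection method: the browser's developer tools (F12), viewing the page source, and so on.

Selection mindset: do not get stuck on one particular site; try several information sources.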
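
The Robots criterion above can be checked in code rather than by eye. The following is a minimal sketch using the standard library's `urllib.robotparser`; the helper name `is_allowed`, the rules in `sample_rules`, and the `example.com` URLs are made up for illustration and do not describe any of the sites listed here.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, url, user_agent="*"):
    """Return True if the given robots.txt lines permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # parse rules already in memory; no network access
    return parser.can_fetch(user_agent, url)


# Made-up rules for a hypothetical site, only to show the check.
sample_rules = [
    "User-agent: *",
    "Disallow: /private/",
]

print(is_allowed(sample_rules, "https://example.com/stock/sz002439.html"))
print(is_allowed(sample_rules, "https://example.com/private/data.html"))
```

For a live site, the same parser can load the rules itself with `set_url()` followed by `read()`, at the cost of one extra request.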

The data sites finally chosen are:

Stock list:

- East Money (东方财富网): http://quote.eastmoney.com/stocklist.html

Individual stock information:

- Baidu Stock (百度股票): https://gupiao.baidu.com/stock/
- A single stock: https://gupiao.baidu.com/stock/sz002439.html

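(3) Program structure design

Step 1: get the stock list from East Money

Step 2: for each stock in the list, fetch its information from Baidu Stock

Step 3: store the results in a file

The information for each stock is kept as key-value pairs.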
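
As a rough illustration of the key-value idea and of step 3, the sketch below keeps one stock's fields in a dict and appends it to a text file. The function name `save_stock_info`, the keys, and the values are all placeholders chosen for this example, and the temporary output path is only there so the snippet can run anywhere.

```python
import os
import tempfile


def save_stock_info(info, fpath):
    """Append one stock's key-value pairs to a text file, one dict per line."""
    with open(fpath, "a", encoding="utf-8") as f:
        f.write(str(info) + "\n")


# Hypothetical record; the keys and values are placeholders, not real quotes.
info = {"code": "002439", "name": "example stock", "latest_price": "10.00"}
info.update({"open": "9.80"})  # further fields can be merged in as they are parsed

out_path = os.path.join(tempfile.mkdtemp(), "stock_info.txt")
save_stock_info(info, out_path)

with open(out_path, encoding="utf-8") as f:
    saved_line = f.readline().strip()
print(saved_line)
```

A dict suits this job because different pages may expose different fields, and new keys can be added without changing the storage code.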

2. Writing the targeted stock data crawler

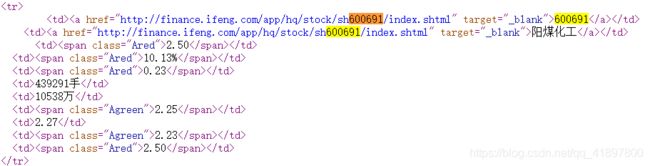

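It turned out that the East Money page used in the video no longer exposes usable static data, and Baidu Stock can no longer be opened. Following the post "[MOOC][Example] Targeted Stock Data Crawling", the two sites below are used instead:

- Individual stock information: https://www.laohu8.com/stock/600210
- Stock list: http://app.finance.ifeng.com/list/stock.php?t=ha&f=chg_pct&o=desc&p=1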

The full program follows.

    import requests
    from bs4 import BeautifulSoup
    import re
    import traceback

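    def getHTMLText(url):
        """Fetch a page and return its text, or '' if the request fails."""
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            return r.text
        except requests.RequestException:
            print('Error!')
            return ''  # callers test for an empty string, so never return None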

    def getStockList(lst, stockURL):
        """Collect six-digit Shanghai stock codes from the links on the list page."""
        html = getHTMLText(stockURL)
        if not html:
            return
        soup = BeautifulSoup(html, 'html.parser')
        for a in soup.find_all('a'):
            href = a.attrs.get('href', '')
            match = re.search(r'sh(\d{6})', href)
            if match is None:
                continue  # not a stock link
            code = match.group(1)
            # Skip a code that repeats the previous entry (consecutive duplicate links).
            if not lst or lst[-1] != code:
                lst.append(code)
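
`getStockList` only skips a code when it repeats the entry directly before it. If the same code could also reappear later in the page, a set of already-seen codes is one way to handle that. The sketch below is an alternative under that assumption, not the course's method; `extract_codes` and the `sample_hrefs` values are made up, and it works on plain href strings so it runs without any network access.

```python
import re

CODE_PATTERN = re.compile(r"sh(\d{6})")


def extract_codes(hrefs):
    """Return the six-digit codes found in hrefs, without repeats, in first-seen order."""
    seen = set()
    codes = []
    for href in hrefs:
        match = CODE_PATTERN.search(href)
        if match is None:
            continue  # not a stock link
        code = match.group(1)
        if code not in seen:
            seen.add(code)
            codes.append(code)
    return codes


# Made-up hrefs for illustration; the real page's link format may differ.
sample_hrefs = [
    "/stock/sh600210/",
    "/stock/sh600210/",
    "/list/stock.php?t=ha",
    "/stock/sh600000/",
    "/stock/sh600210/",
]
print(extract_codes(sample_hrefs))
```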

def getStockInfo(lst, stockURL, fpath):

regex_symbol = r'\"symbol\":\"\d{6}\"'

regex_name = r'\"nameCN\":\".*?\"'

regex_latestPrice = r'\"latestPrice\":[\d\.]*'

count = 0

total_list = []

for stock in lst:

url = stockURL + stock

html = getHTMLText(url)

try:

if html == '':

continue

stockInfo = []

for match in re.finditer(regex_symbol, html):

stockInfo.append(match.group(0).replace("\"symbol\":",""))

for match in re.finditer(regex_name, html):

stockInfo.append(match.group(0).replace("\"nameCN\":",""))

for match in re.finditer(regex_latestPrice, html):

stockInfo.append(match.group(0).replace("\"latestPrice\":",""))

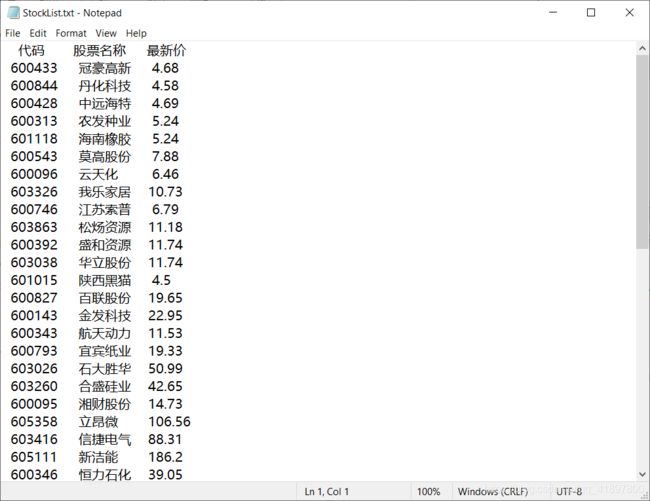

with open(fpath, 'a', encoding='utf-8') as f:

tmpl = '{0:^10}{1:{3}^6}{2:^8}\n'

if count == 0:

string = tmpl.format('代码','股票名称','最新价',chr(12288))

else:

string = tmpl.format(str(stockInfo[0]).replace('\"',''), str(stockInfo[1].replace('\"','')), str(stockInfo[2]),chr(12288))

f.write(string)

count += 1

except:

traceback.print_exc() # 输出详细的异常信息

continue
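
The field extraction in `getStockInfo` can be tried in isolation on a small string before running the whole crawl. The sketch below assumes the page source contains the JSON-style fields `symbol`, `nameCN`, and `latestPrice` that the regexes in the program look for; `parse_stock_fields` and the `sample_html` fragment are made up, and the values are not real quotes.

```python
import re

FIELD_PATTERNS = {
    "symbol": re.compile(r'"symbol":"(\d{6})"'),
    "nameCN": re.compile(r'"nameCN":"(.*?)"'),
    "latestPrice": re.compile(r'"latestPrice":([\d.]+)'),
}


def parse_stock_fields(html):
    """Return a dict of the fields found in html; fields with no match are omitted."""
    info = {}
    for key, pattern in FIELD_PATTERNS.items():
        match = pattern.search(html)
        if match is not None:
            info[key] = match.group(1)
    return info


# Made-up fragment in the shape the regexes expect; the values are not real quotes.
sample_html = '<script>{"symbol":"600210","nameCN":"示例股票","latestPrice":5.12}</script>'
print(parse_stock_fields(sample_html))
print(parse_stock_fields("<html></html>"))
```

Returning a dict with only the matched keys lets the caller decide what to do with an incomplete page instead of relying on an exception.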

    def main():
        stock_list_html = 'http://app.finance.ifeng.com/list/stock.php?t=ha&f=chg_pct&o=desc&p=1'
        stock_info_url = 'https://www.laohu8.com/stock/'
        output_file = 'E://Users/Yang SiCheng/PycharmProjects/Graduation_Project/StockList.txt'
        slist = []
        getStockList(slist, stock_list_html)
        getStockInfo(slist, stock_info_url, output_file)
        # print(slist)

    if __name__ == '__main__':
        main()

Running the program produces a txt file at the target path:

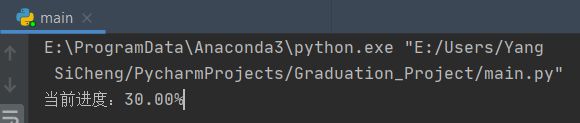
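
The columns in the output line up because the format string pads the name column with `chr(12288)`, the full-width space U+3000, which is as wide as a Chinese character. The sketch below shows the same template without the trailing newline; the row values are placeholders, not real data.

```python
tmpl = '{0:^10}{1:{3}^6}{2:^8}'
fullwidth_space = chr(12288)  # U+3000 IDEOGRAPHIC SPACE, as wide as a CJK character

# Made-up row; the name and price are placeholders.
row = tmpl.format('600210', '示例股票', '5.12', fullwidth_space)
# Header labels as used in the program: code, stock name, latest price.
header = tmpl.format('代码', '股票名称', '最新价', fullwidth_space)

print(header)
print(row)
```

Field `{1:{3}^6}` centers the name in six characters and takes its fill character from argument 3, while the code and price columns keep the default ASCII space.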

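The program spends most of its run time waiting. How can the user experience be improved?

- Speed: optimize encoding detection

r.apparent_encoding has to analyze the page text, which is slow. The encoding can instead be determined by hand once and set directly:

    code = 'utf-8'
    r.encoding = code

- Experience: add a dynamic progress display

\r moves the cursor back to the start of the current line, so each progress message overwrites the previous one.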

    with open(fpath, 'a', encoding='utf-8') as f:
        tmpl = '{0:^10}{1:{3}^6}{2:^8}\n'
        if count == 0:
            # Write the header once, before the first data row rather than instead of it.
            f.write(tmpl.format('代码', '股票名称', '最新价', chr(12288)))
        code, name, price = (str(s).replace('"', '') for s in stockInfo[:3])
        f.write(tmpl.format(code, name, price, chr(12288)))
    # '当前进度' means 'current progress'; '\r' redraws the percentage in place.
    print('\r当前进度:{:.2f}%'.format(count * 100 / len(lst)), end='')
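
The `\r` technique can be tried on its own, without any crawling. In the sketch below, `progress_line` and the `items` list are stand-ins made up for this example, and the short `time.sleep` call takes the place of the real request.

```python
import sys
import time


def progress_line(done, total):
    """Build a one-line progress message that starts with a carriage return."""
    return '\rProgress: {:.2f}%'.format(done * 100 / total)


items = ['600210', '600000', '600004', '600006']  # stand-ins for the stock list
for i, _ in enumerate(items, start=1):
    time.sleep(0.01)  # placeholder for the real request
    sys.stdout.write(progress_line(i, len(items)))
    sys.stdout.flush()  # show the update immediately instead of waiting for a newline
print()  # finish the line once the loop is done
```

Flushing matters here because output without a trailing newline may otherwise sit in the buffer, so the percentage would appear to jump rather than update smoothly.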

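3. Summary

Stock information was crawled and stored using the requests-bs4-re approach, with a dynamically updating progress display that shows how far the crawl has advanced.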