Docker Study Notes

Installing and Uninstalling Docker – Debian

Remove old versions

root@linux-PC:~# sudo apt-get remove docker docker-engine docker.io containerd runc

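Reading package lists... Done

Building dependency tree

Reading state information... Done

Package 'docker-engine' is not installed, so not removed

Note, selecting 'containerd.io' instead of 'containerd'

Package 'docker' is not installed, so not removed

Package 'docker.io' is not installed, so not removed

Package 'runc' is not installed, so not removed

The following packages were automatically installed and are no longer required:

aufs-dkms aufs-tools cgroupfs-mount

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 450 not upgraded.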

Set up the repository

Update the apt package index and install the packages that allow apt to use a repository over HTTPS:

root@linux-PC:~# sudo apt-get update

Hit:1 http://mirrors.aliyun.com/docker-ce/linux/debian stretch InRelease

Hit:2 https://community-packages.deepin.com/deepin apricot InRelease

Hit:3 http://uos-packages.deepin.com/printer eagle InRelease

Hit:4 https://cdn-package-store6.deepin.com/appstore eagle InRelease

Reading package lists... Done

root@linux-PC:~# sudo apt-get install apt-transport-https ca-certificates curl gnupg-agent software-properties-common

Reading package lists... Done

Building dependency tree

Reading state information... Done

apt-transport-https is already the newest version (1.8.2.2-1+dde).

ca-certificates is already the newest version (20190110).

curl is already the newest version (7.64.0-4+deb10u1).

gnupg-agent is already the newest version (2.2.12-1+deb10u1).

software-properties-common is already the newest version (0.96.20.2-2).

0 upgraded, 0 newly installed, 0 to remove and 450 not upgraded.

Download and add Docker's GPG key

root@linux-PC:~# curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/debian/gpg | sudo apt-key add -

OK

Verify that you now have the key with the fingerprint 9DC8 5822 9FC7 DD38 854A E2D8 8D81 803C 0EBF CD88 by searching for the last 8 characters of the fingerprint:

root@linux-PC:~# sudo apt-key fingerprint 0EBFCD88

pub   rsa4096 2017-02-22 [SCEA]

      9DC8 5822 9FC7 DD38 854A E2D8 8D81 803C 0EBF CD88

uid           [ unknown] Docker Release (CE deb) <docker@docker.com>

sub   rsa4096 2017-02-22 [S]

Add the Docker repository

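root@linux-PC:~# sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/debian stretch stable"

Traceback (most recent call last):

  File "/usr/bin/add-apt-repository", line 95, in <module>

    sp = SoftwareProperties(options=options)

  File "/usr/lib/python3/dist-packages/softwareproperties/SoftwareProperties.py", line 109, in __init__

    self.reload_sourceslist()

  File "/usr/lib/python3/dist-packages/softwareproperties/SoftwareProperties.py", line 599, in reload_sourceslist

    self.distro.get_sources(self.sourceslist)

  File "/usr/lib/python3/dist-packages/aptsources/distro.py", line 93, in get_sources

    (self.id, self.codename))

aptsources.distro.NoDistroTemplateException: Error: could not find a distribution template for Deepin/n/a

add-apt-repository fails here because this machine runs Deepin (a Debian-based distribution), for which add-apt-repository has no distribution template. The same repository line can be written to a sources file by hand instead:

root@linux-PC:~# echo "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/debian stretch stable" | sudo tee /etc/apt/sources.list.d/docker.list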

Install Docker Engine

Update the apt package index and install the latest version of Docker Engine and containerd:

root@linux-PC:~# sudo apt-get update

Hit:1 http://mirrors.aliyun.com/docker-ce/linux/debian stretch InRelease

Hit:2 https://community-packages.deepin.com/deepin apricot InRelease

Hit:4 https://cdn-package-store6.deepin.com/appstore eagle InRelease

Hit:3 http://uos-packages.deepin.com/printer eagle InRelease

Reading package lists... Done

root@linux-PC:~# sudo apt-get install docker-ce docker-ce-cli containerd.io

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

containerd.io docker-ce docker-ce-cli

3 upgraded, 0 newly installed, 0 to remove and 450 not upgraded.

Need to get 0 B/91.1 MB of archives.

After this operation, 43.2 MB of additional disk space will be used.

(Reading database ... 187646 files and directories currently installed.)

Preparing to unpack .../containerd.io_1.3.7-1_amd64.deb ...

Unpacking containerd.io (1.3.7-1) over (1.3.4) ...

dpkg: warning: unable to delete old directory '/usr/local': Directory not empty

Preparing to unpack .../docker-ce-cli_5%3a19.03.13~3-0~debian-stretch_amd64.deb ...

Unpacking docker-ce-cli (5:19.03.13~3-0~debian-stretch) over (5:19.03.8~3-0~debian-buster) ...

Preparing to unpack .../docker-ce_5%3a19.03.13~3-0~debian-stretch_amd64.deb ...

Unpacking docker-ce (5:19.03.13~3-0~debian-stretch) over (5:19.03.8~3-0~debian-buster) ...

Setting up containerd.io (1.3.7-1) ...

Setting up docker-ce-cli (5:19.03.13~3-0~debian-stretch) ...

Setting up docker-ce (5:19.03.13~3-0~debian-stretch) ...

Processing triggers for man-db (2.8.5-2) ...

Processing triggers for systemd (241.5+c1-1+eagle) ...

Verify that Docker Engine is installed correctly by running the hello-world image:

root@linux-PC:~# sudo docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

0e03bdcc26d7: Pull complete

Digest: sha256:8c5aeeb6a5f3ba4883347d3747a7249f491766ca1caa47e5da5dfcf6b9b717c0

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

 1. The Docker client contacted the Docker daemon.

 2. The Docker daemon pulled the "hello-world" image from the Docker Hub. (amd64)

 3. The Docker daemon created a new container from that image which runs the executable that produces the output you are currently reading.

 4. The Docker daemon streamed that output to the Docker client, which sent it to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

 https://hub.docker.com/

For more examples and ideas, visit:

 https://docs.docker.com/get-started/

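Registry mirror (download accelerator). The address below is a personal Aliyun accelerator URL; each Aliyun account gets its own in the Aliyun Container Registry console.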

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://29mfjo9k.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
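The same configuration step can be wrapped in a small function so it can be tried on a scratch file before touching /etc/docker. This is a sketch: `write_daemon_json` is a hypothetical helper, and like the `tee` command above it overwrites the target file, so any other settings already in daemon.json would have to be merged by hand.

```shell
#!/usr/bin/env bash
# Sketch: write a daemon.json that configures a single registry mirror.
# write_daemon_json is a hypothetical helper, not part of Docker.
# Usage: write_daemon_json <target-file> <mirror-url>
# Warning: the target file is overwritten.
write_daemon_json() {
  local target="$1" mirror="$2"
  if [[ -z "$target" || -z "$mirror" ]]; then
    echo "usage: write_daemon_json <target-file> <mirror-url>" >&2
    return 1
  fi
  mkdir -p "$(dirname "$target")" || return 1
  # Unquoted EOF so that $mirror is expanded inside the JSON text.
  cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

Run as root against /etc/docker/daemon.json with the mirror address above, then restart the daemon with `sudo systemctl restart docker`; `docker info` should then list the address under Registry Mirrors.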

Uninstall Docker

root@linux-PC:~# sudo apt-get purge docker-ce docker-ce-cli containerd.io

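Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

aufs-dkms aufs-tools cgroupfs-mount

Use 'sudo apt autoremove' to remove them.

The following packages will be REMOVED:

containerd.io* docker-ce* docker-ce-cli*

0 upgraded, 0 newly installed, 3 to remove and 450 not upgraded.

After this operation, 409 MB disk space will be freed.

Do you want to continue? [Y/n] Y

(Reading database ... 187633 files and directories currently installed.)

Removing docker-ce (5:19.03.13~3-0~debian-stretch) ...

Removing containerd.io (1.3.7-1) ...

Removing docker-ce-cli (5:19.03.13~3-0~debian-stretch) ...

Processing triggers for man-db (2.8.5-2) ...

(Reading database ... 187407 files and directories currently installed.)

Purging configuration files for docker-ce (5:19.03.13~3-0~debian-stretch) ...

Purging configuration files for containerd.io (1.3.7-1) ...

Processing triggers for systemd (241.5+c1-1+eagle) ...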

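# purge does not remove images, containers, volumes or custom configuration files; delete Docker's data directories by hand

root@linux-PC:~# sudo rm -rf /var/lib/docker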

root@linux-PC:~# sudo rm -rf /var/lib/containerd

Installing and Uninstalling Docker – CentOS

# Remove old versions

$ sudo yum remove docker \
                  docker-client \
                  docker-client-latest \
                  docker-common \
                  docker-latest \
                  docker-latest-logrotate \
                  docker-logrotate \
                  docker-engine

# Install the yum-utils package

$ sudo yum install -y yum-utils

# Set up the repository

$ sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install Docker Engine

$ sudo yum install docker-ce docker-ce-cli containerd.io

# Start Docker

$ sudo systemctl start docker

# Verify that Docker Engine is installed correctly by running the hello-world image

$ sudo docker run hello-world

# Registry mirror (download accelerator)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://29mfjo9k.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

Common Docker Commands

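Basic commands

docker version # show Docker version information

docker info # show system-wide information, including the number of images and containers

docker <command> --help # show help for a command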

Image commands

docker images: list all images on the local host

root@linux-PC:/# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

hello-world latest bf756fb1ae65 10 months ago 13.3kB

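# Explanation

REPOSITORY # repository the image comes from

TAG # image tag

IMAGE ID # image ID

CREATED # when the image was created

SIZE # image size

# Options

-a, --all # list all images (intermediate images are hidden by default)

-q, --quiet # show only image IDs

docker search: search Docker Hub for images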

root@linux-PC:/# docker search mysql

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

mysql MySQL is a widely used, open-source relation… 10115 [OK]

mariadb MariaDB is a community-developed fork of MyS… 3715 [OK]

docker pull: download an image

# Download an image: docker pull image-name[:tag]

root@linux-PC:/# docker pull nginx

Using default tag: latest # if no tag is given, it defaults to latest

latest: Pulling from library/nginx

bb79b6b2107f: Pull complete # images are downloaded layer by layer; this layering, based on a union file system, is at the core of Docker images

111447d5894d: Pull complete

a95689b8e6cb: Pull complete

1a0022e444c2: Pull complete

32b7488a3833: Pull complete

Digest: sha256:ed7f815851b5299f616220a63edac69a4cc200e7f536a56e421988da82e44ed8 # content digest of the image

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest # the fully qualified image reference

docker pull nginx == docker pull docker.io/library/nginx:latest
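The short name nginx expands to docker.io/library/nginx:latest because Docker fills in a default registry (docker.io), the library/ namespace for official images, and the latest tag. The bash sketch below mimics those defaults to make the rule concrete; `normalize_image_ref` is a hypothetical helper and a simplification that ignores digests (@sha256:...) and some corner cases of the real reference grammar.

```shell
#!/usr/bin/env bash
# Sketch: expand a short image name the way Docker applies its defaults.
# normalize_image_ref is hypothetical; digests are not handled.
normalize_image_ref() {
  local ref="$1" domain remainder tag=""
  # A ':' in the last path component separates the tag
  # (a ':' before the last '/' belongs to a registry port such as localhost:5000).
  local last="${ref##*/}"
  if [[ "$last" == *:* ]]; then
    tag="${last##*:}"
    ref="${ref%:*}"
  fi
  # The first component is a registry host if it contains '.' or ':' or is localhost.
  local first="${ref%%/*}"
  if [[ "$ref" == */* && ( "$first" == *.* || "$first" == *:* || "$first" == localhost ) ]]; then
    domain="$first"
    remainder="${ref#*/}"
  else
    domain="docker.io"
    remainder="$ref"
  fi
  # Official images on Docker Hub live in the "library/" namespace.
  if [[ "$domain" == "docker.io" && "$remainder" != */* ]]; then
    remainder="library/$remainder"
  fi
  printf '%s\n' "${domain}/${remainder}:${tag:-latest}"
}
```

For example, `normalize_image_ref mysql:5.7.32` prints docker.io/library/mysql:5.7.32, while a reference that already names a registry, such as the Aliyun repository used later in these notes, is left unchanged apart from a default tag.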

docker rmi: remove images

root@linux-PC:/# docker rmi -f <image-id> # remove the specified image

root@linux-PC:/# docker rmi -f <image-id> <image-id> <image-id> # remove several images

root@linux-PC:/# docker rmi -f $(docker images -aq) # remove all images

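Container commands

Create and start a container

docker run [options] image

# Options

--name # name for the container

-d # run in the background (detached mode)

-it # run interactively with a terminal, e.g. to look around inside the container

-p # publish a container port to the host, e.g. -p 8080:8080; accepted forms:

-p ip:hostPort:containerPort

-p hostPort:containerPort

-p containerPort

-P # publish all exposed ports to random host ports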
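The three -p forms differ only in which parts are spelled out: a missing host IP means all interfaces (0.0.0.0, as docker ps shows later), and a missing host port means Docker picks a random one. The hypothetical bash function below splits a -p value into those parts to show how each form is read; it is a sketch that also accepts the /udp or /tcp suffix but does not handle IPv6 host addresses.

```shell
#!/usr/bin/env bash
# Sketch: split a `docker run -p` value into its parts.
# parse_port_spec is hypothetical; IPv6 host addresses are not handled.
parse_port_spec() {
  local spec="$1" proto="tcp" ip="0.0.0.0" host="" container=""
  local -a parts
  # Optional protocol suffix, e.g. 53:53/udp
  if [[ "$spec" == */* ]]; then
    proto="${spec##*/}"
    spec="${spec%/*}"
  fi
  IFS=':' read -r -a parts <<< "$spec"
  case "${#parts[@]}" in
    1) container="${parts[0]}" ;;                                        # -p containerPort
    2) host="${parts[0]}"; container="${parts[1]}" ;;                    # -p hostPort:containerPort
    3) ip="${parts[0]}"; host="${parts[1]}"; container="${parts[2]}" ;;  # -p ip:hostPort:containerPort
    *) echo "unsupported -p value: $1" >&2; return 1 ;;
  esac
  # An empty host port (e.g. 127.0.0.1::80) also means "pick a random port".
  printf 'ip=%s host=%s container=%s/%s\n' "$ip" "${host:-random}" "$container" "$proto"
}
```

For instance, `parse_port_spec 127.0.0.1:3306:3306` reports a mapping that is reachable only from the host itself, which is a common choice for databases.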

# Test: start a container and open a shell in it

root@linux-PC:/# docker run -it nginx /bin/bash

root@37f7590f0b64:/#

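Exit a container

# Exit the container (this stops it, because the shell is the container's main process)

root@4fb6db5883fd:/# exit

exit

# Detach from the container without stopping it

Ctrl + P, then Ctrl + Q

List containers

docker ps # list the containers that are currently running

-a # list running containers plus those that ran before and have exited

-n=1 # list the n most recently created containers (here n=1)

-q # show only container IDs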

root@linux-PC:/# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

root@linux-PC:/# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4fb6db5883fd nginx "/docker-entrypoint.…" About a minute ago Exited (0) About a minute ago crazy_sinoussi

root@linux-PC:/#

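Remove containers

docker rm <container-id> # remove the given container; a running container cannot be removed unless forced with docker rm -f

docker rm -f $(docker ps -aq) # remove all containers, including running ones

docker ps -a -q | xargs docker rm # remove all stopped containers (running ones are refused without -f)

Start and stop containers

docker start <container-id> # start a stopped container

docker restart <container-id> # restart a container

docker stop <container-id> # stop a container gracefully (SIGTERM, then SIGKILL after a timeout)

docker kill <container-id> # kill a container immediately (SIGKILL by default)

Other common commands

Start a container in the background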

root@linux-PC:/# docker run -d centos

5bcbab940be78705b68bb93f15ecaa4d154af0f47417a456372d513b8655a498

root@linux-PC:/# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

root@linux-PC:/# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5bcbab940be7 centos "/bin/bash" 12 seconds ago Exited (0) 9 seconds ago hardcore_sammet

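# Problem: docker ps shows that the centos container has already stopped

# A container keeps running only as long as its main (foreground) process does. The centos image's default command is /bin/bash; started with -d and no terminal, bash reads end-of-input and exits at once, so the container stops.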
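A common way to keep such a container alive is to give it a long-running foreground command. The sketch below assumes a hypothetical container name `centos-keepalive` and a simple loop that prints a line every 5 seconds; the DOCKER variable exists only so the command can be printed instead of executed (DOCKER=echo) when trying it out.

```shell
#!/usr/bin/env bash
# DOCKER can be overridden (e.g. DOCKER=echo) to print the command instead of running it.
DOCKER="${DOCKER:-docker}"

# Start centos in the background with a foreground loop as its main process,
# so the container keeps running until it is stopped.
# run_detached_centos and the default name centos-keepalive are hypothetical.
run_detached_centos() {
  local name="${1:-centos-keepalive}"
  $DOCKER run -d --name "$name" centos \
    /bin/sh -c 'while true; do echo alive; sleep 5; done'
}
```

After `run_detached_centos`, `docker ps` should list the container as Up, and `docker logs -tf --tail 10 centos-keepalive` shows the alive lines with timestamps.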

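View logs

docker logs -tf --tail 10 <container-id> # -t: timestamps, -f: follow new output, --tail 10: start from the last 10 lines

docker logs -tf <container-id>

Show the processes running in a container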

root@linux-PC:/# docker top d25ab60789f3

UID PID PPID C STIME TTY TIME CMD

root 21180 21161 0 14:19 ? 00:00:00 nginx: master process nginx -g daemon off;

systemd+ 21230 21180 0 14:19 ? 00:00:00 nginx: worker process

root@linux-PC:/#

Show a container's low-level metadata (docker inspect also works on images)

root@linux-PC:/# docker inspect d25ab60789f3

[

{

"Id": "d25ab60789f3b317b480b1e2aa8d4df3809f1a4d62cb72ca640fb450db9b4fc8",

"Created": "2020-10-30T06:19:14.289562938Z",

"Path": "/docker-entrypoint.sh",

"Args": [

"nginx",

"-g",

"daemon off;"

],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 21180,

"ExitCode": 0,

"Error": "",

"StartedAt": "2020-10-30T06:19:15.347773463Z",

"FinishedAt": "0001-01-01T00:00:00Z"

},

"Image": "sha256:f35646e83998b844c3f067e5a2cff84cdf0967627031aeda3042d78996b68d35",

"ResolvConfPath": "/var/lib/docker/containers/d25ab60789f3b317b480b1e2aa8d4df3809f1a4d62cb72ca640fb450db9b4fc8/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/d25ab60789f3b317b480b1e2aa8d4df3809f1a4d62cb72ca640fb450db9b4fc8/hostname",

"HostsPath": "/var/lib/docker/containers/d25ab60789f3b317b480b1e2aa8d4df3809f1a4d62cb72ca640fb450db9b4fc8/hosts",

"LogPath": "/var/lib/docker/containers/d25ab60789f3b317b480b1e2aa8d4df3809f1a4d62cb72ca640fb450db9b4fc8/d25ab60789f3b317b480b1e2aa8d4df3809f1a4d62cb72ca640fb450db9b4fc8-json.log",

"Name": "/epic_brattain",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "docker-default",

"ExecIDs": null,

"HostConfig": {

"Binds": null,

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {

}

},

"NetworkMode": "default",

"PortBindings": {

},

"RestartPolicy": {

"Name": "no",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"Capabilities": null,

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "private",

"Cgroup": "",

"Links": null,

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DeviceCgroupRules": null,

"DeviceRequests": null,

"KernelMemory": 0,

"KernelMemoryTCP": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": null,

"OomKillDisable": false,

"PidsLimit": null,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0,

"MaskedPaths": [

"/proc/asound",

"/proc/acpi",

"/proc/kcore",

"/proc/keys",

"/proc/latency_stats",

"/proc/timer_list",

"/proc/timer_stats",

"/proc/sched_debug",

"/proc/scsi",

"/sys/firmware"

],

"ReadonlyPaths": [

"/proc/bus",

"/proc/fs",

"/proc/irq",

"/proc/sys",

"/proc/sysrq-trigger"

]

},

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/dc4b5ec358852f228842b80dc3c7c063002b357467a5e7d6fba35fc9e966cbf9-init/diff:/var/lib/docker/overlay2/8a37c49d726166ecdaa4dee9c7dd1113b24bf36a9ab24712c33f04a592889f1e/diff:/var/lib/docker/overlay2/67858cfdfa4c61816e34e1571e3e558c85d39f369ffda878ff2572334144f7b7/diff:/var/lib/docker/overlay2/357ac69e1b83ddd35d043a68d27f693b31c668e5e2874d413e3a5db2baeaf941/diff:/var/lib/docker/overlay2/8c239eec2c38fd23c5fd9d7312737a4bc691ea0545ba421c1798e48790561c87/diff:/var/lib/docker/overlay2/eb7dafa6c18319b4d47e272fe1840c2ecfdda50ed3015f52764c67d69eea49c8/diff",

"MergedDir": "/var/lib/docker/overlay2/dc4b5ec358852f228842b80dc3c7c063002b357467a5e7d6fba35fc9e966cbf9/merged",

"UpperDir": "/var/lib/docker/overlay2/dc4b5ec358852f228842b80dc3c7c063002b357467a5e7d6fba35fc9e966cbf9/diff",

"WorkDir": "/var/lib/docker/overlay2/dc4b5ec358852f228842b80dc3c7c063002b357467a5e7d6fba35fc9e966cbf9/work"

},

"Name": "overlay2"

},

"Mounts": [],

"Config": {

"Hostname": "d25ab60789f3",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"80/tcp": {

}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"NGINX_VERSION=1.19.3",

"NJS_VERSION=0.4.4",

"PKG_RELEASE=1~buster"

],

"Cmd": [

"nginx",

"-g",

"daemon off;"

],

"Image": "nginx",

"Volumes": null,

"WorkingDir": "",

"Entrypoint": [

"/docker-entrypoint.sh"

],

"OnBuild": null,

"Labels": {

"maintainer": "NGINX Docker Maintainers "

},

"StopSignal": "SIGTERM"

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "f1211e664c18aa30367da87306e9b7031245a49c31a08b34ceb343d6c16f717b",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {

"80/tcp": null

},

"SandboxKey": "/var/run/docker/netns/f1211e664c18",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "3c6f9b1d0deff38c14a32c4fe496ec8177a5d7e3f427f22983005c01aa87d4e3",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:02",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "f3472496df35b63da4f082a4e025dc04f14a28d350d44427195edcb81fca0f9c",

"EndpointID": "3c6f9b1d0deff38c14a32c4fe496ec8177a5d7e3f427f22983005c01aa87d4e3",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02",

"DriverOpts": null

}

}

}

}

]

Enter a running container

docker exec -it <container-id> /bin/bash

root@linux-PC:/# docker exec -it d25ab60789f3 /bin/bash

root@d25ab60789f3:/#

docker attach <container-id>

root@linux-PC:/# docker attach d25ab60789f3

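(shows the output of the process that is already running...)

# docker exec starts a new process in the container (here a new shell) that you can work in

# docker attach connects to the terminal of the container's main process and does not start a new one

Copy files from a container to the host

docker cp <container-id>:<path-in-container> <destination-path-on-host>

# Copying is a manual, one-off step; volumes (-v) keep data in sync automatically

Exercises

Install Nginx with Docker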

root@linux-PC:/# docker run -it -d --name myNginx -p 80:80 f35646e83998

087ebee79f80c341eb26bde37a9188bb453bbefc65d1c2989daf4576f4516ca8

root@linux-PC:/# docker run -it -d --name myNginx2 -p 81:80 nginx

939161c514632b485d57c39642dc18268c2da4ee2154b54ae25c31735fbab552

root@linux-PC:/# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

939161c51463 nginx "/docker-entrypoint.…" 14 seconds ago Up 12 seconds 0.0.0.0:81->80/tcp myNginx2

087ebee79f80 f35646e83998 "/docker-entrypoint.…" 44 seconds ago Up 42 seconds 0.0.0.0:80->80/tcp myNginx

Install Tomcat with Docker

# Official usage: --rm deletes the container as soon as it stops (handy for a quick test)

root@linux-PC:/# docker run -it --rm tomcat

root@linux-PC:/# docker run -d --name myTomcat -p 8080:8080 tomcat

285c4301281b40250f05f3625b464b83f0dfd4ef39972a878946a24996ac1455

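Deploy Elasticsearch + Kibana with Docker

# Elasticsearch exposes several ports (9200 for the REST API, 9300 for node-to-node transport)

# Elasticsearch uses a lot of memory

# Elasticsearch data should live in a safe directory on the host, mounted into the container

# --net somenetwork: network configuration (the network must already exist)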

docker run -d --name elasticsearch --net somenetwork -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" elasticsearch:7.9.3
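Two practical problems with the command above: --net somenetwork fails unless that network already exists, and Elasticsearch's default JVM heap can be too large for a small machine. The sketch below creates the network if it is missing and caps the heap through the ES_JAVA_OPTS environment variable that the official image reads; the 512 MB heap is an assumed starting value, not a figure from these notes, and DOCKER can be set to echo for a dry run.

```shell
#!/usr/bin/env bash
# DOCKER can be overridden (e.g. DOCKER=echo) for a dry run.
DOCKER="${DOCKER:-docker}"

# run_elasticsearch is a hypothetical helper.
run_elasticsearch() {
  # Create the user-defined network only if it does not exist yet.
  if ! $DOCKER network inspect somenetwork >/dev/null 2>&1; then
    $DOCKER network create somenetwork || return 1
  fi
  # ES_JAVA_OPTS sets the JVM heap; 512m is an assumed value for a small host.
  $DOCKER run -d --name elasticsearch --net somenetwork \
    -p 9200:9200 -p 9300:9300 \
    -e "discovery.type=single-node" \
    -e ES_JAVA_OPTS="-Xms512m -Xmx512m" \
    elasticsearch:7.9.3
}
```

Once the container is up, `curl localhost:9200` should return a short JSON document describing the node.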

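Docker Images

What is an image

An image is a lightweight, standalone, executable software package that bundles a runtime environment together with the software developed on top of it. It contains everything needed to run that software, including code, runtime, environment variables and configuration files.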


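How Docker images are loaded

UnionFS (union file system)

UnionFS is a layered, lightweight and high-performance file system. It records each modification to the file system as a commit, stacking the commits layer by layer, and it can mount several directories into a single virtual file system (Unite several directories into a single virtual filesystem). The union file system is the foundation of Docker images: images inherit from one another through layers, so starting from a base image (one with no parent image) you can build all kinds of specific application images.

Key property: several file systems are loaded at the same time, but from the outside only one file system is visible. A union mount stacks the layers so that the resulting file system contains all files and directories of the layers beneath it.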
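The "topmost layer wins" rule can be imitated with ordinary directories. The bash sketch below is only a simulation: `simulate_union` (a hypothetical helper) copies each layer into a merged directory from bottom to top, so a file in a higher layer replaces the same path from a lower layer. A real union file system such as overlay2 (the driver listed under GraphDriver in the docker inspect output above, with its LowerDir, UpperDir and MergedDir) combines the layers in the kernel without copying, and it also supports deleting lower-layer files, which this sketch does not model.

```shell
#!/usr/bin/env bash
# Simulation only: copies layers into one directory, bottom layer first,
# so a file in a higher layer replaces the same path from a lower layer.
# Usage: simulate_union <merged-dir> <bottom-layer> [higher-layers...]
simulate_union() {
  local merged="$1" layer
  shift
  mkdir -p "$merged" || return 1
  for layer in "$@"; do
    # "dir/." copies the directory's contents, including hidden files.
    cp -R "$layer"/. "$merged"/ || return 1
  done
}
```

With a base layer holding config=v1 and an application layer holding config=v2, the merged view sees v2, while files that exist only in the base layer remain visible.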

Image loading process


Understanding layers

Layered images


Understanding the layers


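Characteristics

Docker image layers are read-only. When a container starts, a new writable layer is added on top of the image.

This writable layer is what we usually call the container layer; everything below it is an image layer.

Committing an image

docker commit # save a container as a new image

docker commit -m="description" -a="author" <container-id> <target-image-name>[:TAG]

Container data volumes

What are container data volumes

Volumes persist and synchronize container data with the host, and they also let containers share data with each other.

Using data volumes

Method 1: mount with the -v option on the command line

docker run -it -v <host-directory>:<container-directory> <image>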

Hands-on: install MySQL

root@linux-PC:/# docker run -it -d --name mysql -p 3306:3306 -v /data/yshen/mount/mysql/conf:/etc/mysql/conf.d -v /data/yshen/mount/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root mysql:5.7.32

00a3a133d6cb2a636bb43b62b8aa8f8d6eded2e7dedc5e580b69df5fc70125db

Named and anonymous volumes

# Anonymous volume

-v <path-in-container>

root@linux-PC:~# docker run -d -P --name nginx -v /etc/nginx nginx

3366ced2ac3bbbe999714c9a07f4725a925ff29423b1bab2ca1f5c706b156c38

# Named volume

root@linux-PC:~# docker run -it -d -p 80:80 -v new-nginx:/etc/nginx --name nginx02 nginx

aa6185847092495bfa835ef77a1b665d4efd2a64aecd837ed1baf565c1b91713

root@linux-PC:~# docker volume inspect new-nginx

[

{

"CreatedAt": "2020-11-02T16:00:13+08:00",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/new-nginx/_data",

"Name": "new-nginx",

"Options": null,

"Scope": "local"

}

]

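-v <path-in-container> # anonymous volume

-v <volume-name>:<path-in-container> # named volume

-v /host/path:<path-in-container> # bind mount of a specific host path

# Append :ro or :rw to change access, e.g. -v <volume-name>:<path-in-container>:ro. ro makes the path read-only inside the container (it can still be changed from the host); rw, the default, allows writes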

root@linux-PC:~# docker run -d -P --name nginx03 -v nginx_02:/etc/nginx:ro nginx

3fe2452896adef15edf8b5fc3b0aabb1a5dcebd2a6516613ca3f8e49ea064d2d

root@linux-PC:~# docker run -d -P --name nginx04 -v nginx_02:/etc/nginx:rw nginx

0c4afdee33ac36fed1e5f3053faddd153bdc61f27b1f97b960d3c339a51f5161
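How Docker reads a -v value depends on its first part: with only a container path you get an anonymous volume, a plain name gives a named volume (stored under /var/lib/docker/volumes/<name>/_data, as docker volume inspect showed above), and an absolute host path gives a bind mount. The hypothetical helper below applies that rule to the values used in this section; it is a simplified reading of the syntax that treats an optional third field as the access mode without validating it, and it ignores Windows paths.

```shell
#!/usr/bin/env bash
# Sketch: classify a `docker run -v` value (hypothetical helper).
# Prints "<kind> -> <container path> (<mode>)".
classify_volume_spec() {
  local -a parts
  local src="" dst="" mode="rw" kind
  IFS=':' read -r -a parts <<< "$1"
  case "${#parts[@]}" in
    1) dst="${parts[0]}" ;;
    2) src="${parts[0]}"; dst="${parts[1]}" ;;
    3) src="${parts[0]}"; dst="${parts[1]}"; mode="${parts[2]}" ;;
    *) echo "unsupported -v value: $1" >&2; return 1 ;;
  esac
  if [[ -z "$src" ]]; then
    kind="anonymous"      # only a container path: Docker generates a volume name
  elif [[ "$src" == /* ]]; then
    kind="bind"           # absolute host path: bind mount
  else
    kind="named:$src"     # anything else is treated as a volume name
  fi
  printf '%s -> %s (%s)\n' "$kind" "$dst" "$mode"
}
```

`docker volume ls` lists both the named volumes and the randomly named anonymous ones, which is one practical reason to prefer named volumes.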

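A first look at Dockerfile

A Dockerfile is the file used to build a Docker image: running this script produces an image. Images are made of layers, and the script is a sequence of instructions, each of which adds a layer (instructions such as VOLUME or CMD only change metadata, so their layers are empty).

Method 2: declare volumes in a Dockerfile

# Create a Dockerfile; by convention instructions are written in upper case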

FROM nginx

VOLUME ["volume01","volume02"]

CMD echo "----end----01"

CMD /bin/bash

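# Each instruction here becomes one layer of the image. With two CMD lines, only the last one (CMD /bin/bash) takes effect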

root@linux-PC:~# docker build -f /data/yshen/dockerfile/dockerfile01 -t build/nginx:1.0 .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM nginx

---> f35646e83998

Step 2/4 : VOLUME ["volume01","volume02"]

---> Running in 328525b33161

Removing intermediate container 328525b33161

---> c66e230a35f6

Step 3/4 : CMD echo "----end----01"

---> Running in 314bfa1253a8

Removing intermediate container 314bfa1253a8

---> 85b388d781e7

Step 4/4 : CMD /bin/bash

---> Running in 263d590dbf76

Removing intermediate container 263d590dbf76

---> d099666c7f91

Successfully built d099666c7f91

Successfully tagged build/nginx:1.0

root@linux-PC:/# docker run -it -P d099666c7f91 /bin/bash

# volume01 and volume02 are the mounted volumes

root@febbf77cfb70:/# ls

bin boot dev docker-entrypoint.d docker-entrypoint.sh etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var volume01 volume02


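Data volume containers

--volumes-from # mount all volumes of another container

docker run -it --name nginx02 --volumes-from nginx01 nginx

Dockerfile

A Dockerfile is the file used to build a Docker image: a script of instructions and their arguments.

Build steps:

1. Write a Dockerfile

2. Build it into an image with docker build

3. Run the image with docker run

4. Publish the image with docker push

How a Dockerfile is built

Basics

1. Every reserved keyword (instruction) is written in upper case (a convention; Docker itself does not treat instructions as case-sensitive)

2. Instructions run from top to bottom

3. # starts a comment

4. Each instruction creates and commits a new image layer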


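Dockerfile instructions

FROM # base image

MAINTAINER # who wrote the image: name + email (deprecated; LABEL maintainer="..." is the current form)

RUN # command to run while the image is being built

ADD # add content (also unpacks local tar archives)

WORKDIR # working directory of the image

VOLUME # directories to mount as volumes

EXPOSE # ports the image exposes

CMD # command to run when the container starts; only the last CMD takes effect, and it can be overridden

ENTRYPOINT # command to run when the container starts; arguments can be appended to it

ONBUILD # trigger instruction: runs when another Dockerfile builds FROM this image

COPY # like ADD: copy files into the image

ENV # set environment variables (during the build and in containers started from the image)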


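Hands-on test

Build a custom centos image

Difference between CMD and ENTRYPOINT

CMD # command to run when the container starts; only the last one takes effect, and it can be replaced

ENTRYPOINT # command to run when the container starts; arguments can be appended to it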
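The difference shows up in how Docker assembles the final command: arguments given after the image name in docker run replace CMD entirely, while ENTRYPOINT stays in place and gets CMD (or those arguments) appended. The bash sketch below models only the exec form (JSON-array syntax) and joins words with spaces for readability; `resolve_start_command` is a hypothetical helper, and the shell form, where CMD is wrapped in /bin/sh -c and a shell-form ENTRYPOINT ignores CMD and run arguments, is not modeled.

```shell
#!/usr/bin/env bash
# Sketch: how the start command is assembled from ENTRYPOINT, CMD and the
# arguments after the image name in `docker run` (exec form only).
# Usage: resolve_start_command "<entrypoint>" "<cmd>" [run-args...]
resolve_start_command() {
  local entrypoint="$1" cmd="$2"
  shift 2
  local args="$cmd"
  # Arguments after the image name replace CMD completely.
  if [[ $# -gt 0 ]]; then
    args="$*"
  fi
  # ENTRYPOINT stays in place; the (possibly replaced) CMD is appended to it.
  if [[ -n "$entrypoint" ]]; then
    printf '%s\n' "${entrypoint}${args:+ $args}"
  else
    printf '%s\n' "$args"
  fi
}
```

So with `CMD ["ls","-a"]`, `docker run <image> -l` tries to execute -l and fails, whereas with `ENTRYPOINT ["ls","-a"]` the same command runs ls -a -l. The nginx container inspected earlier is an instance of the second pattern: its Path is /docker-entrypoint.sh and its Args are nginx -g "daemon off;".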

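Tomcat image

Publishing images

# Log in (passing the password with -p leaves it in the shell history; --password-stdin avoids that)

docker login -p yshen123 -u yshen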

root@linux-PC:/data/yshen/package# docker login --help

Usage: docker login [OPTIONS] [SERVER]

Log in to a Docker registry.

If no server is specified, the default is defined by the daemon.

Options:

-p, --password string Password

--password-stdin Take the password from stdin

-u, --username string Username
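Passing the password with -p, as in the first login command above, leaves it in the shell history and the process list; docker login itself warns about this and offers --password-stdin. A minimal sketch, assuming the password is kept in an environment variable named REGISTRY_PASSWORD (a hypothetical name) and that DOCKER may be overridden for a dry run:

```shell
#!/usr/bin/env bash
# DOCKER can be overridden for a dry run or a test stub.
DOCKER="${DOCKER:-docker}"

# Log in to a registry without putting the password on the command line.
# registry_login is a hypothetical helper.
# Usage: registry_login <username> [registry]   (Docker Hub if registry is omitted)
# Expects the password in REGISTRY_PASSWORD (hypothetical variable name).
registry_login() {
  local user="$1" registry="${2:-}"
  if [[ -z "${REGISTRY_PASSWORD:-}" ]]; then
    echo "REGISTRY_PASSWORD is not set" >&2
    return 1
  fi
  # --password-stdin reads the secret from standard input.
  printf '%s' "$REGISTRY_PASSWORD" |
    $DOCKER login --username "$user" --password-stdin ${registry:+"$registry"}
}
```

The same function covers the Aliyun registry below by passing registry.cn-shanghai.aliyuncs.com as the second argument. Note that docker login still stores the credentials in ~/.docker/config.json unless a credential helper is configured.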

Aliyun (Alibaba Cloud) container registry

- Log in to the Aliyun Docker Registry

$ sudo docker login --username=jane registry.cn-shanghai.aliyuncs.com

The username is the full name of your Aliyun account, and the password is the one you set when activating the service.

You can change this password on the Access Credentials page.

- Pull an image from the registry

$ sudo docker pull registry.cn-shanghai.aliyuncs.com/jane_sun/docker_home:[image-version]

- Push an image to the registry

$ sudo docker login --username=jane registry.cn-shanghai.aliyuncs.com

$ sudo docker tag [ImageId] registry.cn-shanghai.aliyuncs.com/jane_sun/docker_home:[image-version]

$ sudo docker push registry.cn-shanghai.aliyuncs.com/jane_sun/docker_home:[image-version]

root@linux-PC:/data/yshen/package# sudo docker login --username=卓君_jane registry.cn-shanghai.aliyuncs.com

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
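After logging in, the tag-and-push steps listed above can be wrapped in one function. In this sketch `push_to_registry` is a hypothetical helper, the repository path is passed in rather than hard-coded, and DOCKER can be set to echo to print the commands instead of running them.

```shell
#!/usr/bin/env bash
# DOCKER can be overridden (e.g. DOCKER=echo) to print the commands instead of running them.
DOCKER="${DOCKER:-docker}"

# Tag a local image for a registry repository and push it.
# Usage: push_to_registry <image-id-or-name> <registry>/<namespace>/<repo> <version>
push_to_registry() {
  local src="$1" repo="$2" version="$3"
  if [[ -z "$src" || -z "$repo" || -z "$version" ]]; then
    echo "usage: push_to_registry <image> <registry>/<namespace>/<repo> <version>" >&2
    return 1
  fi
  local target="${repo}:${version}"
  $DOCKER tag "$src" "$target" || return 1
  $DOCKER push "$target"
}
```

For example, `push_to_registry d099666c7f91 registry.cn-shanghai.aliyuncs.com/jane_sun/docker_home 1.0` would push the image built earlier in these notes as version 1.0 (the version number is only an example).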

Summary


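Docker Networking

Understanding docker0

# Show the network addresses inside the container: at startup the container gets an interface eth0@if159 with an IP address assigned by Docker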

root@linux-PC:/# docker exec -it 560e6c7363c2 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

158: eth0@if159: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# The Linux host can ping the container directly

root@linux-PC:/# ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.056 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.030 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.030 ms

64 bytes from 172.17.0.3: icmp_seq=4 ttl=64 time=0.030 ms

64 bytes from 172.17.0.3: icmp_seq=5 ttl=64 time=0.031 ms

64 bytes from 172.17.0.3: icmp_seq=6 ttl=64 time=0.030 ms

64 bytes from 172.17.0.3: icmp_seq=7 ttl=64 time=0.030 ms

64 bytes from 172.17.0.3: icmp_seq=8 ttl=64 time=0.029 ms

^C

--- 172.17.0.3 ping statistics ---

8 packets transmitted, 8 received, 0% packet loss, time 157ms

rtt min/avg/max/mdev = 0.029/0.033/0.056/0.009 ms

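How it works

1. Installing Docker creates a bridge network interface named docker0 on the host. Each time a container starts, Docker assigns it an IP address and connects it to docker0 in bridge mode using a veth pair.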


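A veth pair is a pair of virtual network interfaces that always come in twos: each end is attached to a network protocol stack, and the two ends are connected to each other.

Because of this, veth pairs often serve as a bridge between virtual network devices, for example between two network namespaces, between Linux bridges and OVS, or between Docker containers, and they are used to build complex virtual network topologies such as OpenStack Neutron.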
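The mechanism can be reproduced by hand with iproute2, which is roughly what Docker does for each container: one end of the pair becomes eth0 inside the container, the other stays on the host attached to docker0. In the ip addr output above, eth0@if159 means the peer end is interface number 159 on the host. The sketch below connects two network namespaces directly with one veth pair; the names ns1, ns2, veth-a, veth-b and the 10.0.0.0/24 addresses are hypothetical, running it for real needs root, and IP can be set to echo to only print the commands.

```shell
#!/usr/bin/env bash
# IP can be overridden (e.g. IP=echo) to print the commands instead of running them.
IP="${IP:-ip}"

# Create two network namespaces and connect them with one veth pair.
veth_pair_demo() {
  $IP netns add ns1
  $IP netns add ns2
  # Both ends of a veth pair are created together.
  $IP link add veth-a type veth peer name veth-b
  # Move one end into each namespace.
  $IP link set veth-a netns ns1
  $IP link set veth-b netns ns2
  # Give each end an address and bring it up.
  $IP netns exec ns1 ip addr add 10.0.0.1/24 dev veth-a
  $IP netns exec ns2 ip addr add 10.0.0.2/24 dev veth-b
  $IP netns exec ns1 ip link set veth-a up
  $IP netns exec ns2 ip link set veth-b up
}

# Remove the namespaces again; deleting them also removes the veth ends inside.
veth_pair_cleanup() {
  $IP netns del ns1
  $IP netns del ns2
}
```

As root, after `veth_pair_demo`, `ip netns exec ns1 ping -c 1 10.0.0.2` should get a reply through the pair, and `veth_pair_cleanup` removes everything again.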


Connecting containers with --link

root@linux-PC:/# docker exec -it tomcat01 ping tomcat02

ping: tomcat02: Name or service not known

# --link lets one container reach another by name

root@linux-PC:/# docker run -it -d --name tomcat03 -p 8083:8080 --link tomcat02 tomcat

0be024a248e916e251e079418100d466e0d232552b65a418eba350cfbb02765a

root@linux-PC:/# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.046 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.041 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.041 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=4 ttl=64 time=0.041 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=5 ttl=64 time=0.046 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=6 ttl=64 time=0.036 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=7 ttl=64 time=0.041 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=8 ttl=64 time=0.041 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=9 ttl=64 time=0.040 ms

# Ping in the reverse direction: this fails, because --link only works one way

root@linux-PC:/# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

root@linux-PC:/# docker network ls

NETWORK ID NAME DRIVER SCOPE

c29ca0f9dd55 bridge bridge local

847c30861cf6 host host local

68adff11e58f none null local

root@linux-PC:/# docker network inspect c29ca0f9dd55

[

{

"Name": "bridge",

"Id": "c29ca0f9dd55d86ed7ded105fedb7acd0646dced6e08a4356b2a0dbdf878d669",

"Created": "2020-11-02T15:51:18.404907591+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"0be024a248e916e251e079418100d466e0d232552b65a418eba350cfbb02765a": {

"Name": "tomcat03",

"EndpointID": "d9b9e6be97d7174cc66e7e8b267891255094df65789152dac9e5e1c2a2e1d0ca",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

},

"573d73861ded88c4f2ad2107b3e0db63f04287f0e37e493c4150d961ddfec0db": {

"Name": "tomcat02",

"EndpointID": "6c7618837e63b3bc367f3b284abc720fc64703b4332ca20389d6b1384a6c6704",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"636375c85ade1c00112a769ec3d127e745c98370ba9ba5ecd640f44875430975": {

"Name": "tomcat01",

"EndpointID": "1f461a90341d34147557f3b97ba5e3eaba4f0f02ea55e7b676223d6c095ca442",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {

}

}

]

# tomcat03's /etc/hosts now contains a mapping for tomcat02

root@linux-PC:/# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 573d73861ded

172.17.0.4 0be024a248e9

# tomcat02's /etc/hosts has no mapping for tomcat03

root@linux-PC:/# docker exec -it tomcat02 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 573d73861ded

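In essence, --link simply adds a mapping to /etc/hosts. It is a legacy feature; user-defined networks, covered next, are the recommended way to let containers find each other by name.

User-defined networks

List all Docker networks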

root@linux-PC:/# docker network ls

NETWORK ID NAME DRIVER SCOPE

c29ca0f9dd55 bridge bridge local

847c30861cf6 host host local

68adff11e58f none null local

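Network modes

bridge: bridged networking (the Docker default)

none: no networking configured

host: share the host's network stack

container: share another container's network stack (rarely used, quite limited)

Example

# Create a network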

root@linux-PC:/# docker network create -d bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

34e044e1a47a70f09f2c5176eaf30bf8ba935d0273da62874de8ddbfb82ea188

root@linux-PC:/# docker network ls

NETWORK ID NAME DRIVER SCOPE

c29ca0f9dd55 bridge bridge local

847c30861cf6 host host local

0442029fd67c mynet bridge local

68adff11e58f none null local

root@linux-PC:/# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "0442029fd67c7be7a676af664bd1f3b660f2c2eff130a2a36fcdbdc07883a053",

"Created": "2020-11-03T14:14:16.260301592+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {

},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

},

"Options": {

},

"Labels": {

}

}

]

# Run tomcat-net-01

root@linux-PC:/# docker run -it -d --name tomcat-net-01 -P --network mynet tomcat

ef36e184f83b86145ede4c436c76c516b9af8965859f660fa776c3bb6f8f5d95

# Run tomcat-net-02

root@linux-PC:/# docker run -it -d --name tomcat-net-02 -P --network mynet tomcat

d2ba958d6ea842b91b6c7864785047c0e305c774e00547816606f135f412d3b9

# tomcat-net-02 ping tomcat-net-01

root@linux-PC:/# docker exec -it tomcat-net-02 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.051 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.044 ms

# tomcat-net-01 ping tomcat-net-02

root@linux-PC:/# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.058 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.043 ms

root@linux-PC:/# docker network inspect mynet

[
    {
        "Name": "mynet",
        "Id": "0442029fd67c7be7a676af664bd1f3b660f2c2eff130a2a36fcdbdc07883a053",
        "Created": "2020-11-03T14:14:16.260301592+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "d2ba958d6ea842b91b6c7864785047c0e305c774e00547816606f135f412d3b9": {
                "Name": "tomcat-net-02",
                "EndpointID": "d48fd319fdb5d6ec2dc852ddde7697afdc01eefdc705e8470246396b0d0b96b8",
                "MacAddress": "02:42:c0:a8:00:03",
                "IPv4Address": "192.168.0.3/16",
                "IPv6Address": ""
            },
            "ef36e184f83b86145ede4c436c76c516b9af8965859f660fa776c3bb6f8f5d95": {
                "Name": "tomcat-net-01",
                "EndpointID": "a4a40920cc25e92730b933439e295dac5deef8e69a773dc146d22439932ff25f",
                "MacAddress": "02:42:c0:a8:00:02",
                "IPv4Address": "192.168.0.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {}
    }
]
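Rather than reading IPs off the JSON by eye, the Containers map can be reduced to a name-to-IPv4 table. The sketch below assumes the JSON text has already been captured (for example with `docker network inspect mynet > mynet.json`). The sample string is trimmed from the output above to the fields the hypothetical `container_ips` function reads, with container IDs shortened to 12 characters.

```python
import json

# Trimmed copy of the `docker network inspect mynet` output shown above.
SAMPLE = """
[
  {
    "Name": "mynet",
    "Containers": {
      "d2ba958d6ea8": {"Name": "tomcat-net-02", "IPv4Address": "192.168.0.3/16"},
      "ef36e184f83b": {"Name": "tomcat-net-01", "IPv4Address": "192.168.0.2/16"}
    }
  }
]
"""


def container_ips(inspect_json):
    """Map container name -> IPv4 address, with the /prefix stripped."""
    networks = json.loads(inspect_json)  # inspect prints a JSON array
    result = {}
    for network in networks:
        for endpoint in network.get("Containers", {}).values():
            ip = endpoint["IPv4Address"].split("/")[0]
            result[endpoint["Name"]] = ip
    return result


print(container_ips(SAMPLE))
```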

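Connecting containers to networks

How it works

In essence, this connects a container to a network: docker network connect gives an existing container an additional endpoint, with its own IP address, on the target network, while the container stays attached to the networks it was already on.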

root@linux-PC:/# docker network connect --help

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

Connect a container to a network

Options:
      --alias strings           Add network-scoped alias for the container
      --driver-opt strings      driver options for the network
      --ip string               IPv4 address (e.g., 172.30.100.104)
      --ip6 string              IPv6 address (e.g., 2001:db8::33)
      --link list               Add link to another container
      --link-local-ip strings   Add a link-local address for the container

Example

# Run tomcat01 on the default docker0 (bridge) network

root@linux-PC:/# docker run -it -d -P --name tomcat01 tomcat

fbba42261764549fc1cee6d89cf6638032171e8c8d9c37aa5667fdcd7b0a4eef

# tomcat01 ping tomcat-net-01

root@linux-PC:/# docker exec -it tomcat01 ping tomcat-net-01

ping: tomcat-net-01: Name or service not known

# tomcat01 ping tomcat-net-02

root@linux-PC:/# docker exec -it tomcat01 ping tomcat-net-02

ping: tomcat-net-02: Name or service not known

# Connect tomcat01 to mynet

root@linux-PC:/# docker network connect mynet tomcat01

# Inspect mynet again: tomcat01 now appears under Containers

root@linux-PC:/# docker network inspect mynet

[
    {
        "Name": "mynet",
        "Id": "0442029fd67c7be7a676af664bd1f3b660f2c2eff130a2a36fcdbdc07883a053",
        "Created": "2020-11-03T14:14:16.260301592+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "d2ba958d6ea842b91b6c7864785047c0e305c774e00547816606f135f412d3b9": {
                "Name": "tomcat-net-02",
                "EndpointID": "d48fd319fdb5d6ec2dc852ddde7697afdc01eefdc705e8470246396b0d0b96b8",
                "MacAddress": "02:42:c0:a8:00:03",
                "IPv4Address": "192.168.0.3/16",
                "IPv6Address": ""
            },
            "ef36e184f83b86145ede4c436c76c516b9af8965859f660fa776c3bb6f8f5d95": {
                "Name": "tomcat-net-01",
                "EndpointID": "a4a40920cc25e92730b933439e295dac5deef8e69a773dc146d22439932ff25f",
                "MacAddress": "02:42:c0:a8:00:02",
                "IPv4Address": "192.168.0.2/16",
                "IPv6Address": ""
            },
            "fbba42261764549fc1cee6d89cf6638032171e8c8d9c37aa5667fdcd7b0a4eef": {
                "Name": "tomcat01",
                "EndpointID": "1608e3fd2695964dbcee05ddc8abcc87c5b99aa4fd7816db6ff59f626dbccdce",
                "MacAddress": "02:42:c0:a8:00:04",
                "IPv4Address": "192.168.0.4/16",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {}
    }
]

# tomcat01 pings tomcat-net-01 again

root@linux-PC:/# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.068 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.044 ms

# tomcat01 pings tomcat-net-02 again

root@linux-PC:/# docker exec -it tomcat01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.079 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.044 ms
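The experiment can be summarized with a toy model: a container name resolves from another container only when both are attached to the same user-defined network, because Docker's embedded DNS does not resolve container names on the default bridge network. The class below is a hypothetical simulation of that rule, not Docker code; `NetworkModel` and its methods are invented for illustration.

```python
class NetworkModel:
    """Hypothetical model of which container names a container can resolve."""

    DEFAULT_BRIDGE = "bridge"  # docker0: no automatic name resolution

    def __init__(self):
        self.attachments = {}  # container name -> set of network names

    def run(self, container, network=DEFAULT_BRIDGE):
        # Like `docker run --network NETWORK`: one initial network.
        self.attachments[container] = {network}

    def connect(self, network, container):
        # Like `docker network connect NETWORK CONTAINER`: add a network
        # while keeping the ones the container already has.
        self.attachments[container].add(network)

    def can_resolve(self, source, target):
        shared = self.attachments[source] & self.attachments[target]
        # Only user-defined networks provide name resolution in this model.
        return bool(shared - {self.DEFAULT_BRIDGE})


model = NetworkModel()
model.run("tomcat-net-01", "mynet")
model.run("tomcat-net-02", "mynet")
model.run("tomcat01")  # default bridge, as in the example above
print(model.can_resolve("tomcat-net-02", "tomcat-net-01"))  # True
print(model.can_resolve("tomcat01", "tomcat-net-01"))       # False
model.connect("mynet", "tomcat01")
print(model.can_resolve("tomcat01", "tomcat-net-01"))       # True
print(sorted(model.attachments["tomcat01"]))                # ['bridge', 'mynet']
```

The last line mirrors the inspect output: after the connect, tomcat01 is on both networks rather than being moved from one to the other.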

Hands-on: deploying a Redis cluster

Packaging a Spring Boot microservice as a Docker image

Docker Compose

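Introduction

Docker Compose makes managing containers easy and efficient: it is used to define and run multiple containers together.

Official description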

Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration. To learn more about all the features of Compose, see the list of features.

Compose works in all environments: production, staging, development, testing, as well as CI workflows. You can learn more about each case in Common Use Cases.

Using Compose is basically a three-step process:

- Define your app’s environment with a Dockerfile so it can be reproduced anywhere.
- Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment.
- Run docker-compose up and Compose starts and runs your entire app.

A docker-compose.yml looks like this:

version: "3.8"
services:
  web:
    build: .
    ports:
      - "5000:5000"
    volumes:
      - .:/code
      - logvolume01:/var/log
    links:
      - redis
  redis:
    image: redis
volumes:
  logvolume01: {}

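Key concepts

**Service:** the containers of one application; in practice a service can consist of several container instances running the same image.

**Project:** a complete business unit made up of a group of related application containers, defined in the docker-compose.yml file.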
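The Compose transcripts later in this section show how the project and service names turn into resource names with the 1.x docker-compose used here: the project name defaults to the directory name (composetest, wordpress), containers are named project_service_index, the default network is project_default, and named volumes get the project prefix. The helpers below are hypothetical and simply reproduce that observed pattern; note that Compose V2 (docker compose) joins container names with "-" instead of "_".

```python
def container_name(project, service, index=1):
    # docker-compose 1.x pattern: <project>_<service>_<index>
    return f"{project}_{service}_{index}"


def default_network(project):
    # Network Compose creates for a project when none is configured.
    return f"{project}_default"


def volume_name(project, volume):
    # Named volumes from the top-level `volumes:` key get the project prefix.
    return f"{project}_{volume}"


print(container_name("composetest", "web"))   # composetest_web_1
print(default_network("wordpress"))           # wordpress_default
print(volume_name("wordpress", "db_data"))    # wordpress_db_data
```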

Installation

1. Download

sudo curl -L "https://get.daocloud.io/docker/compose/releases/download/1.27.4/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

2. Make it executable

sudo chmod +x /usr/local/bin/docker-compose

3. Verify the installation

root@linux-PC:/# docker-compose --version

docker-compose version 1.27.4, build 40524192
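The download command relies on $(uname -s) and $(uname -m) to pick the right binary for the machine. As a rough illustration of what that URL expands to, the sketch below builds it with Python's `platform.system()` and `platform.machine()`, which report the same values as uname -s and uname -m on Linux. The function name `compose_download_url` is hypothetical.

```python
import platform

VERSION = "1.27.4"
BASE = "https://get.daocloud.io/docker/compose/releases/download"


def compose_download_url(system=None, machine=None, version=VERSION):
    """Build the URL the curl command above downloads.

    `system` and `machine` default to the local values (uname -s / uname -m).
    """
    system = system or platform.system()
    machine = machine or platform.machine()
    return f"{BASE}/{version}/docker-compose-{system}-{machine}"


print(compose_download_url("Linux", "x86_64"))
# https://get.daocloud.io/docker/compose/releases/download/1.27.4/docker-compose-Linux-x86_64
```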

Getting started

Step 1: Setup

Define the application dependencies.

- Create a directory for the project:

    $ mkdir composetest
    $ cd composetest

- Create a file called app.py in your project directory and paste this in:

    import time

    import redis
    from flask import Flask

    app = Flask(__name__)
    cache = redis.Redis(host='redis', port=6379)

    def get_hit_count():
        retries = 5
        while True:
            try:
                return cache.incr('hits')
            except redis.exceptions.ConnectionError as exc:
                if retries == 0:
                    raise exc
                retries -= 1
                time.sleep(0.5)

    @app.route('/')
    def hello():
        count = get_hit_count()
        return 'Hello World! I have been seen {} times.\n'.format(count)

  In this example, redis is the hostname of the redis container on the application’s network. We use the default port for Redis, 6379.

  Handling transient errors

  Note the way the get_hit_count function is written. This basic retry loop lets us attempt our request multiple times if the redis service is not available. This is useful at startup while the application comes online, but also makes our application more resilient if the Redis service needs to be restarted anytime during the app’s lifetime. In a cluster, this also helps handle momentary connection drops between nodes.

- Create another file called requirements.txt in your project directory and paste this in:

    flask
    redis
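The retry loop in get_hit_count can be exercised without a running Redis. The sketch below keeps the same loop shape but takes the operation and the sleep function as parameters so it can be tested; it uses Python's built-in ConnectionError as a stand-in for redis.exceptions.ConnectionError, and `call_with_retries` and `FlakyCounter` are hypothetical names.

```python
import time


def call_with_retries(operation, retries=5, delay=0.5, sleep=time.sleep):
    """Same loop shape as get_hit_count in app.py.

    Makes at most retries + 1 attempts, sleeping `delay` seconds between
    them, and re-raises the last ConnectionError once retries run out.
    """
    while True:
        try:
            return operation()
        except ConnectionError as exc:
            if retries == 0:
                raise exc
            retries -= 1
            sleep(delay)


class FlakyCounter:
    """Hypothetical stand-in for Redis: fails `failures` times, then counts."""

    def __init__(self, failures):
        self.failures = failures
        self.calls = 0
        self.hits = 0

    def incr(self):
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError("redis not ready yet")
        self.hits += 1
        return self.hits


counter = FlakyCounter(failures=3)
print(call_with_retries(counter.incr, sleep=lambda seconds: None))  # 1
print(counter.calls)  # 4: three failed attempts, then one success
```

With retries = 5 and a 0.5 s delay, the app waits at most about 2.5 seconds in total for Redis before the error reaches the browser.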

Step 2: Create a Dockerfile

In this step, you write a Dockerfile that builds a Docker image. The image contains all the dependencies the Python application requires, including Python itself.

In your project directory, create a file named Dockerfile and paste the following:

FROM python:3.7-alpine

WORKDIR /code

ENV FLASK_APP=app.py

ENV FLASK_RUN_HOST=0.0.0.0

RUN apk add --no-cache gcc musl-dev linux-headers

COPY requirements.txt requirements.txt

RUN pip install -r requirements.txt

EXPOSE 5000

COPY . .

CMD ["flask", "run"]

This tells Docker to:

- Build an image starting with the Python 3.7 image.
- Set the working directory to /code.
- Set environment variables used by the flask command.
- Install gcc and other dependencies.
- Copy requirements.txt and install the Python dependencies.
- Add metadata to the image to describe that the container is listening on port 5000.
- Copy the current directory . in the project to the workdir . in the image.
- Set the default command for the container to flask run.

For more information on how to write Dockerfiles, see the Docker user guide and the Dockerfile reference.

Step 3: Define services in a Compose file

Create a file called docker-compose.yml in your project directory and paste the following:

version: "3.8"
services:
  web:
    build: .
    ports:
      - "5000:5000"
  redis:
    image: "redis:alpine"

This Compose file defines two services: web and redis.

Web service

The web service uses an image that’s built from the Dockerfile in the current directory. It then binds the container and the host machine to the exposed port, 5000. This example service uses the default port for the Flask web server, 5000.
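The "5000:5000" entry uses the short port syntax HOST:CONTAINER; the WordPress example later maps "8000:80", i.e. host port 8000 to container port 80. The function below is a hypothetical, simplified parser for only the [IP:]HOST:CONTAINER[/PROTOCOL] forms. The real syntax also allows port ranges, a container-only port, and IPv6 host addresses, none of which it handles.

```python
def parse_port_mapping(spec):
    """Parse "[IP:]HOST:CONTAINER[/PROTOCOL]" into a dict (simplified)."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 2:
        host_ip = "0.0.0.0"  # no IP given: published on all host interfaces
        host, container = parts
    elif len(parts) == 3:
        host_ip, host, container = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return {
        "host_ip": host_ip,
        "host_port": int(host),
        "container_port": int(container),
        "protocol": protocol or "tcp",  # TCP unless /udp etc. is given
    }


print(parse_port_mapping("5000:5000"))
print(parse_port_mapping("127.0.0.1:8000:80/udp"))
```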

Redis service

The redis service uses a public Redis image pulled from the Docker Hub registry.

Step 4: Build and run your app with Compose

- From your project directory, start up your application by running docker-compose up.

    $ docker-compose up
    Creating network "composetest_default" with the default driver
    Creating composetest_web_1 ...
    Creating composetest_redis_1 ...
    Creating composetest_web_1
    Creating composetest_redis_1 ... done
    Attaching to composetest_web_1, composetest_redis_1
    web_1 | * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)
    redis_1 | 1:C 17 Aug 22:11:10.480 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
    redis_1 | 1:C 17 Aug 22:11:10.480 # Redis version=4.0.1, bits=64, commit=00000000, modified=0, pid=1, just started
    redis_1 | 1:C 17 Aug 22:11:10.480 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf
    web_1 | * Restarting with stat
    redis_1 | 1:M 17 Aug 22:11:10.483 * Running mode=standalone, port=6379.
    redis_1 | 1:M 17 Aug 22:11:10.483 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
    web_1 | * Debugger is active!
    redis_1 | 1:M 17 Aug 22:11:10.483 # Server initialized
    redis_1 | 1:M 17 Aug 22:11:10.483 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.
    web_1 | * Debugger PIN: 330-787-903
    redis_1 | 1:M 17 Aug 22:11:10.483 * Ready to accept connections

  Compose pulls a Redis image, builds an image for your code, and starts the services you defined. In this case, the code is statically copied into the image at build time.

- Enter http://localhost:5000/ in a browser to see the application running.

  If you’re using Docker natively on Linux, Docker Desktop for Mac, or Docker Desktop for Windows, then the web app should now be listening on port 5000 on your Docker daemon host. Point your web browser to http://localhost:5000 to find the Hello World message. If this doesn’t resolve, you can also try http://127.0.0.1:5000.

  If you’re using Docker Machine on a Mac or Windows, use docker-machine ip MACHINE_VM to get the IP address of your Docker host. Then, open http://MACHINE_VM_IP:5000 in a browser.

  You should see a message in your browser saying:

  Hello World! I have been seen 1 times.

Compose file reference

https://docs.docker.com/compose/compose-file/

Open-source project: deploying a WordPress blog

Define the project

- Create an empty project directory.

  You can name the directory something easy for you to remember. This directory is the context for your application image. The directory should only contain resources to build that image.

  This project directory contains a docker-compose.yml file which is complete in itself for a good starter WordPress project.

  Tip: You can use either a .yml or .yaml extension for this file. They both work.

- Change into your project directory.

  For example, if you named your directory my_wordpress:

    $ cd my_wordpress/

- Create a docker-compose.yml file that starts your WordPress blog and a separate MySQL instance with a volume mount for data persistence:

    version: '3.3'

    services:
      db:
        image: mysql:5.7
        volumes:
          - db_data:/var/lib/mysql
        restart: always
        environment:
          MYSQL_ROOT_PASSWORD: somewordpress
          MYSQL_DATABASE: wordpress
          MYSQL_USER: wordpress
          MYSQL_PASSWORD: wordpress

      wordpress:
        depends_on:
          - db
        image: wordpress:latest
        ports:
          - "8000:80"
        restart: always
        environment:
          WORDPRESS_DB_HOST: db:3306
          WORDPRESS_DB_USER: wordpress
          WORDPRESS_DB_PASSWORD: wordpress
          WORDPRESS_DB_NAME: wordpress
    volumes:
      db_data: {}
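depends_on tells Compose to start db before wordpress. The idea can be sketched as a topological sort with Python's standard `graphlib` module (Python 3.9+); this illustrates the ordering only and is not Compose's own implementation. Keep in mind that start order is not readiness: db being started first does not mean MySQL is already accepting connections when wordpress comes up.

```python
from graphlib import TopologicalSorter

# Service -> services it depends on, taken from the docker-compose.yml above.
DEPENDS_ON = {
    "db": set(),
    "wordpress": {"db"},
}


def start_order(graph):
    """Return one valid start order: dependencies before dependents."""
    return list(TopologicalSorter(graph).static_order())


print(start_order(DEPENDS_ON))  # ['db', 'wordpress']
```

A dependency cycle (for example two services that depend on each other) makes `static_order()` raise `graphlib.CycleError`, which is the kind of configuration error Compose also refuses to start.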

Build the project

Now, run docker-compose up -d from your project directory.

This runs docker-compose up in detached mode, pulls the needed Docker images, and starts the wordpress and database containers, as shown in the example below.

root@linux-PC:/data/yshen/wordpress# docker-compose up -d

Creating network "wordpress_default" with the default driver

Creating volume "wordpress_db_data" with default driver

Pulling db (mysql:5.7)...

5.7: Pulling from library/mysql

bb79b6b2107f: Pulling fs layer

bb79b6b2107f: Pull complete

49e22f6fb9f7: Pull complete

842b1255668c: Pull complete

9f48d1f43000: Pull complete

c693f0615bce: Pull complete

8a621b9dbed2: Pull complete

0807d32aef13: Pull complete

f15d42f48bd9: Pull complete

098ceecc0c8d: Pull complete

b6fead9737bc: Pull complete

351d223d3d76: Pull complete

Digest: sha256:4d2b34e99c14edb99cdd95ddad4d9aa7ea3f2c4405ff0c3509a29dc40bcb10ef

Status: Downloaded newer image for mysql:5.7

Pulling wordpress (wordpress:latest)...

latest: Pulling from library/wordpress

bb79b6b2107f: Already exists

80f7a64e4b25: Pull complete

da391f3e81f0: Pull complete

8199ae3052e1: Pull complete

284fd0f314b2: Pull complete

f38db365cd8a: Pull complete

1416a501db13: Pull complete

be0026dad8d5: Pull complete

7bf43186e63e: Pull complete

c0d672d8319a: Pull complete

645db540ba24: Pull complete

6f355b8da727: Pull complete

aa00daebd81c: Pull complete

98996914108d: Pull complete

69e3e95397b4: Pull complete

5698325d4d72: Pull complete

b604b3777675: Pull complete

57c814ef71bc: Pull complete

a336d2404e01: Pull complete

2e2e849c5abf: Pull complete

Digest: sha256:4fb444299c3e0b1edc4e95fc360a812ba938c7dbe22a69fb77a3f19eefc5a3d3

Status: Downloaded newer image for wordpress:latest

Creating wordpress_db_1 ... done

Creating wordpress_wordpress_1 ... done

Hands-on

Docker Swarm

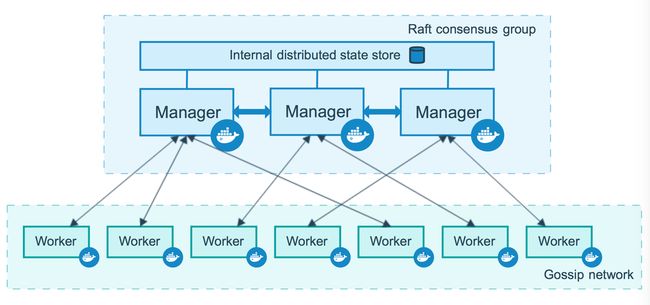

How swarm mode works

Docker Engine 1.12 introduces swarm mode that enables you to create a cluster of one or more Docker Engines called a swarm. A swarm consists of one or more nodes: physical or virtual machines running Docker Engine 1.12 or later in swarm mode.

There are two types of nodes: managers and workers.

If you haven’t already, read through the swarm mode overview and key concepts.
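Managers keep the swarm state consistent with the Raft consensus algorithm, so a majority (quorum) of managers must stay available. Docker's swarm administration guide states that a swarm with N managers tolerates the loss of at most (N-1)/2 of them; the short sketch below just evaluates that rule for a few sizes. The function names are hypothetical.

```python
def quorum(managers):
    """Smallest majority of `managers`."""
    return managers // 2 + 1


def manager_fault_tolerance(managers):
    """Max managers that can fail while a quorum remains: (N - 1) // 2."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return (managers - 1) // 2


for n in (1, 3, 5, 7):
    print(n, quorum(n), manager_fault_tolerance(n))
```

The numbers show why odd manager counts are the usual choice: going from 3 to 4 managers raises the quorum from 2 to 3 without tolerating any extra failure.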