OpenCV Handbook: I.MX6 Linux Yocto and OpenCV

Environment:

- Host: Ubuntu 16.04 LTS 64-bit

- Development board: NXP imx6qsabresd

- BSP version: fsl-yocto-L4.1.15_2.0.0-ga

- Yocto Project version: 2.1

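Yocto setup:

1. Install the host packages: open a terminal and run the following three commands in order. If the downloads are slow, switch to a package mirror in mainland China.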

$ sudo apt-get install gawk wget git-core diffstat unzip texinfo gcc-multilib \
    build-essential chrpath socat libsdl1.2-dev

$ sudo apt-get install libsdl1.2-dev xterm sed cvs subversion coreutils texi2html \
    docbook-utils python-pysqlite2 help2man make gcc g++ desktop-file-utils \
    libgl1-mesa-dev libglu1-mesa-dev mercurial autoconf automake groff curl lzop asciidoc

$ sudo apt-get install u-boot-tools

2. repo tool: run the following commands in a terminal, in order.

$ mkdir ~/bin

$ curl http://commondatastorage.googleapis.com/git-repo-downloads/repo > ~/bin/repo

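$ chmod a+x ~/bin/repo

Next, add the following environment variable as the last line of ~/.bashrc, then run source ~/.bashrc so that it takes effect.

export PATH=~/bin:$PATH

The default URL that repo fetches from could not be reached from this network. The workaround is to change REPO_URL in ~/bin/repo to a mirror in mainland China:

REPO_URL = 'https://mirrors.tuna.tsinghua.edu.cn/git/git-repo'

3. Configure Git for Yocto: create the Yocto project directory, set up git, fetch the BSP manifest and tools, and then run repo sync to download the sources.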

$ mkdir fsl-release-bsp

$ cd fsl-release-bsp

$ git config --global user.name "Your Name"

$ git config --global user.email "Your Email"

$ git config --list

$ repo init -u git://git.freescale.com/imx/fsl-arm-yocto-bsp.git -b imx-3.14.28-1.0.0_ga

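$ repo sync

Yocto build:

1. Choose the build target according to the board name and the DISTRO configuration option (both are listed in the Yocto user guide). For example:

$ MACHINE=imx6qsabresd source fsl-setup-release.sh -b build-x11 -e x11

2. To build a Yocto environment that includes the OpenCV libraries, edit fsl-release-bsp/build-x11/conf/local.conf as follows: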

MACHINE ??= 'imx6qsabresd'

DISTRO ?= 'poky'

PACKAGE_CLASSES ?= "package_rpm package_ipk"

EXTRA_IMAGE_FEATURES = "debug-tweaks package-management"

IMAGE_INSTALL_append = " opkg "

USER_CLASSES ?= "buildstats image-mklibs image-prelink"

PATCHRESOLVE = "noop"

BB_DISKMON_DIRS = "\
    STOPTASKS,${TMPDIR},1G,100K \
    STOPTASKS,${DL_DIR},1G,100K \
    STOPTASKS,${SSTATE_DIR},1G,100K \
    ABORT,${TMPDIR},100M,1K \
    ABORT,${DL_DIR},100M,1K \
    ABORT,${SSTATE_DIR},100M,1K"

PACKAGECONFIG_pn-qemu-native = "sdl"

ASSUME_PROVIDED += "libsdl-native"

CONF_VERSION = "1"

IMAGE_FSTYPES = "tar.bz2 ext3 sdcard"

BB_NUMBER_THREADS = '4'

PARALLEL_MAKE = '-j 4'

DL_DIR ?= "${BSPDIR}/downloads/"

ACCEPT_FSL_EULA = "1"

CORE_IMAGE_EXTRA_INSTALL += "libopencv-core-dev libopencv-highgui-dev libopencv-imgproc-dev libopencv-objdetect-dev libopencv-ml-dev"

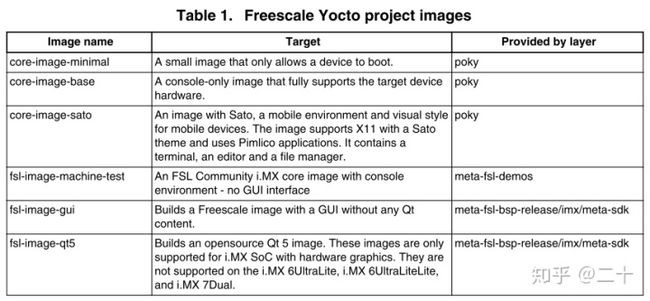

LICENSE_FLAGS_WHITELIST = "commercial"

3. Choose the image type to build. Note that the build needs a lot of disk space (at least 80 GB) and a lot of time.

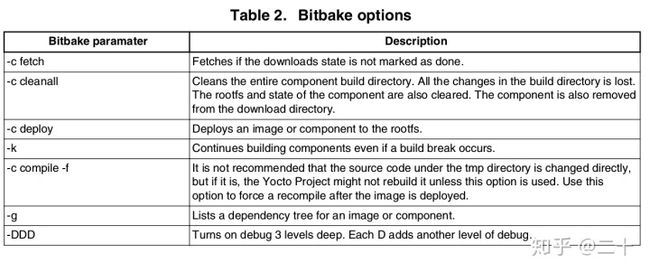

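$ bitbake <image>

Here <image> stands for the image type chosen above.

The build reported errors because two files could not be downloaded. After downloading them manually, you also need to create matching .done files to tell the fetcher that the downloads succeeded.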

Error 1: ERROR: cpuburn-neon-20140626-r0 do_fetch: Function failed: Fetcher failure for URL: 'http://hardwarebug.org/files/burn.S;name=mru'. Unable to fetch URL from any source.

Fix: place burn.S and cpuburn-a8.S in ../fsl-release-bsp/downloads/cpuburn-neon-20140626/ and create the files burn.S.done and cpuburn-a8.S.done.

Error 2: ERROR: imx-codec-4.1.6-r0 do_fetch: Fetcher failure for URL: 'http://www.freescale.com/lgfiles/NMG/MAD/YOCTO//imx-codec-4.1.6.bin;fsl-eula=true'. Checksum mismatch!

Fix: place imx-codec-4.1.6.bin in ../fsl-release-bsp/downloads/ and create the file imx-codec-4.1.6.bin.done.

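In addition, a workaround for slow git clone:

1. Visit https://www.ipaddress.com, find "IP Address Tools – Quick Links" in the lower part of the page, and look up the IP addresses of github.global.ssl.fastly.net and github.com.

2. Append the results to the end of /etc/hosts, one "IP address, host name" pair per line, then reboot. The addresses below are the ones returned when this was written:

173.252.102.16 github.global.ssl.fastly.net

13.250.177.223 github.com

4. Flash the image onto the SD card (see Freescale_Yocto_Project_User's_Guide.pdf)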

$ cd ~/fsl-release-bsp/build-x11/tmp/deploy/images/imx6qsabresd/

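$ sudo dd if=<image name>.sdcard of=/dev/sd<partition> bs=1M && sync

Replace <image name> with the generated .sdcard file and <partition> with the device letter of the SD card.

5. Build the toolchain

$ cd ~/fsl-release-bsp/build-x11/

$ bitbake <image> -c populate_sdk

6. Install the toolchain

$ cd ~/fsl-release-bsp/build-x11/tmp/deploy/sdk/

$ ./poky-glibc-x86_64-core-image-sato-cortexa9hf-neon-toolchain-2.1.1.sh

7. Set up the cross-compilation environment. After this you can compile your own programs and run them on the ARM board.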

*open a new terminal

$ cd /opt/poky/2.1.1

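$ . ./environment-setup-cortexa9hf-neon-poky-linux-gnueabi

Simple monocular distance measurement with OpenCV:

Requirements: extract the largest object in the video and compute its distance from the camera. This is a beginner exercise with a simple implementation, so it assumes a scene without obstructions.

Approach: the implementation has three steps: read video frames from the camera, detect the largest object in the frame, and measure the distance between that object and the camera. In detail, each frame is converted to grayscale and binarized, the Canny operator extracts the edges, and the distance is measured for the object with the largest contour. The measurement follows the similar-triangles principle, which gives distance = (known object width × focal length) / (object width in pixels), the relation used in the code below.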

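#include <opencv2/opencv.hpp>
#include <iostream>
#include <string>
#include <vector>

using namespace cv;
using std::vector;
using std::string;

int main()
{
    // Initialization
    Mat frame;
    Mat gray;
    Mat dosmooth;
    Mat dothreshold;
    Mat docanny;
    Mat polyPic;
    vector<vector<Point>> contours; // vector of vectors; each inner vector stores the points of one contour

    // Open the camera
    VideoCapture capture;
    capture.open(0);
    if (!capture.isOpened()) {
        std::cout << "ERROR! Unable to open camera\n";
        return -1;
    }

    // NOTE: the source text is damaged from here down to the declaration of polyContours.
    // These lines are reconstructed from the description above; the output file name,
    // codec, frame rate, kernel size and thresholds are assumed values.
    capture >> frame;
    if (frame.empty()) return -1;
    VideoWriter writer("output.avi", VideoWriter::fourcc('M', 'J', 'P', 'G'), 10, frame.size());

    while (true) {
        capture >> frame;
        if (frame.empty()) break;

        // Grayscale, smooth, binarize, then extract edges and contours
        cvtColor(frame, gray, COLOR_BGR2GRAY);
        GaussianBlur(gray, dosmooth, Size(5, 5), 0);
        threshold(dosmooth, dothreshold, 0, 255, THRESH_BINARY | THRESH_OTSU);
        Canny(dothreshold, docanny, 50, 150);
        findContours(docanny, contours, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);
        if (contours.empty()) {
            // Nothing detected in this frame: show it unchanged and move on
            imshow("output", frame);
            if ((char)waitKey(100) == 27) break;
            continue;
        }

        vector<vector<Point>> polyContours(contours.size());
        vector<Rect> minRect(contours.size()); // unused; element type assumed: pixel width x height at position (x, y)

        int maxArea = 0;
        for (int index = 0; index < (int)contours.size(); index++) {
            if (contourArea(contours[index]) > contourArea(contours[maxArea]))
                maxArea = index;
            // Approximate the contour by a closed polyline with tolerance 10, to extract rectangle-like shapes
            approxPolyDP(contours[index], polyContours[index], 10, true);
        }

        // Draw the largest contour
        polyPic = Mat::zeros(frame.size(), CV_8UC3);
        drawContours(polyPic, polyContours, maxArea, Scalar(0, 0, 255), 2);

        // Compute the convex hull in clockwise order, i.e. the intersection of all convex sets containing the object
        vector<int> hull; // indices into polyContours[maxArea]
        convexHull(polyContours[maxArea], hull, true);

        // Draw each hull corner as an unfilled circle of radius 10
        for (int i = 0; i < (int)hull.size(); ++i) {
            circle(polyPic, polyContours[maxArea][hull[i]], 10, Scalar(rand() & 255, rand() & 255, rand() & 255), 3);
        }
        addWeighted(polyPic, 0.5, frame, 0.5, 0, frame); // blend the overlay with the camera frame

        // Distance measurement
        float g_dConLength = arcLength(polyContours[maxArea], true); // contour perimeter in pixels, used as the pixel width
        float knowLength = 21;     // real width of the object
        float focalLength = 4539;  // focal length
        float distance = (knowLength * focalLength) / g_dConLength; // distance to measure

        // Show the measured distance in the top-left corner of the image
        string dis_str = std::to_string(distance);
        Point p(20, 20);
        putText(frame, dis_str, p, FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 255, 0), 2);
        imshow("output", frame);

        // Save the processed frame
        writer.write(frame);

        int ch = waitKey(100);
        if ((char)ch == 27) { // Esc quits
            break;
        }
    }

    writer.release();
    capture.release();
    return 0;
}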

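Porting to I.MX6:

Once the demo is written, compile it in the cross-compilation environment on the Linux host to produce an executable. Then copy the executable to the SD card used by the development board. Finally, connect to the board with minicom and run the executable.

1. Set up the cross-compilation environment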

*open a new terminal

$ cd /opt/poky/2.1.1

$ . ./environment-setup-cortexa9hf-neon-poky-linux-gnueabi

2. Build the executable with a Makefile

# Before building, don't forget to export ROOTFS_DIR and TOOLCHAIN (only if they are uncommented here)
# Recipe lines below must begin with a tab character.

APPNAME = ceju
DESTDIR = ../bin
SRCDIR = ../src

CXX = $(CROSS_COMPILE)g++
DEL_FILE = rm -rf
CP_FILE = cp -rf

ROOTFS_DIR = $(SDKTARGETSYSROOT)
TARGET_PATH_LIB = $(ROOTFS_DIR)/usr/lib
TARGET_PATH_INCLUDE = $(ROOTFS_DIR)/usr/include

# -std=c++11 is needed for std::to_string when the compiler defaults to an older standard
CFLAGS = -std=c++11 -march=armv7-a -marm -mfpu=neon -mfloat-abi=hard -mcpu=cortex-a9 --sysroot=$(ROOTFS_DIR) \
	-DEGL_USE_X11 -DGPU_TYPE_VIV -DGL_GLEXT_PROTOTYPES -DENABLE_GPU_RENDER_20 \
	-I.

LFLAGS = -march=armv7-a -marm -mfpu=neon -mfloat-abi=hard -mcpu=cortex-a9 --sysroot=$(ROOTFS_DIR) -lm \
	-lopencv_core -lopencv_imgproc -lopencv_highgui -lopencv_ml -lopencv_videoio \
	-lusb-1.0 \
	-lEGL -lGLESv2 -lpthread

OBJECTS = $(APPNAME).o

first: all

all: $(APPNAME)

# Object files come before the libraries so that the linker resolves symbols in order
$(APPNAME): $(OBJECTS)
	$(CXX) -o $(DESTDIR)/$(APPNAME) $(OBJECTS) $(LFLAGS)

$(APPNAME).o: $(APPNAME).cpp
	$(CXX) $(CFLAGS) -c $(APPNAME).cpp

clean:
	$(DEL_FILE) $(SRCDIR)/$(OBJECTS)
	$(DEL_FILE) $(DESTDIR)/$(OBJECTS)
	$(DEL_FILE) $(DESTDIR)/*~ $(DESTDIR)/*.core
	$(DEL_FILE) $(DESTDIR)/$(APPNAME)

distclean: clean
	$(DEL_FILE) $(DESTDIR)/$(APPNAME)

install: all
#${CP_FILE} $(SRCDIR)/$(APPNAME) $(DESTDIR)/$(APPNAME)
#${CP_FILE} $(DESTDIR)/$(APPNAME) $(ROOTFS_DIR)/home/opencv

3. Use a card reader to copy the executable to the board's SD card, then run the program over the serial console with minicom.

$ sudo cp bin/ceju /your_board_path

$ sudo minicom

root@:~$ ./your_board_path/your_project_name

That completes a simple distance-measurement demo. Its accuracy is limited, and it only works against a simple background, so there is still a lot to learn.

https://www.zhihu.com/video/1219874797439614976