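This example implements a RocketMQ producer, a consumer, and a message listener.

Setting up the RocketMQ environment with docker-compose

The configuration files below are all taken from online sources; I am simply reusing them.

Create the following directories next to the docker-compose.yml file: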

mkdir -p ./data/logs

mkdir -p ./data/store

mkdir -p ./data/brokerconf

Then edit broker.conf in the ./data/brokerconf directory:

    brokerClusterName=DefaultCluster
    # Broker name. It must differ between config files: use broker-a in
    # broker-a.properties and broker-b in broker-b.properties.
    brokerName=broker-a
    # 0 means Master, > 0 means Slave
    brokerId=0
    # NameServer addresses, separated by semicolons
    # namesrvAddr=rocketmq-nameserver1:9876;rocketmq-nameserver2:9876
    namesrvAddr=192.168.6.68:9876
    # brokerIP1 must be the host machine's IP, not the Docker-internal IP
    brokerIP1=192.168.6.68
    # Default number of queues for a topic that is auto-created when a message
    # is sent to a topic that does not exist on the broker
    defaultTopicQueueNums=4
    # Whether the broker may auto-create topics; enable offline, disable in production
    autoCreateTopicEnable=true
    # Whether the broker may auto-create subscription groups; enable offline, disable in production
    autoCreateSubscriptionGroup=true
    # Port on which the broker listens for external traffic
    listenPort=10911
    # Time of day at which expired files are deleted; 04 means 4 a.m.
    deleteWhen=04
    # File retention time in hours (set to 120 here)
    fileReservedTime=120
    # Size of each commitLog file; 1 GB by default
    mapedFileSizeCommitLog=1073741824
    # Each ConsumeQueue file stores 300,000 entries by default; adjust to your workload
    mapedFileSizeConsumeQueue=300000
    # destroyMapedFileIntervalForcibly=120000
    # redeleteHangedFileInterval=120000
    # Maximum disk usage ratio (percent) checked for the physical files
    diskMaxUsedSpaceRatio=88
    # Storage root path
    # storePathRootDir=/home/ztztdata/rocketmq-all-4.1.0-incubating/store
    # commitLog storage path
    # storePathCommitLog=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/commitlog
    # Consume queue storage path
    # storePathConsumeQueue=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/consumequeue
    # Message index storage path
    # storePathIndex=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/index
    # checkpoint file storage path
    # storeCheckpoint=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/checkpoint
    # abort file storage path
    # abortFile=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/abort
    # Maximum message size, in bytes
    maxMessageSize=65536
    # flushCommitLogLeastPages=4
    # flushConsumeQueueLeastPages=2
    # flushCommitLogThoroughInterval=10000
    # flushConsumeQueueThoroughInterval=60000
    # Broker role
    # - ASYNC_MASTER: master with asynchronous replication
    # - SYNC_MASTER: master with synchronous double write
    # - SLAVE
    brokerRole=ASYNC_MASTER
    # Disk flush mode
    # - ASYNC_FLUSH: asynchronous flush
    # - SYNC_FLUSH: synchronous flush
    flushDiskType=ASYNC_FLUSH
    # Number of threads in the send-message thread pool
    sendMessageThreadPoolNums=10
    # Number of threads in the pull-message thread pool
    pullMessageThreadPoolNums=10

The content of docker-compose.yml is as follows:

    version: '3.5'
    services:
      rmqnamesrv:
        image: foxiswho/rocketmq:server
        container_name: rmqnamesrv
        ports:
          - 9876:9876
        volumes:
          - ./data/logs:/opt/logs
          - ./data/store:/opt/store
        networks:
          rmq:
            aliases:
              - rmqnamesrv
      rmqbroker:
        image: foxiswho/rocketmq:broker
        container_name: rmqbroker
        ports:
          - 10909:10909
          - 10911:10911
        volumes:
          - ./data/logs:/opt/logs
          - ./data/store:/opt/store
          - ./data/brokerconf/broker.conf:/etc/rocketmq/broker.conf
        environment:
          NAMESRV_ADDR: "rmqnamesrv:9876"
          JAVA_OPTS: " -Duser.home=/opt"
          JAVA_OPT_EXT: "-server -Xms128m -Xmx128m -Xmn128m"
        command: mqbroker -c /etc/rocketmq/broker.conf
        depends_on:
          - rmqnamesrv
        networks:
          rmq:
            aliases:
              - rmqbroker
      rmqconsole:
        image: styletang/rocketmq-console-ng
        container_name: rmqconsole
        ports:
          - 18080:8080
        environment:
          JAVA_OPTS: "-Drocketmq.namesrv.addr=rmqnamesrv:9876 -Dcom.rocketmq.sendMessageWithVIPChannel=false"
        depends_on:
          - rmqnamesrv
        networks:
          rmq:
            aliases:
              - rmqconsole
    networks:
      rmq:
        name: rmq
        driver: bridge

Startup

docker-compose up

Open http://localhost:18080/ in a browser to see the console UI.

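RocketMQ key points

Consumption styles

- Push consumption (Push Consumer)

After the broker receives data, it actively pushes it to the consumer.

- Pull consumption (Pull Consumer)

The application actively calls the Consumer's pull method to fetch messages from the broker, so the application stays in control. Once a batch of messages has been fetched, the application starts consuming it.

Scheduled messages

- A scheduled message (delay queue) is a message that is not consumed immediately after it reaches the broker; it is delivered to its real topic only after a specific delay.

- delayLevel has 18 levels, corresponding to the delays "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h".

- messageDelayLevel is a broker property and does not belong to any particular topic.

When sending a message, just set its delay level: msg.setDelayTimeLevel(level)

- level == 0: the message is not delayed

- 1 <= level <= maxLevel: the message is delayed by the corresponding time; for example, level == 1 delays it by 1s

- level > maxLevel: level is treated as maxLevel; for example, level == 20 delays it by 2h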
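The three level rules above can be sketched in plain Java, without the RocketMQ client. This is only an illustration: the class name `DelayLevelTable` and its methods are made up for this sketch, the level string is the one quoted above, and treating negative levels like level 0 is an assumption.

```java
public class DelayLevelTable {
    // Level string quoted in the text; token i-1 belongs to level i.
    static final String LEVELS = "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h";

    /** Converts a token such as "10s", "5m" or "2h" into milliseconds. */
    static long toMillis(String token) {
        long value = Long.parseLong(token.substring(0, token.length() - 1));
        char unit = token.charAt(token.length() - 1);
        switch (unit) {
            case 's': return value * 1000L;
            case 'm': return value * 60_000L;
            case 'h': return value * 3_600_000L;
            default: throw new IllegalArgumentException("unknown unit in " + token);
        }
    }

    /** Returns the delay in milliseconds for a level, following the three rules. */
    static long delayMillis(int level) {
        String[] tokens = LEVELS.split(" ");
        if (level <= 0) {
            return 0L; // level == 0: not delayed (negative levels: assumption)
        }
        int clamped = Math.min(level, tokens.length); // level > maxLevel: use maxLevel
        return toMillis(tokens[clamped - 1]);
    }

    public static void main(String[] args) {
        System.out.println(delayMillis(0));  // 0
        System.out.println(delayMillis(1));  // 1000
        System.out.println(delayMillis(3));  // 10000
        System.out.println(delayMillis(20)); // 7200000
    }
}
```

Level 3 maps to 10s, which is the level used by the delayed-message producer later in this post.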

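Message models

- Clustering consumption (Clustering)

The Consumer instances in the same Consumer Group share the messages roughly evenly.

- Broadcasting consumption (Broadcasting)

Every Consumer instance in the same Consumer Group receives all messages.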

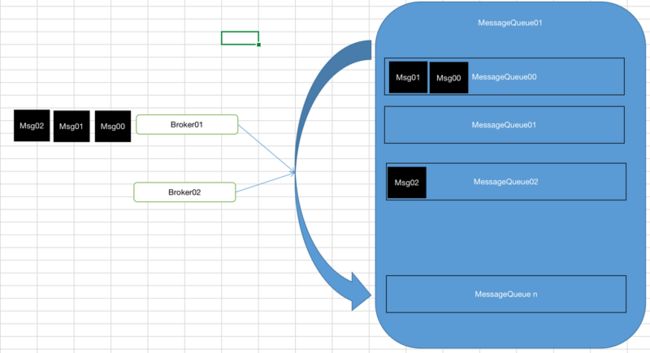
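The difference between the two models can be shown with a small simulation in plain Java. This is a simplified sketch, not RocketMQ code: the class `MessageModelSketch` is hypothetical, and clustering is modeled here as per-message round-robin, whereas the real client shares out whole queues among the instances.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class MessageModelSketch {
    /** Clustering: each message is handled by exactly one instance of the group. */
    static List<List<String>> clustering(List<String> messages, int instances) {
        List<List<String>> received = emptyInboxes(instances);
        for (int i = 0; i < messages.size(); i++) {
            received.get(i % instances).add(messages.get(i)); // simplified round-robin
        }
        return received;
    }

    /** Broadcasting: every instance of the group receives every message. */
    static List<List<String>> broadcasting(List<String> messages, int instances) {
        List<List<String>> received = emptyInboxes(instances);
        for (List<String> inbox : received) {
            inbox.addAll(messages);
        }
        return received;
    }

    private static List<List<String>> emptyInboxes(int count) {
        List<List<String>> inboxes = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            inboxes.add(new ArrayList<>());
        }
        return inboxes;
    }

    public static void main(String[] args) {
        List<String> messages = Arrays.asList("m0", "m1", "m2", "m3", "m4", "m5");
        System.out.println("clustering:   " + clustering(messages, 3));
        System.out.println("broadcasting: " + broadcasting(messages, 3));
    }
}
```

With six messages and three instances, each instance sees two messages under clustering and all six under broadcasting.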

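Message ordering

- Normal ordered messages (Normal Ordered Message)

Messages that a consumer receives from the same message queue are ordered; messages received from different message queues may be unordered.

- Strictly ordered messages (Strictly Ordered Message)

All messages received by the consumer are ordered.

Message retry

Retry queue

- RocketMQ sets up, for every consumer group, a retry queue whose topic name is "%RETRY%+consumerGroup". This retry queue belongs to the consumer group, not to an individual topic.

- Because recovering from a failure takes some time, the retry queue has several retry levels, each with its own redelivery delay; the more retries, the longer the delay.

- RocketMQ first stores a message that needs a retry in the delay queue whose topic name is "SCHEDULE_TOPIC_XXXX"; a background scheduled task waits for the corresponding delay and then stores the message again in the "%RETRY%+consumerGroup" retry queue.

Dead-letter queue

- It handles messages that cannot be consumed normally. When a message fails on its first consumption attempt, the message queue retries it automatically; if consumption still fails after the maximum number of retries, the message queue does not discard the message immediately but sends it to a special queue that belongs to that consumer.

- RocketMQ calls a message that cannot be consumed under normal conditions a dead-letter message (Dead-Letter Message), and the special queue that stores such messages a dead-letter queue (Dead-Letter Queue). In RocketMQ, the messages in a dead-letter queue can be resent from the console so that a consumer instance consumes them again.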
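The path of a repeatedly failing message can be sketched as follows. This is a plain-Java illustration, not broker code. The "%RETRY%" prefix comes from the text above; the "%DLQ%" prefix and the limit of 16 retries are assumptions that the article does not state (they match what the open-source 4.x client uses by default, as far as I know), and the class `RetryFlowSketch` is hypothetical.

```java
public class RetryFlowSketch {
    static final String RETRY_PREFIX = "%RETRY%"; // from the text above
    static final String DLQ_PREFIX = "%DLQ%";     // assumption: not stated in the article
    static final int MAX_RETRIES = 16;            // assumption: default retry limit

    static String retryTopic(String consumerGroup) {
        return RETRY_PREFIX + consumerGroup;
    }

    static String deadLetterTopic(String consumerGroup) {
        return DLQ_PREFIX + consumerGroup;
    }

    /**
     * Topic in which a message ends up after one more failed attempt.
     * reconsumeTimes is the number of retries already performed. The
     * intermediate stop in SCHEDULE_TOPIC_XXXX is left out of this sketch.
     */
    static String nextTopicAfterFailure(String consumerGroup, int reconsumeTimes) {
        if (reconsumeTimes >= MAX_RETRIES) {
            return deadLetterTopic(consumerGroup); // retries exhausted
        }
        return retryTopic(consumerGroup);
    }

    public static void main(String[] args) {
        System.out.println(nextTopicAfterFailure("BinConsumerGroup", 0));  // %RETRY%BinConsumerGroup
        System.out.println(nextTopicAfterFailure("BinConsumerGroup", 16)); // %DLQ%BinConsumerGroup
    }
}
```

Both topics are keyed by the consumer group only, which reflects the point above that these queues belong to a group rather than to a business topic.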

测试代码

发送消息,不按顺序

public class ProducerNoOrder {

public static void main(String[] args) {

DefaultMQProducer producer;

Random r = new Random();

producer = new DefaultMQProducer("BinProducerGroup");

producer.setInstanceName("BinTestInstance_" + r.nextInt(1000));

producer.setNamesrvAddr("192.168.6.68:9876"); //broker.conf中定义的namesrvAddr

producer.setSendMsgTimeout(30000);

producer.setMaxMessageSize(500000);

producer.setRetryTimesWhenSendFailed(3);

try {

producer.start();

Thread.sleep(8000);

} catch (Exception e) {

e.printStackTrace();

}

for(int i=0;i<100;i++) {

try {

String msg = "message"+i;

Message sendMsg = new Message("BinTestTopic02",

"rocketTag01",

"key"+i,

msg.getBytes());

//mqProducer.sendOneway(sendMsg); //Only send message, don't care about send result (such as send log).

//sendResult = mqProducer.send(sendMsg);// Sync send

producer.send(sendMsg, new SendCallback() {

@Override

public void onSuccess(SendResult sendResult) {

System.out.println("Sent ok:"+sendResult);

}

@Override

public void onException(Throwable throwable) {

System.out.println("Sent failed:" + throwable.getMessage());

}

});

Thread.sleep(100);

} catch (Exception e) {

e.printStackTrace();

}

}

}

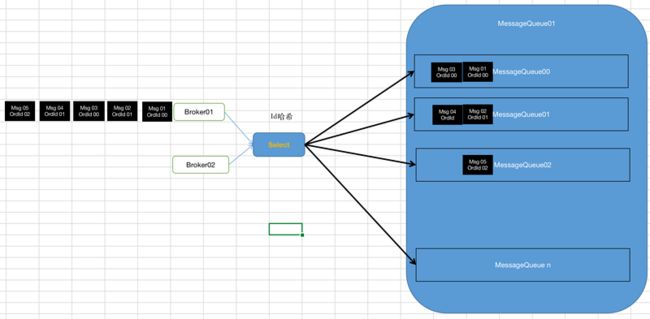

}顺序发送消息

    import org.apache.rocketmq.client.exception.MQBrokerException;
    import org.apache.rocketmq.client.exception.MQClientException;
    import org.apache.rocketmq.client.producer.DefaultMQProducer;
    import org.apache.rocketmq.client.producer.MessageQueueSelector;
    import org.apache.rocketmq.client.producer.SendResult;
    import org.apache.rocketmq.common.message.Message;
    import org.apache.rocketmq.common.message.MessageQueue;
    import org.apache.rocketmq.remoting.exception.RemotingException;

    import java.text.SimpleDateFormat;
    import java.util.Arrays;
    import java.util.Date;
    import java.util.List;
    import java.util.Random;

    public class ProducerInOrder {
        public static void main(String[] args) {
            DefaultMQProducer producer = new DefaultMQProducer("BinProducerGroup");
            Random r = new Random();
            producer.setInstanceName("BinTestInstance_" + r.nextInt(1000));
            producer.setNamesrvAddr("192.168.6.68:9876");
            producer.setSendMsgTimeout(30000);
            producer.setMaxMessageSize(500000);
            producer.setRetryTimesWhenSendFailed(3);
            try {
                producer.start();
                Thread.sleep(8000);
            } catch (Exception e) {
                e.printStackTrace();
            }
            String[] tags = new String[]{"TagA", "TagC", "TagD"};
            // Order list
            List<OrderStep> orderList = buildOrders();
            String dateStr = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss").format(new Date());
            int orderSize = orderList.size();
            for (int i = 0; i < 100; i++) {
                OrderStep step = orderList.get(i % orderSize);
                // Prefix the body with a timestamp
                String body = dateStr + " Hello RocketMQ " + step;
                Message msg = new Message("BinTestTopic02", tags[i % tags.length], "KEY" + i, body.getBytes());
                try {
                    SendResult sendResult = producer.send(msg, new MessageQueueSelector() {
                        @Override
                        public MessageQueue select(List<MessageQueue> mqs, Message msg, Object arg) {
                            Long id = (Long) arg; // choose the target queue from the order id
                            long index = id % mqs.size();
                            return mqs.get((int) index);
                        }
                    }, step.getOrderId()); // the order id is handed to the selector as arg
                    // Print inside the try block so a failed send cannot cause a NullPointerException
                    System.out.println(String.format("SendResult status:%s, queueId:%d, body:%s",
                            sendResult.getSendStatus(),
                            sendResult.getMessageQueue().getQueueId(),
                            body));
                } catch (MQClientException | RemotingException | MQBrokerException | InterruptedException e) {
                    e.printStackTrace();
                }
            }
            // producer.shutdown();
        }

        /**
         * One step of an order
         */
        private static class OrderStep {
            private final long orderId;
            private final String desc;

            OrderStep(long orderId, String desc) {
                this.orderId = orderId;
                this.desc = desc;
            }

            public long getOrderId() {
                return orderId;
            }

            public String getDesc() {
                return desc;
            }

            @Override
            public String toString() {
                return "OrderStep{" +
                        "orderId=" + orderId +
                        ", desc='" + desc + '\'' +
                        '}';
            }
        }

        /**
         * Builds the simulated order data
         */
        private static List<OrderStep> buildOrders() {
            return Arrays.asList(
                    new OrderStep(15103111039L, "create"),
                    new OrderStep(15103111065L, "create"),
                    new OrderStep(15103111039L, "pay"),
                    new OrderStep(15103117235L, "create"),
                    new OrderStep(15103111065L, "pay"),
                    new OrderStep(15103117235L, "pay"),
                    new OrderStep(15103111065L, "complete"),
                    new OrderStep(15103111039L, "push"),
                    new OrderStep(15103117235L, "complete"),
                    new OrderStep(15103111039L, "complete"));
        }
    }

As the output shows, messages with the same orderId are all sent to the same queueId.

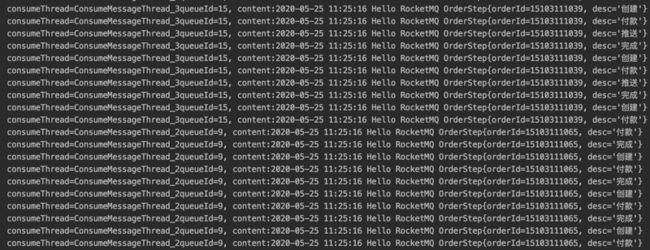
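The queue choice made by the selector can be reproduced without a broker. The sketch below applies the same modulo rule to the three order ids from the test data, assuming 4 queues as set by defaultTopicQueueNums in broker.conf. The class `QueueSelectionSketch` is hypothetical and only serves this illustration.

```java
public class QueueSelectionSketch {
    /** Same rule as the MessageQueueSelector above: orderId modulo queue count. */
    static int selectQueue(long orderId, int queueCount) {
        return (int) (orderId % queueCount);
    }

    public static void main(String[] args) {
        long[] orderIds = {15103111039L, 15103111065L, 15103117235L};
        int queueCount = 4; // defaultTopicQueueNums in broker.conf
        for (long orderId : orderIds) {
            System.out.println("orderId=" + orderId + " -> queueId=" + selectQueue(orderId, queueCount));
        }
        // prints queueId 3, 1 and 3 for the three ids
    }
}
```

Two different orders land on queue 3 here. That does not break the guarantee: every step of one order still goes to a single queue, so the steps of each order stay in sending order. For ids that could be negative, Math.floorMod would be the safer choice.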

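Ordered consumption

    import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
    import org.apache.rocketmq.client.consumer.listener.ConsumeOrderlyContext;
    import org.apache.rocketmq.client.consumer.listener.ConsumeOrderlyStatus;
    import org.apache.rocketmq.client.consumer.listener.MessageListenerOrderly;
    import org.apache.rocketmq.client.exception.MQClientException;
    import org.apache.rocketmq.common.consumer.ConsumeFromWhere;
    import org.apache.rocketmq.common.message.MessageExt;
    import org.apache.rocketmq.common.protocol.heartbeat.MessageModel; // package as in the 4.x client

    import java.util.List;
    import java.util.Random;

    // Class name chosen for this listing; the original shows only the method body.
    public class ConsumerInOrder {
        public static void main(String[] args) {
            DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("BinConsumerGroup");
            Random r = new Random();
            String instanceName = "ConsumerInstance" + r.nextInt(100);
            consumer.setInstanceName(instanceName);
            consumer.setNamesrvAddr("192.168.6.68:9876");
            consumer.setConsumeTimeout(20000); // note: the client reads this value in minutes
            consumer.registerMessageListener(new MessageListenerOrderly() {
                @Override
                public ConsumeOrderlyStatus consumeMessage(List<MessageExt> msgs, ConsumeOrderlyContext context) {
                    context.setAutoCommit(true);
                    for (MessageExt msg : msgs) {
                        // Each queue is consumed by only one thread at a time, so orders are sequential within each queue (partition)
                        System.out.println("consumeThread=" + Thread.currentThread().getName() + ", queueId=" + msg.getQueueId() + ", content:" + new String(msg.getBody()));
                    }
                    return ConsumeOrderlyStatus.SUCCESS;
                }
            });
            try {
                consumer.subscribe("BinTestTopic02", "*");
                consumer.setMessageModel(MessageModel.CLUSTERING);
                consumer.setConsumeFromWhere(ConsumeFromWhere.CONSUME_FROM_FIRST_OFFSET);
                consumer.setConsumeMessageBatchMaxSize(10);
                consumer.start();
            } catch (MQClientException e) {
                e.printStackTrace();
            }
        }
    }

As the output shows, messages from the same queueId are consumed in the order in which they were sent to that queue.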

延时消息

延时消息最典型的应用场景就是订单状态查询。用户下单,30分钟后确认该订单是否支付,如果未支付则将当前订单取消。

DefaultMQProducer producer = new DefaultMQProducer("BinProducerGroup");

Random r = new Random();

producer.setInstanceName("BinTestInstance_" + r.nextInt(1000));

producer.setNamesrvAddr("192.168.6.68:9876");

producer.setSendMsgTimeout(30000);

producer.setMaxMessageSize(500000);

producer.setRetryTimesWhenSendFailed(3);

try {

producer.start();

Thread.sleep(8000);

} catch (Exception e) {

e.printStackTrace();

}

int totalMessagesToSend = 100;

for (int i = 0; i < totalMessagesToSend; i++) {

Message message = new Message("BinTestTopic02", ("Scheduled message_10s_" + i).getBytes());

// 延时等级时长如下,从1到18

//private String messageDelayLevel = "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h";

message.setDelayTimeLevel(3); // 10s

// 发送消息

SendResult result = producer.send(message);

System.out.println("Send result:" + result);

}

// 关闭生产者

producer.shutdown();

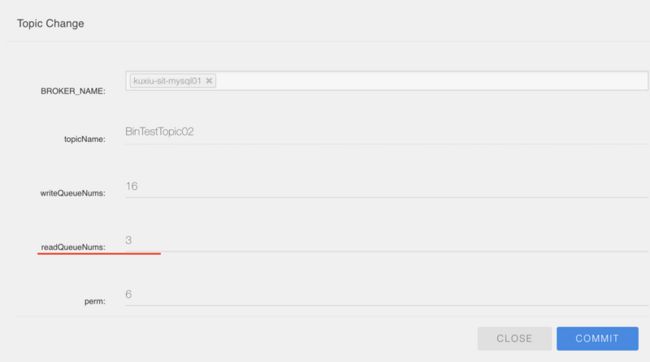

}提高消费的速度

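Increase the parallel processing capacity of consumption

- Within the same ConsumerGroup, raise parallelism by adding Consumer instances. The number of Consumer instances should not exceed the number of subscribed queues, because extra instances are not assigned any queue.

- Raise the number of parallel consuming threads of a single Consumer by changing the consumeThreadMin and consumeThreadMax parameters.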
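Why extra Consumer instances do not help can be seen from a simplified model of how queues are shared out in clustering mode. The sketch below splits the queue ids into near-equal contiguous ranges, one per consumer. It only approximates an averaging strategy and is not the client's actual allocation code; the class `QueueAllocationSketch` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class QueueAllocationSketch {
    /** Splits queue ids 0..queueCount-1 into near-equal contiguous ranges, one per consumer. */
    static List<List<Integer>> allocate(int queueCount, int consumerCount) {
        List<List<Integer>> result = new ArrayList<>();
        int base = queueCount / consumerCount;  // queues every consumer gets
        int extra = queueCount % consumerCount; // the first 'extra' consumers get one more
        int next = 0;
        for (int c = 0; c < consumerCount; c++) {
            int size = base + (c < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int k = 0; k < size; k++) {
                owned.add(next++);
            }
            result.add(owned);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(allocate(4, 2)); // [[0, 1], [2, 3]]
        System.out.println(allocate(4, 6)); // [[0], [1], [2], [3], [], []]
    }
}
```

With 4 queues and 6 consumers, the last two consumers own no queue and stay idle, which is why the instance count should stay at or below the queue count.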

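Batch consumption

- Use setConsumeMessageBatchMaxSize to set the maximum number of messages fetched in one batch, so that several messages are consumed at a time.

The project code is here:

Code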