YOLOv4 Object Detection with OpenCV 4.4

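On July 18, 2020, the OpenCV website released the latest version, OpenCV 4.4.0. What excited me most is that it supports YOLOv4. I had been struggling with the YOLO family for a while before that: YOLOv4 performs well and is accurate, but OpenCV did not support it at the time, so when I built a Qt interface I could not load the YOLOv4 weights and could not run object detection. In the end I had no choice but to fall back to YOLOv3.

pytorch-yolov4 also gives good results, but the weights it produces are saved as .pth files, which leaves PyQt as the only option for the interface. Since Python runs much slower than C++, and this project ultimately has to do detection in the field, I gave up on that route.

At the time I felt that a model trained with pytorch-yolov4 was only good for playing around on my own computer and could not be turned into a deployed project.

But now OpenCV 4.4 supports YOLOv4. It has arrived... (more than a month late)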


- I. Environment setup

- II. Object detection with the official weights

- III. Object detection with your own trained weights

I. Environment setup

1. OpenCV 4.4 installer and the YOLOv4 configuration files:

Link: https://pan.baidu.com/s/1Xg-Er1mJNCvSQyMt3B2HBw

Extraction code: p2ye

2. For configuring OpenCV in Visual Studio, see the following blog post:

https://blog.csdn.net/shuiyixin/article/details/105998661

PS: I have VS2019 installed, but the Path system environment variable still points to the vc15 folder.

II. Object detection with the official weights

Source code:

#pragma once

#include

III. Object detection with your own trained weights

1. First train your own weights with Darknet YOLOv4. For the detailed steps, see this post of mine:

YOLOv4 Darknet training process on Windows 10

https://blog.csdn.net/qq_45445740/article/details/108253155

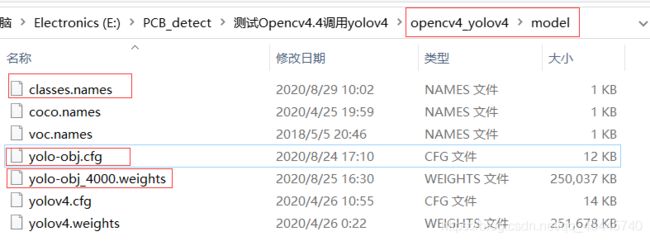

2. Once training finishes, your weight files are generated in the darknet-master\build\darknet\x64\backup folder. Here I use the yolo-obj_4000.weights file.

Also take the yolo-obj.cfg file from the darknet-master\build\darknet\x64 folder.

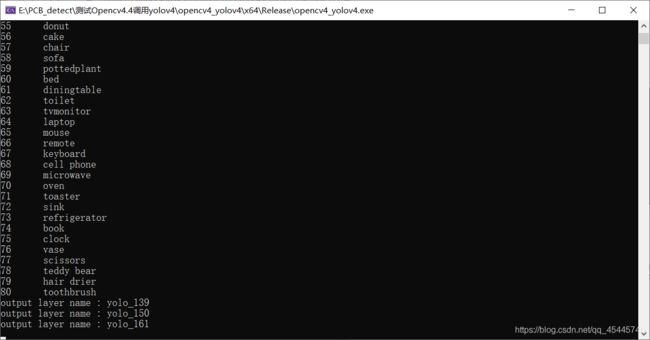

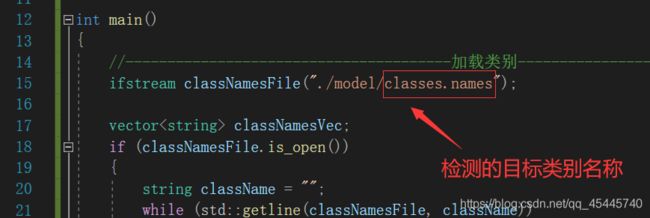

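3. Change the relevant places in the program above so that they point to the weights and configuration files for the objects you want to detect.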