import pandas as pd
import numpy as np
import os
import matplotlib.pyplot as plt

Load the data and apply some simple preprocessing

os.chdir('D:\\proj\\titanic')
df = pd.read_csv('train.csv', encoding='gbk')
df.columns  # inspect the column names

# One-hot encode the categorical columns (dtype=float keeps the dummies numeric; recent pandas returns booleans otherwise)
sex_dummy = pd.get_dummies(df['Sex'], prefix='Sex', dtype=float)
pclass_dummy = pd.get_dummies(df['Pclass'], prefix='Pclass', dtype=float)
embarked_dummy = pd.get_dummies(df['Embarked'], prefix='Embarked', dtype=float)
parch_dummy = pd.get_dummies(df['Parch'], prefix='Parch', dtype=float)
sibsp_dummy = pd.get_dummies(df['SibSp'], prefix='SibSp', dtype=float)

# Drop the ID, the original categorical columns, and the columns not used as features
df.drop(['PassengerId', 'Sex', 'Pclass', 'Embarked', 'Parch', 'SibSp', 'Name', 'Ticket', 'Cabin'], axis=1, inplace=True)
df_train = pd.concat([df, sex_dummy, pclass_dummy, embarked_dummy, parch_dummy, sibsp_dummy], axis=1)

# Feature table with the label Survived as the last column
dataset = df_train.reindex(columns=['Age', 'Fare', 'Sex_female', 'Sex_male', 'Pclass_1', 'Pclass_2', 'Pclass_3', 'Embarked_C', 'Embarked_Q', 'Embarked_S', 'Parch_0', 'Parch_1', 'Parch_2', 'Parch_3', 'Parch_4', 'Parch_5', 'Parch_6', 'SibSp_0', 'SibSp_1', 'SibSp_2', 'SibSp_3', 'SibSp_4', 'SibSp_5', 'SibSp_8', 'Survived'])

# Same features without Age (not used below)
dataset_1 = df_train.reindex(columns=['Fare', 'Sex_female', 'Sex_male', 'Pclass_1', 'Pclass_2', 'Pclass_3', 'Embarked_C', 'Embarked_Q', 'Embarked_S', 'Parch_0', 'Parch_1', 'Parch_2', 'Parch_3', 'Parch_4', 'Parch_5', 'Parch_6', 'SibSp_0', 'SibSp_1', 'SibSp_2', 'SibSp_3', 'SibSp_4', 'SibSp_5', 'SibSp_8', 'Survived'])

# Fill missing ages with the mean age (assign back instead of chained inplace=True)
dataset['Age'] = dataset['Age'].fillna(dataset['Age'].mean())
dataset.info()
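To see what the preprocessing above produces, here is a small sketch on a made-up four-row frame (the values are invented for illustration, not taken from train.csv). `pd.get_dummies` turns each category into its own 0/1 column, and the missing age is replaced by the mean of the observed ages.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data with the same kinds of columns as train.csv
toy = pd.DataFrame({
    'Sex': ['male', 'female', 'female', 'male'],
    'Pclass': [3, 1, 2, 3],
    'Age': [22.0, np.nan, 26.0, 35.0],
    'Survived': [0, 1, 1, 0],
})

# One 0/1 column per category, e.g. Sex_female and Sex_male
sex_dummy = pd.get_dummies(toy['Sex'], prefix='Sex', dtype=float)
pclass_dummy = pd.get_dummies(toy['Pclass'], prefix='Pclass', dtype=float)

# Replace the missing age with the mean of the observed ages
toy['Age'] = toy['Age'].fillna(toy['Age'].mean())

# Numeric features first, label last, as in `dataset`
encoded = pd.concat([toy[['Age']], sex_dummy, pclass_dummy, toy['Survived']], axis=1)
print(encoded)
```

Each row has exactly one 1 among the `Pclass_*` columns, which is why the category order does not matter to the model.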

Implementing logistic regression from scratch

The logistic (sigmoid) function

# Logistic (sigmoid) function: maps any real input into (0, 1)
def sigmoid(x):
    s = 1 / (1 + np.exp(-x))
    return s
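The `sigmoid` above computes 1 / (1 + e^(-x)). For large negative inputs `np.exp(-x)` overflows and NumPy emits a RuntimeWarning, although the result still rounds to 0. A common numerically stable variant, sketched here as an alternative rather than what the original code uses, evaluates an algebraically equal form chosen by the sign of x (SciPy's `scipy.special.expit` provides the same function):

```python
import numpy as np

def stable_sigmoid(x):
    """Sigmoid that never calls np.exp on a large positive argument."""
    x = np.asarray(x, dtype=float)
    # z = exp(-|x|) is always in (0, 1], so it cannot overflow
    z = np.exp(-np.abs(x))
    # x >= 0: 1 / (1 + exp(-x));  x < 0: exp(x) / (1 + exp(x))
    return np.where(x >= 0, 1 / (1 + z), z / (1 + z))

print(stable_sigmoid([-1000.0, 0.0, 1000.0]))
```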

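Define the standardization function

# Standardize xmat column by column: subtract the mean, divide by the standard deviation
def normalizer(xmat):
    inmat = xmat.copy()
    mean = np.mean(inmat, axis=0)
    std = np.std(inmat, axis=0)
    # A constant column would have std == 0 and produce NaN here
    result = (inmat - mean) / std
    return result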
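`normalizer` rescales each column to mean 0 and standard deviation 1 (z-scores). It recomputes the statistics from whatever matrix it receives, so scoring new passengers with the learned weights would require reusing the training mean and standard deviation. A minimal sketch that keeps them; `fit_standardizer` and `apply_standardizer` are hypothetical helper names, and the sample numbers are made up:

```python
import numpy as np

def fit_standardizer(x):
    """Return the column means and standard deviations of x."""
    x = np.asarray(x, dtype=float)
    mean = x.mean(axis=0)
    std = x.std(axis=0)
    # Guard against constant columns, which would otherwise divide by zero
    std = np.where(std == 0, 1.0, std)
    return mean, std

def apply_standardizer(x, mean, std):
    """Standardize x using statistics computed on the training data."""
    return (np.asarray(x, dtype=float) - mean) / std

# Made-up (Age, Fare) rows
train = np.array([[22.0, 7.25], [38.0, 71.28], [26.0, 7.92], [35.0, 53.10]])
mean, std = fit_standardizer(train)
z = apply_standardizer(train, mean, std)
print(z.mean(axis=0), z.std(axis=0))  # approximately [0, 0] and [1, 1]
```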

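Compute the loss function

# Average binary cross-entropy (negative log-likelihood) of the predictions
def Loss(xmat, ymat, weight, n):
    y_pred = sigmoid(xmat @ weight)
    # Clip predictions away from 0 and 1 so that np.log stays finite
    y_pred = np.clip(y_pred, 1e-12, 1 - 1e-12)
    loss = -(1 / n) * np.sum(np.multiply(ymat, np.log(y_pred)) + np.multiply(1 - ymat, np.log(1 - y_pred)))
    return loss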
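A quick sanity check for `Loss`: with all weights at zero every prediction is sigmoid(0) = 0.5, so the loss equals ln 2 (about 0.693) whatever the labels are, and it approaches 0 when the predictions match the labels confidently. The sketch below reimplements the same formula on plain NumPy arrays (an assumption; the original passes `np.matrix` objects) using random made-up features:

```python
import numpy as np

def cross_entropy(x, y, w):
    """Average binary cross-entropy, the same formula as Loss above."""
    p = 1 / (1 + np.exp(-(x @ w)))
    p = np.clip(p, 1e-12, 1 - 1e-12)  # keep log() finite
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))
y = np.array([[1.0], [0.0], [1.0], [1.0], [0.0]])
w0 = np.zeros((3, 1))
print(cross_entropy(x, y, w0), np.log(2))  # the two values agree
```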

Compute the logistic regression weights

# Fit the logistic regression weights with full-batch gradient descent

def BDG_LR(dataset,penalty=None,Lambda=1,alpha=0.001,mat_iter=10001):

xmat=np.mat(dataset.iloc[:,:-1])

ymat=np.mat(dataset.iloc[:,-1]).reshape(-1,1)

xmat=normalizer(xmat)

n,m=xmat.shape

losslist=[]

weight=np.zeros((m,1))

for i in range(mat_iter):

grad=xmat.T*(sigmoid(xmat*weight)-ymat)/n

# print((sigmoid(xmat*weight)-ymat))

# print(grad)

if penalty=='l2':

grad=grad+Lambda*weight

if penalty=='l1':

grad=grad+Lambda*np.sign(weight)

weight=weight-alpha*grad

if i % 100 ==0:

losslist.append(Loss(xmat,ymat,weight,n))

#随着迭代次数的增加,用新的权重计算损失函数

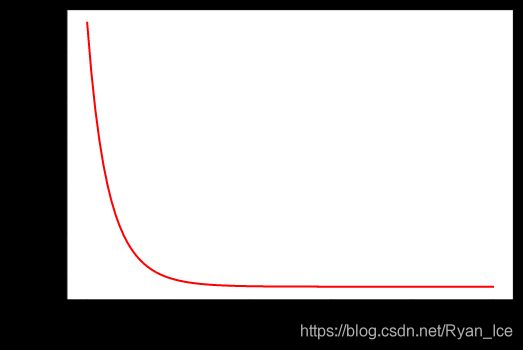

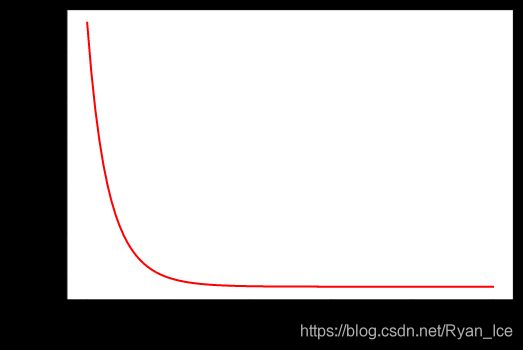

plt.figure()

x=range(len(losslist))

plt.plot(x,losslist,color='r')

plt.xlabel('number')

plt.ylabel('loss')

plt.show()

return weight,losslist

用数据集测试

BDG_LR(dataset,penalty='l2')

损失函数结果如下图
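The update inside `BDG_LR` uses the analytic gradient X^T(sigmoid(Xw) - y)/n of the cross-entropy, and the `'l2'` branch adds `Lambda * weight`, which is the gradient of an extra (Lambda/2)·‖w‖² term in the loss. A finite-difference gradient check is one way to confirm both. The sketch below runs it on small random data (not the Titanic set) with plain NumPy arrays; `reg_loss`, `analytic_grad`, and `numeric_grad` are hypothetical helper names:

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def reg_loss(x, y, w, lam):
    """Cross-entropy plus an L2 term (lam / 2) * ||w||^2."""
    p = sigmoid(x @ w)
    ce = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))
    return ce + 0.5 * lam * np.sum(w ** 2)

def analytic_grad(x, y, w, lam):
    """Same expression as grad in BDG_LR with penalty='l2'."""
    n = x.shape[0]
    return x.T @ (sigmoid(x @ w) - y) / n + lam * w

def numeric_grad(f, w, eps=1e-6):
    """Central finite differences, one coordinate at a time."""
    g = np.zeros_like(w)
    for i in range(w.size):
        step = np.zeros_like(w)
        step.flat[i] = eps
        g.flat[i] = (f(w + step) - f(w - step)) / (2 * eps)
    return g

rng = np.random.default_rng(42)
x = rng.normal(size=(20, 4))
y = (rng.random((20, 1)) > 0.5).astype(float)
w = rng.normal(size=(4, 1))
lam = 1.0

g_analytic = analytic_grad(x, y, w, lam)
g_numeric = numeric_grad(lambda v: reg_loss(x, y, v, lam), w)
print(np.max(np.abs(g_analytic - g_numeric)))  # should be far below 1e-6
```

Note that `Loss`, and therefore the plotted `losslist`, reports only the cross-entropy part; the penalty term is not included in the recorded values.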