OpenGL (1): OpenGL ES drawing basics

OpenGL (2): GLSurface video recording

OpenGL (3): Applying filters

OpenGL (4): Stickers and skin-smoothing theory

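Face detection and landmark localization are done with SeetaFace2.

For details, search GitHub for the keyword and follow its Android build instructions. No link is given here because articles containing links are easily locked on this writing platform.

Once it is built, set up the C++ environment in the Android project.

For the specific APIs, refer to its examples.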

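Big-eye algorithm

It is essentially the algorithm formula behind the corresponding Photoshop feature.

Local Scale warps (local scaling)

Prerequisite: the eye positions must be located first.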

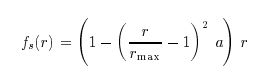
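The figure is not reproduced in text. The reconstruction below assumes it shows the usual local scaling warp around a center C (here the eye), with radius r_max and strength a. This is the same form that the commented-out line in the shader implements with a = 0.5. Under that assumption, the inverse mapping from an output point X to its source point U reads as follows, and points with r ≥ r_max are left unchanged (U = X):

```latex
\begin{aligned}
r &= \lVert X - C \rVert \\
f_s(r) &= \left(1 - \left(\frac{r}{r_{\max}} - 1\right)^{2} a\right) r, \qquad 0 \le r < r_{\max} \\
U &= C + \frac{f_s(r)}{r}\,(X - C) = C + \left(1 - \left(\frac{r}{r_{\max}} - 1\right)^{2} a\right)(X - C)
\end{aligned}
```

With a > 0 we get f_s(r) < r, so U lies closer to C than X does and the region around the center is magnified. At r = r_max we get f_s(r) = r, so the warp blends continuously into the untouched area.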

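Explanation: r_max is the radius of the circular selection, and r is the distance from a point to the center of the circle (here, the eye center). The point is moved along the vector from the center toward it. a is a scaling parameter in (-1, 1): a < 0 shrinks and a > 0 enlarges. Given an output point X, we compute the coordinate U it had before the transformation (an exact floating-point coordinate). We then interpolate the pre-transform image's pixels around U to get the pixel value at U, and that value is assigned to X. Evaluating every pixel in the circular selection this way produces the transformed image.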
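The procedure above has two steps: first map each output pixel back to U, then interpolate around U. The sketch below runs it on the CPU for a grayscale image stored as a row-major `float[]`. The class name, the image layout, and the clamping at the border are assumptions made for illustration. On the GPU, `texture2D` performs the interpolation step itself when the texture uses `GL_LINEAR` filtering.

```java
/**
 * Hypothetical CPU sketch of the local scaling warp: inverse-map every output
 * pixel X to U, then bilinearly sample the source image at U.
 */
final class LocalScaleWarp {

    /** Bilinear interpolation of img (w x h, row-major) at (x, y), clamped to the image. */
    static float bilinear(float[] img, int w, int h, double x, double y) {
        x = Math.max(0, Math.min(w - 1, x));
        y = Math.max(0, Math.min(h - 1, y));
        int x0 = (int) Math.floor(x), y0 = (int) Math.floor(y);
        int x1 = Math.min(x0 + 1, w - 1), y1 = Math.min(y0 + 1, h - 1);
        double fx = x - x0, fy = y - y0;
        double top = img[y0 * w + x0] * (1 - fx) + img[y0 * w + x1] * fx;
        double bottom = img[y1 * w + x0] * (1 - fx) + img[y1 * w + x1] * fx;
        return (float) (top * (1 - fy) + bottom * fy);
    }

    /** Warps src around center (cx, cy) with radius rmax and strength a in (-1, 1). */
    static float[] warp(float[] src, int w, int h, double cx, double cy, double rmax, double a) {
        float[] dst = new float[w * h];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                double dx = x - cx, dy = y - cy;
                double r = Math.sqrt(dx * dx + dy * dy);
                double ux = x, uy = y; // outside the circle: U = X
                if (r < rmax) {
                    double t = r / rmax - 1.0;
                    double ratio = 1.0 - t * t * a; // f_s(r) / r
                    ux = cx + ratio * dx;
                    uy = cy + ratio * dy;
                }
                dst[y * w + x] = bilinear(src, w, h, ux, uy); // value at U becomes value at X
            }
        }
        return dst;
    }
}
```

With a = 0 the ratio is exactly 1, so every U equals its X and the image comes back unchanged. This is a handy sanity check before trying positive values.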

Understanding the figure

Implementation

Writing the shader

bigeye_frag.frag

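```glsl
precision mediump float; // default float precision

varying vec2 aCoord; // texture coordinate from the vertex shader

uniform sampler2D vTexture; // input image (samplerExternalOES is only needed for the raw camera texture)

uniform vec2 left_eye;  // left eye center, normalized texture coordinates
uniform vec2 right_eye; // right eye center, normalized texture coordinates

// r: distance between the point being drawn and the eye
// rmax: maximum radius
// 0.5: scaling parameter a in (-1, 1); a > 0 enlarges
float fs(float r, float rmax) {
    // Full formula: return (1.0 - pow(r / rmax - 1.0, 2.0) * 0.5) * r;
    // Returning only the ratio fs(r) / r saves a multiply here and a divide in newCoord.
    // Square by multiplication: GLSL leaves pow(x, y) undefined for x < 0,
    // and r / rmax - 1.0 is negative inside the circle.
    float t = r / rmax - 1.0;
    return 1.0 - t * t * 0.5;
}

vec2 newCoord(vec2 coord, vec2 eye, float rmax) {
    vec2 p = coord;
    // distance between the point being drawn (coord) and the eye
    float r = distance(coord, eye);
    if (r < rmax) {
        // inside the circle: ratio of the new distance to the original distance
        float fsr = fs(r, rmax);
        // (scaled point - eye) / (original point - eye) = fs(r) / r
        // => scaled point = fs(r) / r * (original point - eye) + eye
        // p = fs(r) / r * (coord - eye) + eye;
        // fs() already returns fs(r) / r, so the r cancels out:
        p = fsr * (coord - eye) + eye;
    }
    return p;
}

void main() {
    // gl_FragColor = texture2D(vTexture, aCoord); // plain pass-through
    float rmax = distance(left_eye, right_eye) / 2.0;
    vec2 p = newCoord(aCoord, left_eye, rmax);
    // feed p (not aCoord) in, otherwise the left-eye result is thrown away
    p = newCoord(p, right_eye, rmax);
    gl_FragColor = texture2D(vTexture, p); // sample the source pixel at the warped coordinate
}
```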
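The shader's coordinate math can also be checked off the GPU. Below is a plain-Java mirror of the `fs`/`newCoord`/`main` logic, using the same a = 0.5 and squaring t = r/rmax - 1 directly, since GLSL leaves `pow(x, y)` undefined for x < 0. The class and method names are hypothetical. The sketch also shows why `main` has to pass `p`, not `aCoord`, into the second `newCoord` call: with `aCoord`, the right-eye call would overwrite the left-eye result.

```java
/** Hypothetical plain-Java mirror of the big-eye shader math (normalized coordinates). */
final class BigEyeMath {
    static final float A = 0.5f; // scaling parameter a, same constant as in the shader

    /** fs(r) / r: ratio of the new distance to the old one. */
    static float fsRatio(float r, float rmax) {
        float t = r / rmax - 1.0f;
        return 1.0f - t * t * A;
    }

    static float distance(float[] p, float[] q) {
        float dx = p[0] - q[0], dy = p[1] - q[1];
        return (float) Math.sqrt(dx * dx + dy * dy);
    }

    /** Like newCoord(): pull coord toward the eye when it lies inside the circle. */
    static float[] newCoord(float[] coord, float[] eye, float rmax) {
        float r = distance(coord, eye);
        if (r >= rmax) {
            return coord.clone(); // outside: unchanged
        }
        float k = fsRatio(r, rmax);
        return new float[] { k * (coord[0] - eye[0]) + eye[0], k * (coord[1] - eye[1]) + eye[1] };
    }

    /** Like main(): warp around the left eye, then feed that result into the right-eye warp. */
    static float[] sampleCoord(float[] aCoord, float[] leftEye, float[] rightEye) {
        float rmax = distance(leftEye, rightEye) / 2.0f;
        float[] p = newCoord(aCoord, leftEye, rmax);
        return newCoord(p, rightEye, rmax);
    }
}
```

Because rmax is half the eye distance, the two circles only touch. A point pulled toward the left eye therefore stays outside the right circle, so the chaining never warps a pixel twice.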

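OpenCV setup + capturing face data with CameraX

See the earlier article:

OpenCV series: face localization & training a face localization model

The detected face image is passed to SeetaFace2 to locate the eyes. From those positions we compute the warp parameters, and the shader then redraws the specified region.

Big-eye filter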

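```java
import android.content.Context;
import android.opengl.GLES20;
import android.util.Log;

import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

public class BigEyeFilter extends AbstractFrameFilter {

    // Direct buffers holding one vec2 each (2 floats = 8 bytes)
    private FloatBuffer left;
    private FloatBuffer right;

    // Uniform locations of left_eye / right_eye in the shader
    int left_eye;
    int right_eye;

    // Face of the current frame (eye landmarks from SeetaFace2)
    Face face;

    public BigEyeFilter(Context context) {
        super(context, R.raw.base_vert, R.raw.bigeye_frag);
        left = ByteBuffer.allocateDirect(8).order(ByteOrder.nativeOrder()).asFloatBuffer();
        right = ByteBuffer.allocateDirect(8).order(ByteOrder.nativeOrder()).asFloatBuffer();
    }

    @Override
    public void initGL(Context context, int vertexShaderId, int fragmentShaderId) {
        super.initGL(context, vertexShaderId, fragmentShaderId);
        left_eye = GLES20.glGetUniformLocation(program, "left_eye");
        right_eye = GLES20.glGetUniformLocation(program, "right_eye");
    }

    @Override
    public int onDraw(int texture, FilterChain filterChain) {
        // Pick up the face shared through the chain before drawing
        FilterContext filterContext = filterChain.filterContext;
        face = filterContext.face;
        return super.onDraw(texture, filterChain);
    }

    @Override
    public void beforeDraw() {
        super.beforeDraw();
        if (face == null) {
            // Log.e("zcw_opengl", "no face detected");
            // Reset both eyes so rmax becomes 0 and the shader passes pixels through;
            // otherwise the last face's positions would stay in the program's uniforms.
            GLES20.glUniform2f(left_eye, 0f, 0f);
            GLES20.glUniform2f(right_eye, 0f, 0f);
            return;
        }
        Log.e("zcw_opengl", "face detected");
        // Pixel coordinates -> normalized texture coordinates. y is flipped because the
        // image origin is top-left while the texture origin is bottom-left.
        // The (float) casts avoid integer division if the Face fields are ints.
        float x = (float) face.left_x / face.imgWidth;
        float y = 1.0f - (float) face.left_y / face.imgHeight;
        left.clear();
        left.put(x).put(y).position(0);
        GLES20.glUniform2fv(left_eye, 1, left);

        x = (float) face.right_x / face.imgWidth;
        y = 1.0f - (float) face.right_y / face.imgHeight;
        right.clear();
        right.put(x).put(y).position(0);
        GLES20.glUniform2fv(right_eye, 1, right);
    }
}
```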
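`beforeDraw` converts SeetaFace2's pixel landmarks into the normalized texture space that the shader works in. The pure-Java helper below isolates that arithmetic together with the shader's radius. It assumes, as the code above does, that the detection image and the GL frame share the same orientation. The helper's name and signatures are hypothetical.

```java
/** Hypothetical helper mirroring the coordinate math in BigEyeFilter.beforeDraw(). */
final class EyeCoords {

    /** Pixel (x, y) with a top-left origin -> texture (s, t) with a bottom-left origin. */
    static float[] toTexCoord(float x, float y, int imgWidth, int imgHeight) {
        return new float[] { x / imgWidth, 1.0f - y / imgHeight };
    }

    /** The shader's rmax: half the eye distance, measured in normalized texture units. */
    static float rmax(float[] leftTex, float[] rightTex) {
        float dx = leftTex[0] - rightTex[0], dy = leftTex[1] - rightTex[1];
        return (float) Math.sqrt(dx * dx + dy * dy) / 2.0f;
    }
}
```

Because rmax is measured in normalized units, a non-square frame such as 480×640 gives one unit of s a span of 480 px and one unit of t a span of 640 px. The affected region is then an ellipse in pixel terms rather than a circle. Correcting for the aspect ratio would be an extension; the original code does not do it.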

The filters are organized with the chain-of-responsibility pattern, which makes developing new filters simpler.

```java
public class FilterContext {

    public Face face;         // face detected in the current frame
    public float[] cameraMtx; // camera transform matrix
    public int width;         // output width
    public int height;        // output height

    public void setSize(int width, int height) {
        this.width = width;
        this.height = height;
    }

    public void setTransformMatrix(float[] mtx) {
        this.cameraMtx = mtx;
    }

    public void setFace(Face face) {
        this.face = face;
    }
}
```


```java
import java.util.List;

public class FilterChain {

    public FilterContext filterContext; // data shared by every filter in the chain
    private List<AbstractFilter> filters;
    private int index; // position of the filter this chain node will run

    public FilterChain(List<AbstractFilter> filters, int index, FilterContext filterContext) {
        this.filters = filters;
        this.index = index;
        this.filterContext = filterContext;
    }

    public int proceed(int textureId) {
        // Past the last filter: this texture is the final result
        if (index >= filters.size()) {
            return textureId;
        }
        // The current filter receives a chain that points at the next filter
        FilterChain nextFilterChain = new FilterChain(filters, index + 1, filterContext);
        AbstractFilter abstractFilter = filters.get(index);
        return abstractFilter.onDraw(textureId, nextFilterChain);
    }

    public void setSize(int width, int height) {
        filterContext.setSize(width, height);
    }

    public void setTransformMatrix(float[] mtx) {
        filterContext.setTransformMatrix(mtx);
    }

    public void setFace(Face face) {
        filterContext.setFace(face);
    }

    public void release() {
        for (AbstractFilter filter : filters) {
            filter.release();
        }
    }
}
```
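The recursion in `proceed` is easier to follow without GL. The mock below is GL-free: "textures" are plain ints, and each stand-in filter only logs what it would draw before passing its own output texture on, the way an `AbstractFrameFilter` would. All names in it are illustrative and not part of the project.

```java
import java.util.List;

/** GL-free mock of FilterChain; names are illustrative only. */
final class MockChain {

    /** Stand-in for AbstractFilter.onDraw(int, FilterChain). */
    interface Filter {
        int onDraw(int texture, MockChain chain);
    }

    final List<Filter> filters;
    final int index;
    final List<String> log; // shared record of the order in which filters ran

    MockChain(List<Filter> filters, int index, List<String> log) {
        this.filters = filters;
        this.index = index;
        this.log = log;
    }

    /** Same recursion as FilterChain.proceed(). */
    int proceed(int texture) {
        if (index >= filters.size()) {
            return texture; // past the last filter: final result
        }
        MockChain next = new MockChain(filters, index + 1, log);
        return filters.get(index).onDraw(texture, next);
    }

    /** Acts like a frame filter: "draws" into its own output texture, then hands it on. */
    static Filter frameFilter(String name, int outputTexture) {
        return (texture, chain) -> {
            chain.log.add(name + ":" + texture + "->" + outputTexture);
            return chain.proceed(outputTexture);
        };
    }
}
```

Each node only knows "the rest of the chain". A filter can therefore be added or reordered by changing the list alone, and no other filter has to be touched.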


```java
import android.content.Context;
import android.opengl.GLES20;

/**
 * Base class for filters that render into an FBO (off-screen framebuffer).
 */
public abstract class AbstractFrameFilter extends AbstractFilter {

    int[] frameBuffer;
    int[] frameTextures;
    private int frameWidth;
    private int frameHeight;

    public AbstractFrameFilter(Context context, int vertexShaderId, int fragmentShaderId) {
        super(context, vertexShaderId, fragmentShaderId);
    }

    public void createFrame(int width, int height) {
        releaseFrame();
        // 1. Create the FBO and the texture that backs it
        frameBuffer = new int[1];
        frameTextures = new int[1];
        GLES20.glGenFramebuffers(1, frameBuffer, 0);
        OpenGLUtils.glGenTextures(frameTextures); // project helper: generates the texture
        // 2. Allocate texture storage (no initial data) and attach it to the FBO
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, frameTextures[0]);
        GLES20.glTexImage2D(GLES20.GL_TEXTURE_2D, 0, GLES20.GL_RGBA, width, height, 0,
                GLES20.GL_RGBA, GLES20.GL_UNSIGNED_BYTE, null);
        GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, frameBuffer[0]);
        GLES20.glFramebufferTexture2D(GLES20.GL_FRAMEBUFFER, GLES20.GL_COLOR_ATTACHMENT0,
                GLES20.GL_TEXTURE_2D, frameTextures[0], 0);
        // 3. Unbind
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);
        GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, 0);
        frameWidth = width;
        frameHeight = height;
    }

    @Override
    public int onDraw(int texture, FilterChain filterChain) {
        FilterContext filterContext = filterChain.filterContext;
        // (Re)create the FBO only when it is missing or the size changed, not every frame
        if (frameBuffer == null || frameWidth != filterContext.width
                || frameHeight != filterContext.height) {
            createFrame(filterContext.width, filterContext.height);
        }
        GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, frameBuffer[0]); // render into the FBO
        super.onDraw(texture, filterChain);
        GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, 0); // back to the default framebuffer
        // The FBO's texture becomes the input of the next filter
        return filterChain.proceed(frameTextures[0]);
    }

    @Override
    public void release() {
        super.release();
        releaseFrame();
    }

    private void releaseFrame() {
        if (frameTextures != null) {
            GLES20.glDeleteTextures(1, frameTextures, 0);
            frameTextures = null;
        }
        if (frameBuffer != null) {
            GLES20.glDeleteFramebuffers(1, frameBuffer, 0);
            frameBuffer = null; // avoid deleting a reused FBO name later
        }
    }
}
```

Core onDraw

The core of this design is AbstractFrameFilter.onDraw. It binds the filter's FBO and calls super.onDraw, so the parent class renders the input texture into the FBO's texture. It then switches back to the default framebuffer and passes the FBO's texture to the next filter through filterChain.proceed.

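There are many filters, each of which can be seen as a separate processing stage. They all extend the superclass AbstractFrameFilter and implement onDraw by calling super.onDraw: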

CameraFilter,BigEyeFilter,ScreenFilter,RecordFilter

Reference project