1. High availability for a site with Nginx + Keepalived

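Linux cluster types

LB (load balancing): nginx, varnish (director module), haproxy, lvs

HA (high availability): keepalived, heartbeat. A standby device is provisioned redundantly for each active device; when the active device fails, the standby takes over its work automatically.

HP (high performance):

keepalived moves an IP address between nodes mainly through VRRP (Virtual Router Redundancy Protocol) and combines this with hook scripts to provide high availability.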
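As a rough illustration of how VRRP decides which node holds the virtual IP, the sketch below picks the node with the highest priority among those currently alive. The function name `elect_master` and the `name:priority` argument format are assumptions made for this example; real VRRP also breaks priority ties by comparing the senders' IP addresses, which is left out here.

```shell
#!/bin/bash
# Sketch only: choose the VRRP master among live nodes by highest priority.
# elect_master and the "name:priority" format are illustrative, not keepalived APIs.
elect_master() {
    local best_name="" best_prio=-1 entry name prio
    for entry in "$@"; do
        name=${entry%%:*}      # text before the first colon
        prio=${entry##*:}      # text after the last colon
        if (( prio > best_prio )); then
            best_prio=$prio
            best_name=$name
        fi
    done
    echo "$best_name"
}

# Both nodes alive: the higher priority wins.
elect_master node1:100 node2:99
# node1 is down, so only node2 advertises and it takes over the VIP.
elect_master node2:99
```

Only nodes that are still sending advertisements take part, which is why dropping a node from the argument list models a failure.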

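Setting up keepalived

Prepare two machines:

192.168.1.198

192.168.1.196

Synchronize the clock on both machines: ntpdate ntp1.aliyun.com

Turn off the firewall, or change the firewall rules so that keepalived's VRRP packets are allowed through.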
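If the firewall stays on, rules along the following lines are one way to admit VRRP advertisements. This is a sketch under assumptions: `print_vrrp_rules` is a hypothetical helper that only prints the commands, so they can be reviewed before being run as root, and the right variant depends on whether the host uses firewalld or plain iptables.

```shell
#!/bin/bash
# Sketch only: print (not run) firewall commands that admit VRRP advertisements.
# print_vrrp_rules is a hypothetical helper; review the output before executing it as root.
print_vrrp_rules() {
    local backend=$1
    case $backend in
        firewalld)
            echo "firewall-cmd --permanent --add-rich-rule='rule protocol value=\"vrrp\" accept'"
            echo "firewall-cmd --reload"
            ;;
        iptables)
            # VRRP is IP protocol number 112; "vrrp" is its name in /etc/protocols.
            echo "iptables -I INPUT -p vrrp -j ACCEPT"
            ;;
        *)
            echo "Usage: print_vrrp_rules {firewalld|iptables}" >&2
            return 1
            ;;
    esac
}

print_vrrp_rules firewalld
print_vrrp_rules iptables
```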

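keepalived is included in the base repository and can be installed directly.

yum install keepalived #install keepalived on both nodes

The keepalived configuration file has three main sections:

GLOBAL CONFIGURATION #global settings

VRRPD CONFIGURATION #VRRP virtual router settings

LVS CONFIGURATION #LVS-related settings

Simple configuration example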

! Configuration File for keepalived

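global_defs { # global settings

notification_email { # notification recipients

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1 # mail server address

smtp_connect_timeout 30 # connection timeout in seconds

router_id node1.com # identifier of this host

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.0.1 # multicast group used for advertisements (the VRRP default is 224.0.0.18)

vrrp_iptables

}

vrrp_instance VI_1 { # one instance, i.e. one virtual router

state MASTER # this node starts as the master

interface ens33 # physical interface the instance is bound to

virtual_router_id 51 # virtual router id, identical on all nodes of this instance

priority 100 # priority

advert_int 1

authentication { # authentication settings

auth_type PASS # authentication type

auth_pass 1111 # password

}

virtual_ipaddress { # virtual router IP address, interface and label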

192.168.1.254/24 brd 192.168.1.255 dev ens33 label ens33:1

}

}

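After configuring, copy this file to the standby machine, change state MASTER to state BACKUP there, and lower its priority. Then start the service on both nodes for the configuration to take effect.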
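The copy-and-adjust step can be scripted. The filter below is a minimal sketch, assuming a configuration with a single vrrp_instance; `make_backup_conf` is a hypothetical helper name and the priority passed to it is whatever value you choose for the standby.

```shell
#!/bin/bash
# Sketch only: turn a MASTER keepalived.conf (on stdin) into the BACKUP variant.
# make_backup_conf and its argument are illustrative; it handles a single vrrp_instance.
make_backup_conf() {
    local backup_priority=$1
    sed -E \
        -e 's/^([[:space:]]*state[[:space:]]+)MASTER/\1BACKUP/' \
        -e "s/^([[:space:]]*priority[[:space:]]+)[0-9]+/\1${backup_priority}/"
}

# Example: feed the two relevant lines through the filter.
printf 'state MASTER\npriority 100\n' | make_backup_conf 99
```

On a real node this would be used as `make_backup_conf 99 < keepalived.conf > keepalived.conf.backup` before copying the result over; router_id would likely need adjusting by hand as well.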

keepalived notification mechanism

Notifications are implemented by having the notify directives call a script:

# notify scripts, alert as above

notify_master

notify_backup

notify_fault

notify_stop

notify

How to use a notification script:

Example notification script:

#!/bin/bash

#

contact='root@localhost'

notify() {

local mailsubject="$(hostname) to be $1, vip floating"

local mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" $contact

}

case $1 in

master)

notify master

;;

backup)

notify backup

;;

fault)

notify fault

;;

*)

echo "Usage: $(basename $0) {master|backup|fault}"

exit 1

;;

esac

Calling the script:

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

Example of a highly available ipvs cluster:

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node1

vrrp_mcast_group4 224.0.100.19

}

vrrp_instance VI_1 {

state MASTER

interface eno16777736

virtual_router_id 14

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 571f97b2

}

virtual_ipaddress {

10.1.0.93/16 dev eno16777736

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

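virtual_server 10.1.0.93 80 { # virtual service, addressed by the VIP

delay_loop 3 # health-check the back-end real servers every 3 seconds

lb_algo rr # scheduling algorithm

lb_kind DR # LVS forwarding type

protocol TCP

sorry_server 127.0.0.1 80 # answers with a sorry page when no real server is available

real_server 10.1.0.69 80 { # back-end real server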

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 10.1.0.71 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

}

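Lab walkthrough

Prepare the machines

ipvs and keepalived are deployed on two machines, 192.168.1.196 and 192.168.1.198. The back-end real servers are two nginx hosts, 192.168.1.201 and 192.168.1.202.

Deploy nginx on the front-end machines as well; it serves the sorry page when the back-end machines are down.

Set the kernel parameters on the back-end real servers. Because the DR type is used, a script adjusts the ARP parameters and adds the VIP to the loopback interface.

Run the following on both real servers: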

#!/bin/bash

vip=192.168.1.254 # the virtual router's IP address (VIP)

interface="lo:0"

case $1 in

start)

# arp_ignore=1: answer ARP requests only for addresses configured on the receiving interface

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

# arp_announce=2: always use the best local source address in ARP announcements

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig $interface $vip netmask 255.255.255.255 broadcast $vip up

route add -host $vip dev $interface

;;

stop)

ifconfig $interface down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

*)

echo "Usage: $(basename $0) {start|stop}"

exit 1

;;

esac

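Edit the configuration file and add a virtual_server block containing two real_server entries for the back ends; keepalived then generates the ipvs rules automatically (they can be inspected with ipvsadm -Ln).

Stop one real server: after a few seconds of failed health checks, all requests are scheduled to the remaining real server.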
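The behaviour described above can be pictured with a small simulation of round-robin scheduling over the servers that still pass their health check. This is a sketch, not how ipvs is implemented: `schedule_rr` is a hypothetical helper, and the `ip:state` pairs stand in for the result of the HTTP_GET checker.

```shell
#!/bin/bash
# Sketch only: simulate round-robin (lb_algo rr) over the real servers that pass health checks.
# schedule_rr is a hypothetical helper; arguments are the request count, then "ip:state" pairs.
schedule_rr() {
    local requests=$1; shift
    local healthy=() entry i
    for entry in "$@"; do
        # Keep only servers whose state is "up", as the HTTP_GET checker would.
        if [[ ${entry##*:} == up ]]; then
            healthy+=("${entry%:*}")
        fi
    done
    if (( ${#healthy[@]} == 0 )); then
        healthy=("sorry_server")     # no real server left, fall back to the sorry page
    fi
    for (( i = 0; i < requests; i++ )); do
        echo "${healthy[i % ${#healthy[@]}]}"
    done
}

# Both real servers healthy: requests alternate.
schedule_rr 4 192.168.1.201:up 192.168.1.202:up
# 192.168.1.202 stopped: everything goes to 192.168.1.201.
schedule_rr 3 192.168.1.201:up 192.168.1.202:down
```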

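keepalived can call external helper scripts to monitor resources and adjust the node's priority dynamically according to the result.

This takes two steps: (1) define a script; (2) reference the script from the vrrp instance.

vrrp_script SCRIPT_NAME {

script "COMMAND_OR_SCRIPT_PATH"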

interval INT

weight -INT

rise 2

fall 3

}

track_script {

SCRIPT_NAME_1

SCRIPT_NAME_2

...

}

Note:

vrrp_script chk_down {

script "/bin/bash -c '[[ -f /etc/keepalived/down ]]' && exit 1 || exit 0"

interval 1

weight -10

}

[[ -f /etc/keepalived/down ]] must be run explicitly as an argument to bash, because [[ is a bash keyword rather than a standalone command.
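To see what the weight setting does to the election, the sketch below computes the priority a node would advertise after a tracked script has run. `effective_priority` is an illustrative helper, not part of keepalived; it models the documented rule that a negative weight is applied while the check fails and a positive weight while it succeeds.

```shell
#!/bin/bash
# Sketch only: how a tracked script's weight changes the advertised priority.
# effective_priority is illustrative; status 0 means the check passed, non-zero means it failed.
effective_priority() {
    local base=$1 weight=$2 status=$3
    if (( weight < 0 && status != 0 )); then
        echo $(( base + weight ))     # negative weight: subtract while the check fails
    elif (( weight > 0 && status == 0 )); then
        echo $(( base + weight ))     # positive weight: add while the check passes
    else
        echo "$base"
    fi
}

# chk_down with weight -10 on a node whose priority is 100:
effective_priority 100 -10 0   # /etc/keepalived/down absent, check passes
effective_priority 100 -10 1   # file present, check fails
```

For the failover to happen, the reduced value has to fall below the priority of the other node, so the weight should be chosen larger than the gap between the two priorities.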

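Example: a highly available nginx service

Edit the keepalived configuration and add an external script that checks the nginx service. When the check fails, the node's priority is reduced so that the VIP can move to the other node. Restarting nginx automatically needs extra logic in the check or notify script.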

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node1

vrrp_mcast_group4 224.0.100.19

}

vrrp_script chk_nginx {

script "killall -0 nginx && exit 0 || exit 1"

interval 1

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface eno16777736

virtual_router_id 14

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 571f97b2

}

virtual_ipaddress {

10.1.0.93/16 dev eno16777736

}

track_script {

chk_down

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}
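The configuration above only detects that nginx is gone. One way to add an automatic restart is to let the check script try a restart before it reports failure. The following is a sketch under assumptions: `check_or_restart` is a hypothetical helper, and the check and restart commands are passed in as arguments so the logic can be tried on a machine without nginx.

```shell
#!/bin/bash
# Sketch only: a health check that tries one restart before reporting failure.
# check_or_restart is hypothetical; the check and restart commands are passed in
# so the logic can be tried without nginx installed.
check_or_restart() {
    local check_cmd=$1 restart_cmd=$2
    if $check_cmd; then
        return 0                  # service is running
    fi
    $restart_cmd || true          # attempt a restart, ignore its own exit status
    sleep 1                       # give the service a moment to come up
    $check_cmd                    # final verdict is the second check
}

# Assumed usage from keepalived (path and unit name may differ on your system):
#   script "/etc/keepalived/check_nginx.sh"
# with the script body calling:
#   check_or_restart "killall -0 nginx" "systemctl restart nginx"
```

If the restart succeeds the script exits 0 and the priority is left alone; only a failed restart lets the weight take effect.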

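2. Implementing the keepalived master-master model

Dual-master model

Two virtual router instances are configured. Each host is the master of one instance and the backup of the other.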

! Configuration File for keepalived

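global_defs { # global settings

notification_email { # notification recipients

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1 # mail server address

smtp_connect_timeout 30 # connection timeout in seconds

router_id node1.com # identifier of this host

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.0.1 # multicast group used for advertisements (the VRRP default is 224.0.0.18)

vrrp_iptables

}

vrrp_instance VI_1 { # first instance, i.e. first virtual router

state MASTER # this node starts as the master

interface ens33 # physical interface the instance is bound to

virtual_router_id 51 # virtual router id

priority 100 # priority

advert_int 1

authentication { # authentication settings

auth_type PASS # authentication type

auth_pass 1111 # password

}

virtual_ipaddress { # virtual router IP address, interface and label

192.168.1.254/24 brd 192.168.1.255 dev ens33 label ens33:1

}

}

vrrp_instance VI_2 { # second virtual router

state BACKUP # this host is the backup for this router

interface ens33 # interface name

virtual_router_id 55 # must differ from the id of the first instance

priority 98 # as the backup, it must be lower than the master's priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress { # a second address, for which this host is the backup

192.168.1.253/24 brd 192.168.1.255 dev ens33 label ens33:3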

}

}

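After defining this on the first node, copy it to the other node and configure that node as the master of the second virtual router and the backup of the first.

The configuration is now complete.

Start the service with systemctl start keepalived. Here the second machine is started first.

Once started, the second machine holds both addresses and sends two advertisements: one for virtual router id 55 with priority 100 (it is the master of the second virtual router) and one for id 51 with priority 99 (the first virtual router, for which it is the backup).

Now start the first machine: systemctl start keepalived

Once it is up, it preempts the IP address of the virtual router for which it has the higher priority and becomes that router's master.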
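The start-up sequence just described can be reproduced with a small model. The sketch below assumes the priorities mentioned in this section (VI_1: 100 on the first node, 99 on the second; VI_2: 98 on the first node, 100 on the second); the node names and the `vip_owner` helper are illustrative only.

```shell
#!/bin/bash
# Sketch only: which node holds each VIP in the dual-master layout, given the live nodes.
# Priorities mirror the example (VI_1: node1=100, node2=99; VI_2: node1=98, node2=100);
# the node names and the vip_owner helper are illustrative.
vip_owner() {
    local instance=$1; shift
    local node prio best="" best_prio=-1
    for node in "$@"; do
        case "$instance:$node" in
            VI_1:node1) prio=100 ;;
            VI_1:node2) prio=99 ;;
            VI_2:node1) prio=98 ;;
            VI_2:node2) prio=100 ;;
            *) continue ;;
        esac
        if (( prio > best_prio )); then best=$node; best_prio=$prio; fi
    done
    echo "$best"
}

for alive in "node2" "node1 node2"; do
    # $alive is intentionally unquoted so it splits into separate node names.
    echo "alive=[$alive] 192.168.1.254 -> $(vip_owner VI_1 $alive), 192.168.1.253 -> $(vip_owner VI_2 $alive)"
done
```

With only the second node running it owns both addresses; once the first node joins, it takes 192.168.1.254 back while 192.168.1.253 stays where it is.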

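3. High availability for a site with Haproxy + Keepalived

Create the haproxy check script

Make it executable with chmod +x check_haproxy.sh. The script contents are:

#!/bin/bash

# Check whether a haproxy process exists; if not, stop keepalived so the VIP fails over

if ! killall -0 haproxy; then

/etc/init.d/keepalived stop

fi

keepalived.conf on the haproxy+keepalived MASTER node: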

! Configuration File for keepalived

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_haproxy {

script "/data/sh/check_haproxy.sh"

interval 2

weight 2

}

# VIP1

vrrp_instance VI_1 {

state MASTER

interface eth0

lvs_sync_daemon_inteface eth0

virtual_router_id 151

priority 100

advert_int 5

nopreempt # only honored when the initial state is BACKUP

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.0.133

}

track_script {

chk_haproxy

}

}

Create the same check_haproxy.sh script on the backup node and make it executable with chmod +x check_haproxy.sh.

keepalived.conf on the haproxy+keepalived BACKUP node:

! Configuration File for keepalived

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_haproxy {

script "/data/sh/check_haproxy.sh"

interval 2

weight 2

}

# VIP1

vrrp_instance VI_1 {

state BACKUP

interface eth0

lvs_sync_daemon_inteface eth0

virtual_router_id 151

priority 90

advert_int 5

nopreempt

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.0.133

}

track_script {

chk_haproxy

}

}

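4. Set up a Tomcat server and access it through an nginx reverse proxy

Software architecture patterns:

Layered architecture: presentation layer, business layer, persistence layer, database layer

Event-driven architecture: a distributed, asynchronous architecture

Microkernel architecture, also known as plug-in architecture

Microservices architecture

jdk: the Java Development Kit

servlet: the Java class library for developing server-side web applications

Install the JDK; OpenJDK is used here.

yum install java-1.8.0-openjdk-devel #installing the devel package resolves the other dependencies automatically

wget http://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-8/v8.5.45/bin/apache-tomcat-8.5.45.tar.gz #download the Tomcat binary distribution

tar xf apache-tomcat-8.5.45.tar.gz -C /usr/local/ #extract into /usr/local

cd /usr/local

ln -s apache-tomcat-8.5.45 tomcat #symlink to the extracted directory, so later upgrades only repoint the link

cd tomcat

useradd tomcat #add a user and change group ownership; Tomcat should run as this unprivileged user, so file permissions must be adjusted

chown -R :tomcat .

chmod g+r conf/*

chmod g+rx conf/

chown -R tomcat logs/ temp/ work/

vim /etc/profile.d/cols.sh #set the shell prompt and add Tomcat's bin directory to PATH

PS1='[\e[32;40m\u@\h \W\e[m]$ '

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/usr/local/tomcat/bin

catalina.sh start #start Tomcat

Port 8080 serves HTTP requests, 8009 is the AJP connector, and 8005 accepts the shutdown command.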
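After starting Tomcat it is worth confirming that the expected ports are actually listening. The helper below is a sketch: `listening_ports` is a hypothetical function that reads `ss -tnl` output on stdin and prints which of the requested ports appear in it, which keeps the parsing logic testable on any machine.

```shell
#!/bin/bash
# Sketch only: report which of Tomcat's default ports appear in `ss -tnl` output.
# listening_ports is a hypothetical helper that reads the listing from stdin.
listening_ports() {
    local wanted port
    wanted=" $* "
    # Column 4 of `ss -tnl` is Local Address:Port; keep the part after the last colon.
    awk 'NR > 1 { n = split($4, a, ":"); print a[n] }' | sort -un | while read -r port; do
        case $wanted in
            *" $port "*) echo "$port" ;;
        esac
    done
}

# Assumed usage on the Tomcat host:
#   ss -tnl | listening_ports 8005 8009 8080
```

A port missing from the output usually means the corresponding connector is disabled in server.xml or Tomcat has not finished starting.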

Key Tomcat components

Tomcat's core components, as laid out in server.xml:

...

...

...

...

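Each component is implemented by a Java class. The components fall roughly into the following types:

Top-level component: Server

Service component: Service

Connector components: http, https, ajp (Apache JServ Protocol)

Containers: Engine, Host, Context

Nested components: valve, logger, realm, loader, manager, ...

Cluster components: listener, cluster, ...

Operations related to deploying a webapp:

deploy: place the webapp's source files in the target directory (where the web application files are stored), configure Tomcat so the webapp can be reached at the path defined in web.xml and context.xml, and load its own classes and the classes it depends on into the JVM through the class loader;

There are two ways to deploy:

Automatic deployment: auto deploy

Manual deployment:

Cold deployment: copy the webapp to the target location, then start Tomcat;

Hot deployment: deploy without stopping Tomcat;

Deployment tools: manager, ant scripts, tcd (tomcat client deployer), etc.;

undeploy: stop the webapp and remove it from the Tomcat instance;

start: start a webapp that is currently stopped;

stop: stop the webapp so that it no longer serves users; its classes remain loaded in the JVM;

redeploy: deploy again;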

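Directory layout of a JSP webapp:

/: root directory of the webapp

index.jsp, index.html: home page;

WEB-INF/: private resources of the webapp; normally holds its web.xml;

META-INF/: similar to WEB-INF/; normally holds the webapp's context.xml;

classes/: class files provided by this webapp (the servlet specification places this directory under WEB-INF/);

lib/: classes provided by this webapp, packaged as jar files (also under WEB-INF/);

Tomcat's configuration files:

server.xml: the main configuration file;

web.xml: a webapp can be accessed only after it has been deployed, and how it is deployed is normally defined by its own web.xml in the WEB-INF/ directory. The copy in Tomcat's conf/ directory provides default deployment settings for all webapps;

context.xml: each webapp may have its own context.xml, stored in its META-INF/ directory. The copy in Tomcat's conf/ directory provides default settings for all webapps;

tomcat-users.xml: accounts and passwords used for user authentication;

catalina.policy: the security policy applied when Tomcat is started with the -security option;

catalina.properties: Java property definitions, used to set class loader paths and some JVM tuning parameters;

logging.properties: configuration of the logging system; log4j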

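Manually provide a test application and cold-deploy it (example):

# mkdir -pv /usr/local/tomcat/webapps/myapp/{classes,lib,WEB-INF}

Create the file /usr/local/tomcat/webapps/myapp/index.jsp

<%@ page language="java" %>

<%@ page import="java.util.*" %>

<% out.println("hello world");

%>

#Place index.jsp in the myapp directory; it is deployed automatically

The work directory holds the Java source code generated from the JSP pages.

Logging in to the Tomcat management GUI

By default, Tomcat's management pages prompt for a username and password. Enable an account in tomcat-users.xml and assign it the corresponding roles.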

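Configuration of commonly used Tomcat components:

Server: represents a Tomcat instance, that is, one Java process. It listens on port 8005 and accepts only the "SHUTDOWN" command. Each Server must listen on a distinct port, so when several instances run on the same physical host the port has to be changed for each of them;

Service: associates one or more Connector components with one Engine component;

Connector component: an endpoint

It receives requests; the three common types are http, https and ajp;

Requests entering Tomcat fall into two categories:

(1) standalone: the request comes directly from a client browser;

(2) reverse-proxied by another web server: the request comes from a front-end reverse proxy;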

nginx --> http connector --> tomcat

httpd(proxy_http_module) --> http connector --> tomcat

httpd(proxy_ajp_module) --> ajp connector --> tomcat

httpd(mod_jk) --> ajp connector --> tomcat

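Attributes:

port="8080"

protocol="HTTP/1.1"

connectionTimeout="20000" #in milliseconds

address: IP address to listen on; defaults to all available local addresses;

maxThreads: maximum number of request-processing threads, i.e. concurrent requests; default 200;

enableLookups: whether DNS lookups are enabled;

acceptCount: maximum length of the wait queue;

secure:

sslProtocol:

Engine component: the servlet engine (a Servlet instance). One or more Host components inside it define the sites; the defaultHost attribute normally names the default virtual host;

Attributes: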

name=

defaultHost="localhost"

jvmRoute=

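Host component: a host or virtual host inside the Engine that receives requests and processes them. Example:

<Host name="localhost" appBase="webapps"

unpackWARs="true" autoDeploy="true">

</Host>

(WAR stands for Webapp ARchive.)

Common attributes:

(1) appBase: the default directory for this Host's webapps, that is, the directory holding unpacked web applications or WAR archives; it may be given as a path relative to $CATALINA_BASE;

(2) autoDeploy: whether a webapp placed in the appBase directory while Tomcat is running is deployed automatically;

Example: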

# mkdir -pv /appdata/webapps

# mkdir -pv /appdata/webapps/ROOT/{lib,classes,WEB-INF}

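Then provide a test page.

Context component:

Example:

<Context path="/PATH" docBase="/PATH/TO/SOMEDIR" reloadable="true"/> #URL path, local file path, whether reloading is supported

Valve component: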

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

#Official documentation for the access log valve: https://tomcat.apache.org/tomcat-7.0-doc/api/org/apache/catalina/valves/AccessLogValve.html

Valves come in several types:

Access logging: org.apache.catalina.valves.AccessLogValve

Access control: org.apache.catalina.valves.RemoteAddrValve

Reverse proxying with nginx

Client (http) --> nginx (reverse proxy)(http) --> tomcat (http connector)

#reverse proxy on the local machine

location / {

proxy_pass http://tc1.magedu.com:8080;

}

location ~* \.(jsp|do)$ {

proxy_pass http://tc1.magedu.com:8080;

}

Because images and JSP pages are not under the same path, a proxy configuration that has no location matching the image paths cannot load the images.

LAMT: Linux Apache(httpd) MySQL Tomcat

httpd proxy modules:

proxy_module

proxy_http_module: adapter for the http protocol;

proxy_ajp_module: adapter for the ajp protocol;

Client (http) --> httpd (proxy_http_module)(http) --> tomcat (http connector)

Client (http) --> httpd (proxy_ajp_module)(ajp) --> tomcat (ajp connector)

Client (http) --> httpd (mod_jk)(ajp) --> tomcat (ajp connector)

proxy_http_module proxy configuration example:

<VirtualHost *:80>

ServerName tc1.magedu.com

ProxyRequests Off

ProxyVia On

ProxyPreserveHost On

<Proxy *>

Require all granted

</Proxy>

ProxyPass / http://tc1.magedu.com:8080/

ProxyPassReverse / http://tc1.magedu.com:8080/

<Location />

Require all granted

</Location>

</VirtualHost>

ProxyPass / http://tc1.magedu.com:8080/

proxy_ajp_module proxy configuration example:

<VirtualHost *:80>

ServerName tc1.magedu.com

ProxyRequests Off

ProxyVia On

ProxyPreserveHost On

<Proxy *>

Require all granted

</Proxy>

ProxyPass / ajp://tc1.magedu.com:8009/

ProxyPassReverse / ajp://tc1.magedu.com:8009/

<Location />

Require all granted

</Location>

</VirtualHost>

Load balancing Tomcat

docker pull tomcat:8.5-slim #pull the Tomcat image to use as the back-end servers

docker run --name tc1 --hostname tc1.com -d -v /data/tc1:/usr/local/tomcat/webapps/myapp tomcat:8.5-slim

docker run --name tc2 --hostname tc2.com -d -v /data/tc2:/usr/local/tomcat/webapps/myapp tomcat:8.5-slim

#start the containers with a bind-mounted volume and an explicit hostname

[root@centos7 tc1]$ mkdir -p lib classes WEB-INF #create the directories and index.jsp; the index file must be created for both back ends

[root@centos7 tc1]$ vim index.jsp

<%@ page language="java" %>

<html>

<body>

<h1>TomcatA.magedu.com</h1>

<table border="1">

<tr><td>Session ID</td><td><%= session.getId() %></td></tr>

<tr><td>Created on</td><td><%= session.getCreationTime() %></td></tr>

</table>

</body>

</html>

Edit the nginx configuration to define the upstream server group and the reverse-proxy location:

upstream tcsrvs {

server 172.17.0.2:8080;

server 172.17.0.3:8080;

}

location /myapp/ {

proxy_pass http://tcsrvs/myapp/;

}
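To check that nginx really spreads requests over both back ends, the responses can be tallied by the host name shown on the test page. The snippet is a sketch: `tally_backends` is a hypothetical helper that counts identical lines on stdin, and the curl loop in the comment assumes the test page prints TomcatA or TomcatB.

```shell
#!/bin/bash
# Sketch only: count how many responses came from each back end.
# tally_backends is hypothetical; it reads one page title (e.g. TomcatA) per line on stdin.
tally_backends() {
    sort | uniq -c | awk '{ print $2 "=" $1 }'
}

# Assumed usage against the proxy (URL and grep pattern depend on your test page):
#   for i in $(seq 10); do curl -s http://127.0.0.1/myapp/ | grep -o -m1 'Tomcat[AB]'; done | tally_backends
printf 'TomcatA\nTomcatB\nTomcatA\nTomcatB\n' | tally_backends
```

With the default round-robin upstream the two counts should come out roughly equal.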

Implementing session stickiness in httpd:

Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

<Proxy balancer://tcsrvs>

BalancerMember http://172.18.100.67:8080 route=TomcatA loadfactor=1

BalancerMember http://172.18.100.68:8080 route=TomcatB loadfactor=2

ProxySet lbmethod=byrequests

ProxySet stickysession=ROUTEID

</Proxy>

<VirtualHost *:80>

ServerName lb.magedu.com

ProxyVia On

ProxyRequests Off

ProxyPreserveHost On

<Proxy *>

Require all granted

</Proxy>

ProxyPass / balancer://tcsrvs/

ProxyPassReverse / balancer://tcsrvs/

<Location />

Require all granted

</Location>

</VirtualHost>
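The ROUTEID cookie set by the Header directive carries the route name after a dot, and the balancer uses that name to send later requests to the same BalancerMember. The sketch below mimics that lookup for a raw Cookie header; `route_from_cookie` is an illustrative helper, not something httpd provides.

```shell
#!/bin/bash
# Sketch only: extract the route that mod_proxy_balancer would read from a Cookie header.
# route_from_cookie is illustrative; the cookie name ROUTEID matches stickysession=ROUTEID.
route_from_cookie() {
    local cookies=$1 pair value
    local IFS=';'
    for pair in $cookies; do
        pair=${pair# }                       # drop the space that follows each ';'
        if [[ $pair == ROUTEID=* ]]; then
            value=${pair#ROUTEID=}
            echo "${value##*.}"              # the route is the text after the last '.'
            return 0
        fi
    done
    return 1                                 # no sticky cookie: balancer picks by lbmethod
}

route_from_cookie "JSESSIONID=ABC123; ROUTEID=.TomcatA"
```

A request without the cookie falls through to the normal lbmethod, and the response then sets ROUTEID for the member that was chosen.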

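Enable the management interface:

<Location /balancer-manager>

SetHandler balancer-manager

ProxyPass !

Require all granted

</Location>

Sample pages:

To demonstrate the effect, provide the following page in some context (for example /test) on TomcatA:

<%@ page language="java" %>

<html>

<body>

<h1>TomcatA.magedu.com</h1>

<table border="1">

<tr><td>Session ID</td><td><%= session.getId() %></td></tr>

<tr><td>Created on</td><td><%= session.getCreationTime() %></td></tr>

</table>

</body>

</html>

To demonstrate the effect, provide the following page in some context (for example /test) on TomcatB:

<%@ page language="java" %>

<html>

<body>

<h1>TomcatB.magedu.com</h1>

<table border="1">

<tr><td>Session ID</td><td><%= session.getId() %></td></tr>

<tr><td>Created on</td><td><%= session.getCreationTime() %></td></tr>

</table>

</body>

</html>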

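The second way:

<Proxy balancer://tcsrvs>

BalancerMember ajp://172.18.100.67:8009

BalancerMember ajp://172.18.100.68:8009

ProxySet lbmethod=byrequests

</Proxy>

<VirtualHost *:80>

ServerName lb.magedu.com

ProxyVia On

ProxyRequests Off

ProxyPreserveHost On

<Proxy *>

Require all granted

</Proxy>

ProxyPass / balancer://tcsrvs/

ProxyPassReverse / balancer://tcsrvs/

<Location />

Require all granted

</Location>

<Location /balancer-manager>

SetHandler balancer-manager

ProxyPass !

Require all granted

</Location>

</VirtualHost>

Session persistence is configured in the same way as in the first approach.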

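Tomcat Session Replication Cluster:

(1) Enable clustering by placing the following configuration inside <Engine> or <Host>:

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster" channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager" expireSessionsOnShutdown="false" notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService" address="228.0.0.4" port="45564" frequency="500" dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver" address="auto" port="4000" autoBind="100" selectorTimeout="5000" maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve" filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer" tempDir="/tmp/war-temp/" deployDir="/tmp/war-deploy/" watchDir="/tmp/war-listen/" watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

Make sure the jvmRoute attribute of the Engine is set correctly.

(2) Configure the webapps

Edit WEB-INF/web.xml and add the <distributable/> element.

Note: the configuration example in the documentation shipped with Tomcat on CentOS 7 contains a syntax error;

When the bind address is auto, the local host name is resolved automatically and the resulting IP address is used;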

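5. Set up Tomcat with session sharing based on memcached

https://github.com/magro/memcached-session-manager/wiki/SetupAndConfiguration This is implemented with msm (memcached session manager), a Java extension library.

Set up the back-end Tomcat session cluster

Back-end Tomcat server addresses: 192.168.80.134 and 192.168.80.130

Front-end nginx scheduler address: 192.168.80.133,192.168.1.196

First download the required extension jars: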

wget https://repo1.maven.org/maven2/de/javakaffee/msm/memcached-session-manager/2.3.2/memcached-session-manager-2.3.2.jar

wget https://repo1.maven.org/maven2/de/javakaffee/msm/memcached-session-manager-tc7/2.3.2/memcached-session-manager-tc7-2.3.2.jar

wget https://repo1.maven.org/maven2/net/spy/spymemcached/2.12.3/spymemcached-2.12.3.jar

wget https://repo1.maven.org/maven2/de/javakaffee/msm/msm-kryo-serializer/2.3.2/msm-kryo-serializer-2.3.2.jar

wget https://repo1.maven.org/maven2/com/esotericsoftware/kryo/4.0.2/kryo-4.0.2.jar

wget https://repo1.maven.org/maven2/de/javakaffee/kryo-serializers/0.42/kryo-serializers-0.42.jar

wget https://repo1.maven.org/maven2/com/esotericsoftware/minlog/1.3.0/minlog-1.3.0.jar

wget https://repo1.maven.org/maven2/com/esotericsoftware/reflectasm/1.11.7/reflectasm-1.11.7.jar

wget https://repo1.maven.org/maven2/org/ow2/asm/asm/6.2/asm-6.2.jar

wget https://repo1.maven.org/maven2/org/objenesis/objenesis/2.6/objenesis-2.6.jar

mv /etc/tomcat/*.jar /usr/share/java/tomcat/ #move all downloaded jars (downloaded into /etc/tomcat here) into Tomcat's library directory /usr/share/java/tomcat/

vim /etc/tomcat/server.xml #edit the configuration: add a Context with an alias directory, and configure the memcached nodes in it so that sessions are shared

Do the same on both back-end machines, adjusting details such as the IP addresses.
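The msm manager used in this setup takes a list of memcached nodes (memcachedNodes) and a failoverNodes attribute naming nodes that should only be used when no other node is reachable. The sketch below models that preference; `pick_node` and the `id:state` argument format are assumptions made for illustration and do not reflect msm's internal API.

```shell
#!/bin/bash
# Sketch only: which memcached node msm would prefer for session backup.
# pick_node is illustrative. Arguments: the failover node id, then "id:state" pairs.
pick_node() {
    local failover=$1; shift
    local entry id state fallback=""
    for entry in "$@"; do
        id=${entry%%:*}; state=${entry##*:}
        [[ $state == up ]] || continue
        if [[ $id == "$failover" ]]; then
            fallback=$id             # usable, but only as a last resort
        else
            echo "$id"               # first healthy regular node wins
            return 0
        fi
    done
    [[ -n $fallback ]] && echo "$fallback"
}

# failoverNodes="m1": sessions go to m2 while it is reachable, otherwise to m1.
pick_node m1 m1:up m2:up
pick_node m1 m1:up m2:down
```

Each Tomcat typically names its own local memcached as the failover node, so that a host failure does not take the session and its backup down together.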

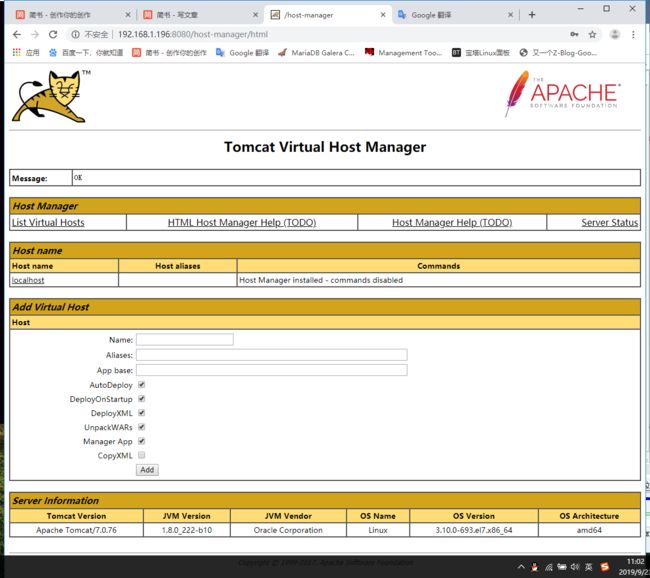

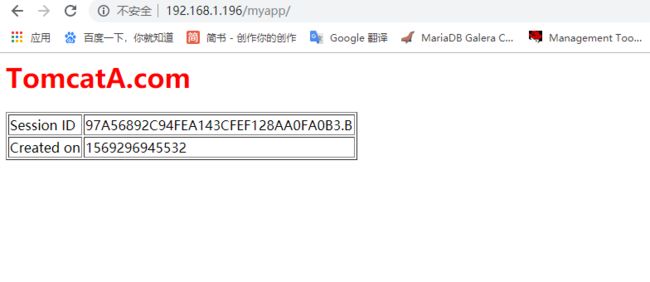

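<Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"

memcachedNodes="m1:192.168.80.134:11211,m2:192.168.80.130:11211"

failoverNodes="m1"

requestUriIgnorePattern=".*\.(ico|png|gif|jpg|css|js)$"

transcoderFactoryClass="de.javakaffee.web.msm.serializer.kryo.KryoTranscoderFactory" />

#start the memcached service

systemctl start memcached

Then start Tomcat.

6. Set up an Nginx + Tomcat service

Set up the back-end Tomcat session replication cluster

Back-end Tomcat server addresses: 192.168.80.132 and 192.168.80.130

Front-end nginx scheduler address: 192.168.80.133,192.168.1.196

Install the JDK and Tomcat packages

yum install java-1.8.0-openjdk-devel tomcat tomcat-webapps tomcat-admin-webapps tomcat-docs-webapp -y

Create the test page directory and the test page #do this on both back-end machines

mkdir /webapps/myapp/{lib,classes,WEB-INF} -pv

vim /webapps/myapp/index.jsp

<%@ page language="java" %>

<html>

<body>

<h1>TomcatA.com</h1>

<table border="1">

<tr><td>Session ID</td><td><%= session.getId() %></td></tr>

<tr><td>Created on</td><td><%= session.getCreationTime() %></td></tr>

</table>

</body>

</html>

#Edit the Tomcat configuration file

Add the cluster configuration recommended by the official documentation

https://tomcat.apache.org/tomcat-7.0-doc/cluster-howto.html

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster" channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager" expireSessionsOnShutdown="false" notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService" address="228.0.0.4" port="45564" frequency="500" dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver" address="192.168.80.132" port="4000" autoBind="100" selectorTimeout="5000" maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve" filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer" tempDir="/tmp/war-temp/" deployDir="/tmp/war-deploy/" watchDir="/tmp/war-listen/" watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

In the Host section, configure an alias (a Context) that points to the directory just created.

As the official documentation instructs, edit web.xml and add the <distributable/> element:

[root@centos7 tomcat]# cp web.xml /webapps/myapp/WEB-INF/

vim web.xml

Start the service

Edit the nginx configuration file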

upstream tcsrvs {

server 192.168.80.130:8080;

server 192.168.80.132:8080;

}

location / {

proxy_pass http://tcsrvs;

}