Fast-ReID: Training on Your Own Dataset, Tuning Notes (Part 2)

Fast-ReID Code Walkthrough

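Table of Contents

- Fast-ReID Code Walkthrough

- I. Getting the Code Running on an Open-Source Dataset

- II. Walkthrough of the Key Code

- 1. Reading the config YAML files

- 2. Reading the fastreid package

- Summary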

This continues from the previous part, Fast-ReID Preparation.

I. Getting the Code Running on an Open-Source Dataset

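I recommend keeping the code and the datasets in separate locations, which makes the datasets easier to manage (a dataset is a valuable resource in its own right). Use a symbolic link to expose the data under the directory the project expects:

The command (example):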

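    ln -s /home/username/Market-1501-v15.09.15 ./datasets/Market-1501-v15.09.15

This links the Market1501 dataset into the datasets directory that fastreid reads from by default, unless the FASTREID_DATASETS environment variable points elsewhere. A symbolic link is similar to a Windows shortcut: the link itself takes up almost no disk space.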
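The same link can be created from Python, which is handy inside a setup script. This is a minimal sketch: the helper name `link_dataset` and the paths passed to it are illustrative assumptions, not part of fastreid.

```python
import os
from pathlib import Path


def link_dataset(source: str, link: str) -> Path:
    """Create a symbolic link at `link` pointing to the dataset folder `source`.

    Hypothetical helper for illustration; it is not part of fastreid.
    """
    src = Path(source).resolve()
    dst = Path(link)
    if not src.is_dir():
        raise FileNotFoundError(f"dataset folder not found: {src}")
    dst.parent.mkdir(parents=True, exist_ok=True)  # create ./datasets if missing
    if dst.is_symlink():
        dst.unlink()  # replace a stale link instead of failing
    os.symlink(src, dst, target_is_directory=True)
    return dst
```

Calling `link_dataset("/home/username/Market-1501-v15.09.15", "./datasets/Market-1501-v15.09.15")` would mirror the `ln -s` command; a real directory already sitting at the link path is left untouched and raises an error.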

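Install the dependencies as described in the official INSTALL document.

Then follow the official GETTING_STARTED document.

Under normal circumstances you can now train on the Market1501 dataset, and reaching the accuracy reported in the paper is straightforward.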

II. Walkthrough of the Key Code

1. Reading the config YAML files

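The config YAML files spell out the hyperparameters for data processing, model architecture, and training method, so all training and tuning changes can be made there. Take AGW_R50-ibn.yml in the ./configs/Market1501 directory as an example:

The file (example):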

    _BASE_: "../Base-AGW.yml"  # parent config of AGW_R50-ibn

    MODEL:
      BACKBONE:
        WITH_IBN: True  # whether the model uses the IBN module

    DATASETS:
      NAMES: ("Market1501",)  # training dataset
      TESTS: ("Market1501",)  # test dataset

    OUTPUT_DIR: "logs/market1501/agw_R50-ibn"  # log output directory

Next, look at Base-AGW.yml, the parent config of AGW_R50-ibn (example):

    _BASE_: "Base-bagtricks.yml"  # parent config of Base-AGW

    MODEL:
      BACKBONE:
        WITH_NL: True  # whether the model uses the Non-local module

      HEADS:
        POOL_LAYER: "gempool"  # pooling layer used by the head

      LOSSES:
        NAME: ("CrossEntropyLoss", "TripletLoss")  # losses to use

        CE:
          EPSILON: 0.1  # CrossEntropyLoss hyperparameters
          SCALE: 1.0

        TRI:
          MARGIN: 0.0  # TripletLoss hyperparameters
          HARD_MINING: False
          SCALE: 1.0

Next, look at Base-bagtricks.yml, the parent config of Base-AGW (example):

    MODEL:
      META_ARCHITECTURE: "Baseline"

      BACKBONE:
        NAME: "build_resnet_backbone"
        NORM: "BN"  # normalization layer; for multi-GPU training set this to syncBN (synchronized BN across GPUs)
        DEPTH: "50x"
        LAST_STRIDE: 1
        FEAT_DIM: 2048  # output feature dimension
        WITH_IBN: True
        PRETRAIN: True
        PRETRAIN_PATH: "/media/zengwb/PC/baseline/ReID/resnet50_ibn_a-d9d0bb7b.pth"

      HEADS:
        NAME: "EmbeddingHead"
        NORM: "BN"  # normalization layer; for multi-GPU training set this to syncBN (synchronized BN across GPUs)
        WITH_BNNECK: True
        POOL_LAYER: "avgpool"
        NECK_FEAT: "before"
        CLS_LAYER: "linear"

      LOSSES:
        NAME: ("CrossEntropyLoss", "TripletLoss",)

        CE:
          EPSILON: 0.1
          SCALE: 1.

        TRI:
          MARGIN: 0.3
          HARD_MINING: True
          NORM_FEAT: False
          SCALE: 1.

    INPUT:
      SIZE_TRAIN: [256, 128]
      SIZE_TEST: [256, 128]
      REA:
        ENABLED: True
        PROB: 0.5
        MEAN: [123.675, 116.28, 103.53]
      DO_PAD: True

    DATALOADER:
      PK_SAMPLER: True
      NAIVE_WAY: True
      NUM_INSTANCE: 4
      NUM_WORKERS: 8

    SOLVER:
      OPT: "Adam"
      MAX_ITER: 120
      BASE_LR: 0.00035
      BIAS_LR_FACTOR: 2.
      WEIGHT_DECAY: 0.0005
      WEIGHT_DECAY_BIAS: 0.0005
      IMS_PER_BATCH: 64  # batch size

      SCHED: "WarmupMultiStepLR"
      STEPS: [40, 90]
      GAMMA: 0.1

      WARMUP_FACTOR: 0.01
      WARMUP_ITERS: 10

      CHECKPOINT_PERIOD: 60  # epoch

    TEST:
      EVAL_PERIOD: 30
      IMS_PER_BATCH: 128

    CUDNN_BENCHMARK: True
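To make the SOLVER values above concrete, here is a minimal sketch of what a warmup multi-step schedule computes from BASE_LR, STEPS, GAMMA, WARMUP_FACTOR, and WARMUP_ITERS. It assumes linear warmup and treats the step index as an epoch, matching the comment on CHECKPOINT_PERIOD; the function name `warmup_multistep_lr` is made up for this illustration, and fastreid's own scheduler may differ in details.

```python
from bisect import bisect_right


def warmup_multistep_lr(epoch, base_lr=0.00035, steps=(40, 90), gamma=0.1,
                        warmup_factor=0.01, warmup_iters=10):
    """Learning rate at `epoch` for a linear-warmup multi-step schedule."""
    if epoch < warmup_iters:
        # Ramp linearly from warmup_factor up to 1 during the warmup phase.
        alpha = epoch / warmup_iters
        scale = warmup_factor * (1 - alpha) + alpha
    else:
        scale = 1.0
    # Multiply by gamma once for every milestone already reached.
    return base_lr * scale * gamma ** bisect_right(steps, epoch)


for epoch in (0, 5, 10, 39, 40, 90):
    print(epoch, warmup_multistep_lr(epoch))
```

Under these assumptions the rate starts at 1% of BASE_LR, reaches the full 0.00035 at step 10, and is divided by 10 at steps 40 and 90.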

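As you can see, the full configuration is assembled from three config files, Base-bagtricks, Base-AGW, and AGW_R50-ibn, each level refining the one before it. Read together with ./fastreid/config/defaults.py, which holds the default value of every option, the structure of the project's configuration is easy to understand.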
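The layering can be sketched with plain dictionaries: each child file overrides only the keys it names and inherits everything else from its parent. This is an illustration under simplifying assumptions, not fastreid's loader (which also merges onto the defaults); `merge_config` is a hypothetical helper, and the dictionaries are abridged from the three files shown above.

```python
import copy


def merge_config(base: dict, override: dict) -> dict:
    """Return a copy of `base` with `override` applied recursively (child wins)."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Abridged values taken from the three config files.
bagtricks = {"MODEL": {"BACKBONE": {"WITH_IBN": True, "DEPTH": "50x"},
                       "HEADS": {"POOL_LAYER": "avgpool"},
                       "LOSSES": {"TRI": {"MARGIN": 0.3, "HARD_MINING": True}}}}
agw = {"MODEL": {"BACKBONE": {"WITH_NL": True},
                 "HEADS": {"POOL_LAYER": "gempool"},
                 "LOSSES": {"TRI": {"MARGIN": 0.0, "HARD_MINING": False}}}}
agw_r50_ibn = {"MODEL": {"BACKBONE": {"WITH_IBN": True}},
               "OUTPUT_DIR": "logs/market1501/agw_R50-ibn"}

# Apply the files from the most general to the most specific.
cfg = merge_config(merge_config(bagtricks, agw), agw_r50_ibn)
print(cfg["MODEL"]["HEADS"]["POOL_LAYER"])  # "gempool": Base-AGW overrides "avgpool"
print(cfg["MODEL"]["BACKBONE"]["DEPTH"])    # "50x": inherited from Base-bagtricks
```

The practical consequence for tuning is that a value set in a child file always wins, so an experiment-specific file only needs to list what differs from its parent.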

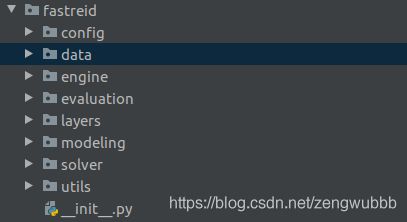

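2. Reading the fastreid package

The fastreid package contains data processing (data), the model structure (modeling), and the loss functions.

First, look at ./engine/defaults.py, which defines the functions that build the model, the optimizer, and the learning-rate schedule, and that run training and evaluation.

Outline of ./engine/defaults.py (signatures only, bodies omitted):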

    def default_argument_parser(): ...  # command-line argument parsing

    class DefaultTrainer(SimpleTrainer):  # default training setup
        def build_hooks(self): ...
        def train(self): ...
        @classmethod
        def build_model(cls, cfg): ...
        @classmethod
        def build_optimizer(cls, cfg, model): ...
        @classmethod
        def build_lr_scheduler(cls, cfg, optimizer): ...
        @classmethod
        def build_train_loader(cls, cfg): ...
        @classmethod
        def build_test_loader(cls, cfg, dataset_name): ...
        @classmethod
        def build_evaluator(cls, cfg, dataset_name, output_dir=None): ...
        @classmethod
        def test(cls, cfg, model): ...
        @staticmethod
        def auto_scale_hyperparams(cfg, data_loader): ...

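Next, look at ./data/build.py, which defines how the data is loaded and processed, and ./modeling/meta_arch/build.py, which builds the whole model (the meta-architecture named in the config, which in turn builds the backbone and heads).

Excerpts from ./data/build.py followed by ./modeling/meta_arch/build.py (example):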

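    # ./data/build.py
    import os

    # Dataset root: the FASTREID_DATASETS environment variable, else ./datasets
    _root = os.getenv("FASTREID_DATASETS", "datasets")

    def build_reid_train_loader(cfg): ...

    def build_reid_test_loader(cfg, dataset_name): ...

    # ./modeling/meta_arch/build.py
    import torch
    from fastreid.utils.registry import Registry

    META_ARCH_REGISTRY = Registry("META_ARCH")  # noqa F401 isort:skip
    META_ARCH_REGISTRY.__doc__ = """Registry for meta-architectures, i.e. the whole model."""

    def build_model(cfg):  # the meta-architecture is selected in the config YAML
        """
        Build the whole model architecture, defined by ``cfg.MODEL.META_ARCHITECTURE``.
        Note that it does not load any weights from ``cfg``.
        """
        meta_arch = cfg.MODEL.META_ARCHITECTURE
        model = META_ARCH_REGISTRY.get(meta_arch)(cfg)
        model.to(torch.device(cfg.MODEL.DEVICE))
        return model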
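The registry lookup in `build_model` is what lets a string in the config select a class. The following stand-alone sketch shows the pattern with a minimal registry written for this illustration; it is not fastreid's `Registry` class, and `DEMO_REGISTRY` and the stub `Baseline` are assumptions made up for the example.

```python
class Registry:
    """Minimal name -> object registry (illustrative, not fastreid's class)."""

    def __init__(self, name):
        self._name = name
        self._obj_map = {}

    def register(self):
        # Used as a decorator: stores the class under its own name.
        def decorator(obj):
            if obj.__name__ in self._obj_map:
                raise KeyError(f"'{obj.__name__}' is already registered in '{self._name}'")
            self._obj_map[obj.__name__] = obj
            return obj
        return decorator

    def get(self, name):
        if name not in self._obj_map:
            raise KeyError(f"No object named '{name}' in '{self._name}' registry")
        return self._obj_map[name]


DEMO_REGISTRY = Registry("DEMO_ARCH")


@DEMO_REGISTRY.register()
class Baseline:
    """Stub standing in for a real model class."""

    def __init__(self, cfg):
        self.cfg = cfg


def build_model(cfg):
    # Look the class up by the name stored in the config, then instantiate it.
    return DEMO_REGISTRY.get(cfg["META_ARCHITECTURE"])(cfg)


model = build_model({"META_ARCHITECTURE": "Baseline"})
print(type(model).__name__)  # prints "Baseline"
```

With this pattern, adding a new architecture only requires defining a class and decorating it; the build function itself never changes.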

Next, look at baseline.py, which defines the Baseline model and its loss computation:

baseline.py (example):

    import torch
    from torch import nn

    from fastreid.modeling.backbones import build_backbone
    from fastreid.modeling.heads import build_heads
    from fastreid.modeling.losses import *
    from .build import META_ARCH_REGISTRY


    @META_ARCH_REGISTRY.register()
    class Baseline(nn.Module):
        def __init__(self, cfg):
            super().__init__()
            self._cfg = cfg
            assert len(cfg.MODEL.PIXEL_MEAN) == len(cfg.MODEL.PIXEL_STD)
            self.register_buffer("pixel_mean", torch.tensor(cfg.MODEL.PIXEL_MEAN).view(1, -1, 1, 1))
            self.register_buffer("pixel_std", torch.tensor(cfg.MODEL.PIXEL_STD).view(1, -1, 1, 1))

            # backbone
            self.backbone = build_backbone(cfg)

            # head
            self.heads = build_heads(cfg)

        @property
        def device(self):
            return self.pixel_mean.device

        def forward(self, batched_inputs):
            images = self.preprocess_image(batched_inputs)
            features = self.backbone(images)

            if self.training:
                assert "targets" in batched_inputs, "Person ID annotation are missing in training!"
                targets = batched_inputs["targets"].to(self.device)

                # PreciseBN flag, When do preciseBN on different dataset, the number of classes in new dataset
                # may be larger than that in the original dataset, so the circle/arcface will
                # throw an error. We just set all the targets to 0 to avoid this problem.
                if targets.sum() < 0:
                    targets.zero_()

                outputs = self.heads(features, targets)
                return {
                    "outputs": outputs,
                    "targets": targets,
                }
            else:
                outputs = self.heads(features)
                return outputs

        def preprocess_image(self, batched_inputs):
            r"""
            Normalize and batch the input images.
            """
            if isinstance(batched_inputs, dict):
                images = batched_inputs["images"].to(self.device)
            elif isinstance(batched_inputs, torch.Tensor):
                images = batched_inputs.to(self.device)
            else:
                raise TypeError("batched_inputs must be dict or torch.Tensor, but get {}".format(type(batched_inputs)))

            images.sub_(self.pixel_mean).div_(self.pixel_std)
            return images

        def losses(self, outs):  # loss selection and computation
            r"""
            Compute loss from modeling's outputs, the loss function input arguments
            must be the same as the outputs of the model forwarding.
            """
            # fmt: off
            outputs           = outs["outputs"]
            gt_labels         = outs["targets"]
            # model predictions
            pred_class_logits = outputs['pred_class_logits'].detach()
            cls_outputs       = outputs['cls_outputs']
            pred_features     = outputs['features']
            # fmt: on

            # Log prediction accuracy
            log_accuracy(pred_class_logits, gt_labels)

            loss_dict = {}
            loss_names = self._cfg.MODEL.LOSSES.NAME

            if "CrossEntropyLoss" in loss_names:
                loss_dict['loss_cls'] = cross_entropy_loss(
                    cls_outputs,
                    gt_labels,
                    self._cfg.MODEL.LOSSES.CE.EPSILON,  # upper-case cfg entries like these
                    self._cfg.MODEL.LOSSES.CE.ALPHA,    # are set in the config YAML
                ) * self._cfg.MODEL.LOSSES.CE.SCALE

            if "TripletLoss" in loss_names:
                loss_dict['loss_triplet'] = triplet_loss(
                    pred_features,
                    gt_labels,
                    self._cfg.MODEL.LOSSES.TRI.MARGIN,
                    self._cfg.MODEL.LOSSES.TRI.NORM_FEAT,
                    self._cfg.MODEL.LOSSES.TRI.HARD_MINING,
                ) * self._cfg.MODEL.LOSSES.TRI.SCALE

            if "CircleLoss" in loss_names:
                loss_dict['loss_circle'] = circle_loss(
                    pred_features,
                    gt_labels,
                    self._cfg.MODEL.LOSSES.CIRCLE.MARGIN,
                    self._cfg.MODEL.LOSSES.CIRCLE.ALPHA,
                ) * self._cfg.MODEL.LOSSES.CIRCLE.SCALE

            return loss_dict
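To show how a config value such as CE.EPSILON and CE.SCALE feeds into a loss term, here is a small pure-Python sketch. It assumes EPSILON acts as a label-smoothing factor in one common form (the true class gets 1 - eps, the rest share eps equally); `smoothed_cross_entropy` and `total_loss` are hypothetical names for this illustration and are not fastreid functions.

```python
import math


def smoothed_cross_entropy(logits, label, eps=0.1):
    """Cross-entropy for one sample with label-smoothing factor `eps`."""
    num_classes = len(logits)
    # Numerically stable log-softmax.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    log_probs = [x - log_z for x in logits]
    # Smoothed target distribution: 1 - eps on the true class, eps spread over the others.
    off_value = eps / (num_classes - 1)
    targets = [1.0 - eps if i == label else off_value for i in range(num_classes)]
    return -sum(t * lp for t, lp in zip(targets, log_probs))


def total_loss(loss_dict):
    """Sum the named loss terms into the single value to back-propagate."""
    return sum(loss_dict.values())


loss_names = ("CrossEntropyLoss",)  # stands in for cfg.MODEL.LOSSES.NAME
ce_scale = 1.0                      # stands in for cfg.MODEL.LOSSES.CE.SCALE

loss_dict = {}
if "CrossEntropyLoss" in loss_names:
    loss_dict["loss_cls"] = smoothed_cross_entropy([2.0, 0.5, -1.0], label=0) * ce_scale
print(loss_dict, total_loss(loss_dict))
```

Keeping each term under its own key, as `losses` does, makes it easy to log the terms separately while still optimizing their sum.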

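Summary

By this point you should have an overall picture of fastreid. The project is very thoroughly encapsulated, so there is no need to read every detail; once you understand its main structure, you can modify it and tune its parameters to suit your own needs. Next time I will go into detail on how to add your own dataset, how to modify the backbone, heads, and loss, and how to approach tuning.

Next article: Deploying a Person Re-Identification System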