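- GPU Memory Management in Deep Learning Training

@Mr_LiuYang

problems encountered, memory management, memory overflow, out of memory, GPU memory

Contents: Overview; Common problems; 1. Device selection and data migration; 2. Memory monitoring functions; 3. Memory release functions; 4. Adaptive batch size adjustment; 5. Gradient accumulation. Overview: In deep learning model training, mainstream GPUs typically have 8 GB to 80 GB of memory. Running out of memory interrupts training or limits the batch size, so GPU memory management is a key challenge for optimizing performance and avoiding OutOfMemoryError. This post briefly introduces the core functions, usage, and practical techniques for GPU memory management in PyTorch, to help developers use GPU memory efficiently.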
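Gradient accumulation, the last technique in the outline above, can be illustrated without a GPU. The sketch below is not the article's code: it uses a hypothetical one-parameter model `y_hat = w * x` in plain Python to show that summing micro-batch gradients, each scaled by `1 / num_micro_batches`, reproduces the full-batch gradient of a mean loss. This is why the batch can be split to fit in memory without changing the update.

```python
# Pure-Python illustration of gradient accumulation (no GPU or PyTorch needed).
# Hypothetical model: y_hat = w * x, loss = mean squared error over the batch.

def mse_grad(w, xs, ys):
    """Gradient of the mean squared error with respect to w."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def accumulated_grad(w, xs, ys, micro_batch_size):
    """Accumulate micro-batch gradients, each scaled by 1 / num_micro_batches."""
    assert len(xs) % micro_batch_size == 0, "sketch assumes equal-sized micro-batches"
    num_micro = len(xs) // micro_batch_size
    total = 0.0
    for i in range(0, len(xs), micro_batch_size):
        g = mse_grad(w, xs[i:i + micro_batch_size], ys[i:i + micro_batch_size])
        total += g / num_micro  # same role as dividing the loss by the step count
    return total

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
full = mse_grad(0.5, xs, ys)
accum = accumulated_grad(0.5, xs, ys, micro_batch_size=2)
print(full, accum)  # the two gradients agree
```

In a real training loop the same scaling is usually applied to the loss before the backward pass, and the optimizer steps once per group of micro-batches.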

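- Deep Learning with PyTorch: A Simple Way to Build 9 Plug-and-Play Convolution Types

@Mr_LiuYang

computer vision basics, convolution types, asymmetric convolution, depthwise convolution, dilated convolution, grouped convolution, depthwise separable convolution, dynamic convolution

This article explains the core parameters of torch.nn.Conv2d in PyTorch in detail and uses code examples to show how this basic function can implement many kinds of convolution. The types covered are: standard convolution, pointwise convolution (1x1 convolution), asymmetric convolution (a kernel with unequal height and width), dilated convolution (enlarges the receptive field), depthwise convolution (per-channel filtering), grouped convolution (groups processed independently), depthwise separable convolution (depthwise plus pointwise), transposed convolution (upsampling), and dynamic convolution (kernels generated dynamically), helping readers understand how adjusting the parameters flexibly…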
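How the Conv2d parameters interact is easiest to see through the output-size formula given in the PyTorch documentation for `torch.nn.Conv2d`. The helper below is a plain-Python sketch of that formula for one spatial dimension. The function name `conv_output_size` is made up for illustration and is not part of PyTorch.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension of a convolution.

    Mirrors the formula documented for torch.nn.Conv2d:
    floor((size + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1)
    """
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

# Standard 3x3 convolution with padding 1 keeps a 32-pixel side unchanged.
print(conv_output_size(32, kernel_size=3, padding=1))
# Pointwise (1x1) convolution never changes the spatial size.
print(conv_output_size(32, kernel_size=1))
# Dilated 3x3 convolution (dilation 2) needs padding 2 to keep the size.
print(conv_output_size(32, kernel_size=3, padding=2, dilation=2))
# Stride 2 halves the side length.
print(conv_output_size(32, kernel_size=3, stride=2, padding=1))
```

For an asymmetric kernel such as 1x3, the helper is applied once per dimension with that dimension's kernel size and padding.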

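- Deep Learning with PyTorch: Data Loading with DataLoader

@Mr_LiuYang

computer vision basics, deep learning, pytorch, artificial intelligence

Contents: Data loading architecture; 1. The Dataset class in detail; 2. Core DataLoader parameters; 3. Data augmentation. Data loading architecture, core class diagram: torch.utils.data ├── Dataset (abstract base class) ├── DataLoader (data loader) ├── Sampler (sampling strategy) ├── BatchSampler (batch sampling) └── IterableDataset (streaming data…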

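- Building the Transformer Architecture from Scratch Using Only PyTorch (4): Implementing the Decoder and Decoder Block Classes and Their Forward Pass

KangkangLoveNLP

from-scratch series, #transformer, pytorch, transformer, artificial intelligence, deep learning, python, machine learning

Building the Transformer Architecture from Scratch Using Only PyTorch (1): Implementing the Positional Encoding Class and Its Forward Pass; The Most Beginner-Friendly Introduction to the Transformer; Building the Transformer Architecture from Scratch Using Only PyTorch (2): Implementing the Multi-Head Attention (MultiHeadAttention) Class and Its Forward Pass; Using only PyTorch to build the transfor…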

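- Machine Translation with the Transformer (Japanese to Chinese)

小白_laughter

coursework, transformer, machine translation, deep learning

Contents: 1. Introduction; 2. A machine translation model using an encoder-decoder and an attention mechanism: 2.0 Encoder-decoder with attention, 2.1 Reading and preprocessing the data, 2.2 Encoder-decoder with attention, 2.3 Training the model, 2.4 Predicting variable-length sequences, 2.5 Evaluating the translation results; 3. A Japanese-Chinese machine translation model using the Transformer architecture and the PyTorch deep learning library: 3.1 Importing the required libraries, 3.2 Preparing the dataset, 3.3 Preparing the tokenizers, 3.4 Building TorchText vocabulary objects and converting sen…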

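- Implementing a CNN in PyTorch: A Hands-On CIFAR-10 Image Classification Tutorial

吴师兄大模型

PyTorch, pytorch, cnn, CIFAR-10, image classification, artificial intelligence, python, convolutional neural network, development languages

Langchain series contents: 01 - Mastering LangChain: A Complete Guide from Model Calls to Prompt Templates and Output Parsing; 02 - Mastering the LangChain Memory Module: Four Memory Types Explained, with Full Coverage of Use Cases; 03 - Mastering LangChain Thoroughly: A Practical Guide from Building Core Chains to Dynamic Task Allocation; 04 - Mastering LangChain: End-to-End Practice from Document Loading to Building an Efficient Q&A System; 05 - Mastering LangChain: Three Efficient Methods for In-Depth Evaluation of Q&A Systems (example generation, man…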

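- Deep Learning with PyTorch 6: Data Processing Toolbox 2

Wis4e

deep learning, pytorch, artificial intelligence

torchvision has four functional modules: models, datasets, transforms, and utils. This part mainly covers how to use ImageFolder from datasets to handle a custom dataset, and how to use transforms to preprocess and augment the source data. The focus below is on transforms and ImageFolder. transforms provides common operations on PIL Image objects and Tensor objects. 1) Common operations on PIL Image…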

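- Deep Learning with PyTorch: Machine Learning 3

Wis4e

deep learning, machine learning, pytorch

Activation functions play many roles in a neural network; the main one is to give the network the ability to model nonlinearity. Without activation functions, a neural network can only handle linearly separable problems, no matter how many layers it has. How should the activation function be chosen when building a network? If the network has few layers, sigmoid, tanh, relu, and softmax are all acceptable. If the network is deep, more care is needed, because a poor choice can lead to vanishing gradients. In that case sigmoid and tanh are generally not recommended, because their derivatives never exceed 1…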
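A quick numerical check makes the vanishing-gradient argument concrete. The sketch below uses only the standard library. The ten-layer product is an illustrative upper bound on the activation-derivative factor alone, under the simplifying assumption that weights contribute no extra scaling.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of sigmoid: s * (1 - s), which peaks at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_grad(z):
    """Derivative of tanh: 1 - tanh(z)^2, equal to 1 only at z = 0."""
    return 1.0 - math.tanh(z) ** 2

max_sigmoid_grad = sigmoid_grad(0.0)        # largest possible sigmoid derivative
ten_layer_bound = max_sigmoid_grad ** 10    # best case after ten sigmoid layers

print(max_sigmoid_grad)   # 0.25
print(ten_layer_bound)    # below one millionth
print(tanh_grad(2.0))     # already small away from zero
```

Because each sigmoid layer multiplies the backpropagated signal by at most 0.25, the factor shrinks geometrically with depth, which is the effect the passage warns about.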

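- 3.10 Project Summary

不要不开心了

pyqt, deep learning, machine learning, data mining, artificial intelligence

Today's project is an example of building and training a neural network with the PyTorch framework, aimed at handwritten digit recognition. Below are the project summary, a content analysis, and suggestions for optimization. Project summary: 1. Goal: classify the handwritten digits in the MNIST dataset with a neural network. 2. Steps: load and preprocess the data; build the neural network model; define the loss function and optimizer; train the model and evaluate its performance; visualize the training results. Content analysis: 1. Data loading and preprocessing: use `torchvision.datasets` to load MN…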

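- Study Summary Project

苏小夕夕

study, artificial intelligence, deep learning, machine learning

Recently I studied machine learning, linear regression and softmax regression, multilayer perceptrons, convolutional neural networks, the Pytorch neural network toolbox, the Python data processing toolbox, image classification, and related topics. I also learned how to use neural networks on cifar10, worked on a handwritten image recognition project, and wrote the corresponding lab report summaries. Project summary: in this project I compared the VGG19 model and the AlexNet model with the VGG16 model used previously. Under the existing conditions, after changing the code, the results show that the VGG19 model's…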

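- Week N4: Text Embedding in NLP

OreoCC

natural language processing, artificial intelligence

My earlier posts can be found at: Deep Learning Summary. Word embedding is a technique used in natural language processing (NLP) to represent words as numbers so that computers can process them. Put simply, it is a way of converting text into numerical values that can be fed to a computer. Converting text into a dictionary sequence and one-hot encoding, both mentioned in earlier posts, are the earliest word embedding methods. Embedding and EmbeddingBag are the PyTorch tools for handling word embeddings in text data; they take discrete word…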

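- [Large Models] Deploying DeepSeek-R1-Distill-Qwen and Calling Its API

油泼辣子多加

large models in practice, algorithms, gpt, langchain, artificial intelligence

DeepSeek-R1-Distill-Qwen is a lightweight large language model developed by the Chinese AI company DeepSeek (深度求索), obtained by optimizing Alibaba's Qwen series models through knowledge distillation. Now that the model is open source, we can deploy it and call it locally through an API. 1. Deployment environment. The base environment used in this article is: PyTorch 2.5.1, Python 3.12 (ubuntu22.04), Cuda 12.4, GPU RTX 3090 (24GB) * 1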

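- 18 Data Augmentation Strategies and Their Implementation in PyTorch for Deep Learning

@Mr_LiuYang

computer vision basics, data augmentation, deep learning, torchvision, transforms

Data augmentation applies a variety of transformations to the training data to increase its diversity, which helps improve model robustness and reduces the risk of overfitting. PyTorch's torchvision.transforms module provides a rich set of data augmentation operations, and multiple strategies can be combined to achieve complex augmentation effects. This article introduces 18 commonly used image data augmentation strategies and shows how to use torchvision.transfor…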

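- PyTorch Installation and Pitfall Guide for Win11 and CUDA 12.1: Good News for Deep Learning Developers

郁云爽

[Download] PyTorch Installation and Pitfall Guide for Win11 and CUDA 12.1. This resource provides detailed installation steps and solutions to common problems for users installing PyTorch on Windows 11 with CUDA 12.1. Whether you are a beginner or an experienced developer, this guide will help you complete the PyTorch installation smoothly and avoid common pitfalls. Project address: htt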

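- A PyTorch Implementation of the Word2Vec Model, Reproduced on the Paper's Datasets

Illusionna.

word2vec, pytorch, artificial intelligence, algorithms, natural language processing, nlp, matplotlib

Link to the detailed annotations: https://www.orzzz.net/directory/codes/Word2Vec/index.html Questions are welcome!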

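- Deep Learning with PyTorch 4: Machine Learning with numpy vs. Machine Learning with Tensor and Autograd

Wis4e

deep learning, machine learning, pytorch

First, take an array x and, based on the expression y = 3x^2 + 2, add some noise to obtain another array y. Then build a machine learning model that learns the two parameters w and b of the expression y = wx^2 + b, using the arrays x and y as training data. Finally, use gradient descent to learn the values of w and b over many iterations. The steps are as follows: 1) Import the required libraries. import numpy as np; %matplotlib inline; from matplotlib import pyplot as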
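The procedure described above can be sketched end to end in plain Python. This is not the article's numpy code. The noise range, learning rate, iteration count, and random seed below are assumed values chosen only so the sketch runs quickly and reproducibly.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

# Synthetic data: y = 3x^2 + 2 plus small uniform noise, with x spread over [-1, 1].
xs = [-1.0 + 2.0 * i / 99 for i in range(100)]
ys = [3.0 * x * x + 2.0 + random.uniform(-0.1, 0.1) for x in xs]

w, b = 0.0, 0.0   # parameters of the model y = w * x^2 + b
lr = 0.1          # learning rate (assumed value)

for _ in range(5000):
    grad_w = 0.0
    grad_b = 0.0
    for x, y in zip(xs, ys):
        err = w * x * x + b - y        # prediction error for one sample
        grad_w += 2.0 * err * x * x    # d(err^2)/dw
        grad_b += 2.0 * err            # d(err^2)/db
    # Step against the mean gradient of the squared error.
    w -= lr * grad_w / len(xs)
    b -= lr * grad_b / len(xs)

print(round(w, 2), round(b, 2))  # close to 3 and 2
```

The gradients here are derived by hand, which is the step an autograd engine takes over in the Tensor-based variant.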

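- Pytorch Part 9: Convolutional Neural Networks: The ResNet Model

Start_Present

pytorch, cnn, python, classification, deep learning

This is part nine of the deep learning series: the ResNet convolutional neural network model in Pytorch. It is the fourth convolutional neural network model shared in the series. The model was designed to address the vanishing gradients and network degradation that appear as networks get deeper. The detailed approach follows. The platform used for this part is PyCharm 2024.1.3, with python 3.11, numpy 1.26.4, and pytorch 2.0.0+cu11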

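- One Small Step for Pytorch, One Giant Leap for Ascend Chips

BRUCE_WUANG

pytorch, artificial intelligence, python

Most people in the AI community have probably seen the recent news: the latest PyTorch release, 2.1, announced support for Huawei Ascend chips! 1. What happened? In the release blog for PyTorch 2.1, published on October 4, 2023, the beta features include PRIVATEUSE1, which improves support for third-party devices, and the blog notes that the OSS team for the Huawei Ascend NPU had already succeeded in getting torch_npu…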

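- Five Minutes of Deep Learning a Day with pytorch: Building the ResNet Residual Block in Pytorch

每天五分钟玩转人工智能

deep learning framework pytorch, deep learning, pytorch, artificial intelligence, ResNet, machine learning

The residual block: let us analyze it. x passes through two convolutional layers to give F(x), and F(x) + x is the output of the residual block. This raises a question about the dimensions of F(x) + x: if F(x) shrinks after the two convolutional layers (its height and width get smaller) or its number of channels changes, then F(x) cannot be added to x. We could follow the earlier GoogLeNet approach, so that F(x) after the convolutions has the same size as x, or transform x and F(x)…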

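- Hands-On 1: Using Pytorch to Classify CIFAR Images into 10 Classes

啥都鼓捣的小yao

deep learning, pytorch, classification, artificial intelligence, deep learning

Sections: Loading the data; Building the model; Training the model; Testing and evaluation. Your task is to build a neural network for CIFAR image classification and reach a classification quality > 0.5. Note: because Hands-On 1 only covers the simplest way to build a neural network, an accuracy above 0.5 meets our goal; later parts will keep introducing new models to improve on it. The CIFAR dataset is shown in the figure below. The packages we will need are roughly: import numpy as np; impor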

- Setting Up CUDA and Pytorch in Pycharm (Version Matching, Installation, Complete Step-by-Step Guide)_cuda12(1)

2401_84557821

programmer, pycharm, pytorch, ide

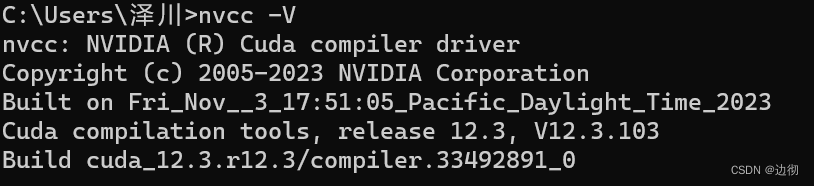

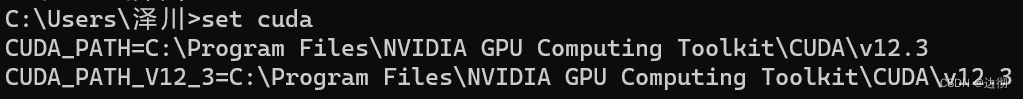

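Check the CUDA version: enter `set cuda` to view the environment variables. If the output matches the two images above, the download succeeded! 2. Installing Pytorch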

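- Deep Learning with PyTorch 5: The Neural Network Toolbox

Wis4e

deep learning, pytorch, neural networks

This part covers: • The core components of a neural network. • How to build a neural network. • A detailed walkthrough of building a neural network. • How to use Module and functional in the nn module. • How to choose an optimizer. • How to modify the learning rate dynamically. 5.1 Core components. A neural network has only a few core components; once they are fixed, the network is essentially determined. The core components are: 1) Layers: the basic structure of a neural network, transforming input tensors into output tensors. 2) Model: the network formed by the layers. 3) Loss function: the objective func… of parameter learning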

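- Which Areas of Financial Analysis Can Python Be Applied To, and How to Deploy It on a Linux Server

蠟筆小新工程師

finance

Python is widely used in financial analysis. The main areas are: 1. Data processing and analysis: use libraries such as Pandas and NumPy to process and analyze large datasets, performing cleaning, transformation, and statistical computation. Example: processing historical market data to analyze price trends, trading volume, and so on. 2. Machine learning and forecasting: use scikit-learn, TensorFlow, or PyTorch to build models for stock price prediction, credit risk assessment…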

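- [vLLM Tutorial] Installing with TPU

vLLM is a framework designed to accelerate large language model inference. It achieves almost zero waste of KV cache memory, solving the memory management bottleneck. More vLLM documentation and tutorials in Chinese are available at https://vllm.hyper.ai/ vLLM supports Google Cloud TPU using PyTorch XLA. Requirements: Google Cloud TPU VM (single-host and multi-host); TPU versions: v5e, v5p, v4; Python: 3.10. Installation options: https://v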

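- Digit Recognition Project

不要天天开心

machine learning, artificial intelligence, deep learning, algorithms

Ensemble methods · Bagging · Random forest tree construction: because of the two sources of randomness, virtually no two trees are the same, and the final results differ as well. Ensemble methods · Stacking: a brute-force approach that throws a pile of models at the problem (all kinds of classifiers) · Any mix of classifiers can be stacked (KNN, SVM, RF, and so on) · It works in stages: the first stage produces each model's results, and the second stage trains on the results of the first. Neural network example: use PyTorch's built-in mnist function to download the data. · Use torchvision to…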

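- Latest Python for 2024: Pytorch--3, Acing the Interview in Practice

m0_60666452

programmer, python, study, interviews

(1) Learning roadmap for all Python directions (new edition): I spent several days organizing the technical topics for every Python direction into a summary of knowledge points for each field. Its value is that you can use these knowledge points to find the matching learning resources and make sure your learning is reasonably complete. I recently updated these roadmaps, and the knowledge system is now more comprehensive. (2) Python learning videos: these cover getting started with Python, web scraping, data analysis, and web development, more than 100 videos in total. They are not fully comprehensive, but for getting started…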

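- Latest for 2024: Datasets for 100 Hands-On PyTorch Deep Learning Projects_python deep learning project practice

2401_84585440

programmer, deep learning, python, pytorch

Preface: recently many subscribers to "100 Hands-On PyTorch Deep Learning Projects" have messaged me to say that some datasets cannot be downloaded and that some articles give no dataset link. To solve this, I created this article to provide dataset download links and bundle all the datasets for the 100 projects. Intended audience: deep learning beginners and users who have just started with PyTorch. The column explains in detail how to quickly build deep learning models and carry out small deep learning projects with your own datasets, so that newcomers can quickly gain a basic framework for understanding deep learning methods…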

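- PyTorch Learning Roadmap

gorgor在码农

#python basics, python, pytorch

Learning PyTorch requires combining theoretical understanding with hands-on coding, mastering its core features and practical applications step by step. Below is a staged learning path with recommended resources, suitable from beginner to advanced level. 1. Prerequisite knowledge. Python basics: be familiar with Python syntax (variables, functions, classes, modules, and so on). Math basics: understand linear algebra, calculus, and probability theory (the foundations of deep learning). Machine learning basics: understand concepts such as neural networks, loss functions, and optimizers (for example gradient descent). Learning resources. Getting started with Python: the official Python tutorial. Machine learning basics…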

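- PyTorch: A Detailed Guide to the Python Deep Learning Framework

零 度°

python, python, deep learning, pytorch

PyTorch is an open-source machine learning library widely used in computer vision and natural language processing. It was developed by Facebook's AI research team and is popular with developers for its dynamic computation graph, ease of use, and tight integration with Python. Main features of PyTorch. Dynamic computation graph: PyTorch builds the computation graph at run time, which makes modifying and debugging models more flexible. Automatic differentiation: gradients are computed automatically, simplifying the training of machine learning models. Rich API: a wide range of neural network layers, functions, and loss functions. Cross-plat…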

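- A Speech Emotion Recognition System Based on Pytorch

鱼弦

the AI era, pytorch, artificial intelligence, python

Introduction: speech emotion recognition (SER) means identifying a person's emotional state by analyzing and processing their speech signal. Common emotional states include anger, joy, sadness, and surprise. A Pytorch-based speech emotion recognition system uses deep learning, training a neural network model to perform the recognition task. Application scenarios. Customer service centers: automatically recognize customer emotions and provide targeted service. Intelligent voice assistants: improve the human-computer interaction experience, making it more intel…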

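- There Are Really Only Three Kinds of Books

西蜀石兰

kinds

In a lifetime a person really reads only three kinds of books: knowledge books, skill books, and books for cultivating the mind.

Knowledge books help us live with more understanding. I have never been keen on books like "One Hundred Thousand Whys", because pure knowledge does not get anything done. Take knowing the rotation speed of the Earth (I certainly don't know it): unless it is actually needed, such knowledge is nothing but a burden for ordinary people. Why memorize what can be found on Wikipedia?

Reading a little of every kind of knowledge book only makes us seem less uncultured, nothing more. What society regards as being learned is certainly not based on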

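- "TCP/IP Illustrated, Volume 1: The Protocols": Study Notes, Gripes, and More

bylijinnan

tcp

"TCP/IP Illustrated, Volume 1: The Protocols" is a classic, but it is not suitable for beginners. It is more like a dictionary, suited to people who have already studied networking and want to review or look up concepts they no longer remember clearly.

The edition I read is the one from China Machine Press, translated by Fan Jianhua (范建华) and others. In my view the translation is mediocre and even contains obvious errors. If your English is good, the original is the better choice:

http://pcvr.nl/tcpip/

Below are some of my notes, including points that puzzled me while reading and some gripes about the book. Corrections are welcome: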

1.

- Linux: Configuring Static and Dynamic IP

eksliang

linux, IP

1. In a terminal, enter

vi /etc/sysconfig/network-scripts/ifcfg-eth0

The static IP template is as follows:

DEVICE="eth0" # NIC name

BOOTPROTO="static" # static IP (required)

HWADDR="00:0C:29:B5:65:CA" # NIC MAC address

IPV6INIT="

- Informatica update strategy transformation

18289753290

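The update strategy transformation flags which operation each row of your data should perform when it enters the target, and it is usually used together with a lookup. Sometimes 0, 1, 1 is used, meaning that forward rejected rows is selected. Rejected rows are written to the error file; if you do not want to see the reject output, direct the errors to a file. Because database issues sometimes prevent certain columns from being updated, the rejects are output to the error file for inspection. In the workflow's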

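- Scrapy Stops Downloading Even Though the Queue Holds Many Requests, Leaving It Apparently Hung

酷的飞上天空

request

The symptom is:

After the program has run for a while, perhaps tens of minutes or a few hours, the backend log stops showing page download messages and keeps reporting that 0 pages were crawled in the last minute.

From the start I guessed that some download threads were not running their callbacks properly, so the program kept assuming those threads had not finished downloading. But my skills were limited and studying the source code led nowhere.

After a lot of googling I finally found a valuable piece of information: a bugfix submitted to twisted

The link is http://twistedmatrix.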

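- Using Predictive Analytics to Support Healthcare

蓝儿唯美

healthcare

In 2014, Cleveland Clinic wanted to control the cost of knee replacement surgery at its surgical centers more effectively. The whole system performs about 2,600 such operations a year, so cutting costs by even a small fraction could save the clinic and its patients a great deal of money. To find a suitable solution, the provider turned to predictive analytics techniques and tools, but its analytics team also had to spend time explaining to physicians what data-driven treatment plans mean.

The healthcare… responsible for enterprise information management and analytics at Cleveland Clinic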

- Java Threads (1): The Basics

DavidIsOK

java, multithreading, threads

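- The Tomcat Server Framework: An Analysis of Servlet Development

aijuans

servlet

I have recently been using Tomcat as a web server. While developing with Servlet technology, I made a brief analysis of the Tomcat framework:

Question: after we extend the HttpServlet class and override the doGet(HttpServletRequest req, HttpServletResponse rep) method, why is that method called automatically by the Tomcat server? Who passes in the parameters of doGet, and how?

My analysis: the doGet method's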

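- Unveiling the Mystery of Jiufu's (玖富) Fan Marketing: Similar to Xiaomi's Fan Community

aoyouzi

Unveiling the Mystery of Jiufu's Fan Marketing

Wukong Licai (悟空理财), a Jiufu product, relied on a single WeChat official account: its transaction volume passed one million on launch day, daily volume passed 10 million on day seven, and cumulative volume exceeded 100 million by day 23. Less than 10 months after its founding, it has more than 5 million fans and monthly transactions above 1 billion, while the Jiufu platform now has more than 18 million users in total, ranking first among P2P platforms. Many internet finance entrepreneurs have come to learn from and imitate it, but few have succeeded, and Jiufu's fan marketing remains a mystery to outsiders.

Recently, … which has always persisted with WeChat fan marketing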

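- Session Tracking Techniques in Java Web

百合不是茶

URL session, Cookie session, Session session, Java Web, hidden-field session

Session tracking is the technique needed when a user clicks through different pages.

Session: a sequence of multiple requests and responses

1. Passing parameters in the URL to track pages

Format for passing one parameter:

url?name=value

For passing two parameters:

url?name=value&name=value

Key code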
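The `url?name=value&name=value` format can be produced and read back with any URL library. The sketch below uses Python's standard `urllib.parse` rather than the article's Java code. The base URL and the parameter names `user` and `page` are made up for illustration.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

def add_params(base_url, params):
    """Append query parameters to a URL in the url?name=value&name=value form."""
    return f"{base_url}?{urlencode(params)}"

# One parameter, then two parameters (hypothetical names and values).
one = add_params("http://example.com/page", {"user": "alice"})
two = add_params("http://example.com/page", {"user": "alice", "page": "2"})
print(one)
print(two)

# On the receiving side, the query string is parsed back into names and values.
parsed = parse_qs(urlsplit(two).query)
print(parsed)
```

`urlencode` also percent-encodes special characters, which hand-built query strings often forget to do.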

- Servlet Configuration in web.xml

bijian1013

java, web.xml, Servlet configuration

Definition:

<servlet>

<servlet-name>myservlet</servlet-name>

<servlet-class>com.myapp.controller.MyFirstServlet</servlet-class>

<init-param>

<param-name>

- Using svnsync for Synchronized SVN Backup

sunjing

SVN sync, E000022, svnsync, mirror

1. Create a repository on the backup SVN server

svnadmin create test

2. Create the pre-revprop-change file

cd test/hooks/

cp pre-revprop-change.tmpl pre-revprop-change

3. Modify pre-revprop-

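- [Distributed Data Consistency, Part 3] MongoDB Read and Write Consistency

bit1129

mongodb

This series of articles uses MongoDB to explore data consistency in distributed databases. The series includes:

Overview of data consistency and CAP

Eventual consistency (Eventual Consistency)

The network partition (Network Partition) problem

Multiple data centers (Multi Data Center)

Eventual consistency with multiple writers (Multi Writer)

Consistency chart (Consistency Chart)

Data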

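- The Anychart Chart Component: How to Convert Flash Charts to Ordinary IMG Images

白糖_

Flash

Background: the project uses the Anychart chart component, which renders charts as Flash. A page often contains several Flash charts, and the requirement was to build print preview and print functions so that multiple Flash charts could be printed on one page.

Our approach to print preview was to take the page's body element and display the preview in the print preview screen with $("body").append(html). The result was a shock: the Flash is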

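- Why Is Port 80 Occupied on Windows?

bozch

port in use, window

When starting software that may use port 80, you are sometimes told that port 80 is already occupied by another program. Which programs typically occupy this port?

Here is a summary:

1. Web servers are the most common occupants of port 80, for example: tomcat, apache, IIS, Php, and so on;

2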

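- The Beauty of Programming: Maximum and Minimum of an Array, Divide and Conquer (Two Forms)

bylijinnan

The Beauty of Programming

import java.util.Arrays;

public class MinMaxInArray {

/**

* The Beauty of Programming: maximum and minimum of an array, divide and conquer

* Two forms

*/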

*/

public static void main(String[] args) {

int[] t={11,23,34,4,6,7,8,1,2,23};

int[]
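Since the Java listing above is cut off, here is a minimal Python sketch of the divide-and-conquer idea named in the title: split the range in half, solve each half recursively, and combine the two (min, max) pairs. It shows one possible form only and is not a reconstruction of either of the article's two forms. The function name `min_max` is an assumption.

```python
def min_max(values, lo=0, hi=None):
    """Return (minimum, maximum) of values[lo..hi] by divide and conquer."""
    if hi is None:
        hi = len(values) - 1
    if lo > hi:
        raise ValueError("min_max() needs at least one element")
    if lo == hi:                      # one element: it is both min and max
        return values[lo], values[lo]
    if hi - lo == 1:                  # two elements: a single comparison
        a, b = values[lo], values[hi]
        return (a, b) if a <= b else (b, a)
    mid = (lo + hi) // 2
    left_min, left_max = min_max(values, lo, mid)
    right_min, right_max = min_max(values, mid + 1, hi)
    # Combine: one comparison for the minimum, one for the maximum.
    return min(left_min, right_min), max(left_max, right_max)

t = [11, 23, 34, 4, 6, 7, 8, 1, 2, 23]   # same sample data as the Java snippet
print(min_max(t))
```

Handling pairs with a single comparison at the base case is what lets this scheme use fewer comparisons than scanning separately for the minimum and the maximum.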

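- Perl Regular Expressions

chenbowen00

regular expressions, perl

First, we should know that regular expressions take three forms in a Perl program:

Match: m/<regexp>/ (can also be abbreviated to /<regexp>/, omitting the m)

Substitute: s/<pattern>/<replacement>/

Transliterate: tr/<pattern>/<replacement>/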
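For readers more familiar with Python, the three Perl forms have rough standard-library counterparts. The sketch below shows those analogues, not Perl itself. The sample string and patterns are made up for illustration.

```python
import re

text = "hello world"

# Match, like Perl's m/o w/: does the pattern occur anywhere in the text?
matched = re.search(r"o w", text) is not None

# Substitute, like Perl's s/world/Perl/: count=1 replaces only the first
# match, mirroring s/// without the /g modifier.
replaced = re.sub(r"world", "Perl", text, count=1)

# Transliterate, like Perl's tr/lo/01/: map characters one to one.
translated = text.translate(str.maketrans("lo", "01"))

print(matched, replaced, translated)
```

Note that transliteration is a per-character mapping and does not use regular expressions at all, in Perl as well as in Python.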

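- [Universe and Astronomy] Does a Planetary Parliament Have Authority Beyond Its Own Planet's Atmosphere?

comsci

An example: Earth. The 200-plus countries on Earth elect a parliament representing an Earth union, and that union now faces a problem: the mineral resources of the planet are about to be exhausted. So the Earth parliament votes and unanimously passes a bill with the force of law, approving the countries of Earth to use various technical means to mine minerals and other resources beyond Earth...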

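- Oracle Profile Usage Explained

daizj

oracle, profile, resource limits

Oracle Profile Usage Explained (reposted)

1. Purpose:

A profile in Oracle can be used to limit the database resources a user is able to use. Create a profile with the Create Profile command and use it to restrict database resource usage. Once the profile is assigned to a user, all database resources that user can use stay within the limits of the profile.

2. Requirements:

Creating a profile requires the CREATE PROFIL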

- How HipChat Stores And Indexes Billions Of Messages Using ElasticSearch & Redis

dengkane

elasticsearchLucene

This article is from an interview with Zuhaib Siddique, a production engineer at HipChat, makers of group chat and IM for teams.

HipChat started in an unusual space, one you might not

- Small Loop Examples: the Fibonacci Sequence, Solving a Quadratic Equation in a Loop, and a switch Example Program

dcj3sjt126com

c, algorithms

# include <stdio.h>

int main(void)

{

int n;

int i;

int f1, f2, f3;

f1 = 1;

f2 = 1;

printf("Enter the index of the sequence term you want: ");

scanf("%d", &n);

for (i=3; i<n; i
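The C listing above is truncated inside its loop, so its exact loop bound is left as shown. As a hedged sketch of the same idea, the Python function below keeps the two most recent terms and advances them once per remaining index, starting from `f1 = f2 = 1` as the C code does. The name `fibonacci` and the 1-based indexing are assumptions.

```python
def fibonacci(n):
    """Return the n-th Fibonacci number, with fibonacci(1) == fibonacci(2) == 1."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    f1, f2 = 1, 1                 # the first two terms
    for _ in range(3, n + 1):     # terms 3..n, one step each
        f1, f2 = f2, f1 + f2      # slide the two-term window forward
    return f2

print([fibonacci(i) for i in range(1, 11)])
```

Only two variables are carried between iterations, so the loop runs in linear time and constant space.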

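- LAMP Environment on a MacBook

dcj3sjt126com

lamp

sudo vim /etc/apache2/httpd.conf

/Library/WebServer/Documents

is the default website root directory

Restarting the Apache service on the Mac

I looked this command up long ago, but I still have to search for it online every time I use it:

Stop the service: sudo /usr/sbin/apachectl stop

Start the service: s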

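- Java ArrayList Source Code, Part 2

shuizhaosi888

ArrayList, source code

Version: jdk-7u71-windows-x64

JavaSE7 ArrayList source code, part 1: http://flyouwith.iteye.com/blog/2166890

/**

* Removes from this list all of its elements that are contained in c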

*/

public boolean removeAll(Collection<?> c) {

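- Spring Security (08): intercept-url Configuration

234390216

Spring Security, intercept-url, access permissions, access protocol, request method

intercept-url configuration

Contents

1.1 Specifying the URLs to intercept

1.2 Specifying access permissions

1.3 Specifying the access protocol

1.4 Specifying the request method

1.1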

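- Installing Oracle on Linux

jayung

oracle

Installing oracle on a linux system

This document covers installing Oracle 11g (Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production) on 64-bit Linux (redhat6.x, centos6.x, redhat7.x). It was carefully compiled from various online sources and is shared for anyone who needs it. For questions, contact: QQ:52-7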

- The HotSpot Virtual Machine

leichenlei

java, HotSpot, jvm, virtual machine, documentation

JVM options

http://docs.oracle.com/javase/6/docs/technotes/guides/vm/index.html

JVM tools

http://docs.oracle.com/javase/6/docs/technotes/tools/index.html

JVM garbage collection

http://www.oracle.com

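- Reading "Practical Node.js: Building Real-World Scalable Web Apps": Programming Is Gradually Becoming Systematic "Bricklaying"

noaighost

Web, node.js

Reading "Practical Node.js: Building Real-World Scalable Web Apps"

Programming is gradually becoming systematic "bricklaying"

Node.JS as I see it

I first came across node a year ago, when I was young and inexperienced and still agonizing over which language could produce awesome programs. Every coder probably goes through this perennial question: what language is WeChat written in? Why is facebook's recommendation system so smart, and what language is it written in? The dota2 cheats are so impressive, what language are they written in? ... The question "what language is it written in" troubles people and also holds back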

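- Rapid Android App Development

rensanning

android

Android app development keeps running into the same common problems, and solving them takes time. Many of these problems already have mature solutions, such as the many third-party open-source libraries; see

Android Libraries and

Android UI/UX Libraries.

The less code you write, the fewer the bugs and the higher the efficiency.

But for reasons such as never having heard of them, having heard of them but never used them, being unable to use them for special reasons, or already having a solution of one's own, these mature solu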

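- Understanding Weak References in Java

tomcat_oracle

java, work, interviews

Not long ago, I interviewed a number of candidates for senior Java developer positions. I would often ask them, "Can you tell me something about weak references in Java?" If the candidate answered, "Um, is it something to do with garbage collection?", I was basically satisfied; I was not expecting an exhaustive essay. Things turned out otherwise, though. I was surprised to find that among nearly 20 candidates with an average of 5 years of development experience and strong academic backgrounds, only two knew that weak references exist, and of those two only one truly under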

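- About Outputting HTML Tags with the <c:out> Tag

xshdch

jsp

http://back-888888.iteye.com/blog/1181202

On using the <c:out value=""/> tag: it has an attribute escapeXml, which defaults to true (HTML tags are treated as escaped characters and displayed as-is rather than parsed by the browser). When escapeXml is set to false, the XML is not filtered, so the HTML tags are parsed by the browser,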