Docker: Docker Swarm (Building a Swarm Cluster on Alibaba Cloud)

Docker Swarm

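- Key Swarm Concepts
  - What Is Swarm
- Building a Swarm Cluster on Alibaba Cloud
  - Preparation
  - Alibaba Cloud Registry Mirror
  - Creating the Manager Node
  - The Raft Protocol
  - Deploying a Service in the Cluster (Scaling Up and Down)
- Concept Summary
  - Swarm Features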

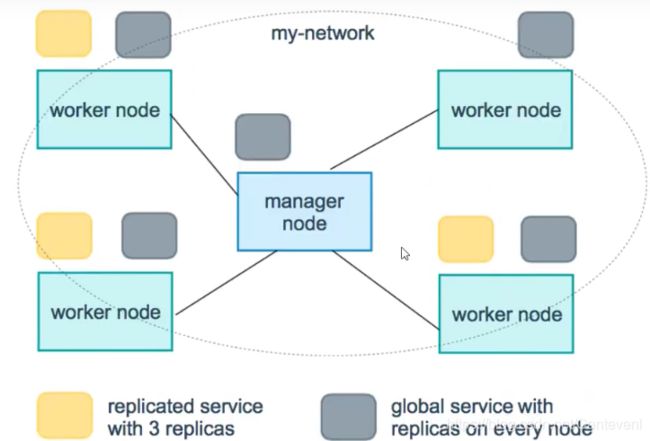

- Replicated and Global Services

- Docker Stack

Key Swarm Concepts

What Is Swarm

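- Swarm mode provides cluster management and orchestration features embedded in the Docker Engine. They are built with SwarmKit, a separate project that implements Docker's orchestration layer and is used directly within Docker.

- A swarm consists of multiple Docker hosts running in swarm mode, acting as managers (which manage membership and delegation) and workers (which run swarm services). A given Docker host can be a manager, a worker, or perform both roles. When you create a service, you define its optimal state: the number of replicas, the network and storage resources available to it, the ports the service exposes to the outside world, and so on. Docker works to maintain that desired state. For example, if a worker node becomes unavailable, Docker schedules that node's tasks on other nodes. A task is a running container that is part of a swarm service and is managed by a manager node, as opposed to a standalone container.

- One of the key advantages of swarm services over standalone containers is that you can modify a service's configuration, including the networks and volumes it is connected to, without manually restarting the service. Docker updates the configuration, stops the service tasks that have an out-of-date configuration, and creates new tasks that match the desired configuration.

- When Docker runs in swarm mode, you can still run standalone containers on any of the Docker hosts participating in the swarm, alongside swarm services. The key difference between standalone containers and swarm services is that only swarm managers can manage a swarm, while standalone containers can be started on any daemon. Docker daemons can participate in a swarm as managers, workers, or both.

- In the same way that you can use Docker Compose to define and run containers, you can define and run stacks of Swarm services.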

Building a Swarm Cluster on Alibaba Cloud

Preparation

Install Docker on all four machines:

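# Install gcc and related build tools

yum install -y gcc gcc-c++

# Install required packages

yum install -y yum-utils

# Add the Docker CE repository from the Alibaba Cloud mirror (faster inside mainland China)

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Refresh the yum package index

yum makecache fast

# Install Docker CE

yum install -y docker-ce docker-ce-cli containerd.io

# Start Docker

systemctl start docker

# Test commands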

docker version

docker run hello-world

docker images

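Alibaba Cloud Registry Mirror

Replace `xxx` in the address below with the accelerator address assigned to your account in the Alibaba Cloud Container Registry console.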

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://xxx.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
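To confirm that the mirror took effect after the restart, look at the `Registry Mirrors:` section of `docker info`. The sketch below is a hypothetical helper (`list_registry_mirrors` is not a Docker command) that pulls the mirror URLs out of that text; it assumes the indented layout printed by Docker 20.10-era clients.

```bash
#!/usr/bin/env bash
# list_registry_mirrors: hypothetical helper, not a Docker command.
# Reads `docker info` text on stdin and prints each configured mirror URL.
# Assumes the layout " Registry Mirrors:" followed by indented URL lines.
list_registry_mirrors() {
  awk '
    /^ *Registry Mirrors:/ { in_block = 1; next }    # section header
    in_block && /^ +https?:\/\// { print $1; next }  # indented mirror URL
    in_block { exit }                                # any other line ends the section
  '
}

# Usage on a Docker host:
#   docker info 2>/dev/null | list_registry_mirrors
```

If nothing is printed, check that `/etc/docker/daemon.json` is valid JSON and that Docker was restarted.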

Creating the Manager Node

Check the IP address:

ip a

inet 192.168.188.80/25

docker swarm init --advertise-addr 192.168.188.80

Swarm initialized: current node (w9souqryc62xwcptuv3zs63cq) is now a manager.

(This node is now part of the swarm as a manager. Other nodes can join it with the command below.)

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-5n0et0tehpffvcvgwa7270nl2bo3onw9n4h44k92ynpyd58o9t-58uthrjhxif4bo54txvvb7zg9 192.168.188.80:2377

(To add a manager node instead, run docker swarm join-token manager.)

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
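Rather than copying the address from `ip a` by hand, it can be extracted from the one-line output of `ip -o -4 addr show`. The helper name `first_ipv4` and the interface name `eth0` are assumptions for illustration; check the actual interface name on your ECS instance.

```bash
#!/usr/bin/env bash
# first_ipv4: hypothetical helper. Reads `ip -o -4 addr show` output on stdin
# and prints the first IPv4 address without its prefix length.
# Example input line:
#   2: eth0    inet 192.168.188.80/25 brd 192.168.188.127 scope global eth0 ...
first_ipv4() {
  awk '$3 == "inet" { split($4, parts, "/"); print parts[1]; exit }'
}

# Usage on the first manager (the interface name eth0 is an assumption):
#   docker swarm init --advertise-addr "$(ip -o -4 addr show dev eth0 | first_ipv4)"
```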

Initialize a node: `docker swarm init`

Join a node: `docker swarm join`

# Get a join token

docker swarm join-token manager

docker swarm join-token worker
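`docker swarm join-token` prints the full join command for a worker or a manager, and `docker swarm join-token -q worker` prints only the token. When scripting the join of several servers, the command line can be pulled out of the normal output; `extract_join_command` below is a hypothetical helper for that.

```bash
#!/usr/bin/env bash
# extract_join_command: hypothetical helper. Reads the text printed by
# `docker swarm join-token worker` (or `manager`) on stdin and prints the
# ready-to-run `docker swarm join ...` line with leading spaces removed.
extract_join_command() {
  grep -E '^[[:space:]]*docker swarm join --token ' \
    | sed -E 's/^[[:space:]]+//' \
    | head -n 1
}

# Usage: run on a manager, then execute the printed line on the joining server.
#   docker swarm join-token worker | extract_join_command
```

Treat the tokens like passwords: anyone holding the manager token can join the swarm as a manager.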

Get the token on the manager node:

[root@swarm1 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-5n0et0tehpffvcvgwa7270nl2bo3onw9n4h44k92ynpyd58o9t-9uftr1s3e4mbvay8gsnea0r7x 192.168.188.80:2377

On another server, join the cluster with the worker token:

[root@swarm2 ~]# docker swarm join --token SWMTKN-1-5n0et0tehpffvcvgwa7270nl2bo3onw9n4h44k92ynpyd58o9t-58uthrjhxif4bo54txvvb7zg9 192.168.188.80:2377

This node joined a swarm as a worker.

View node information:

[root@swarm1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w9souqryc62xwcptuv3zs63cq * swarm1 Ready Active Leader 20.10.6

jk3fylomxksvus1nmuai2f1ad swarm2 Ready Active 20.10.6

Add the third server to the cluster as well:

[root@swarm3 ~]# docker swarm join --token SWMTKN-1-5n0et0tehpffvcvgwa7270nl2bo3onw9n4h44k92ynpyd58o9t-58uthrjhxif4bo54txvvb7zg9 192.168.188.80:2377

This node joined a swarm as a worker.

Add the fourth server as a manager node

First generate the manager token:

[root@swarm1 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-5n0et0tehpffvcvgwa7270nl2bo3onw9n4h44k92ynpyd58o9t-9uftr1s3e4mbvay8gsnea0r7x 192.168.188.80:2377

[root@swarm4 ~]# docker swarm join --token SWMTKN-1-5n0et0tehpffvcvgwa7270nl2bo3onw9n4h44k92ynpyd58o9t-9uftr1s3e4mbvay8gsnea0r7x 192.168.188.80:2377

This node joined a swarm as a manager.

[root@swarm1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w9souqryc62xwcptuv3zs63cq * swarm1 Ready Active Leader 20.10.6

jk3fylomxksvus1nmuai2f1ad swarm2 Ready Active 20.10.6

gmeyrsa08bs4du78ym60s1kmt swarm3 Ready Active 20.10.6

w43kyxy54egy9b6nl6mw6acyw swarm4 Ready Active Reachable 20.10.6

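`Reachable` means the manager can be reached by the other managers. Both managers are reachable here: swarm1 is the Leader and swarm4 is Reachable.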
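On a manager, `docker node ls --format` accepts Go template placeholders such as `{{.Hostname}}` and `{{.ManagerStatus}}`; workers have an empty manager status. The hypothetical helper `list_managers` keeps only the managers, which makes the Leader/Reachable roles easy to read at a glance.

```bash
#!/usr/bin/env bash
# list_managers: hypothetical helper. Reads "HOSTNAME MANAGER_STATUS" lines
# on stdin; workers have no second field, so only managers are printed.
list_managers() {
  awk 'NF >= 2 { print $1 ": " $2 }'
}

# Usage on a manager node:
#   docker node ls --format '{{.Hostname}} {{.ManagerStatus}}' | list_managers
```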

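The Raft Protocol

- Two managers and two workers: if one manager node goes down, can the other nodes still be used?

- Raft protocol: the swarm is usable only while a majority of its manager nodes are alive, so a highly available cluster needs at least 3 managers.

Stop Docker on swarm1 to simulate the machine going down: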

[root@swarm1 ~]# systemctl stop docker

The other manager node can no longer be used either:

[root@swarm4 ~]# docker node ls

Error response from daemon: rpc error: code = Unknown desc = The swarm does not have a leader. It's possible that too few managers are online. Make sure more than half of the managers are online.

After swarm1 is restarted, the Leader has become swarm4:

[root@swarm4 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w9souqryc62xwcptuv3zs63cq swarm1 Ready Active Reachable 20.10.6

jk3fylomxksvus1nmuai2f1ad swarm2 Ready Active 20.10.6

gmeyrsa08bs4du78ym60s1kmt swarm3 Ready Active 20.10.6

w43kyxy54egy9b6nl6mw6acyw * swarm4 Ready Active Leader 20.10.6

Make swarm3 leave the cluster:

[root@swarm3 ~]# docker swarm leave

Node left the swarm.

swarm3's status changes to Down:

[root@swarm4 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w9souqryc62xwcptuv3zs63cq swarm1 Ready Active Reachable 20.10.6

jk3fylomxksvus1nmuai2f1ad swarm2 Ready Active 20.10.6

gmeyrsa08bs4du78ym60s1kmt swarm3 Down Active 20.10.6

w43kyxy54egy9b6nl6mw6acyw * swarm4 Ready Active Leader 20.10.6

Join swarm3 again, this time as a manager:

[root@swarm3 ~]# docker swarm join --token SWMTKN-1-5n0et0tehpffvcvgwa7270nl2bo3onw9n4h44k92ynpyd58o9t-9uftr1s3e4mbvay8gsnea0r7x 192.168.188.83:2377

This node joined a swarm as a manager.

[root@swarm4 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w9souqryc62xwcptuv3zs63cq swarm1 Ready Active Reachable 20.10.6

jk3fylomxksvus1nmuai2f1ad swarm2 Ready Active 20.10.6

gmeyrsa08bs4du78ym60s1kmt swarm3 Down Active 20.10.6

q3e1ffi6ouolqymbv4nnql62m swarm3 Ready Active Reachable 20.10.6

w43kyxy54egy9b6nl6mw6acyw * swarm4 Ready Active Leader 20.10.6

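Three machines are now managers. (The extra swarm3 entry marked Down is the record left over from before it left the swarm; it can be removed on a manager with `docker node rm gmeyrsa08bs4du78ym60s1kmt`.)

Earlier, with two managers, the remaining one stopped working as soon as the other went down.

Now there are three managers, so let's run the test again.

Stop the first one: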

[root@swarm1 ~]# systemctl stop docker

Warning: Stopping docker.service, but it can still be activated by:

docker.socket

The other two managers are still usable:

[root@swarm3 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w9souqryc62xwcptuv3zs63cq swarm1 Down Active Unreachable 20.10.6

jk3fylomxksvus1nmuai2f1ad swarm2 Ready Active 20.10.6

gmeyrsa08bs4du78ym60s1kmt swarm3 Down Active 20.10.6

q3e1ffi6ouolqymbv4nnql62m * swarm3 Ready Active Reachable 20.10.6

w43kyxy54egy9b6nl6mw6acyw swarm4 Ready Active Leader 20.10.6

[root@swarm4 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w9souqryc62xwcptuv3zs63cq swarm1 Down Active Unreachable 20.10.6

jk3fylomxksvus1nmuai2f1ad swarm2 Ready Active 20.10.6

gmeyrsa08bs4du78ym60s1kmt swarm3 Down Active 20.10.6

q3e1ffi6ouolqymbv4nnql62m swarm3 Ready Active Reachable 20.10.6

w43kyxy54egy9b6nl6mw6acyw * swarm4 Ready Active Leader 20.10.6

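- Therefore, for high availability the cluster needs at least 3 manager nodes, and more than one of them (a majority, i.e. at least 2 of 3) must stay alive.

- Raft protocol: the swarm is usable only while a majority of the managers are alive. This is what provides high availability.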
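The majority rule can be checked with a little arithmetic: with N managers, Raft needs floor(N/2)+1 of them up to keep a leader, so the swarm tolerates floor((N-1)/2) manager failures. The two functions below are just that arithmetic, not Docker commands.

```bash
#!/usr/bin/env bash
# Quorum arithmetic for Raft-based swarm managers (pure arithmetic, no Docker calls).

# raft_quorum N: managers that must be up to keep a leader (a strict majority).
raft_quorum() { echo $(( $1 / 2 + 1 )); }

# raft_fault_tolerance N: managers that can fail while a leader can still be elected.
raft_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 4 5; do
  printf 'managers=%d quorum=%d can_lose=%d\n' \
    "$n" "$(raft_quorum "$n")" "$(raft_fault_tolerance "$n")"
done
```

This matches the experiment: with two managers, losing one left no majority (can_lose=0); with three, losing one still left 2 of 3. It also shows why an odd number of managers is usually recommended: a fourth manager raises the quorum to 3 without tolerating more failures than three managers do.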

Deploying a Service in the Cluster (Scaling Up and Down)

Create a service with docker service:

[root@swarm1 ~]# docker service create -p 8888:80 --name my-nginx nginx

rmb0sgq07hh72wq0zzeqm3iad

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

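docker run: starts a standalone container; it cannot be scaled.

docker service: creates a service, which supports scaling and rolling updates.

List services: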

docker service ls

[root@swarm1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

rmb0sgq07hh7 my-nginx replicated 1/1 nginx:latest *:8888->80/tcp
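`docker service ls --format '{{.Name}} {{.Replicas}}'` prints one `NAME RUNNING/DESIRED` pair per service. A hypothetical helper such as `unconverged_services` can flag services whose running count has not yet reached the desired count, for example while a scale operation is still in progress.

```bash
#!/usr/bin/env bash
# unconverged_services: hypothetical helper. Reads "NAME RUNNING/DESIRED" lines
# on stdin and prints the services whose running count differs from the desired one.
unconverged_services() {
  awk '{
    split($2, r, "/")                        # r[1] = running, r[2] = desired
    if (r[1] + 0 != r[2] + 0) print $1 " " $2
  }'
}

# Usage on a manager node:
#   docker service ls --format '{{.Name}} {{.Replicas}}' | unconverged_services
```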

[root@swarm1 ~]# docker service ps my-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

vnnxrxob201n my-nginx.1 nginx:latest swarm2 Running Running about a minute ago

View detailed information:

docker service inspect my-nginx

The replica was started on swarm2:

[root@swarm2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f50871234197 nginx:latest "/docker-entrypoint.…" 2 minutes ago Up 2 minutes 80/tcp my-nginx.1.vnnxrxob201nsm0ela9v54snr

Scale dynamically:

[root@swarm1 ~]# docker service update --replicas 3 my-nginx

my-nginx

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

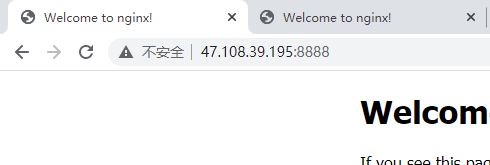

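The service can be reached through the IP address of any of the four servers.

Note: the published port (8888 here) must be allowed in the Alibaba Cloud security group.

Scale dynamically to 10 replicas: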

[root@swarm4 ~]# docker service update --replicas 10 my-nginx

my-nginx

overall progress: 10 out of 10 tasks

1/10: running [==================================================>]

2/10: running [==================================================>]

3/10: running [==================================================>]

4/10: running [==================================================>]

5/10: running [==================================================>]

6/10: running [==================================================>]

7/10: running [==================================================>]

8/10: running [==================================================>]

9/10: running [==================================================>]

10/10: running [==================================================>]

verify: Service converged

Running docker ps on each server and adding up the containers gives exactly 10.
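Instead of logging in to every server, a manager can show where the tasks landed: `docker service ps` supports `--filter desired-state=running` and `--format '{{.Node}}'`. The hypothetical helper `tasks_per_node` counts the node names it receives.

```bash
#!/usr/bin/env bash
# tasks_per_node: hypothetical helper. Reads one node name per line on stdin
# and prints how many tasks each node runs, followed by the total.
tasks_per_node() {
  sort | uniq -c | awk '{ print $2 ": " $1; total += $1 } END { print "total: " total }'
}

# Usage on a manager node:
#   docker service ps --filter desired-state=running --format '{{.Node}}' my-nginx | tasks_per_node
```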

You can scale down as well as up:

docker service update --replicas 1 my-nginx

[root@swarm4 ~]# docker service update --replicas 1 my-nginx

my-nginx

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

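There is also the scale command

`docker service scale` scales a service dynamically too: `docker service scale my-nginx=5` does the same as `docker service update --replicas 5 my-nginx`.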

[root@swarm4 ~]# docker service scale my-nginx=5

my-nginx scaled to 5

overall progress: 5 out of 5 tasks

1/5: running [==================================================>]

2/5: running [==================================================>]

3/5: running [==================================================>]

4/5: running [==================================================>]

5/5: running [==================================================>]

verify: Service converged

[root@swarm4 ~]# docker service scale my-nginx=2

my-nginx scaled to 2

overall progress: 2 out of 2 tasks

1/2: running [==================================================>]

2/2: running [==================================================>]

verify: Service converged

Remove the service:

[root@swarm4 ~]# docker service rm my-nginx

my-nginx

[root@swarm4 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

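Concept Summary

- swarm
  - Cluster management and orchestration are provided by SwarmKit, which is embedded in the Docker Engine. When you initialize Docker you can start swarm mode, or join an existing swarm as a manager or a worker.

- Node
  - A node is an instance of the Docker Engine participating in the swarm; you can think of it as a Docker node. You can run one or more nodes on a single physical computer or cloud server, but production swarm deployments typically include Docker nodes distributed across multiple physical and cloud machines.
  - To deploy an application to a swarm, you submit a service definition to a manager node. The manager node dispatches units of work called tasks to worker nodes.
  - Manager nodes also perform the orchestration and cluster management functions required to maintain the desired state of the swarm. Manager nodes elect a single leader to conduct orchestration tasks.
  - Worker nodes receive and execute tasks dispatched from manager nodes. By default, manager nodes also run services as worker nodes, but you can configure them to run manager tasks exclusively and be manager-only nodes. An agent runs on each worker node and reports on the tasks assigned to it. The worker node notifies the manager node of the current state of its assigned tasks so that the manager can maintain the desired state of each worker.

- Service
  - A service is the definition of the tasks to execute on manager or worker nodes. It is the central structure of the swarm system and the primary root of user interaction with the swarm. When you create a service, you specify which container image to use.

- Task
  - A task carries a Docker container and the commands to run inside it. Manager nodes assign tasks to worker nodes according to the number of replicas set for the service.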

docker swarm: cluster management; subcommands include init, join, join-token, leave, and update (see docker swarm --help).

docker service: service management; subcommands include create, inspect, ls, ps, rm, scale, and update (see docker service --help).

docker node: node management; subcommands include demote, inspect, ls, promote, ps, rm, and update (see docker node --help).

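Swarm Features

- Cluster management integrated with Docker Engine: use the Docker Engine CLI to create a swarm of Docker Engines in swarm mode and deploy application services to it.

- Decentralized design: Swarm nodes take the role of Manager or Worker, and the failure of a Manager node does not affect the use of applications.

- Scaling: you can declare the number of containers each service should run, and Swarm automatically adds or removes containers to reach the desired state.

- Desired state reconciliation: Swarm Manager nodes constantly monitor the cluster state and reconcile any differences between the actual state and the desired state. For example, if a service is set to run 10 replica containers and the servers hosting two of the replicas crash, the Manager creates two new replicas to replace the crashed ones and assigns them to available worker nodes.

- Multi-host networking: you can specify an overlay network for your services. The Swarm manager automatically assigns IP addresses to the containers on the overlay network when it initializes or updates the application.

- Service discovery: Swarm manager nodes assign each service in the swarm a unique DNS name and a load-balanced virtual IP (VIP). You can query every container running in the swarm through the DNS server embedded in Swarm.

- Load balancing: requests are load-balanced across a service's replicas, and the swarm provides an ingress entry point. You can also expose the service's ports to an external load balancer for a second level of balancing.

- Secure transport: each node in the swarm uses TLS for mutual authentication and encryption, securing communication with the other nodes.

- Rolling updates: at upgrade time, service updates are applied to the nodes incrementally; if something goes wrong, you can roll tasks back to the previous version.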
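Desired-state reconciliation can be pictured as a loop that compares two numbers. The function below is a toy model for illustration only (it is not how SwarmKit's orchestrator is implemented): given the desired and currently running replica counts, it prints the corrective action.

```bash
#!/usr/bin/env bash
# reconcile DESIRED RUNNING: toy model of desired-state reconciliation.
# Prints how many tasks to start or stop so that RUNNING matches DESIRED.
reconcile() {
  local desired=$1 running=$2
  if (( running < desired )); then
    echo "start $(( desired - running ))"
  elif (( running > desired )); then
    echo "stop $(( running - desired ))"
  else
    echo "ok"
  fi
}

# The example from the text: 10 replicas desired, 2 lost when their servers crashed.
reconcile 10 8   # prints: start 2
```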

Replicated and Global Services

--mode string

Service mode (replicated or global) (default "replicated")

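docker service create --mode replicated --name mytom tomcat:7    # replicated is the default mode

docker service create --mode global --name haha alpine ping baidu.com    # global: one task on every node

# Use cases for global mode: log collection

Each node runs its own log collector, which filters the logs and finally forwards them all to a central log store.

Service monitoring (status and performance metrics).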
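The difference between the two modes is how many tasks Swarm aims to run. A toy sketch (the function name `expected_tasks` is hypothetical): a replicated service runs exactly the requested number of replicas, while a global service runs one task on every eligible node, which is why it suits per-node agents such as log collectors and monitors.

```bash
#!/usr/bin/env bash
# expected_tasks MODE REPLICAS ELIGIBLE_NODES: toy model of the task count
# Swarm aims for. REPLICAS is ignored for global services.
expected_tasks() {
  local mode=$1 replicas=$2 eligible_nodes=$3
  case "$mode" in
    replicated) echo "$replicas" ;;
    global)     echo "$eligible_nodes" ;;
    *)          echo "unknown mode: $mode" >&2; return 1 ;;
  esac
}

expected_tasks replicated 3 4   # prints: 3
expected_tasks global 0 4       # prints: 4
```

When a fifth node joins, a global service gets a task on it automatically, while a replicated service keeps its replica count.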

[root@swarm4 ~]# docker service create -p 8888:80 --name my-nginx nginx

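Check the network information:

docker service inspect my-nginx

Scale dynamically:

docker service scale my-nginx=5

Inspect the network again:

docker service inspect my-nginx

The publish mode is "PublishMode": "ingress".

ingress: a special overlay network with built-in load balancing.

docker network inspect ingress

Although Docker runs on four machines, they actually share the same network: ingress, a special overlay network.

The separate hosts' networks have become one!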

Docker Stack

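- docker-compose deploys a project on a single host.

- docker stack deploys a project across the cluster.

# Single host
docker-compose -f wordpress.yml up -d

# Cluster ("wordpress" is the stack name)
docker stack deploy -c wordpress.yml wordpress

# Example compose file for a stack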

version: '3.4'
services:
  mongo:
    image: mongo
    restart: always            # ignored by docker stack deploy; deploy.restart_policy applies
    networks:
      - mongo_network
    deploy:
      restart_policy:
        condition: on-failure
      replicas: 2
  mongo-express:
    image: mongo-express
    restart: always            # ignored by docker stack deploy; deploy.restart_policy applies
    networks:
      - mongo_network
    ports:
      - target: 8081
        published: 80
        protocol: tcp
        mode: ingress
    environment:
      ME_CONFIG_MONGODB_SERVER: mongo
      ME_CONFIG_MONGODB_PORT: 27017
    deploy:
      restart_policy:
        condition: on-failure
      replicas: 1
networks:
  mongo_network:
    external: true             # must exist first: docker network create -d overlay mongo_network
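Because the network is declared `external: true`, `docker stack deploy` expects `mongo_network` to exist already, and it must be an overlay network to span the swarm's nodes. Below is a small idempotent sketch; the function name `ensure_overlay_network`, the `DOCKER` override variable (added only so the logic can be dry-run without a swarm) and the stack name `mongo` are hypothetical.

```bash
#!/usr/bin/env bash
# ensure_overlay_network NAME: create an overlay network only if it does not exist.
# DOCKER may point at another command (e.g. a stub for a dry run); defaults to docker.
ensure_overlay_network() {
  local name=$1 docker=${DOCKER:-docker}
  if "$docker" network inspect "$name" >/dev/null 2>&1; then
    echo "exists: $name"
  elif "$docker" network create --driver overlay "$name" >/dev/null; then
    echo "created: $name"
  else
    echo "failed to create: $name" >&2
    return 1
  fi
}

# Usage on a manager node, before deploying the stack:
#   ensure_overlay_network mongo_network
#   docker stack deploy -c docker-compose.yml mongo
```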

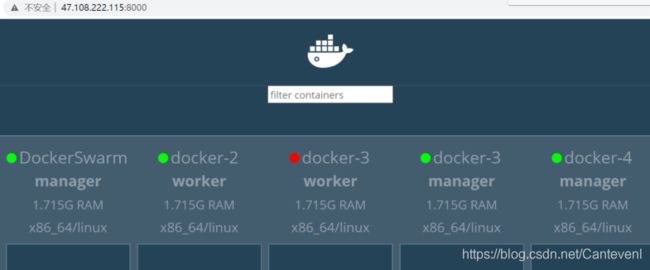

Example:

[root@DockerSwarm ~]# vim docker-compose.yml

version: "3"
services:
  nginx:
    image: nginx:alpine
    ports:
      - 80:80
    deploy:
      mode: replicated
      replicas: 4
  visualizer:
    image: dockersamples/visualizer
    ports:
      - "8000:8080"
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock"
    deploy:
      replicas: 1
      placement:
        constraints: [node.role == manager]
  portainer:
    image: portainer/portainer
    ports:
      - "9000:9000"            # Portainer's web UI listens on port 9000 inside the container
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock"
    deploy:
      replicas: 1
      placement:
        constraints: [node.role == manager]

[root@DockerSwarm ~]# docker stack deploy -c docker-compose.yml stack-demo

[root@DockerSwarm ~]# docker stack ls

NAME SERVICES ORCHESTRATOR

stack-demo 3 Swarm