[Hand Tracking] Reproducing the hands module of google/mediapipe

References:

1) GitHub repository: https://github.com/google/mediapipe

2) Documentation: https://google.github.io/mediapipe

3) Python environment setup guide: https://google.github.io/mediapipe/getting_started/python

4) Usage guide for the simple Python API: https://google.github.io/mediapipe/solutions/hands#python-solution-api

0. Environment setup

Python environment setup guide: https://google.github.io/mediapipe/getting_started/python

Ubuntu 20.04

CUDA 11.2

Python 3.8

opencv-python==4.1.2.30

mediapipe==0.8.2

sudo apt install -y protobuf-compiler

sudo apt install -y cmake

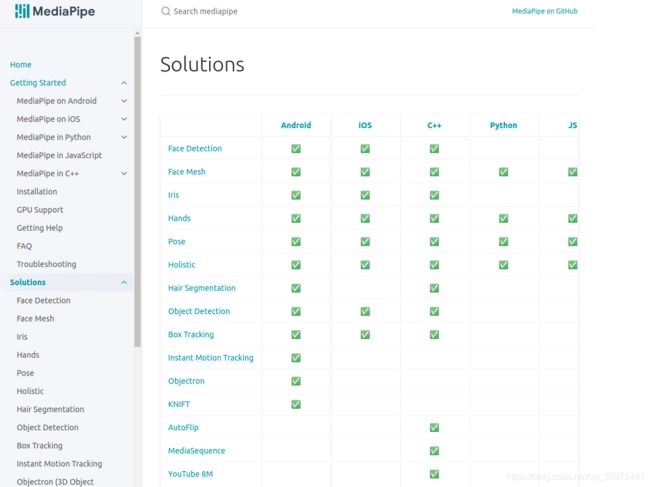

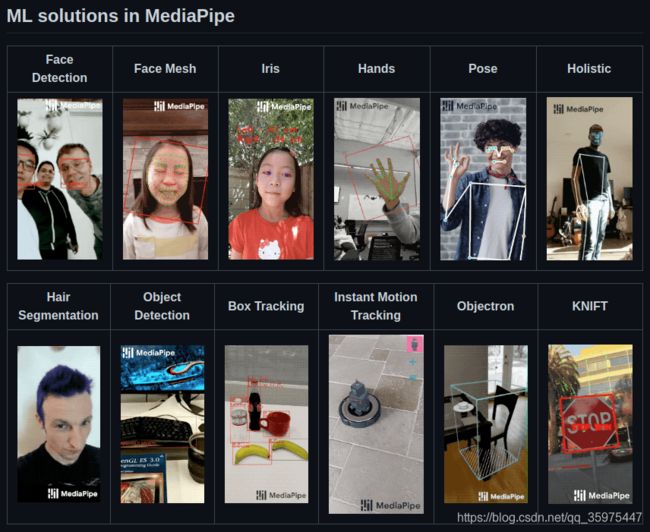
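To confirm that the installed packages match the versions listed above, a small check like the following may help. It is only a sketch: the helper name check_versions and the EXPECTED table are made up for this post, and it relies on importlib.metadata from the Python 3.8 standard library.

```python
from importlib import metadata

# Versions pinned in this post; adjust them if your setup differs.
EXPECTED = {"opencv-python": "4.1.2.30", "mediapipe": "0.8.2"}


def check_versions(expected):
    """Return {package: (installed_version_or_None, wanted_version)}."""
    report = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed in this environment
        report[name] = (installed, wanted)
    return report


if __name__ == "__main__":
    for name, (installed, wanted) in check_versions(EXPECTED).items():
        status = "ok" if installed == wanted else "mismatch"
        print(f"{name}: installed={installed}, expected={wanted} ({status})")
```

A mismatch does not necessarily mean the code will fail; it only means the environment differs from the one used here.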

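1. Introduction

A brief note: most of the documentation lives at the first link below. In Python, MediaPipe is used by installing the mediapipe package from PyPI and calling its API.

Documentation: https://google.github.io/mediapipe

GitHub repository: https://github.com/google/mediapipe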

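2. Experiment

The code here follows the usage guide for the simple Python API: https://google.github.io/mediapipe/solutions/hands#python-solution-api

The code below is copied from the official documentation so that you can modify it yourself. It shows two ways of reading input. The first takes image file names from a list; it could be rewritten to scan a directory and collect the files into a list. The second reads from a webcam; it could equally be rewritten to read a video file (a desktop machine may have no camera, in which case this is the part to change).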
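The directory-based variant mentioned above could look roughly like this. It is a sketch, not part of the official example: the function name list_images and the extension set are assumptions made for illustration.

```python
from pathlib import Path

# Extensions treated as images; an assumption, extend it as needed.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_images(directory):
    """Return the sorted paths (as strings) of image files directly inside directory."""
    return sorted(
        str(path)
        for path in Path(directory).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )
```

With a hypothetical folder named ./images, the list used by the static-image loop would then be built as file_list = list_images("./images").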

    import cv2
    import mediapipe as mp

    mp_drawing = mp.solutions.drawing_utils
    mp_hands = mp.solutions.hands

    # Paths of the images to process. The official snippet leaves file_list
    # undefined, so fill it in yourself.
    file_list = []

    # For static images:
    hands = mp_hands.Hands(
        static_image_mode=True,
        max_num_hands=2,
        min_detection_confidence=0.5)
    for idx, file in enumerate(file_list):
        image = cv2.imread(file)
        if image is None:
            # cv2.imread returns None when the file cannot be read.
            print('Could not read image:', file)
            continue
        # Flip the image around the y-axis for correct handedness output.
        image = cv2.flip(image, 1)
        # Convert the BGR image to RGB before processing.
        results = hands.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

        # Print handedness and draw hand landmarks on the image.
        print('Handedness:', results.multi_handedness)
        if not results.multi_hand_landmarks:
            continue
        image_height, image_width, _ = image.shape
        annotated_image = image.copy()
        for hand_landmarks in results.multi_hand_landmarks:
            print('hand_landmarks:', hand_landmarks)
            index_tip = hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP]
            print(
                'Index finger tip coordinates: (',
                f'{index_tip.x * image_width}, '
                f'{index_tip.y * image_height})'
            )
            mp_drawing.draw_landmarks(
                annotated_image, hand_landmarks, mp_hands.HAND_CONNECTIONS)
        # Flip back so the saved image has the original orientation.
        cv2.imwrite(
            '/tmp/annotated_image' + str(idx) + '.png', cv2.flip(annotated_image, 1))
    hands.close()

    # For webcam input:
    hands = mp_hands.Hands(
        min_detection_confidence=0.5, min_tracking_confidence=0.5)
    cap = cv2.VideoCapture(0)
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            print("Ignoring empty camera frame.")
            # If loading a video, use 'break' instead of 'continue'.
            continue

        # Flip the image horizontally for a later selfie-view display, and convert
        # the BGR image to RGB.
        image = cv2.cvtColor(cv2.flip(image, 1), cv2.COLOR_BGR2RGB)
        # To improve performance, optionally mark the image as not writeable to
        # pass by reference.
        image.flags.writeable = False
        results = hands.process(image)

        # Draw the hand annotations on the image.
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
        if results.multi_hand_landmarks:
            for hand_landmarks in results.multi_hand_landmarks:
                mp_drawing.draw_landmarks(
                    image, hand_landmarks, mp_hands.HAND_CONNECTIONS)
        cv2.imshow('MediaPipe Hands', image)
        # Exit when the Esc key is pressed.
        if cv2.waitKey(5) & 0xFF == 27:
            break
    hands.close()
    cap.release()

Results of the test run:

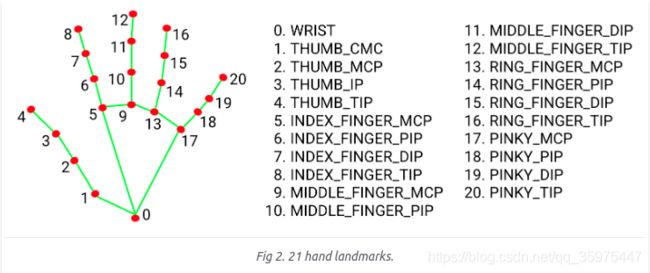

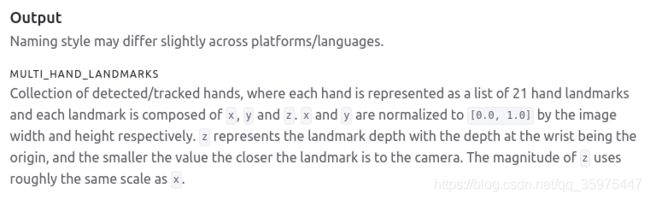

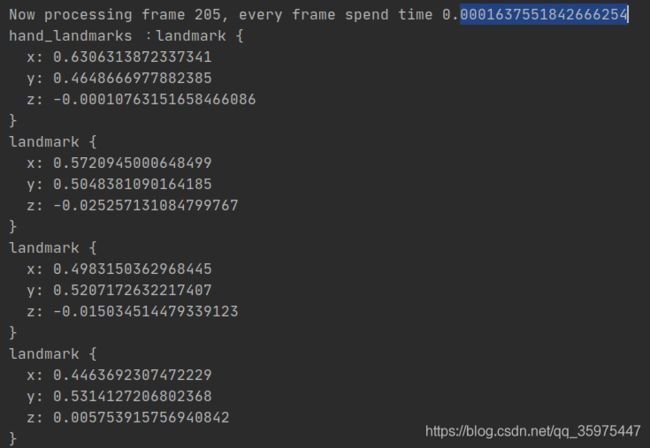
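For machines without a camera, the webcam loop can be pointed at a video file instead. The sketch below shows one way to structure the frame loop so that it stops at the end of the file; iter_frames is a hypothetical helper written for this post, and it assumes only that the capture object offers isOpened(), read() and release(), as cv2.VideoCapture does.

```python
def iter_frames(cap):
    """Yield frames from an opened capture object until the stream ends.

    cap is expected to behave like cv2.VideoCapture. The capture is released
    when iteration finishes or is abandoned.
    """
    try:
        while cap.isOpened():
            success, frame = cap.read()
            if not success:
                # End of a video file. A live camera loop would 'continue'
                # here instead, as in the original example.
                break
            yield frame
    finally:
        cap.release()
```

Usage would be along the lines of for image in iter_frames(cv2.VideoCapture('video.mp4')): ..., where video.mp4 is a placeholder path, with the body of the loop unchanged from the webcam example.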

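3. Interpreting hand_landmarks in the output

Taking the landmark output here as an example: each hand has 21 keypoints. x and y are coordinates normalized by the image width and height respectively, and z is the depth (per the official documentation, relative to the wrist, with smaller values closer to the camera).

Given this, an expression such as hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP].x looks up a keypoint by its name and reads the corresponding coordinate.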
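Because x and y are normalized, they have to be scaled by the image size to obtain pixel positions. A minimal sketch of that conversion follows; to_pixel is a hypothetical helper, and the clamping to the image bounds is an added assumption, since landmarks near the border can fall slightly outside the range 0 to 1.

```python
import math


def to_pixel(x, y, image_width, image_height):
    """Convert normalized landmark coordinates to integer pixel coordinates.

    Returns (column, row), clamped to the valid range of the image.
    """
    col = min(math.floor(x * image_width), image_width - 1)
    row = min(math.floor(y * image_height), image_height - 1)
    return max(col, 0), max(row, 0)


# A landmark at the horizontal centre, a quarter of the way down a 640x480 image.
print(to_pixel(0.5, 0.25, 640, 480))  # (320, 120)
```

In the example code above, the arguments would be the .x and .y fields of a landmark together with image_width and image_height taken from image.shape.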