Python Web Scraping: Parsing Web Pages with XPath and lxml in Practice


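- Introduction to XPath
  - XML vs. HTML
  - Selecting nodes
- Hands-on
  - Scraping the bidding results for scattered purchases in the Guangdong education system
    - UserAgent
    - Proxies
    - Entry point
    - Code logic
    - XPath expressions used and where they come from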

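The data returned by a requests call is still raw. We have to find the pieces worth saving, which means parsing the page content. Common options are regular expressions, XPath, and Beautiful Soup; this post covers XPath.

Introduction to XPath

XPath is a language for locating nodes in an XML document. Before XPath can be applied to HTML, the lxml library is used to parse the HTML into an XML-style element tree.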
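As a minimal sketch of that step (the HTML fragment is made up for illustration), lxml's etree.HTML parses an HTML string into an element tree, adding the missing html and body wrappers, and the resulting tree can then be queried with xpath():

```python
from lxml import etree

# A made-up HTML fragment without <html>/<body> wrappers.
raw_html = "<ul><li class='item'>first</li><li class='item'>second</li></ul>"

# etree.HTML parses the string and returns the root element of the tree.
tree = etree.HTML(raw_html)

# The root is an <html> element even though the fragment did not contain one.
root_tag = tree.tag

# XPath queries run against the tree and return a list of matches.
items = tree.xpath("//li[@class='item']/text()")

print(root_tag, items)
```

Every xpath() call returns a list, even when only one node matches, so the result usually has to be indexed or looped over.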

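XML vs. HTML

| Format | Full name | Description |
|---|---|---|
| XML | Extensible Markup Language | Transports and stores data; the focus is on the data itself |
| HTML | HyperText Markup Language | Displays data; the focus is on how the data is presented |
| HTML DOM | Document Object Model for HTML | Defines a standard way to access and manipulate HTML documents; it represents the document as a tree of objects whose elements and content can be created, read, updated, and deleted |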

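Selecting nodes

XPath uses path expressions to select a node or a set of nodes in an XML document.

It also supports selecting attributes, walking up and down the document tree, and more. For the full syntax, see the post "Python爬虫之Xpath语法" (XPath syntax for Python crawlers).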
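A few of these path expressions can be sketched on a small, made-up document. The element names and attribute values below are illustrative only and do not come from the target site:

```python
from lxml import etree

doc = etree.HTML("""
<div id="list">
  <a class="row" href="/detail/1.html">first</a>
  <a class="row" href="/detail/2.html">second</a>
  <span class="row note">remark</span>
</div>
""")

# Select nodes: every <a> element anywhere in the document.
links = doc.xpath("//a")

# Select attributes: the href value of each <a>.
hrefs = doc.xpath("//a/@href")

# Filter with a predicate, then take the text content.
texts = doc.xpath('//a[@class="row"]/text()')

# Walk the tree: from the second <a> up to its parent, then read the parent's id.
parent_id = doc.xpath("//a[2]/../@id")

# @class="row" compares the whole attribute string, so class="row note"
# is matched with contains() instead.
notes = doc.xpath('//*[contains(@class, "note")]/text()')

print(len(links), hrefs, texts, parent_id, notes)
```

The exact-match behavior of @class="..." matters later in this post: the expressions used against the real pages compare the full class string.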

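Hands-on

Scraping the bidding results for scattered purchases in the Guangdong education system

URL: http://gdedulscg.cn/home/bill/billresult?page=1

The source code comes first, followed by an explanation.

UserAgent

These strings disguise the crawler as a browser. They can also be generated with the fake_useragent or faker library.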

user_agents.py:

agents = ['Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)',

'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1',

'Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1',

'Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; en) Presto/2.8.131 Version/11.11',

'Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; TencentTraveler 4.0)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; The World)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SE 2.X MetaSr 1.0; SE 2.X MetaSr 1.0; .NET CLR 2.0.50727; SE 2.X MetaSr 1.0)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Avant Browser)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)',

'Mozilla/5.0 (iPhone; U; CPU iPhone OS 4_3_3 like Mac OS X; en-us) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8J2 Safari/6533.18.5',

'Mozilla/5.0 (iPod; U; CPU iPhone OS 4_3_3 like Mac OS X; en-us) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8J2 Safari/6533.18.5',

'Mozilla/5.0 (iPad; U; CPU OS 4_3_3 like Mac OS X; en-us) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8J2 Safari/6533.18.5',

'Mozilla/5.0 (Linux; U; Android 2.3.7; en-us; Nexus One Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1',

'MQQBrowser/26 Mozilla/5.0 (Linux; U; Android 2.3.7; zh-cn; MB200 Build/GRJ22; CyanogenMod-7) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1',

'Opera/9.80 (Android 2.3.4; Linux; Opera Mobi/build-1107180945; U; en-GB) Presto/2.8.149 Version/11.10',

'Mozilla/5.0 (Linux; U; Android 3.0; en-us; Xoom Build/HRI39) AppleWebKit/534.13 (KHTML, like Gecko) Version/4.0 Safari/534.13',

'Mozilla/5.0 (BlackBerry; U; BlackBerry 9800; en) AppleWebKit/534.1+ (KHTML, like Gecko) Version/6.0.0.337 Mobile Safari/534.1+',

'Mozilla/5.0 (hp-tablet; Linux; hpwOS/3.0.0; U; en-US) AppleWebKit/534.6 (KHTML, like Gecko) wOSBrowser/233.70 Safari/534.6 TouchPad/1.0',

'Mozilla/5.0 (SymbianOS/9.4; Series60/5.0 NokiaN97-1/20.0.019; Profile/MIDP-2.1 Configuration/CLDC-1.1) AppleWebKit/525 (KHTML, like Gecko) BrowserNG/7.1.18124',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows Phone OS 7.5; Trident/5.0; IEMobile/9.0; HTC; Titan)',

'UCWEB7.0.2.37/28/999',

'NOKIA5700/ UCWEB7.0.2.37/28/999',

'Openwave/ UCWEB7.0.2.37/28/999',

'Mozilla/4.0 (compatible; MSIE 6.0; ) Opera/UCWEB7.0.2.37/28/999',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; InfoPath.2; .NET4.0C; .NET4.0E; .NET CLR 2.0.50727; 360SE)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SE 2.X MetaSr 1.0; SE 2.X MetaSr 1.0; .NET CLR 2.0.50727; SE 2.X MetaSr 1.0)',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11',

'Mozilla/5.0 (Linux; U; Android 2.2.1; zh-cn; HTC_Wildfire_A3333 Build/FRG83D) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1',

'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',

'Mozilla/5.0 (iPhone; U; CPU iPhone OS 4_3_3 like Mac OS X; en-us) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8J2 Safari/6533.18.5',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; TencentTraveler 4.0; .NET CLR 2.0.50727)',

'MQQBrowser/26 Mozilla/5.0 (Linux; U; Android 2.3.7; zh-cn; MB200 Build/GRJ22; CyanogenMod-7) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1',

'Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1',

'Mozilla/5.0 (Android; Linux armv7l; rv:5.0) Gecko/ Firefox/5.0 fennec/5.0',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; The World)',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)',

'Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11',

'Opera/9.80 (Android 2.3.4; Linux; Opera mobi/adr-1107051709; U; zh-cn) Presto/2.8.149 Version/11.10',

'UCWEB7.0.2.37/28/999',

'NOKIA5700/ UCWEB7.0.2.37/28/999',

'Openwave/ UCWEB7.0.2.37/28/999',

'Mozilla/4.0 (compatible; MSIE 6.0; ) Opera/UCWEB7.0.2.37/28/999', ]
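The list above contains several repeated entries, so random.choice picks those strings more often than the others. The sketch below shows one way to deduplicate the list and build request headers from it. The helper names unique_agents and build_headers are hypothetical, and the short agents list is a stand-in for the one in user_agents.py:

```python
import random

# A short stand-in for the `agents` list in user_agents.py.
agents = [
    "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
    "Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11",
    "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",  # duplicate on purpose
]


def unique_agents(candidates):
    """Drop repeated strings while keeping the original order."""
    return list(dict.fromkeys(candidates))


def build_headers(candidates, referer):
    """Pick a random User-Agent and return a headers dict usable with requests."""
    return {"User-Agent": random.choice(candidates), "Referer": referer}


pool = unique_agents(agents)
headers = build_headers(pool, "http://gdedulscg.cn/home/bill/billresult?page=1")
print(len(pool), headers["User-Agent"])
```

Whether deduplication is worth doing depends on the goal; leaving the repeats in simply weights some browsers more heavily.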

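Proxies

If the code runs but nothing seems to happen, the proxies have most likely expired.

Free proxies expire quickly. For a more robust setup you can maintain a proxy pool (a topic for a later post). As a stopgap, search for free proxies (for example on Baidu) and replace the addresses below.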

proxy.py:

proxies = {
    "https": [
        "60.179.201.207:3000",
        "60.179.200.202:3000",
        "60.184.110.80:3000",
        "60.184.205.85:3000",
        "60.188.16.15:3000",
        "60.188.66.158:3000",
        "58.253.155.11:9999",
        "60.188.9.91:3000",
        "60.188.19.174:3000",
        "60.188.11.226:3000",
        "60.188.17.23:3000",
        "61.140.28.228:4216",
        "60.188.1.27:3000"
    ]
}
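One detail worth knowing when using such a dictionary: requests chooses a proxy by the scheme of the URL being requested, so an entry under "https" is only applied to https:// URLs. The sketch below builds the proxies argument from the target URL instead. The helper name proxies_for is hypothetical, and the two addresses are copied from the list above as placeholders, not as working proxies:

```python
import random
from urllib.parse import urlsplit

# Placeholder pool; these addresses are examples and are unlikely to still work.
proxy_pool = ["60.179.201.207:3000", "58.253.155.11:9999"]


def proxies_for(url, pool):
    """Return a requests-style proxies dict keyed by the scheme of `url`."""
    scheme = urlsplit(url).scheme  # "http" or "https"
    return {scheme: "http://" + random.choice(pool)}


page_proxy = proxies_for("http://gdedulscg.cn/home/bill/billresult?page=1", proxy_pool)
print(page_proxy)
```

The returned dict can be passed directly as requests.get(url, proxies=page_proxy).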

Entry point

crawler.py:

import os
import re
import time
import random
import threading

import requests
import pandas as pd
from lxml import etree  # parses HTML into an element tree

from proxy import proxies
from user_agents import agents


class GDEduCrawler:
    def __init__(self):
        self.page = 1  # starting page number
        self.page_url = "http://www.gdedulscg.cn/home/bill/billresult?page={}"  # filled with the page number
        self.detail_url = "http://gdedulscg.cn/home/bill/billdetails/billGuid/{}.html"  # filled with the see_info() argument
        self.detail_patt = re.compile(r"see_info\((\d+)\)")
        # output columns: purchaser, project name, contact, phone, winning bidder
        self.columns = ["采购单位", "项目名称", "联系人", "联系电话", "成交单位"]
        self.result_df = pd.DataFrame(columns=self.columns)  # results live in a DataFrame so they can be exported to Excel
        self.lock = threading.Lock()  # guards result_df so only one thread updates it at a time
        os.makedirs("./results", exist_ok=True)  # to_excel does not create missing directories

    def crawl(self):
        while True:
            try:
                self.get_page()
                self.page += 1  # only advanced on success, so a failed page is retried
                time.sleep(random.random())
                # exit once there is no next page (hard-coded: 1829 pages at crawl time)
                if self.page > 1829:
                    break
                # Save every 100 pages so an interruption does not force a full restart.
                # (Storing crawled and pending URLs would allow resuming; scrapy-redis seems to work that way.)
                if self.page >= 100 and self.page % 100 == 0:
                    self.result_df.to_excel("./results/竞价结果(前{}页).xlsx".format(self.page))
                    print("page {} saved.".format(self.page))
            except Exception as e:
                print(e)
        self.result_df.to_excel("./results/竞价结果.xlsx")

    def send_request(self, url, referer):
        user_agent = random.choice(agents)
        # method = random.choice(["http", "https"])
        method = random.choice(["https"])
        proxy_url = random.choice(proxies[method])
        # Note: requests picks a proxy by the scheme of the target URL,
        # so this "https" entry only applies to https:// URLs.
        proxy = {method: proxy_url}
        headers = {
            "User-Agent": user_agent,
            "Referer": referer
        }
        try:
            response = requests.get(url, headers=headers, proxies=proxy, timeout=10)
        except Exception as e:
            print(e)
            return ""
        print(response.url)
        print(response.status_code)
        return response.text

    def get_page(self):  # request one list page and collect its detail links
        url = self.page_url.format(self.page)
        referer = self.page_url.format(self.page - 1)
        content = self.send_request(url=url, referer=referer)
        self.parse_page(content)

    def get_detail(self, detail_id):  # request and parse one detail page
        url = self.detail_url.format(detail_id)
        referer = self.page_url.format(self.page)  # the list page that linked here
        content = self.send_request(url=url, referer=referer)
        self.parse_detail(content)

    def parse_page(self, content):
        """
        :param content: response.text, the HTML of a list page
        :return:
        """
        html = etree.HTML(content)
        html_data = html.xpath('//*/div[@class="list_title_num_data fl"]/@onclick')
        html_ids = set()
        for h in html_data:
            m = self.detail_patt.search(h)
            if m:  # skip onclick values that are not see_info(...) calls
                html_ids.add(m.group(1))
        # html_ids = set(self.detail_patt.findall(content))  # a plain regex search over the page also works
        threads = []
        for detail_id in html_ids:
            t = threading.Thread(target=self.get_detail, args=(detail_id,))
            t.start()
            threads.append(t)
        for t in threads:
            t.join()  # wait so that saved files include this page's details

    def parse_detail(self, content):
        """
        :param content: response.text, the HTML of a detail page
        :return:
        """
        html = etree.HTML(content)
        html_data = html.xpath('//*/div[@class="bill_info_l2"]')
        data = {}
        for node in html_data:
            key_value = node.text
            if not key_value:
                continue
            con = key_value.split(":", 1)  # split once so colons in the value survive
            key = con[0].strip()
            if len(con) < 2 or key not in self.columns:
                continue
            data[key] = con[1].strip()
        project_name = html.xpath('//*/div[@class="bill_info_l2"]/div/text()')[1]
        data["项目名称"] = project_name
        self.save_information(**data)

    def save_information(self, **kwargs):
        """
        Append one record to the result DataFrame (exported to Excel later).
        :return:
        """
        with self.lock:
            # DataFrame.append was removed in pandas 2.0; pd.concat replaces it
            self.result_df = pd.concat([self.result_df, pd.DataFrame([kwargs])], ignore_index=True)


if __name__ == '__main__':
    crawler = GDEduCrawler()
    crawler.crawl()

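Code logic

The site had 1,829 result pages when this crawl was run.

- get_page() crawls the list pages one by one and calls parse_page() to collect the detail links on each page
- get_detail() requests each detail link, sending the get_page() URL as the Referer
- parse_detail() parses the detail page fetched by get_detail(), extracts the required fields, and stores them in the DataFrame
- finally, pandas writes the DataFrame to an Excel file
- get_page() and get_detail() both use GET; every request goes through send_request(), which sets the Referer and picks a random User-Agent and proxy address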
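The flow in the list above can be sketched without any network access. In this simplified stand-in, a plain list replaces the DataFrame, fetch_detail and handle_page are hypothetical placeholders for get_detail()/parse_detail() and parse_page(), and the IDs are made up:

```python
import threading

rows = []                     # stands in for the result DataFrame
rows_lock = threading.Lock()  # only one thread may append at a time


def fetch_detail(detail_id):
    """Placeholder for get_detail() + parse_detail(); builds a fake record."""
    record = {"id": detail_id, "项目名称": "project {}".format(detail_id)}
    with rows_lock:
        rows.append(record)


def handle_page(detail_ids):
    """Placeholder for parse_page(): one thread per detail link."""
    threads = [threading.Thread(target=fetch_detail, args=(i,)) for i in detail_ids]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait before moving on, so no record is missing when saving


for page_ids in (["101", "102"], ["103"]):  # two made-up list pages
    handle_page(page_ids)

collected = sorted(r["id"] for r in rows)
print(collected)
```

Joining the threads per page keeps the example deterministic; a thread pool would be another reasonable choice for limiting concurrency.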

XPath expressions used and where they come from

The three expressions used appear in parse_page and parse_detail:

    def parse_page(self, content):
        """
        :param content: response.text, the HTML of a list page
        :return:
        """
        html = etree.HTML(content)
        html_data = html.xpath('//*/div[@class="list_title_num_data fl"]/@onclick')
        html_ids = set()
        for h in html_data:
            m = self.detail_patt.search(h)
            if m:  # skip onclick values that are not see_info(...) calls
                html_ids.add(m.group(1))
        # html_ids = set(self.detail_patt.findall(content))  # a plain regex search over the page also works
        threads = []
        for detail_id in html_ids:
            t = threading.Thread(target=self.get_detail, args=(detail_id,))
            t.start()
            threads.append(t)
        for t in threads:
            t.join()  # wait so that saved files include this page's details

    def parse_detail(self, content):
        """
        :param content: response.text, the HTML of a detail page
        :return:
        """
        html = etree.HTML(content)
        html_data = html.xpath('//*/div[@class="bill_info_l2"]')
        data = {}
        for node in html_data:
            key_value = node.text
            if not key_value:
                continue
            con = key_value.split(":", 1)  # split once so colons in the value survive
            key = con[0].strip()
            if len(con) < 2 or key not in self.columns:
                continue
            data[key] = con[1].strip()
        project_name = html.xpath('//*/div[@class="bill_info_l2"]/div/text()')[1]
        data["项目名称"] = project_name
        self.save_information(**data)

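- parse_page

html.xpath('//*/div[@class="list_title_num_data fl"]/@onclick')

//*/div matches every div that is a child of some element, which in practice means every div in the page. The predicate [@class="list_title_num_data fl"] keeps only the divs whose class attribute is exactly "list_title_num_data fl", and @onclick returns the value of their onclick attribute.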

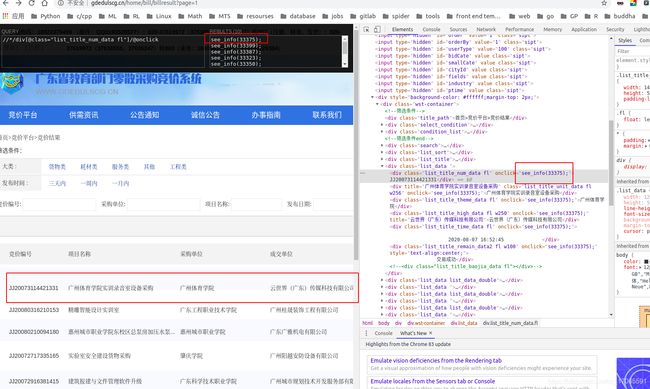

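On the list page, a detail address is not a plain link: the onclick handler passes an argument to JavaScript code, which then opens the detail page.

You can first use Chrome's XPath Helper extension (see https://www.cnblogs.com/MyFlora/archive/2013/07/26/3216448.html) to work out the expression visually on the page.

The argument of see_info() is the ID that appears in the detail page's address.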
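The extraction step can be tried on a made-up fragment. The class name, the see_info() call, and the detail URL template follow the crawler code in this post; the IDs and the surrounding markup are invented for illustration:

```python
import re
from lxml import etree

# Made-up list-page fragment; only the first two divs carry the target class.
page = """
<div class="list_title_num_data fl" onclick="see_info(12345)">Item A</div>
<div class="list_title_num_data fl" onclick="see_info(67890)">Item B</div>
<div class="other" onclick="see_info(11111)">ignored</div>
"""

detail_patt = re.compile(r"see_info\((\d+)\)")
detail_url = "http://gdedulscg.cn/home/bill/billdetails/billGuid/{}.html"

# Step 1: XPath returns the raw onclick strings of the matching divs.
onclicks = etree.HTML(page).xpath('//*/div[@class="list_title_num_data fl"]/@onclick')

# Step 2: the regular expression pulls the numeric argument out of each string.
ids = [m.group(1) for m in map(detail_patt.search, onclicks) if m]

# Step 3: each ID is substituted into the detail URL template.
urls = [detail_url.format(i) for i in ids]
print(urls)
```

XPath narrows the search to the right elements, and the regular expression handles the part XPath cannot: taking a JavaScript call apart.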

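- parse_detail

- html.xpath('//*/div[@class="bill_info_l2"]')

Apart from 项目名称 (project name), the other four fields can all be extracted with this single expression.

- html.xpath('//*/div[@class="bill_info_l2"]/div/text()')[1]

项目名称 cannot be obtained with the expression above because its markup is different: the label 项目名称 and the value 广州体育学院实训录音室设备采购 sit in two separate child divs, whereas each of the other four fields has its label and value in the same div.

html.xpath('//*/div[@class="bill_info_l2"]/div/text()') returns a list, ["项目名称:", "广州体育学院实训录音室设备采购"], so taking index 1 gives the project name.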
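The difference between the two cases shows up clearly on a small fragment. The markup below is an assumption reconstructed from the description above, not a copy of the real page, and the purchaser and contact values are invented:

```python
from lxml import etree

# Assumed structure: most fields keep label and value in one div,
# while 项目名称 splits them across two child divs.
detail = """
<div class="bill_info_l2">采购单位:广州体育学院</div>
<div class="bill_info_l2">联系人:张三</div>
<div class="bill_info_l2"><div>项目名称:</div><div>广州体育学院实训录音室设备采购</div></div>
"""
columns = ["采购单位", "项目名称", "联系人", "联系电话", "成交单位"]

html = etree.HTML(detail)
data = {}
for node in html.xpath('//*/div[@class="bill_info_l2"]'):
    text = node.text  # text directly inside the div, before any child element
    if not text:
        continue  # the 项目名称 div starts with a child div, so .text is None
    key, sep, value = text.partition(":")
    if sep and key.strip() in columns:
        data[key.strip()] = value.strip()

# The second expression descends one level to reach the child divs' text.
name_parts = html.xpath('//*/div[@class="bill_info_l2"]/div/text()')
data["项目名称"] = name_parts[1]
print(data)
```

If the real page uses a full-width colon (:) in its labels, the separator passed to partition() would need to change accordingly.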