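How to Migrate from AWS S3 to IBM COS with rclone - Part 1

Cloud object storage is now widely used as a mainstream public-cloud data storage service. However, because it is accessed over HTTP/HTTPS (a RESTful API), uses a flat data structure, and depends on the network, mounting it as a file system through a tool such as s3fs comes with limitations in some file archiving and backup scenarios, for example large file transfers or high-frequency I/O. Taking IBM Cloud Object Storage (ICOS) as an example, we use rclone and the ICOS API to achieve more stable file transfers and bandwidth control.

We will show how to install rclone, the configuration settings for accessing both storage services (AWS S3 and ICOS), and the commands that can be used to sync files.

Before installing and configuring rclone to copy objects, we need some information about our Amazon S3 and IBM Cloud Object Storage accounts: a set of API keys that the tool can use for each service, and the region and location constraint values of our buckets.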

Generating an Amazon S3 API Key

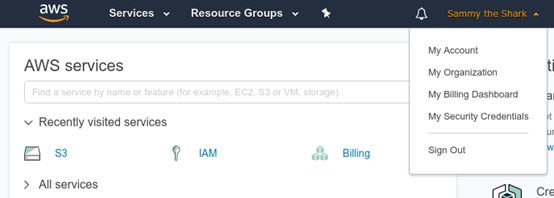

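If you do not already have an Amazon API key with permission to manage S3 assets, generate one now. In the AWS Management Console, click your account name and select My Security Credentials from the drop-down menu: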

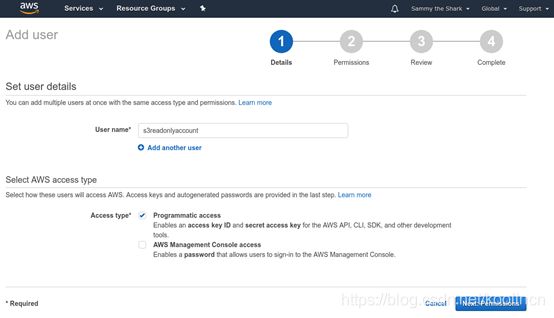

Type a user name and select Programmatic access in the Access type section. Click the Next: Permissions button to continue:

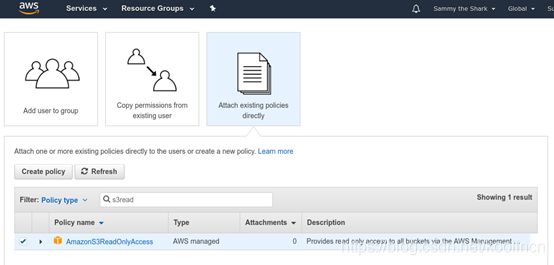

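On the following page, select the Attach existing policies directly option at the top, then type s3read in the Policy type filter. Check the AmazonS3ReadOnlyAccess policy box and click the Next: Review button to continue: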

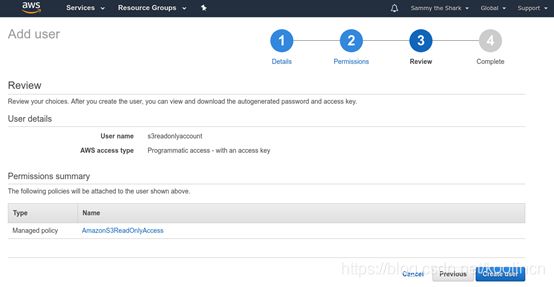

Review the user details on the next page, then click the Create user button when you are ready:

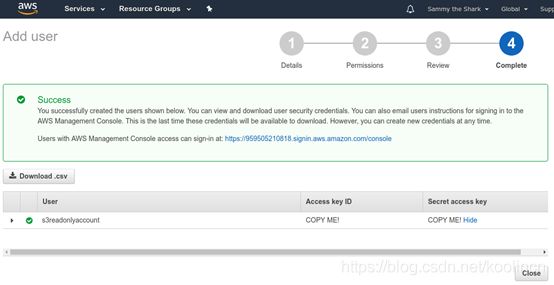

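On the final page you will see the credentials for the new user. Click the Show link under the Secret access key column to view them:

Copy the Access key ID and the Secret access key somewhere safe so that you can configure rclone to use them. You can also click the Download .csv button to save the credentials to your computer.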

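Finding the Amazon S3 Bucket Region and Location Constraint

Now we need to find the region and location constraint values for our S3 bucket.

Click Services in the top menu, then type S3 in the search bar that appears. Select the S3 service to go to the S3 management console.

We need the region name of the bucket we want to transfer. The region is displayed next to the bucket name:

We need the region string and the matching location constraint for our region. Look up your bucket's region name in Amazon's S3 region chart to find the appropriate region and location constraint strings. In our example the region name is "US East (N. Virginia)", so we use us-east-1 as the region string and our location constraint is blank.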
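The lookup described above can be sketched as a small shell helper. This is only an illustration: the function name is hypothetical, and it relies on the general S3 convention that us-east-1 has an empty location constraint while other regions use their region code. Check the result against Amazon's chart for your own bucket.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the S3 location constraint for an AWS region code.
# Assumption: us-east-1 has an empty location constraint; for other regions
# the constraint is the same string as the region code.
aws_location_constraint() {
  local region="$1"
  if [ "$region" = "us-east-1" ]; then
    printf '\n'
  else
    printf '%s\n' "$region"
  fi
}

aws_location_constraint us-east-1        # prints an empty line
aws_location_constraint ap-southeast-1   # prints ap-southeast-1
```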

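Now that we have the relevant information from our Amazon account, we can use it to install and configure rclone.

Deploy a virtual or bare-metal server in IBM Cloud on which to install and configure rclone (the deployment steps are omitted here).

Generate the access_key_id and secret_access_key that rclone needs to connect to IBM COS:

On the IBM Cloud Dashboard, under resources, find the object storage instance whose data you want to sync.

Click the storage instance name to open its COS page in the IBM portal, then click Service credentials, as shown: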

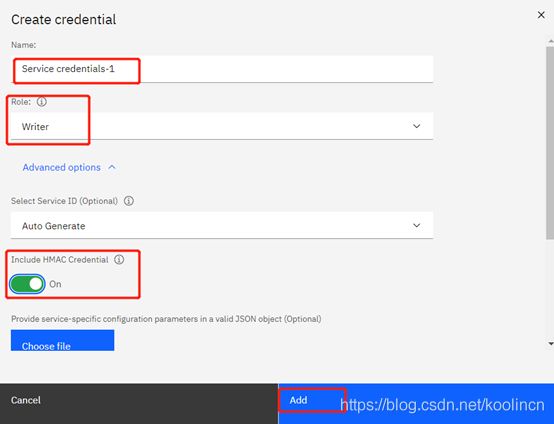

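If no service credential exists, create a new one; otherwise you can use an existing one. Here we create a new one:

[Note: "Include HMAC Credential" must be enabled here.]

Then click the copy icon on the right and paste the credential contents into a text editor. They contain the ID and key needed for the rclone configuration below.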
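If the copied credential is saved to a file, the two values can be pulled out with a short helper instead of being picked out by eye. This is a rough sketch under stated assumptions: the function name and the file name cos-credential.json are hypothetical, and it assumes the credential is JSON with one "key": "value" pair per line, with the HMAC pair stored in fields named access_key_id and secret_access_key.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of one string field from a saved
# service-credential JSON file.
# Assumption: each "key": "value" pair sits on its own line.
cos_credential_field() {
  local field="$1" file="$2"
  sed -n 's/.*"'"$field"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$file" | head -n 1
}

# Usage (file name is an assumption):
#   cos_credential_field access_key_id cos-credential.json
#   cos_credential_field secret_access_key cos-credential.json
```

A line-based sed match is used here only to avoid extra dependencies. A real JSON parser is more robust if one is available on the host.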


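Click the relevant bucket to open its details page, then click Configuration to get the bucket's region and related information.

Installing and Configuring rclone

Official IBM Cloud documentation for configuring the connection:

https://cloud.ibm.com/docs/cloud-object-storage?topic=cloud-object-storage-rclone

The installation and configuration steps are as follows: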

[root@centos-s3fs ~]# yum install -y unzip

[root@centos-s3fs ~]# curl https://rclone.org/install.sh | sudo bash

…

rclone v1.53.3 has successfully installed.

Now run "rclone config" for setup. Check https://rclone.org/docs/ for more details.

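We can configure rclone interactively with the rclone config command, or edit the rclone configuration file directly: rclone.conf in the current user's .config/rclone/ directory (the file exists only if rclone has been configured before). The following walks through the interactive configuration of the IBM COS connection; AWS is similar: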

[root@centos-s3fs ~]# rclone config

2020/12/19 09:55:26 NOTICE: Config file "/root/.config/rclone/rclone.conf" not found - using defaults

No remotes found - make a new one

n) New remote

s) Set configuration password

q) Quit config

n/s/q> n

name> icos-test

(Give the remote being configured a name.)

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

2 / Alias for an existing remote

\ "alias"

3 / Amazon Drive

\ "amazon cloud drive"

4 / Amazon S3 Compliant Storage Provider (AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, Tencent COS, etc)

\ "s3"

... ...

Storage> 4

** See help for s3 backend at: https://rclone.org/s3/ **

Choose your S3 provider.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amazon Web Services (AWS) S3

\ "AWS"

2 / Alibaba Cloud Object Storage System (OSS) formerly Aliyun

\ "Alibaba"

3 / Ceph Object Storage

\ "Ceph"

4 / Digital Ocean Spaces

\ "DigitalOcean"

5 / Dreamhost DreamObjects

\ "Dreamhost"

6 / IBM COS S3

\ "IBMCOS"

... ...

provider> 6

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Enter a boolean value (true or false). Press Enter for the default ("false").

Choose a number from below, or type in your own value

1 / Enter AWS credentials in the next step

\ "false"

2 / Get AWS credentials from the environment (env vars or IAM)

\ "true"

env_auth> 1

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

access_key_id> xxxxxxxx [the access_key_id from the IBM COS service credential recorded earlier]

AWS Secret Access Key (password)

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

secret_access_key> xxxxxxxxxx [the secret_access_key from the IBM COS service credential recorded earlier]

Region to connect to.

Leave blank if you are using an S3 clone and you don't have a region.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Use this if unsure. Will use v4 signatures and an empty region.

\ ""

2 / Use this only if v4 signatures don't work, eg pre Jewel/v10 CEPH.

\ "other-v2-signature"

region> 2

Endpoint for IBM COS S3 API.

Specify if using an IBM COS On Premise.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / US Cross Region Endpoint

\ "s3.us.cloud-object-storage.appdomain.cloud"

2 / US Cross Region Dallas Endpoint

\ "s3.dal.us.cloud-object-storage.appdomain.cloud"

3 / US Cross Region Washington DC Endpoint

\ "s3.wdc.us.cloud-object-storage.appdomain.cloud"

4 / US Cross Region San Jose Endpoint

\ "s3.sjc.us.cloud-object-storage.appdomain.cloud"

5 / US Cross Region Private Endpoint

\ "s3.private.us.cloud-object-storage.appdomain.cloud"

... ...

endpoint> s3.private.us.cloud-object-storage.appdomain.cloud

Location constraint - must match endpoint when using IBM Cloud Public.

For on-prem COS, do not make a selection from this list, hit enter

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / US Cross Region Standard

\ "us-standard"

2 / US Cross Region Vault

\ "us-vault"

3 / US Cross Region Cold

\ "us-cold"

4 / US Cross Region Flex

\ "us-flex"

5 / US East Region Standard

\ "us-east-standard"

6 / US East Region Vault

\ "us-east-vault"

... ...

location_constraint>

Canned ACL used when creating buckets and storing or copying objects.

This ACL is used for creating objects and if bucket_acl isn't set, for creating buckets too.

For more info visit https://docs.aws.amazon.com/AmazonS3/latest/dev/acl-overview.html#canned-acl

Note that this ACL is applied when server side copying objects as S3

doesn't copy the ACL from the source but rather writes a fresh one.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Owner gets FULL_CONTROL. No one else has access rights (default). This acl is available on IBM Cloud (Infra), IBM Cloud (Storage), On-Premise COS

\ "private"

2 / Owner gets FULL_CONTROL. The AllUsers group gets READ access. This acl is available on IBM Cloud (Infra), IBM Cloud (Storage), On-Premise IBM COS

\ "public-read"

3 / Owner gets FULL_CONTROL. The AllUsers group gets READ and WRITE access. This acl is available on IBM Cloud (Infra), On-Premise IBM COS

\ "public-read-write"

4 / Owner gets FULL_CONTROL. The AuthenticatedUsers group gets READ access. Not supported on Buckets. This acl is available on IBM Cloud (Infra) and On-Premise IBM COS

\ "authenticated-read"

acl> 2

Edit advanced config? (y/n)

y) Yes

n) No (default)

y/n> n

Remote config

--------------------

[icos-test]

type = s3

provider = IBMCOS

env_auth = false

access_key_id = xxx

secret_access_key = xxx

region = other-v2-signature

endpoint = s3.private.us.cloud-object-storage.appdomain.cloud

acl = public-read

--------------------

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d> y

Current remotes:

Name Type

==== ====

icos-test s3

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q>

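This completes the rclone setup. The configuration above is saved in rclone.conf. If there are multiple clients, you can deploy the same configuration file to the other Linux hosts instead of configuring each one individually.

The contents of rclone.conf are as follows: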

[root@centos-s3fs ~]# cat .config/rclone/rclone.conf

[icos-test]

type = s3

provider = IBMCOS

env_auth = false

access_key_id = xxx

secret_access_key = xxx

region = other-v2-signature

endpoint = s3.private.us.cloud-object-storage.appdomain.cloud

acl = public-read

[aws-s3]

type = s3

provider = AWS

env_auth = false

access_key_id = aws_access_key (obtained earlier)

secret_access_key = aws_secret_key (obtained earlier)

region = aws_region

location_constraint = aws_location_constraint

acl = public-read

If you are familiar with the rclone configuration file, you can also edit it directly to add other object storage services.
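As a sketch of the direct-edit route, a remote stanza can be appended with a here-document. The values are the ones shown in the rclone.conf listing above. The CONF variable is an assumption of this example: it defaults to a scratch file so the snippet is safe to try, and would be pointed at ~/.config/rclone/rclone.conf for real use.

```shell
#!/usr/bin/env bash
# Sketch: append a remote stanza to an rclone config file without the wizard.
# CONF defaults to a scratch file (an assumption made for this example).
CONF="${CONF:-$(mktemp)}"

cat >> "$CONF" <<'EOF'
[icos-test]
type = s3
provider = IBMCOS
env_auth = false
access_key_id = xxx
secret_access_key = xxx
region = other-v2-signature
endpoint = s3.private.us.cloud-object-storage.appdomain.cloud
acl = public-read
EOF

# The file holds secrets, so restrict it to the owning user.
chmod 600 "$CONF"

# List the section headers now defined in the file.
grep '^\[' "$CONF"
```

To confirm that rclone sees the remote, the file can be passed explicitly, for example `rclone --config "$CONF" listremotes`.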

A simple test, uploading a 100G local file to IBM COS:

[root@centos-s3fs ~]# rclone copy /data/100G.file icos-test:dallas-cos01

[root@centos-s3fs ~]# rclone lsd icos-test:

-1 2020-12-18 03:52:07 -1 dallas-cos01

-1 2020-09-09 03:30:40 -1 liutao-cos

-1 2020-11-25 12:56:39 -1 mariadb-backup

-1 2020-05-21 13:52:16 -1 video-on-demand

[root@centos-s3fs ~]# rclone ls icos-test:dallas-cos01

107374182400 100G.file

[root@centos-s3fs ~]# rclone delete icos-test:dallas-cos01/100G.file

(Note) [dallas-cos01 is the name of the object storage bucket, which was noted down earlier when viewing the bucket's configuration.]
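With both remotes configured, a bucket-to-bucket transfer might look like the sketch below. The source bucket name my-s3-bucket is hypothetical, and the run wrapper is an addition of this example: it only prints each command unless EXECUTE=1 is set, so nothing is transferred by accident. rclone copy is used because it never deletes objects at the destination; rclone sync would also remove destination objects that are missing from the source.

```shell
#!/usr/bin/env bash
# Print the command by default; set EXECUTE=1 to actually run it.
run() {
  if [ "${EXECUTE:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# Assumption: my-s3-bucket is a placeholder for the real source bucket.
SRC="aws-s3:my-s3-bucket"
DST="icos-test:dallas-cos01"

run rclone copy "$SRC" "$DST" --dry-run   # preview what would be transferred
run rclone copy "$SRC" "$DST" -P          # transfer with progress output
run rclone check "$SRC" "$DST"            # compare source and destination afterwards
```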

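If the Linux host is also running other workloads, rclone will inevitably compete for some of the NIC's outbound bandwidth. Transfer bandwidth can be controlled with the following three parameters: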

• --s3-chunk-size=16M # chunk size for multipart uploads

• --s3-upload-concurrency=10 # number of concurrent upload connections

• --bwlimit="08:00,20M 12:00,30M 13:00,50M 18:00,80M 23:00,off" # bandwidth limit that changes on a timetable
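The three flags can be combined in a single invocation. The wrapper below is a minimal sketch: the function name and the DRY switch are assumptions of this example, and the flag values are simply the ones listed above. Note that --bwlimit values are in bytes per second (20M means roughly 20 MiB/s), not bits.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: copy one local path to a remote with all three limits applied.
# Set DRY=1 to print the command instead of running it.
throttled_copy() {
  local src="$1" dst="$2"
  set -- \
    --s3-chunk-size=16M \
    --s3-upload-concurrency=10 \
    --bwlimit="08:00,20M 12:00,30M 13:00,50M 18:00,80M 23:00,off"
  ${DRY:+echo} rclone -P copy "$src" "$dst" "$@"
}

# Print the full command line without transferring anything.
DRY=1 throttled_copy /data/100G.file icos-test:dallas-cos01
```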

rclone-test-1:

[root@centos-s3fs ~]# rclone -P copy /data/100G.file icos-test:dallas-cos01 --s3-chunk-size=52M --s3-upload-concurrency=15

Because of the machine's resource limits, although we set a concurrency of 15, the system actually sustained only 12 connections:

[root@centos-s3fs ~]# netstat -anp |grep 10.1.129.58 | wc -l

12
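The grep above counts every netstat line that mentions the address, including sockets in states such as TIME_WAIT. A slightly stricter count, sketched here with a hypothetical helper, keeps only ESTABLISHED connections whose remote address is the given IP. It assumes the usual netstat column layout, with the foreign address in column 5 and the state in column 6.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count ESTABLISHED TCP connections to one remote IP.
# Reads netstat-style lines on stdin; $1 is the remote IP address.
count_established() {
  awk -v ip="$1" '$6 == "ESTABLISHED" && index($5, ip ":") == 1 { n++ } END { print n + 0 }'
}

# Usage:
#   netstat -ant | count_established 10.1.129.58
```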

52M chunk size + concurrency 15: the transfer rate averaged 220 MB/s, which is essentially the limit of the current instance.

rclone-test-2:

[root@centos-s3fs ~]# rclone -P copy /data/100G.file icos-test:dallas-cos01 --s3-chunk-size=16M --s3-upload-concurrency=10

# With concurrency lowered to 10, the check shows 10 transfer connections

[root@centos-s3fs ~]# netstat -anp |grep 10.1.129.58 | wc -l

10

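16M chunk size + concurrency 10: the transfer rate dropped to about 120 MB/s. By tuning these two parameters together with the file size, you can find the best settings for the current instance.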
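When weighing the two settings against file size, it can help to estimate how many multipart parts a file will need and how much buffer memory one upload holds. The helpers below are a back-of-the-envelope sketch with hypothetical names. They assume that buffer memory for a single file is roughly chunk size times upload concurrency, and that the part count should stay under the S3 multipart limit of 10,000 parts.

```shell
#!/usr/bin/env bash
# Number of multipart parts for a file: ceil(size_bytes / chunk_bytes).
# $1 = file size in bytes, $2 = chunk size in MiB.
parts_needed() {
  local size_bytes="$1" chunk_mib="$2"
  local chunk_bytes=$(( chunk_mib * 1024 * 1024 ))
  echo $(( (size_bytes + chunk_bytes - 1) / chunk_bytes ))
}

# Approximate upload buffer memory in MiB for one file.
# $1 = chunk size in MiB, $2 = upload concurrency.
buffer_mib() {
  echo $(( $1 * $2 ))
}

parts_needed 107374182400 52   # 1970 parts for the 100G file in test 1
parts_needed 107374182400 16   # 6400 parts for the same file in test 2
buffer_mib 52 15               # 780 MiB of buffers in test 1
buffer_mib 16 10               # 160 MiB of buffers in test 2
```

Both test configurations stay well under 10,000 parts for the 100G file. The larger chunk size trades more memory for fewer, bigger requests.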