Kafka producer and consumer Java API demo

I wrote a small Kafka demo in Java and am writing it down here. It is meant for reference only.

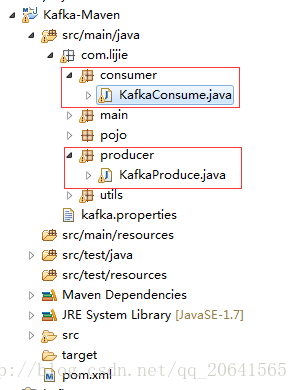

- 1. Create a Maven project

- 2. pom file:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>Kafka-Maven</groupId>
    <artifactId>Kafka-Maven</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <dependencies>
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka_2.11</artifactId>
            <version>0.10.1.1</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.2.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.2.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.2.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>1.0.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-server</artifactId>
            <version>1.0.3</version>
        </dependency>
        <dependency>
            <groupId>jdk.tools</groupId>
            <artifactId>jdk.tools</artifactId>
            <version>1.7</version>
            <scope>system</scope>
            <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpclient</artifactId>
            <version>4.3.6</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>1.7</source>
                    <target>1.7</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

- 3. Kafka producer KafkaProduce (uses the new Java client, `org.apache.kafka.clients.producer.KafkaProducer`):

```java
package com.lijie.producer;

import java.io.IOException;
import java.io.InputStream;
import java.util.Properties;

import org.apache.kafka.clients.producer.Callback;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;

public class KafkaProduce {

    private static final Properties properties = new Properties();

    static {
        // Load kafka.properties from the root of the classpath
        try (InputStream in = KafkaProduce.class.getClassLoader()
                .getResourceAsStream("kafka.properties")) {
            if (in == null) {
                throw new IllegalStateException("kafka.properties not found on the classpath");
            }
            properties.load(in);
        } catch (IOException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    /**
     * Send a message.
     *
     * @param topic target topic
     * @param key   message key (may be null)
     * @param value message body
     */
    public void sendMsg(String topic, byte[] key, byte[] value) {
        // Create the producer (a long-running application would create one and reuse it)
        KafkaProducer<byte[], byte[]> kp = new KafkaProducer<byte[], byte[]>(properties);
        try {
            // Wrap the message
            ProducerRecord<byte[], byte[]> pr = new ProducerRecord<byte[], byte[]>(topic, key, value);
            // Send asynchronously; the callback runs when the send succeeds or fails
            kp.send(pr, new Callback() {
                @Override
                public void onCompletion(RecordMetadata metadata, Exception exception) {
                    if (exception != null) {
                        // metadata is null when the send failed, so only report the error
                        exception.printStackTrace();
                    } else {
                        System.out.println("Record written at offset: " + metadata.offset());
                    }
                }
            });
        } finally {
            // close() waits for pending sends to complete before returning
            kp.close();
        }
    }
}
```
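
`sendMsg` takes a key, and with the new producer a non-null key decides the partition: the default partitioner hashes the key bytes, so every record with the same key goes to the same partition. Below is a minimal stdlib-only sketch of that rule, assuming it matches the 0.10 client's `DefaultPartitioner` for non-null keys (murmur2 hash of the key bytes, sign bit cleared, modulo the partition count). It is an illustration, not Kafka's own code; the class name `KeyPartitionSketch`, the sample keys and the partition count of 3 are made up.

```java
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical sketch of key-based partition selection, assumed to mirror the
 * 0.10 producer's DefaultPartitioner for non-null keys.
 */
public class KeyPartitionSketch {

    /** 32-bit murmur2 hash with the seed used by Kafka's client utilities. */
    static int murmur2(byte[] data) {
        int length = data.length;
        int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;

        int h = seed ^ length;
        int length4 = length / 4;

        // Mix 4 bytes at a time, read little-endian
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }

        // Mix the remaining 0-3 bytes (the fall-through is intentional)
        switch (length % 4) {
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
        }

        // Final avalanche so nearby inputs spread out
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    /** Partition for a non-null key: clear the sign bit, then take the remainder. */
    static int partitionFor(byte[] key, int numPartitions) {
        return (murmur2(key) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int numPartitions = 3; // assumed partition count for topic "lijietest"
        for (String k : new String[] {"user-1", "user-2", "user-1"}) {
            byte[] key = k.getBytes(StandardCharsets.UTF_8);
            System.out.println(k + " -> partition " + partitionFor(key, numPartitions));
        }
    }
}
```

The two `user-1` lines always print the same partition. A null key skips the hash; the 0.10 producer then spreads such records across the available partitions in round-robin fashion.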

- 4. Kafka consumer KafkaConsume (uses the old ZooKeeper-based high-level consumer API that ships in `kafka_2.11`):

```java
package com.lijie.consumer;

import java.io.IOException;
import java.io.InputStream;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

import com.lijie.pojo.User;       // the author's own POJO (not shown in this post)
import com.lijie.utils.JsonUtils; // the author's own JSON helper (not shown in this post)

import kafka.consumer.ConsumerConfig;
import kafka.consumer.ConsumerIterator;
import kafka.consumer.KafkaStream;
import kafka.javaapi.consumer.ConsumerConnector;
import kafka.serializer.StringDecoder;
import kafka.utils.VerifiableProperties;

public class KafkaConsume {

    private static final String TOPIC = "lijietest";

    private static final Properties properties = new Properties();

    static {
        // Load kafka.properties from the root of the classpath
        try (InputStream in = KafkaConsume.class.getClassLoader()
                .getResourceAsStream("kafka.properties")) {
            if (in == null) {
                throw new IllegalStateException("kafka.properties not found on the classpath");
            }
            properties.load(in);
        } catch (IOException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    /**
     * Fetch messages. Blocks and keeps reading until the process is stopped.
     *
     * @throws Exception
     */
    public void getMsg() throws Exception {
        ConsumerConfig config = new ConsumerConfig(properties);
        ConsumerConnector consumer = kafka.consumer.Consumer.createJavaConsumerConnector(config);

        // Ask for one stream (one consuming thread) for the topic
        Map<String, Integer> topicCountMap = new HashMap<String, Integer>();
        topicCountMap.put(TOPIC, 1);

        // Decode keys and values as strings (UTF-8 unless serializer.encoding is set)
        StringDecoder keyDecoder = new StringDecoder(new VerifiableProperties());
        StringDecoder valueDecoder = new StringDecoder(new VerifiableProperties());

        Map<String, List<KafkaStream<String, String>>> consumerMap =
                consumer.createMessageStreams(topicCountMap, keyDecoder, valueDecoder);
        KafkaStream<String, String> stream = consumerMap.get(TOPIC).get(0);
        ConsumerIterator<String, String> it = stream.iterator();

        // hasNext() blocks until the next message arrives
        while (it.hasNext()) {
            String json = it.next().message();
            User user = (User) JsonUtils.JsonToObj(json, User.class);
            System.out.println(user);
        }
    }
}
```
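
On the wire the producer only sends `byte[]`, while the consumer turns the value back into a `String` with `StringDecoder`, which decodes as UTF-8 unless `serializer.encoding` is configured. Whatever code calls `sendMsg` with the JSON of a `User` should therefore encode it as UTF-8 explicitly; plain `String.getBytes()` uses the platform default charset, which is not necessarily UTF-8. A small stdlib sketch of that contract follows; `EncodingSketch`, its two helpers and the sample JSON are hypothetical (the real `User` fields are not shown in this post).

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical sketch of the encoding contract between the demo's producer
 * (sends raw bytes) and consumer (StringDecoder, UTF-8 by default).
 */
public class EncodingSketch {

    /** What a caller of sendMsg would pass as the value. */
    static byte[] encodeValue(String json) {
        return json.getBytes(StandardCharsets.UTF_8);
    }

    /** Decode the bytes with the given charset, as the consumer side would. */
    static String decodeValue(byte[] bytes, Charset charset) {
        return new String(bytes, charset);
    }

    public static void main(String[] args) {
        // The name is "张三", written with Unicode escapes to avoid source-encoding issues
        String json = "{\"name\":\"\u5f20\u4e09\",\"age\":18}";
        byte[] value = encodeValue(json);

        // Same charset on both sides: the JSON comes back unchanged
        System.out.println(json.equals(decodeValue(value, StandardCharsets.UTF_8)));

        // Mismatched charset: the Chinese characters come back garbled
        System.out.println(json.equals(decodeValue(value, StandardCharsets.ISO_8859_1)));
    }
}
```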

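- 5. kafka.properties file (shared by the producer and the consumer; both load it from the root of the classpath, e.g. `src/main/resources` in a Maven project):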

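```properties
## producer: read by the new Java client org.apache.kafka.clients.producer.KafkaProducer
bootstrap.servers=192.168.80.123:9092
# Old Scala-producer settings; the new KafkaProducer ignores them
producer.type=sync
request.required.acks=1
serializer.class=kafka.serializer.DefaultEncoder
# acks is the new-producer equivalent of request.required.acks (1 is also its default in 0.10)
acks=1
key.serializer=org.apache.kafka.common.serialization.ByteArraySerializer
value.serializer=org.apache.kafka.common.serialization.ByteArraySerializer
# Parked alternatives: the "bak." prefix means no client reads these keys
bak.partitioner.class=kafka.producer.DefaultPartitioner
bak.key.serializer=org.apache.kafka.common.serialization.StringSerializer
bak.value.serializer=org.apache.kafka.common.serialization.StringSerializer

## consumer: read by the old ZooKeeper-based high-level consumer (kafka.consumer.ConsumerConfig)
zookeeper.connect=192.168.80.123:2181
group.id=lijiegroup
zookeeper.session.timeout.ms=4000
zookeeper.sync.time.ms=200
auto.commit.interval.ms=1000
# smallest: start from the earliest available offset when the group has no committed offset
auto.offset.reset=smallest
serializer.class=kafka.serializer.StringEncoder
```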
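
Because the producer and the consumer share this one file, `serializer.class` appears twice. `java.util.Properties.load` keeps the last value for a repeated key, so the loaded `Properties` holds `kafka.serializer.StringEncoder`; neither the new producer nor the old high-level consumer reads that key, so here it is harmless. A quick check with the same parsing rules, inlining part of the file as a string (`PropertiesDuplicateSketch` is a hypothetical name):

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

/** Hypothetical check of how Properties handles a key that appears twice. */
public class PropertiesDuplicateSketch {

    /** Parse properties text with the same rules used for kafka.properties. */
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            // A StringReader does not fail in practice; rethrow just in case
            throw new IllegalStateException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        String text = "##produce\n"
                + "serializer.class=kafka.serializer.DefaultEncoder\n"
                + "##consume\n"
                + "serializer.class=kafka.serializer.StringEncoder\n";
        Properties props = load(text);
        // The later occurrence overwrites the earlier one
        System.out.println(props.getProperty("serializer.class")); // prints kafka.serializer.StringEncoder
    }
}
```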