Installing a Hadoop 2.8.5 Distributed Cluster on CentOS 7.4

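1. Environment

- Three machines with CentOS 7.4 installed

- A static IP configured on each machine, with access to the external network; see the author's blog post https://blog.csdn.net/zhi_zixing/article/details/100437178

- All three machines can ping one another

2. Environment preparation (perform on every node)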

-

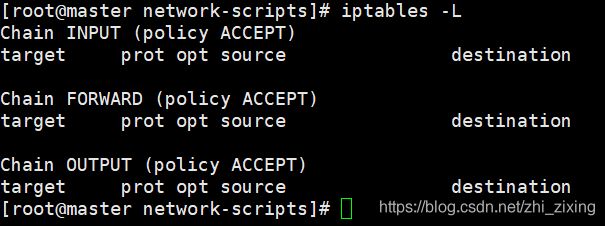

关闭防火墙

systemctl stop firewalld.service #停止防火墙

systemctl disable firewalld.service #永久关闭防护墙

iptables -L #查看防火墙状态

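- Disable SELinux

In /etc/sysconfig/selinux, change SELINUX=enforcing to SELINUX=disabled. The change takes effect only after a reboot.

reboot #takes effect after the VM restarts; you can wait until the hostname has been changed and reboot once

sestatus #check the SELinux status; shows disabled after the reboot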
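The SELinux edit above can also be scripted. This is a minimal sketch, assuming GNU sed and root privileges; the function name `disable_selinux_config` is hypothetical. It defaults to /etc/selinux/config on the assumption that /etc/sysconfig/selinux is a symlink to that file, as it normally is on CentOS 7. `sed -i` replaces the file it edits, so pointing it at the symlink would turn the symlink into a regular file and leave the real config unchanged.

```shell
# Hypothetical helper: rewrite the SELINUX= line in an SELinux config file.
# The default path is an assumption; pass another path as the first argument.
disable_selinux_config() {
    local cfg="${1:-/etc/selinux/config}"
    # Match only the SELINUX= line; SELINUXTYPE= is left untouched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage (as root), followed by a reboot:
#   disable_selinux_config
#   grep '^SELINUX=' /etc/selinux/config
```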

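- Set the hostname and map hostnames to IPs

On CentOS 7 the hostname is stored in /etc/hostname; the HOSTNAME entry in /etc/sysconfig/network that CentOS 6 used is no longer read. Set it with hostnamectl:

hostnamectl set-hostname master #use slave1 and slave2 on the other two nodes

Edit /etc/hosts and append

192.168.44.201 master

192.168.44.202 slave1

192.168.44.203 slave2

Note: after the change, log in again or reboot to see the new hostname. Every node must have a different hostname.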
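Since the same three mappings have to be added on every node, appending them idempotently avoids duplicate lines when the step is repeated. A minimal sketch follows; the function name `add_host_entry` is hypothetical, and the hostname is used as a plain grep pattern, so it is only meant for simple names like the ones in this post.

```shell
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname is already mapped there. Third argument defaults to /etc/hosts.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # A hostname counts as present if it appears as a whole field after whitespace.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root, on each node):
#   add_host_entry 192.168.44.201 master
#   add_host_entry 192.168.44.202 slave1
#   add_host_entry 192.168.44.203 slave2
```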

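- Create a user for installing Hadoop. The root user would also work, but it is not recommended because running everything with root privileges is unsafe.

useradd hadoop

passwd hadoop #set a password for hadoop; ssh-copy-id asks for it when setting up passwordless SSH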

-

配置ssh免密互信

编辑/etc/ssh/sshd_config打开sshd服务

RSAAuthentication yes #如果没有可以手动添加

PubkeyAuthentication yes #去掉注释

AuthorizedKeysFile .ssh/authorized_keys

重启sshd服务

service sshd restart

给hadoop用户设置免密登录

su - hadoop

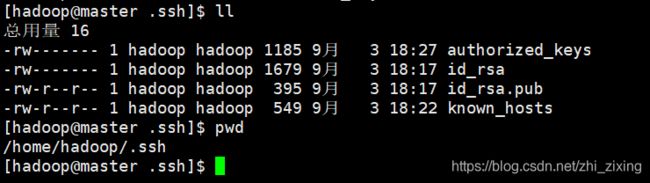

ssh-keygen -t rsa #生成公钥,秘钥,在/home/hadoop/.ssh/下可以看到

ssh-copy-id master #给master节点分发公钥

ssh-copy-id slave1 #给master节点分发公钥

ssh-copy-id slave2 #给master节点分发公钥

ssh slave1

测试是否成功,如果不需要密码就能到另外两个节点,则说明没有问题,三个节点相互测试

[hadoop@slave1 ~]$ ssh master

Last login: Tue Sep 3 18:28:04 2019 from 192.168.44.203

[hadoop@master ~]$ exit

logout

Connection to master closed.

PS: authorized_keys holds the public keys of all nodes; each node has its own, different key pair.
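The manual login test can be turned into a quick check that never prompts. The sketch below assumes the three hostnames used in this post; the function name `check_passwordless_ssh` and the `SSH_CMD` override are hypothetical. `BatchMode=yes` makes ssh fail instead of asking for a password, so a FAIL line means key-based login is not working for that node.

```shell
# Hostnames from the /etc/hosts entries in this post.
NODES="master slave1 slave2"

# Hypothetical helper: try a key-only login to every node and report the result.
# SSH_CMD can be overridden (for example with a stub) and defaults to ssh.
check_passwordless_ssh() {
    local ssh_cmd="${SSH_CMD:-ssh}" failed=0 node
    for node in $NODES; do
        if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
            echo "OK   $node"
        else
            echo "FAIL $node"
            failed=1
        fi
    done
    return $failed
}

# Usage (as the hadoop user, on each node):
#   check_passwordless_ssh
```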

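3. Install the JDK on every node (installing as root is recommended)

- Download the JDK (jdk-8u91-linux-x64.tar.gz) and upload it to /opt/software/ on the master node

Link: https://pan.baidu.com/s/1V1GSmxF1g-GZ1U0yD1OTjQ

Extraction code: c3r3

- Extract the JDK; /opt/software/ holds the archive and /opt/install/ is the installation path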

mkdir -p /opt/install

tar -zxvf /opt/software/jdk-8u91-linux-x64.tar.gz -C /opt/install/

- Configure the environment variables (switch to root first if you are not root)

Edit /etc/profile and append

#set jdk

export JAVA_HOME=/opt/install/jdk1.8.0_91

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin

- Source the file so the environment variables take effect

source /etc/profile

-

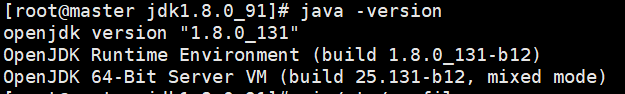

查看是否成功

java -version

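- Copy the JDK directly to slave1 and slave2 (create the /opt/install directory on slave1 and slave2 first)

scp -r /opt/install/jdk1.8.0_91/ slave1:/opt/install/

scp -r /opt/install/jdk1.8.0_91/ slave2:/opt/install/

After copying, repeat steps 3 to 5 of this section (environment variables, source, verification) on slave1 and slave2 to confirm the installation.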
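As an alternative to appending the JDK variables to /etc/profile on each node, they could be kept in a separate file under /etc/profile.d/, which /etc/profile sources on CentOS. The sketch below is only one way to do it; the function name `write_jdk_profile` and the file name jdk.sh are hypothetical, and the JDK path is the one used in this post.

```shell
# Hypothetical helper: write the JDK environment variables to a profile script.
# $1 = JDK install directory, $2 = output file (assumed default: /etc/profile.d/jdk.sh)
write_jdk_profile() {
    local java_home="$1" out="${2:-/etc/profile.d/jdk.sh}"
    # $java_home is expanded now; the escaped variables are expanded at login time.
    cat > "$out" <<EOF
export JAVA_HOME=$java_home
export JRE_HOME=\$JAVA_HOME/jre
export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar
export PATH=\$PATH:\$JAVA_HOME/bin:\$JAVA_HOME/jre/bin
EOF
}

# Usage (as root, on each node), then log in again or source the file:
#   write_jdk_profile /opt/install/jdk1.8.0_91
#   java -version
```

Keeping the variables in their own file means the same file can be copied to the other nodes with scp instead of editing /etc/profile three times.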

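4. Install Hadoop as the hadoop user

- Download Hadoop. With internet access it can be downloaded directly; otherwise download it elsewhere and upload it.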

cd /opt/software/

wget http://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.8.5/hadoop-2.8.5.tar.gz

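- Extract Hadoop into /opt/bigdata/ and change the owner and group (creating /opt/bigdata and running chown require root)

mkdir -p /opt/bigdata

tar -zxvf hadoop-2.8.5.tar.gz -C /opt/bigdata/

chown -R hadoop:hadoop /opt/bigdata/hadoop-2.8.5/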

- Configure the environment variables by editing /etc/profile

# set hadoop

export HADOOP_HOME=/opt/bigdata/hadoop-2.8.5

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Then source the file so the environment variables take effect

source /etc/profile

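- Create the HDFS data directories (every node needs these directories, otherwise startup fails; the remaining steps only need to be run on master, and the finished configuration is then distributed with scp). Note that the hdfs-site.xml shown later points at the /usr/bigdata/hdfs directories; the /srv/bigdata/hdfs directories are not referenced by any configuration in this post.

mkdir -p /srv/bigdata/hdfs/name

mkdir -p /srv/bigdata/hdfs/data #HDFS data directory

mkdir -p /tmp/bigdata/hadoop/tmp #temporary directory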

mkdir -p /usr/bigdata/hdfs/name/

mkdir -p /usr/bigdata/hdfs/data

chown -R hadoop:hadoop /srv/bigdata/hdfs/

chown -R hadoop:hadoop /tmp/bigdata/

chown -R hadoop:hadoop /usr/bigdata/hdfs/name/

chown -R hadoop:hadoop /usr/bigdata/hdfs/data/
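Because a missing or unwritable directory only shows up later as a startup error, it can help to check the directories on each node right after creating them. A minimal sketch; the function name `check_writable_dirs` is hypothetical, and the paths in the usage comment are the ones created above.

```shell
# Hypothetical helper: report whether each given directory exists and is
# writable by the current user. Returns non-zero if any directory fails.
check_writable_dirs() {
    local missing=0 d
    for d in "$@"; do
        if [ -d "$d" ] && [ -w "$d" ]; then
            echo "ok      $d"
        else
            echo "missing $d"
            missing=1
        fi
    done
    return $missing
}

# Usage (as the hadoop user, on each node):
#   check_writable_dirs /usr/bigdata/hdfs/name /usr/bigdata/hdfs/data /tmp/bigdata/hadoop/tmp
```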

- Edit the following 7 configuration files under /opt/bigdata/hadoop-2.8.5/etc/hadoop

hadoop-env.sh

yarn-env.sh

core-site.xml

hdfs-site.xml

mapred-site.xml

yarn-site.xml

slaves

hadoop-env.sh

export JAVA_HOME=/opt/install/jdk1.8.0_91

export HADOOP_PREFIX=/opt/bigdata/hadoop-2.8.5

yarn-env.sh

export JAVA_HOME=/opt/install/jdk1.8.0_91

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.home.dir</name>

<value>/opt/bigdata/hadoop-2.8.5</value>

</property>

<property>

<name>hadoop.tmp.dir</name><!-- Hadoop temporary directory -->

<value>/tmp/bigdata/hadoop/tmp</value>

</property>

</configuration>
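To double-check a value after editing one of these XML files, a small line-oriented lookup is often enough. The sketch below is not a real XML parser: it assumes each `<name>` and `<value>` element sits on a single line with the name first, as in the files in this post, and the function name `get_prop` is hypothetical. Once Hadoop is installed and on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop actually resolves.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop *-site.xml file. $1 = property name, $2 = XML file.
get_prop() {
    awk -v key="$1" '
        # Remember the most recent property name seen.
        /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
        # On a value line, print it if it belongs to the requested name.
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == key) { print v; exit } }
    ' "$2"
}

# Usage:
#   get_prop fs.defaultFS /opt/bigdata/hadoop-2.8.5/etc/hadoop/core-site.xml
```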

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name><!-- HDFS replication factor -->

<value>3</value>

</property>

<property>

<name>dfs.namenode.http-address</name><!-- NameNode web UI address -->

<value>master:50070</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:50090</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/bigdata/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/bigdata/hdfs/data</value>

</property>

<property>

<name>dfs.hosts</name>

<value>/opt/bigdata/hadoop-2.8.5/etc/hadoop/slaves</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>

mapred-site.xml

#if mapred-site.xml does not exist, copy the template and edit the copy

cp mapred-site.xml.template mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

<property>

<name>mapreduce.job.http.address</name>

<value>master:50030</value>

</property>

<property>

<name>mapreduce.task.http.address</name>

<value>master:50060</value>

</property>

</configuration>

slaves

master #can be omitted if master should not also act as a DataNode

slave1

slave2
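Every hostname in the slaves file has to resolve on the node that runs the start scripts, so a typo here tends to surface only at startup. The following sketch cross-checks the slaves file against a hosts file; the function name `check_slaves_in_hosts` is hypothetical, and it simply ignores `#` comments and blank lines in the slaves file.

```shell
# Hypothetical helper: verify that each host listed in a slaves file has an
# entry in a hosts file. $1 = slaves file, $2 = hosts file (default /etc/hosts).
check_slaves_in_hosts() {
    local slaves="$1" hosts="${2:-/etc/hosts}" rc=0 h
    # Drop "#" comments and empty lines, then test each remaining hostname.
    for h in $(sed 's/#.*$//;/^[[:space:]]*$/d' "$slaves"); do
        if grep -qE "[[:space:]]${h}([[:space:]]|\$)" "$hosts"; then
            echo "ok      $h"
        else
            echo "unknown $h"
            rc=1
        fi
    done
    return $rc
}

# Usage:
#   check_slaves_in_hosts /opt/bigdata/hadoop-2.8.5/etc/hadoop/slaves
```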

- Distribute to the other nodes

su hadoop

#Note: the /opt/bigdata/ directory must already exist on the slave nodes and be owned by hadoop

scp -r /opt/bigdata/hadoop-2.8.5 hadoop@slave1:/opt/bigdata/

scp -r /opt/bigdata/hadoop-2.8.5 hadoop@slave2:/opt/bigdata/
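With more than two slaves, the copy is easier to write as a loop. The sketch below mirrors the two scp commands above; the function name `distribute_hadoop` and the `RUN` variable are hypothetical. Setting `RUN=echo` prints the commands instead of executing them, which is a cheap way to review them first.

```shell
# Hypothetical helper: copy the Hadoop directory to each node given as an argument.
# The source defaults to the install path used in this post unless HADOOP_HOME is set.
# Set RUN=echo for a dry run that only prints the scp commands.
distribute_hadoop() {
    local src="${HADOOP_HOME:-/opt/bigdata/hadoop-2.8.5}" node
    for node in "$@"; do
        # Copy into the parent directory so the remote path matches the local one.
        ${RUN:-} scp -r "$src" "hadoop@${node}:$(dirname "$src")/" || return 1
    done
}

# Usage (as the hadoop user on master):
#   RUN=echo distribute_hadoop slave1 slave2   # preview
#   distribute_hadoop slave1 slave2            # copy
```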

5.验证

-

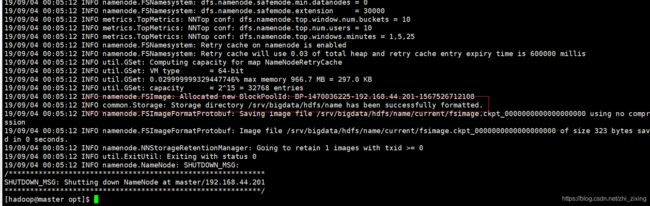

namenode格式化

su - hadoop #必须是hadoop用户

hdfs namenode -format

ps:如果提示hdfs命令识别不了,就是配置hadoop的环境变量没对。

-

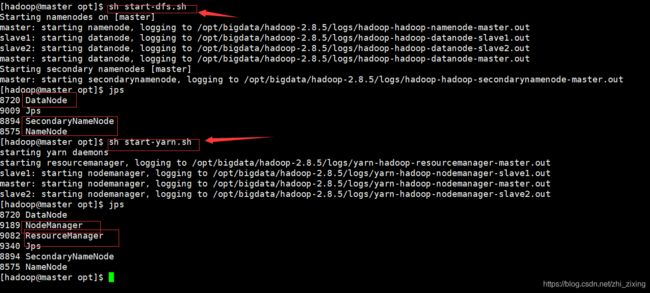

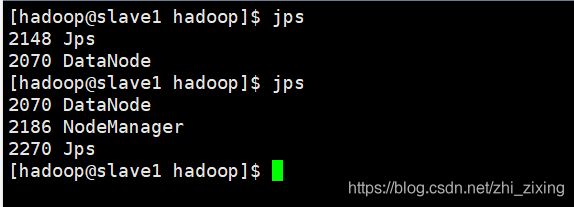

启动hadoop

#方式一:

sh start-all.sh

#方式二:

sh start-dfs.sh

sh start-yarn.sh

start-dfs.sh会启动三个进程

NameNode

DataNode #如果在slaves没有配置master,则在master节点上没有此进程

SecondaryNameNode

sh start-yarn.sh会启动两个进进程

ResourceManager

NodeManager
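Whether these processes actually came up can be checked with `jps`, the JDK tool that lists running Java processes (assumed here to be on the PATH through $JAVA_HOME/bin). The sketch below reads `jps` output on standard input and compares it with the daemon names expected on that node; the function name `check_daemons` is hypothetical.

```shell
# Hypothetical helper: read `jps` output on stdin and check that every daemon
# name given as an argument appears in it. Returns non-zero if one is missing.
check_daemons() {
    local out rc=0 name
    out=$(cat)
    for name in "$@"; do
        # -w matches whole words, so NameNode does not match SecondaryNameNode.
        if printf '%s\n' "$out" | grep -qw "$name"; then
            echo "running $name"
        else
            echo "MISSING $name"
            rc=1
        fi
    done
    return $rc
}

# Usage on master (add DataNode and NodeManager if master is listed in slaves):
#   jps | check_daemons NameNode SecondaryNameNode ResourceManager
# Usage on slave1 and slave2:
#   jps | check_daemons DataNode NodeManager
```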

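- Accessing the native web UIs

YARN web UI: http://192.168.44.201:8088/

HDFS web UI: http://192.168.44.201:50070/

If the hostname mappings are also configured on Windows, the UIs can be reached by hostname.

Append the following to the hosts file under C:\Windows\System32\drivers\etc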

192.168.44.201 master

192.168.44.202 slave1

192.168.44.203 slave2

YARN web UI: http://master:8088/

HDFS web UI: http://master:50070/