OpenStack cloud services: private networks, image packaging and upload, storage

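Table of contents

- 1. Networking service (private networks)
  - Install and configure the controller node
  - Install and configure the compute node
- 2. Images
  - Build the image
  - Upload the image
- 3. Storage service
  - Configure the controller node
  - Configure the storage node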

1. Networking service (private networks)

Install and configure the controller node

[root@controller ~]# source admin-openrc

[root@controller ~]# openstack-status

[root@controller ~]# vim /etc/neutron/neutron.conf

[DEFAULT]

service_plugins = router

allow_overlapping_ips = True

[root@controller ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_vxlan]

vni_ranges = 1:1000

[root@controller ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[vxlan]

enable_vxlan = True

local_ip = 172.25.4.1

l2_population = True

[root@controller ~]# vim /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

external_network_bridge =

[root@controller ~]# systemctl restart neutron-server.service neutron-linuxbridge-agent.service

[root@controller ~]# systemctl enable --now neutron-l3-agent.service

Install and configure the compute node

[root@compute1 ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[vxlan]

enable_vxlan = True

local_ip = 172.25.4.2

l2_population = True

[root@compute1 ~]# systemctl restart neutron-linuxbridge-agent.service
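The `[vxlan]` settings above are edited by hand in vim on each node. As a rough sketch of how the same change could be scripted, the helper below sets one key inside one section of an INI file. `ini_set` is a hypothetical function written for this illustration, not an OpenStack tool, and it assumes simple `key = value` lines with no multi-line values.

```shell
# ini_set FILE SECTION KEY VALUE  (hypothetical helper, not part of OpenStack)
# Replaces KEY inside [SECTION], or appends it to that section if missing.
# If the section does not exist, it is created at the end of the file.
ini_set() {
    local file=$1 section=$2 key=$3 value=$4 tmp rc
    tmp=$(mktemp) || return 1
    awk -v sec="[$section]" -v key="$key" -v val="$value" '
        # Add the key when leaving the target section without having written it.
        function add_missing() {
            if (insec && !written) { print key " = " val; written = 1 }
        }
        # Any section header: close the previous section, then track the new one.
        /^\[.*\]$/ { add_missing(); insec = ($0 == sec); if (insec) seen = 1 }
        # An existing assignment of the key inside the target section is replaced.
        insec && $0 ~ ("^[ \t]*" key "[ \t]*=") {
            if (!written) { print key " = " val; written = 1 }
            next
        }
        { print }
        END {
            add_missing()
            if (!seen) { print sec; print key " = " val }
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return $rc
}

# Usage on the compute node (run as root, values taken from the steps above):
#   f=/etc/neutron/plugins/ml2/linuxbridge_agent.ini
#   ini_set "$f" vxlan enable_vxlan True
#   ini_set "$f" vxlan local_ip 172.25.4.2
#   ini_set "$f" vxlan l2_population True
```

Writing through `cat "$tmp" > "$file"` rather than `mv` keeps the original file's owner and permissions, which matters for files read by the neutron user.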

Controller node

[root@controller ~]# vim /etc/openstack-dashboard/local_settings

[root@controller ~]# systemctl restart httpd.service memcached.service

Open http://172.25.4.1/dashboard in a browser.

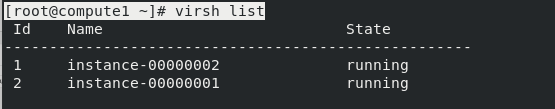

View the instances on the compute node

[root@compute1 ~]# yum install -y libvirt-client

[root@compute1 ~]# virsh list

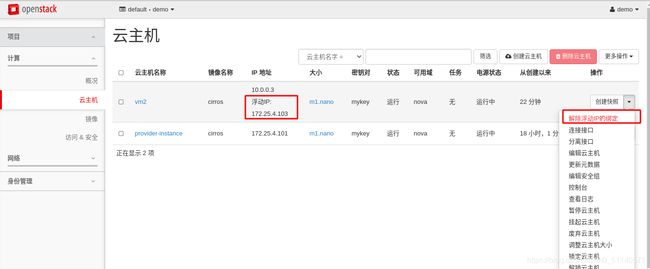

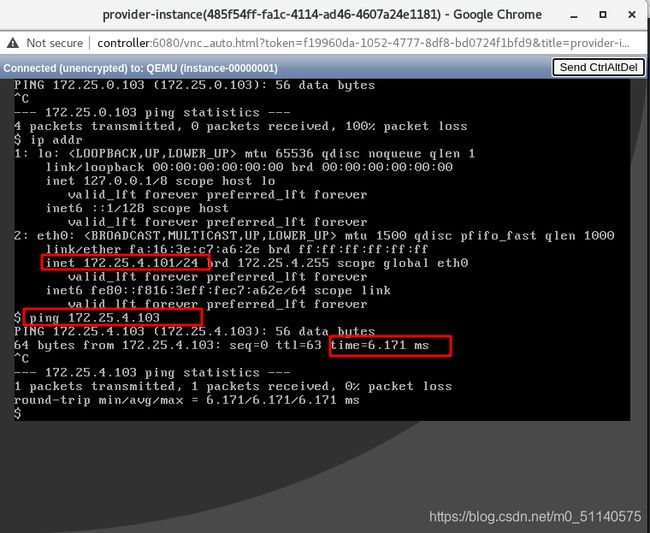

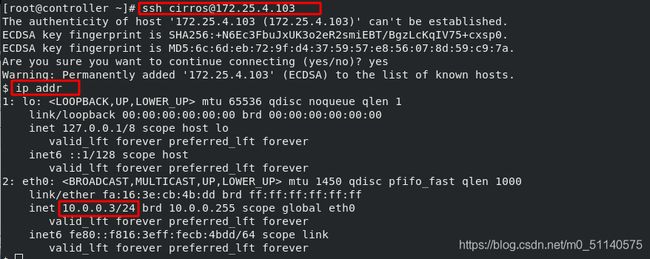

Associate a floating IP with the instance

2. Images

Build the image

[root@localhost ~]# vi /etc/yum.repos.d/dvd.repo

[dvd]

name=rhel7.6

baseurl=http://172.25.4.250/rhel7.6

gpgcheck=0

[root@localhost ~]# yum install acpid -y

[root@localhost ~]# systemctl enable acpid

Place the rhel7 package directory in the repository directory first, so that the baseurl below resolves.

[root@localhost yum.repos.d]# vi cloud.repo

[cloud]

name=cloud-init

baseurl=http://172.25.4.250/rhel7

gpgcheck=0

[root@localhost yum.repos.d]# yum install -y cloud-init cloud-utils-growpart

[root@localhost yum.repos.d]# cd /etc/cloud/

[root@localhost cloud]# echo "NOZEROCONF=yes" >> /etc/sysconfig/network
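The `echo ... >>` above appends the line every time it is run, so repeating the step while rebuilding the image leaves duplicates in the file. A minimal sketch of an idempotent variant follows; `append_once` is a hypothetical helper name introduced only for this example.

```shell
# append_once LINE FILE  (hypothetical helper)
# Appends LINE to FILE only if no identical line is already present.
append_once() {
    local line=$1 file=$2
    # -x matches whole lines, -F treats LINE as a literal string.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage inside the image (run as root):
#   append_once "NOZEROCONF=yes" /etc/sysconfig/network
```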

Edit the boot configuration

[root@localhost cloud]# vi /boot/grub2/grub.cfg

[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

BOOTPROTO=dhcp

DEVICE=eth0

ONBOOT=yes

Shut down

[root@localhost ~]# poweroff

Clean up and compress the virtual machine image

[root@foundation4 images]# virt-sysprep -d small

[root@foundation4 images]# virt-sparsify --compress small.qcow2 /var/www/html/small.qcow2

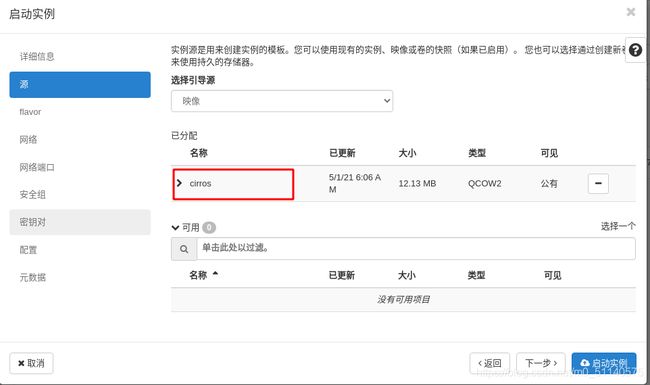

Upload the image

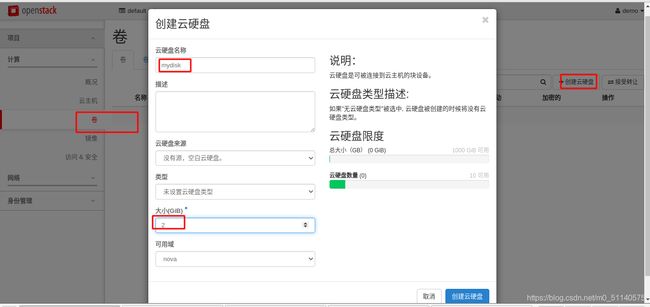

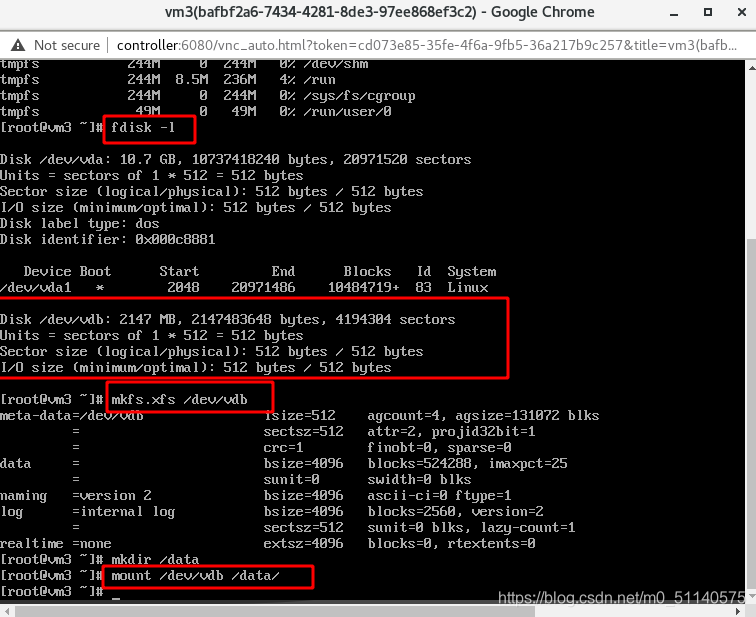
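The upload itself is only shown in the screenshots. As a command-line alternative, something like the following sketch could be used. It is an assumption rather than the procedure from the screenshots: `upload_image` is a hypothetical wrapper, the image name `small` and the file path are borrowed from the virt-sparsify step, the file must be readable on the host where the command runs, and admin credentials (for example via `source admin-openrc`) are assumed to be loaded.

```shell
# upload_image NAME FILE  (hypothetical wrapper around the openstack CLI)
# Registers a qcow2 file with the Image service and makes it public.
upload_image() {
    local name=$1 file=$2
    openstack image create "$name" \
        --file "$file" \
        --disk-format qcow2 \
        --container-format bare \
        --public
}

# Usage (name and path are assumptions for illustration):
#   upload_image small /var/www/html/small.qcow2
```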

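3. Storage service

Start a new virtual machine to act as the storage node, and configure name resolution, the OpenStack package repository, and time synchronization on it.

Configure the controller node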

[root@controller ~]# mysql -pwestos

MariaDB [(none)]> CREATE DATABASE cinder;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \

-> IDENTIFIED BY 'cinder';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinder';

[root@controller ~]# openstack user create --domain default --password cinder cinder

[root@controller ~]# openstack role add --project service --user cinder admin

[root@controller ~]# openstack service create --name cinder \

> --description "OpenStack Block Storage" volume

[root@controller ~]# openstack service create --name cinderv2 \

> --description "OpenStack Block Storage" volumev2

[root@controller ~]# openstack endpoint create --region RegionOne \

> volume public http://controller:8776/v1/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volume internal http://controller:8776/v1/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volume admin http://controller:8776/v1/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev2 public http://controller:8776/v2/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev2 admin http://controller:8776/v2/%\(tenant_id\)s
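The six endpoint commands above differ only in the service type, the API version in the URL, and the interface. As a sketch, they can be generated with two nested loops. `create_volume_endpoints` is a hypothetical function name; the region and base URL defaults are the values used above. Quoting the URL means the parentheses need no backslash escapes.

```shell
# create_volume_endpoints [REGION] [BASE_URL]  (hypothetical helper)
# Creates public, internal and admin endpoints for both volume API versions.
create_volume_endpoints() {
    local region=${1:-RegionOne} base=${2:-http://controller:8776}
    local service version iface
    for service in volume volumev2; do
        # Map each service type to the API version used in its URL.
        case $service in
            volume)   version=v1 ;;
            volumev2) version=v2 ;;
        esac
        for iface in public internal admin; do
            openstack endpoint create --region "$region" \
                "$service" "$iface" "$base/$version/%(tenant_id)s"
        done
    done
}

# Usage on the controller, after sourcing admin-openrc:
#   create_volume_endpoints
```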

[root@controller ~]# yum install openstack-cinder -y

[root@controller ~]# vim /etc/cinder/cinder.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 172.25.4.1

[database]

connection = mysql+pymysql://cinder:cinder@controller/cinder

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = openstack

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[root@controller ~]# su -s /bin/sh -c "cinder-manage db sync" cinder

[root@controller ~]# vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

[root@controller ~]# systemctl restart openstack-nova-api.service

[root@controller ~]# systemctl enable --now openstack-cinder-api.service openstack-cinder-scheduler.service

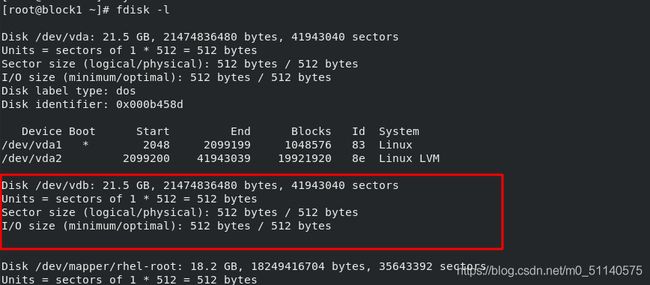

Configure the storage node

[root@block1 ~]# yum install lvm2 -y

[root@block1 ~]# systemctl enable lvm2-lvmetad

[root@block1 ~]# fdisk -l

[root@block1 ~]# pvcreate /dev/vdb

[root@block1 ~]# vgcreate cinder-volumes /dev/vdb

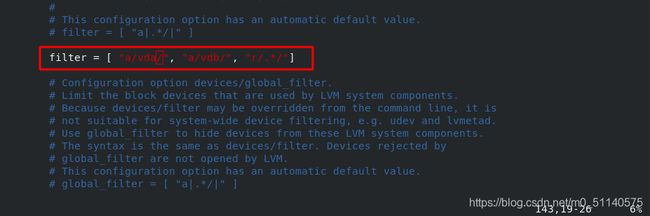

[root@block1 ~]# vim /etc/lvm/lvm.conf

filter = [ "a/vda/", "a/vdb/", "r/.*/"]
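In the filter above, each `a/.../` entry accepts devices matching the pattern and the final `r/.*/` rejects everything else; LVM applies the first pattern that matches. Here `vdb` holds the cinder-volumes group, and `vda` is presumably the system disk. The sketch below builds such a line from a list of device names, which may help when a node has a different disk layout. `lvm_filter` is a hypothetical helper that only prints the line; it does not edit lvm.conf.

```shell
# lvm_filter DEVICE...  (hypothetical helper)
# Prints an lvm.conf filter line that accepts the named devices
# and rejects every other block device.
lvm_filter() {
    local dev accepted=""
    for dev in "$@"; do
        accepted="$accepted\"a/$dev/\", "
    done
    printf 'filter = [ %s"r/.*/" ]\n' "$accepted"
}

# Usage:
#   lvm_filter vda vdb
```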

[root@block1 ~]# yum install openstack-cinder targetcli python-keystone -y

[root@block1 ~]# vim /etc/cinder/cinder.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 172.25.4.3

enabled_backends = lvm

glance_api_servers = http://controller:9292

[database]

connection = mysql+pymysql://cinder:cinder@controller/cinder

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = openstack

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

iscsi_protocol = iscsi

iscsi_helper = lioadm

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[root@block1 ~]# systemctl enable --now openstack-cinder-volume.service target.service

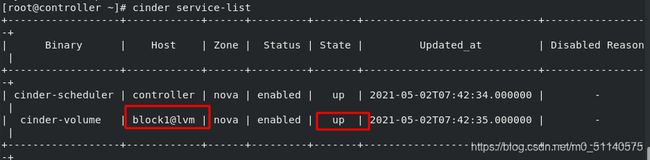

Verify

[root@controller ~]# cinder service-list
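Both cinder-scheduler and cinder-volume should be reported as up. As a sketch of how that check could be automated, the filter below reads the table from standard input and prints every service whose state is not `up`. It assumes the usual column order of the table (Binary, Host, Zone, Status, State, ...); `down_services` is a hypothetical helper name.

```shell
# down_services  (hypothetical helper)
# Reads `cinder service-list` table output on stdin and prints
# "BINARY on HOST" for each service whose State column is not "up".
down_services() {
    awk -F'|' '
        # Border lines contain no "|" and are skipped by the field count.
        NF >= 7 {
            for (i = 2; i <= 6; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i)
            if ($2 != "Binary" && $6 != "up") print $2 " on " $3
        }'
}

# Usage on the controller, after sourcing admin-openrc:
#   cinder service-list | down_services
```

No output means every listed service reported `up`.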