Maven工程的MapReduce程序2---实现统计各部门员工薪水总和功能

前提条件:

1. 安装好jdk1.8(Windows环境下)

2. 安装好Maven3.3.9(Windows环境下)

3. 安装好eclipse(Windows环境下)

4. 安装好hadoop(Linux环境下)

输入文件:

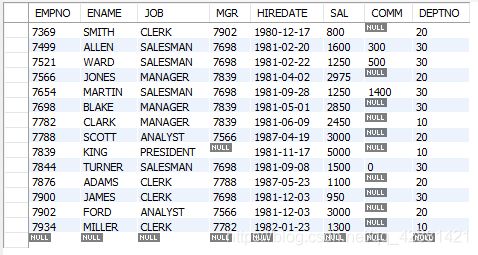

将以下内容复制到Sublime/或Notepad++等编辑器,另存为EMP.csv

7369,SMITH,CLERK,7902,1980/12/17,800,,20

7499,ALLEN,SALESMAN,7698,1981/2/20,1600,300,30

7521,WARD,SALESMAN,7698,1981/2/22,1250,500,30

7566,JONES,MANAGER,7839,1981/4/2,2975,,20

7654,MARTIN,SALESMAN,7698,1981/9/28,1250,1400,30

7698,BLAKE,MANAGER,7839,1981/5/1,2850,,30

7782,CLARK,MANAGER,7839,1981/6/9,2450,,10

7788,SCOTT,ANALYST,7566,1987/4/19,3000,,20

7839,KING,PRESIDENT,,1981/11/17,5000,,10

7844,TURNER,SALESMAN,7698,1981/9/8,1500,0,30

7876,ADAMS,CLERK,7788,1987/5/23,1100,,20

7900,JAMES,CLERK,7698,1981/12/3,950,,30

7902,FORD,ANALYST,7566,1981/12/3,3000,,20

7934,MILLER,CLERK,7782,1982/1/23,1300,,10

EMP.csv每个字段含义如下表:重点需要关注的是 SAL为员工工资(int类型), DEPTNO为部门号(int类型)

问题:

通过MapReduce程序实现统计EMP.csv中各部门员工薪水总和功能?

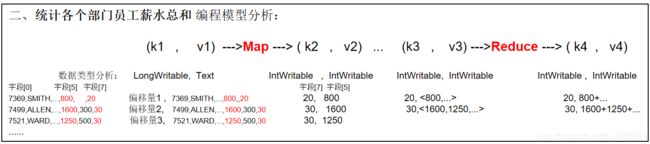

编程模型分析:

通过MapReduce实现统计各部门员工薪水总和功能

明确编程思路:

1. 编写Map方法:

由v1 可得到 k2, v2

1.1 v1由Text类型转换为String:toString()方法

1.2 按逗号进行分词:split(",")方法

1.3 取出需要的字段:工资(字段[5])、部门号(字段[7])

1.4 输出k2, v2:context.write()方法

2. 编写Reduce方法:

由k3, v3 可得到k4, v4

2.1 k4 = k3

2.2 v4 = v3元素的和:遍历v3求和

2.3 输出k4, v4:context.write()方法

3. 编写Main方法:

3.1 创建一个job和任务入口(指定主类)

3.2 指定job的mapper和输出的类型

3.3 指定job的reducer和输出的类型

3.4 指定job的输入和输出路径

3.5 执行job

配置eclipse的Maven环境:(之前配置过请跳过) 参考:配置eclipse的Maven环境

新建Maven工程:工程名称为:SalTotal

src/main/java目录下删除原来的App.java,

src/main/java目录下新建三个类:SalaryTotalMain.java 、 SalaryTotalMapper.java 、SalaryTotalReducer.java

修改pom.xml文件:

a.配置主类:在之前添加如下内容

org.apache.maven.plugins

maven-shade-plugin

3.1.0

package

shade

com.SalTotal.SalaryTotalMain

b.配置MapReduce程序的依赖(重要):程序所依赖的包,在这里配置,Maven会帮我们下载下来,省去了手动添加jar包的麻烦。 在之前添加如下内容:

org.apache.hadoop

hadoop-common

2.7.3

org.apache.hadoop

hadoop-client

2.7.3

org.apache.hadoop

hadoop-hdfs

2.7.3

org.apache.hadoop

hadoop-mapreduce-client-core

2.7.3

修改完成pom.xml后,ctrl + s保存

刷新工程

编写代码:

先自己思考一下怎么写代码。通过编程模型分析及编程思路,编写出代码。

打包工程。

提交到Hadoop去运行,查看运行结果。

最后附上代码供参考:

SalaryTotalMapper.java

package com.SalTotal;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

public class SalaryTotalMapper extends Mapper< LongWritable, Text, IntWritable, IntWritable> {

@Override

protected void map(LongWritable k1, Text v1,

Context context)

throws IOException, InterruptedException {

//数据:7499,ALLEN,SALESMAN,7698,1981/2/20,1600,300,30

String data = v1.toString();

String[] words = data.split(",");

//取出部门号words[7],将String转换为Int,Int转换为IntWritable对象,赋值为k2

IntWritable k2 = new IntWritable(Integer.parseInt(words[7]));

//取出工资words[5],将String转换为Int,Int转换为IntWritable对象,赋值为v2

IntWritable v2 = new IntWritable(Integer.parseInt(words[5]));

//输出k2, v2

context.write(k2, v2);

}

}

SalaryTotalReducer.java

package com.SalTotal;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.mapreduce.Reducer;

public class SalaryTotalReducer extends Reducer {

@Override

protected void reduce(IntWritable k3, Iterable v3,

Context context)

throws IOException, InterruptedException {

int total = 0;

for(IntWritable v: v3) {

total = total + v.get();

}

context.write(k3, new IntWritable(total));

}

}

SalaryTotalMain.java

package com.SalTotal;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class SalaryTotalMain {

public static void main(String[] args) throws Exception {

//1. 创建一个job和任务入口(指定主类)

Job job = Job.getInstance();

job.setJarByClass(SalaryTotalMain.class);

//2. 指定job的mapper和输出的类型

job.setMapperClass(SalaryTotalMapper.class);

job.setMapOutputKeyClass(IntWritable.class);

job.setMapOutputValueClass(IntWritable.class);

//3. 指定job的reducer和输出的类型

job.setReducerClass(SalaryTotalReducer.class);

job.setOutputKeyClass(IntWritable.class);

job.setOutputValueClass(IntWritable.class);

//4. 指定job的输入和输出路径

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

//5. 执行job

job.waitForCompletion(true);

}

}

完成! enjoy it!