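Reading Kafka Data with Storm in Java

At this point, kafka -> storm -> es is probably the most standard model for stream processing: pull streaming data from a message queue, clean, process, and compute it stage by stage through Storm's multi-branch pipeline, and persist the data you need to a store. Building on the previous posts, which went progressively deeper, we now switch the data source to Kafka.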

https://blog.csdn.net/xxkalychen/article/details/117047109?spm=1001.2014.3001.5501

Only three changes are needed.

1. Add the Kafka dependency.

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka_2.12</artifactId>
        <version>2.3.1</version>
    </dependency>

2. Change the spout.

To avoid breaking the existing program, we create a separate KafkaSpout.

    package com.chris.storm.spout;

    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.kafka.common.serialization.StringDeserializer;
    import org.apache.storm.spout.SpoutOutputCollector;
    import org.apache.storm.task.TopologyContext;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.base.BaseRichSpout;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Values;
    import org.apache.storm.utils.Utils;

    import java.time.Duration;
    import java.util.Collections;
    import java.util.Map;
    import java.util.Properties;

    /**
     * @author Chris Chan
     * Create on 2021/5/19 9:43
     * Use for:
     * Explain:
     */
    public class KafkaSpout extends BaseRichSpout {
        // Tuple collector
        private SpoutOutputCollector collector;
        // Kafka consumer (created in open(), after the spout has been deserialized on the worker)
        private KafkaConsumer<String, String> consumer;
        // Kafka topic
        private static final String TOPIC = "Topic_Storm";

        /**
         * Initialization
         *
         * @param map
         * @param topologyContext
         * @param spoutOutputCollector
         */
        @Override
        public void open(Map map, TopologyContext topologyContext, SpoutOutputCollector spoutOutputCollector) {
            this.collector = spoutOutputCollector;

            Properties properties = new Properties();
            properties.put("bootstrap.servers", "storm.chris.com:9092");
            properties.put("group.id", "storm_group_01");
            properties.put("enable.auto.commit", "true");
            properties.put("auto.commit.interval.ms", "1000");
            properties.put("session.timeout.ms", "30000");
            properties.put("key.deserializer", StringDeserializer.class.getName());
            properties.put("value.deserializer", StringDeserializer.class.getName());

            consumer = new KafkaConsumer<>(properties);
            consumer.subscribe(Collections.singleton(TOPIC));
        }

        /**
         * Process data
         */
        @Override
        public void nextTuple() {
            // poll(long) is deprecated since Kafka 2.0; use the Duration overload
            ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofMillis(10));
            if (null != consumerRecords) {
                consumerRecords.forEach(record -> collector.emit(new Values(record.value())));
            }
            Utils.sleep(1000);
        }

        /**
         * Declare the output stream and fields
         *
         * @param outputFieldsDeclarer
         */
        @Override
        public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
            // Store the data in a tuple field named "line"
            outputFieldsDeclarer.declare(new Fields("line"));
        }
    }
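The consumer settings in `open()` are hard-coded. As a minimal sketch, assuming you want to reuse the same settings with a different broker or consumer group, they could be collected in a small helper. `ConsumerProps` and `build` are hypothetical names, not part of the original project, and the deserializer is given by its class name as a string so the snippet compiles without the Kafka jar on the classpath.

```java
import java.util.Properties;

// Hypothetical helper (not in the original project): builds the same
// consumer settings that KafkaSpout.open() sets inline.
public class ConsumerProps {

    public static Properties build(String bootstrapServers, String groupId) {
        // Fully qualified name of Kafka's StringDeserializer, passed as a string
        String stringDeserializer = "org.apache.kafka.common.serialization.StringDeserializer";

        Properties properties = new Properties();
        properties.put("bootstrap.servers", bootstrapServers);
        properties.put("group.id", groupId);
        properties.put("enable.auto.commit", "true");
        properties.put("auto.commit.interval.ms", "1000");
        properties.put("session.timeout.ms", "30000");
        properties.put("key.deserializer", stringDeserializer);
        properties.put("value.deserializer", stringDeserializer);
        return properties;
    }

    public static void main(String[] args) {
        // Same broker address and group id as in the spout above
        Properties properties = build("storm.chris.com:9092", "storm_group_01");
        properties.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

With a helper like this, `open()` would only need `new KafkaConsumer<>(ConsumerProps.build(...))`, and the broker address could later come from the topology configuration instead of a constant.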

3. Change the main class MyTopology. Only the spout setting needs to change.

    package com.chris.storm.topology;

    import com.chris.storm.bolt.CountBolt;
    import com.chris.storm.bolt.LineBolt;
    import com.chris.storm.bolt.PrintBolt;
    import com.chris.storm.spout.DataSourceSpout;
    import com.chris.storm.spout.KafkaSpout;
    import com.chris.storm.spout.SocketSpout;
    import org.apache.storm.Config;
    import org.apache.storm.LocalCluster;
    import org.apache.storm.StormSubmitter;
    import org.apache.storm.topology.TopologyBuilder;

    import java.util.Collections;

    /**
     * @author Chris Chan
     * Create on 2021/5/19 9:42
     * Use for:
     * Explain:
     */
    public class MyTopology {

        public static void main(String[] args) throws Exception {
            new MyTopology().execute(args);
        }

        private void execute(String[] args) throws Exception {
            // Topology builder
            TopologyBuilder builder = new TopologyBuilder();

            // 1. Set the pipeline's data source spout
            //builder.setSpout("data", new DataSourceSpout(), 1);
            // 2. Read streaming data from Kafka
            builder.setSpout("data", new KafkaSpout(), 1);

            // Set up each processing stage (bolt); shuffleGrouping takes the id of the upstream component
            builder.setBolt("line", new LineBolt(), 2).shuffleGrouping("data");
            // The second argument of shuffleGrouping is the streamId to bind to
            builder.setBolt("print", new PrintBolt(), 2).shuffleGrouping("line", "print");
            builder.setBolt("count", new CountBolt(), 2).shuffleGrouping("line", "count");

            // 1. Submit to a local cluster
            //submitToLocal(builder);
            // 2. Submit to the remote cluster; the first command-line argument is the topology name
            //submitToRemote(builder, args[0]);
            // 3. Submit to the remote cluster from code; the first command-line argument is the topology name
            submitToRemoteByCode(builder, args[0]);
        }

        /**
         * Submit to the remote cluster from code
         *
         * @param builder
         * @param topologyName
         */
        private void submitToRemoteByCode(TopologyBuilder builder, String topologyName) throws Exception {
            // Configuration
            Config config = new Config();
            config.put(Config.NIMBUS_SEEDS, Collections.singletonList("192.168.0.54"));
            config.put(Config.NIMBUS_THRIFT_PORT, 6627);
            config.put(Config.STORM_ZOOKEEPER_SERVERS, Collections.singletonList("192.168.0.54"));
            config.put(Config.STORM_ZOOKEEPER_PORT, 2181);
            config.put(Config.TASK_HEARTBEAT_FREQUENCY_SECS, 10000);
            config.setDebug(false);
            config.setNumAckers(3);
            config.setMaxTaskParallelism(20);

            // Local path of the jar built in assembly mode
            String jarLocalPath = "G:\\gitee\\StudyRoom\\StudyIn2021\\May\\Java\\storm-demo-20210519\\target\\storm-demo-20210519-1.0.0-SNAPSHOT-jar-with-dependencies.jar";
            System.setProperty("storm.jar", jarLocalPath);

            StormSubmitter.submitTopologyAs(topologyName, config, builder.createTopology(), null, null, "root");
        }

        /**
         * Submit to the remote cluster
         *
         * @param builder
         * @param topologyName
         */
        private void submitToRemote(TopologyBuilder builder, String topologyName) throws Exception {
            // Configuration
            Config config = new Config();
            config.setDebug(false);
            config.setNumAckers(3);
            config.setMaxTaskParallelism(20);

            StormSubmitter.submitTopologyAs(topologyName, config, builder.createTopology(), null, null, "root");
        }

        /**
         * Submit to the local environment
         *
         * @param builder
         * @throws Exception
         */
        private void submitToLocal(TopologyBuilder builder) throws Exception {
            // Configuration
            Config config = new Config();
            config.setDebug(false);
            config.setNumAckers(3);
            config.setMaxTaskParallelism(20);

            // Local submission
            LocalCluster cluster = new LocalCluster.Builder().build();
            cluster.submitTopology("test_topo", config, builder.createTopology());
        }
    }
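Note that `execute` reads `args[0]` directly, so starting the program without arguments fails with an `ArrayIndexOutOfBoundsException` before anything is submitted. A minimal sketch of a guard, assuming a fallback name is acceptable; `TopologyArgs`, `topologyName`, and the default `"kafka_topo"` are hypothetical and not part of the original project:

```java
// Hypothetical helper (not in the original project): picks the topology
// name from the command line, falling back to a default when it is missing.
public class TopologyArgs {

    public static String topologyName(String[] args, String fallback) {
        // No arguments, or a blank first argument: use the fallback
        if (args == null || args.length == 0 || args[0].trim().isEmpty()) {
            return fallback;
        }
        return args[0].trim();
    }

    public static void main(String[] args) {
        // "kafka_topo" is an illustrative default, not a name used by the original post
        System.out.println(topologyName(args, "kafka_topo"));
    }
}
```

In `execute`, the call would then become `submitToRemoteByCode(builder, TopologyArgs.topologyName(args, "kafka_topo"))`.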

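But we're not done yet. Do you have a Kafka server? To save resources, I installed Kafka on the Storm server and reused the ZooKeeper instance that was already there. Installing Kafka is covered in a separate post. The install location is /var/app/kafka_2.13-2.8.0.

4. Start Kafka.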

    nohup /var/app/kafka_2.13-2.8.0/bin/kafka-server-start.sh /var/app/kafka_2.13-2.8.0/config/server.properties > /var/app/kafka_2.13-2.8.0/logs/kafka.log 2>&1 &

Then open a console producer on the topic so you can send messages:

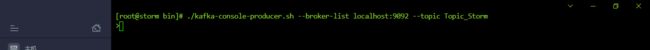

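    ./kafka-console-producer.sh --broker-list localhost:9092 --topic Topic_Storm

Now we can test.

Deploy the jar to the server from code as usual. Then send a few messages in Kafka, with words separated by spaces, pressing Enter to send each one, and check the result.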
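To know what to expect for a given test message, here is a rough stand-alone sketch of the processing. It assumes that LineBolt splits each line on whitespace and that CountBolt keeps a running count per word; both bolts come from the earlier posts and are not shown here, so their actual behavior may differ. `WordCountSketch` is a hypothetical name used only for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Stand-alone approximation of the assumed LineBolt + CountBolt behavior:
// split a line on whitespace and count each word. Not the original bolts.
public class WordCountSketch {

    public static Map<String, Integer> count(String line) {
        // LinkedHashMap keeps words in first-seen order for readable output
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String word : line.trim().split("\\s+")) {
            if (word.isEmpty()) {
                continue; // a blank line yields one empty token; skip it
            }
            counts.merge(word, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // One message as it would be typed into the console producer
        System.out.println(count("storm kafka storm es")); // prints {storm=2, kafka=1, es=1}
    }
}
```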