[Personal] Project Training | Style Transfer Based on Neural Networks

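Table of Contents

- Foreword

- Main Text

- I. Implementation

- 1. Core neural network processing code

- 2. Neural network training utilities

- 3. Style transfer UI

- II. Sample results

- 1. Hand-drawn style transfer

- 2. Van Gogh abstract style transfer

- Summary

- I. Main work

- II. Room for improvement

- III. Reference link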

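Foreword

In this project training I was mainly responsible for the image style transfer feature in the AI part.

I implemented neural-network-based style transfer in Python.

The details are as follows.

Main Text

I. Implementation

1. Core neural network processing code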

# Assumes TensorFlow 1.x (graph/session API) and SciPy < 1.2 (scipy.misc.imread / imsave).
import datetime

import cv2 as cv
import scipy.misc
import tensorflow as tf


def model_nn(instance, sess, input_image, model, train_step, J, J_content, J_style, save_path, num_iterations=200):
    # Initialize global variables (the session must run the initializer).
    sess.run(tf.global_variables_initializer())

    # Feed the noisy input image (the initial generated image) into the model with assign().
    sess.run(model['input'].assign(input_image))

    for i in range(num_iterations):
        # Run one optimizer step to minimize the total cost.
        sess.run(train_step)

        # Read back the current generated image from model['input'].
        generated_image = sess.run(model['input'])

    # Save the last generated image.
    save_image(save_path + 'output/generated_image.jpg', generated_image)

    # Reload the saved file (OpenCV reads BGR) and convert it to RGB for display in the UI.
    temp_image = cv.imread(save_path + "output/generated_image.jpg")
    instance.image_result_image = cv.cvtColor(temp_image, cv.COLOR_BGR2RGB)
    m_init_style_transfer.update_image(instance)

    return generated_image

def neual_style_transfer(instance):
    starttime = datetime.datetime.now()

    # Reset the graph.
    tf.reset_default_graph()

    # Start an interactive session.
    sess = tf.InteractiveSession()

    # Content image: the image currently open in the application.
    content_image = instance.m_image
    content_image = cv.resize(content_image, (400, 300))
    content_image = reshape_and_normalize_image(content_image)

    # Style image: the file selected by the user.
    style_image = scipy.misc.imread(instance.style_file_transfer[0])
    style_image = cv.resize(style_image, (400, 300))
    style_image = reshape_and_normalize_image(style_image)

    # Start from the content image blended with random noise.
    generated_image = generate_noise_image(content_image)

    model = load_vgg_model(instance.root_path + "/part3/pretrained-model/imagenet-vgg-verydeep-19.mat")

    # Layers used for the style cost, each paired with its weight.
    STYLE_LAYERS = [
        ('conv1_1', 0.2),
        ('conv2_1', 0.2),
        ('conv3_1', 0.2),
        ('conv4_1', 0.2),
        ('conv5_1', 0.2)]

    # Assign the content image to be the input of the VGG model.
    sess.run(model['input'].assign(content_image))

    # Select the output tensor of layer conv4_2.
    out = model['conv4_2']

    # Set a_C to be the hidden layer activation from the selected layer.
    a_C = sess.run(out)

    # Set a_G to be the hidden layer activation from the same layer. Here a_G references
    # model['conv4_2'] and is not evaluated yet. Later the generated image G is assigned as the
    # model input, so running the session yields the activations of that layer with G as input.
    a_G = out

    # Compute the content cost.
    J_content = compute_content_cost(a_C, a_G)

    # Assign the style image to be the input of the model.
    sess.run(model['input'].assign(style_image))

    # Compute the style cost.
    J_style = compute_style_cost(model, STYLE_LAYERS, sess)

    # Combine both costs into the total cost.
    J = total_cost(J_content=J_content, J_style=J_style)

    # Define the optimizer and the training step.
    optimizer = tf.train.AdamOptimizer(2.0)
    train_step = optimizer.minimize(J)

    save_path = instance.root_path + "/part3/"
    model_nn(instance, sess, generated_image, model, train_step, J, J_content, J_style, save_path, num_iterations=100)

    endtime = datetime.datetime.now()
    print("the running time :" + str((endtime - starttime).seconds))
    print("END!")

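In this code, content_image is the image currently being processed and style_image is the reference image whose style is transferred.

content_image = instance.m_image
content_image = cv.resize(content_image, (400, 300))
content_image = reshape_and_normalize_image(content_image)

style_image = scipy.misc.imread(instance.style_file_transfer[0])
style_image = cv.resize(style_image, (400, 300))
style_image = reshape_and_normalize_image(style_image)

content_image is the image currently open in the application. Because the model input has a fixed size, it is resized to 400×300 pixels.

style_image is read from the style image the user has just selected and is resized in the same way so that it can be used for training.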
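The core code above calls compute_content_cost, compute_style_cost and total_cost, which are not listed in this post. The following is a minimal NumPy sketch of what such functions commonly compute in this kind of style transfer. The normalization constants and the weights alpha=10 and beta=40 are assumptions (frequently used defaults), not values taken from the project code, and the `_np` function names are hypothetical.

```python
import numpy as np


def content_cost_np(a_C, a_G):
    """Content cost between two activations of shape (1, n_H, n_W, n_C)."""
    _, n_H, n_W, n_C = a_G.shape
    return np.sum((a_C - a_G) ** 2) / (4.0 * n_H * n_W * n_C)


def gram_matrix_np(A):
    """Gram matrix of A with shape (n_C, n_H * n_W); the result is (n_C, n_C)."""
    return A @ A.T


def layer_style_cost_np(a_S, a_G):
    """Style cost of one layer from activations of shape (1, n_H, n_W, n_C)."""
    _, n_H, n_W, n_C = a_G.shape
    # Unroll to (n_C, n_H * n_W) so that each row holds one channel.
    S = a_S.reshape(n_H * n_W, n_C).T
    G = a_G.reshape(n_H * n_W, n_C).T
    GS, GG = gram_matrix_np(S), gram_matrix_np(G)
    return np.sum((GS - GG) ** 2) / (4.0 * n_C ** 2 * (n_H * n_W) ** 2)


def total_cost_np(J_content, J_style, alpha=10.0, beta=40.0):
    """Weighted sum of both costs (alpha and beta are assumed defaults)."""
    return alpha * J_content + beta * J_style


rng = np.random.default_rng(0)
a_C = rng.normal(size=(1, 4, 4, 3))  # stand-in for content activations
a_G = rng.normal(size=(1, 4, 4, 3))  # stand-in for generated-image activations
J_c = content_cost_np(a_C, a_G)
J_s = layer_style_cost_np(a_C, a_G)
J = total_cost_np(J_c, J_s)
print(J_c, J_s, J)
```

Both costs are zero when the two activations are identical, which is why minimizing J pulls the generated image toward the content of one image and the channel correlations of the other.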

2. Neural network training utilities

import numpy as np
import scipy.io
import scipy.misc
import tensorflow as tf


class CONFIG:
    IMAGE_WIDTH = 400
    IMAGE_HEIGHT = 300
    COLOR_CHANNELS = 3
    NOISE_RATIO = 0.6
    MEANS = np.array([123.68, 116.779, 103.939]).reshape((1, 1, 1, 3))
    # VGG 19-layer model from the paper "Very Deep Convolutional Networks for Large-Scale Image Recognition".
    VGG_MODEL = 'pretrained-model/imagenet-vgg-verydeep-19.mat'
    STYLE_IMAGE = 'images/stone_style.jpg'  # Style image to use.
    CONTENT_IMAGE = 'images/content300.jpg'  # Content image to use.
    OUTPUT_DIR = 'output/'

def load_vgg_model(path):
    vgg = scipy.io.loadmat(path)
    vgg_layers = vgg['layers']

    def _weights(layer, expected_layer_name):
        """
        Return the weights and bias from the VGG model for a given layer.
        """
        wb = vgg_layers[0][layer][0][0][2]
        W = wb[0][0]
        b = wb[0][1]
        layer_name = vgg_layers[0][layer][0][0][0][0]
        assert layer_name == expected_layer_name
        return W, b

    def _relu(conv2d_layer):
        """
        Return the ReLU function wrapped over a TensorFlow layer. Expects a
        Conv2d layer input.
        """
        return tf.nn.relu(conv2d_layer)

    def _conv2d(prev_layer, layer, layer_name):
        """
        Return the Conv2D layer using the weights and biases from the VGG
        model at 'layer'.
        """
        W, b = _weights(layer, layer_name)
        W = tf.constant(W)
        b = tf.constant(np.reshape(b, (b.size)))
        return tf.nn.conv2d(prev_layer, filter=W, strides=[1, 1, 1, 1], padding='SAME') + b

    def _conv2d_relu(prev_layer, layer, layer_name):
        """
        Return the Conv2D + ReLU layer using the weights and biases from the VGG
        model at 'layer'.
        """
        return _relu(_conv2d(prev_layer, layer, layer_name))

    def _avgpool(prev_layer):
        """
        Return the AveragePooling layer.
        """
        return tf.nn.avg_pool(prev_layer, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Construct the graph model. The input is a variable so that the image itself can be optimized.
    graph = {}
    graph['input'] = tf.Variable(np.zeros((1, CONFIG.IMAGE_HEIGHT, CONFIG.IMAGE_WIDTH, CONFIG.COLOR_CHANNELS)),
                                 dtype='float32')
    graph['conv1_1'] = _conv2d_relu(graph['input'], 0, 'conv1_1')
    graph['conv1_2'] = _conv2d_relu(graph['conv1_1'], 2, 'conv1_2')
    graph['avgpool1'] = _avgpool(graph['conv1_2'])
    graph['conv2_1'] = _conv2d_relu(graph['avgpool1'], 5, 'conv2_1')
    graph['conv2_2'] = _conv2d_relu(graph['conv2_1'], 7, 'conv2_2')
    graph['avgpool2'] = _avgpool(graph['conv2_2'])
    graph['conv3_1'] = _conv2d_relu(graph['avgpool2'], 10, 'conv3_1')
    graph['conv3_2'] = _conv2d_relu(graph['conv3_1'], 12, 'conv3_2')
    graph['conv3_3'] = _conv2d_relu(graph['conv3_2'], 14, 'conv3_3')
    graph['conv3_4'] = _conv2d_relu(graph['conv3_3'], 16, 'conv3_4')
    graph['avgpool3'] = _avgpool(graph['conv3_4'])
    graph['conv4_1'] = _conv2d_relu(graph['avgpool3'], 19, 'conv4_1')
    graph['conv4_2'] = _conv2d_relu(graph['conv4_1'], 21, 'conv4_2')
    graph['conv4_3'] = _conv2d_relu(graph['conv4_2'], 23, 'conv4_3')
    graph['conv4_4'] = _conv2d_relu(graph['conv4_3'], 25, 'conv4_4')
    graph['avgpool4'] = _avgpool(graph['conv4_4'])
    graph['conv5_1'] = _conv2d_relu(graph['avgpool4'], 28, 'conv5_1')
    graph['conv5_2'] = _conv2d_relu(graph['conv5_1'], 30, 'conv5_2')
    graph['conv5_3'] = _conv2d_relu(graph['conv5_2'], 32, 'conv5_3')
    graph['conv5_4'] = _conv2d_relu(graph['conv5_3'], 34, 'conv5_4')
    graph['avgpool5'] = _avgpool(graph['conv5_4'])

    return graph
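load_vgg_model chains stride-1 convolutions with 'SAME' padding, which keep the spatial size, and 2×2 average pooling with stride 2 and 'SAME' padding, which maps each dimension n to ceil(n / 2). The sketch below tracks the resulting feature-map shapes for the 300×400 input used here. The per-block channel counts are the standard VGG-19 configuration, and the helper name vgg_feature_shapes is hypothetical.

```python
import math

# Channel counts of the five VGG-19 convolution blocks (standard configuration).
BLOCK_CHANNELS = [64, 128, 256, 512, 512]


def vgg_feature_shapes(height, width):
    """Return {name: (h, w, channels)} for the conv layers of each block.

    Convolutions use stride 1 with 'SAME' padding, so they keep h and w.
    Each 2x2 pooling with stride 2 and 'SAME' padding maps n to ceil(n / 2).
    """
    shapes = {}
    h, w = height, width
    for block, channels in enumerate(BLOCK_CHANNELS, start=1):
        shapes[f"conv{block}"] = (h, w, channels)
        h, w = math.ceil(h / 2), math.ceil(w / 2)
    shapes["avgpool5"] = (h, w, BLOCK_CHANNELS[-1])
    return shapes


shapes = vgg_feature_shapes(300, 400)
for name, shape in shapes.items():
    print(name, shape)
```

Under these assumptions the conv4_2 activation used for the content cost has shape (1, 38, 50, 512) once the batch axis is included.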

def generate_noise_image(content_image, noise_ratio=CONFIG.NOISE_RATIO):
    """
    Generate a noisy image by adding random noise to the content_image.
    """
    # Generate a random noise image.
    noise_image = np.random.uniform(
        -20, 20,
        (1, CONFIG.IMAGE_HEIGHT, CONFIG.IMAGE_WIDTH, CONFIG.COLOR_CHANNELS)).astype('float32')

    # Set the input image to be a weighted average of the content image and the noise image.
    input_image = noise_image * noise_ratio + content_image * (1 - noise_ratio)

    return input_image
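To see what noise_ratio does, the blend can be tried on its own. This is a self-contained NumPy sketch rather than the project code: blend_noise is a hypothetical name, and the random array only stands in for a mean-subtracted content image.

```python
import numpy as np


def blend_noise(content_image, noise_ratio, rng):
    """Weighted average of uniform noise in [-20, 20) and the content image."""
    noise = rng.uniform(-20, 20, content_image.shape).astype("float32")
    return noise * noise_ratio + content_image * (1 - noise_ratio)


rng = np.random.default_rng(0)
# Stand-in for a mean-subtracted content image of shape (1, 300, 400, 3).
content = rng.uniform(-100, 100, (1, 300, 400, 3)).astype("float32")

for ratio in (0.0, 0.6, 1.0):
    start = blend_noise(content, ratio, rng)
    distance = float(np.mean(np.abs(start - content)))
    print(f"noise_ratio={ratio}: mean |start - content| = {distance:.2f}")
```

With noise_ratio=0 the optimization starts exactly at the content image, and with noise_ratio=1 it starts from pure noise; the value 0.6 in CONFIG sits between the two.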

def reshape_and_normalize_image(image):
    """
    Reshape and normalize the input image (content or style).
    """
    # Reshape the image to match the expected input of the VGG-19 model (add a batch axis).
    image = np.reshape(image, ((1,) + image.shape))

    # Subtract the mean to match the expected input of the VGG-19 model.
    image = image - CONFIG.MEANS

    return image

def save_image(path, image):
    # Un-normalize the image so that it looks good.
    image = image + CONFIG.MEANS

    # Clip and save the image.
    image = np.clip(image[0], 0, 255).astype('uint8')
    scipy.misc.imsave(path, image)
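reshape_and_normalize_image and save_image undo each other apart from clipping: the first adds a batch axis and subtracts the per-channel means, the second adds the means back and clips to [0, 255]. The NumPy-only sketch below checks that round trip without any file I/O. The names normalize and denormalize are hypothetical, and the np.rint call is an addition of this sketch (the code above truncates when casting to uint8).

```python
import numpy as np

# Same per-channel means as CONFIG.MEANS above.
MEANS = np.array([123.68, 116.779, 103.939]).reshape((1, 1, 1, 3))


def normalize(image):
    """Add a batch axis and subtract the channel means: (H, W, 3) -> (1, H, W, 3)."""
    return image[np.newaxis, ...] - MEANS


def denormalize(batch):
    """Undo normalize(): add the means back, round, clip to [0, 255], drop the batch axis."""
    restored = batch + MEANS
    # Rounding first avoids losing 1 to floating-point error when casting to uint8.
    return np.clip(np.rint(restored[0]), 0, 255).astype("uint8")


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(300, 400, 3), dtype=np.uint8)

batch = normalize(image)
restored = denormalize(batch)
print(batch.shape, restored.shape, np.array_equal(restored, image))
```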

3. Style transfer UI

from PyQt5.QtWidgets import QAction, QWidget, QPushButton, QLabel
from PyQt5.QtGui import QImage, QPixmap
from part3 import neural_style_transfer_core
import numpy as np


def init_style_transfer_menubar(instance):
    # Connect the signal; when it is emitted, the connected function runs.
    instance.signal_neural_style_transfer.connect(neural_style_transfer_core.neual_style_transfer)

    # Create a window and set its position, title and other properties.
    instance.widget_neural_style_transfer = QWidget()
    instance.widget_neural_style_transfer.setGeometry(300, 300, 280, 170)
    instance.widget_neural_style_transfer.setWindowTitle('Neural-Network-Based Style Transfer')

    # Create a button whose parent is instance.widget_neural_style_transfer.
    # Clicking it calls the method that emits the signal.
    instance.button_neural_style_stop = QPushButton("Start", instance.widget_neural_style_transfer)
    instance.button_neural_style_stop.setGeometry(30, 30, 50, 50)
    instance.button_neural_style_stop.clicked.connect(instance.signal_neural_style_emit)

    # Create an action that runs show_neural_style_transfer_widget when triggered;
    # that method shows the style transfer window widget_neural_style_transfer.
    action_neural_style_widget_show = QAction('&Neural-Network-Based Style Transfer', instance)
    action_neural_style_widget_show.triggered.connect(instance.show_neural_style_transfer_widget)

    # Add a menu "Artistic Style Transfer" and put the action above into it.
    menubar = instance.menuBar()
    tempMenu = menubar.addMenu('&Artistic Style Transfer')
    tempMenu.addAction(action_neural_style_widget_show)

    # Path of the reference style image.
    instance.style_file_transfer = ""
    instance.button_choose_style_image = QPushButton("Choose style image", instance.widget_neural_style_transfer)
    instance.button_choose_style_image.clicked.connect(instance.open_file_and_change_name)
    instance.label_style_file = QLabel(instance.widget_neural_style_transfer)
    instance.label_style_file.setGeometry(30, 80, 500, 50)
    instance.label_style_file.setText("Style file path")

    # Display of the final result image (a black placeholder until a result exists).
    instance.image_result_image = np.uint8(np.zeros((400, 400, 3)))
    display_image = QImage(instance.image_result_image[:], instance.image_result_image.shape[1],
                           instance.image_result_image.shape[0], instance.image_result_image.shape[1] * 3,
                           QImage.Format_RGB888)
    instance.image_result_image_width = 400
    # Keep the aspect ratio; cast to int because the Qt geometry calls take integers.
    instance.image_result_image_height = int(
        instance.image_result_image.shape[0] / instance.image_result_image.shape[1]
        * instance.image_result_image_width)
    instance.style_result_image_pixmap = QPixmap(display_image)
    instance.style_result_lbl = QLabel(instance.widget_neural_style_transfer)
    instance.style_result_lbl.setPixmap(instance.style_result_image_pixmap)
    instance.style_result_lbl.resize(instance.image_result_image_width, instance.image_result_image_height)
    instance.style_result_lbl.setScaledContents(True)
    instance.style_result_lbl.setGeometry(200, 200, instance.image_result_image_width,
                                          instance.image_result_image_height)

def update_image(instance):
    # Refresh the image shown in the result label.
    display_image = QImage(instance.image_result_image[:], instance.image_result_image.shape[1],
                           instance.image_result_image.shape[0], instance.image_result_image.shape[1] * 3,
                           QImage.Format_RGB888)
    instance.image_result_image_width = 400
    # Keep the aspect ratio; cast to int because the Qt geometry calls take integers.
    instance.image_result_image_height = int(
        instance.image_result_image.shape[0] / instance.image_result_image.shape[1]
        * instance.image_result_image_width)
    instance.style_result_image_pixmap = QPixmap(display_image)
    instance.style_result_lbl.setPixmap(instance.style_result_image_pixmap)
    instance.style_result_lbl.resize(instance.image_result_image_width, instance.image_result_image_height)
    instance.style_result_lbl.setScaledContents(True)
    instance.style_result_lbl.setGeometry(400, 300, instance.image_result_image_width,
                                          instance.image_result_image_height)
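update_image passes shape[1] * 3 to QImage as the number of bytes per line. That value is only correct when the array is a C-contiguous uint8 array with three channels. The NumPy-only sketch below shows one way to check and enforce this before handing the buffer to Qt; as_rgb888_buffer is a hypothetical helper, not part of the project.

```python
import numpy as np


def as_rgb888_buffer(image):
    """Return (array, width, height, bytes_per_line) for a packed RGB888 buffer.

    The array is converted to C-contiguous uint8 so that each row occupies
    exactly width * 3 bytes.
    """
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an array of shape (height, width, 3)")
    packed = np.ascontiguousarray(image, dtype=np.uint8)
    height, width, _ = packed.shape
    bytes_per_line = packed.strides[0]
    return packed, width, height, bytes_per_line


bgr = np.zeros((300, 400, 3), dtype=np.uint8)
rgb_view = bgr[:, :, ::-1]  # channel reversal by slicing: a non-contiguous view

packed, width, height, bytes_per_line = as_rgb888_buffer(rgb_view)
print(rgb_view.flags["C_CONTIGUOUS"], packed.flags["C_CONTIGUOUS"], bytes_per_line)
```

The project code converts colors with cv.cvtColor, which returns a new array, so the extra copy mainly matters if the BGR to RGB swap is ever replaced by slicing.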

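The methods connected to these signals are defined in the main UI.py file as follows:

# Initialize the style transfer menu bar.
def style_transfer_menubar(self):
    m_init_style_transfer.init_style_transfer_menubar(self)

# Show the neural-network-based style transfer window.
def show_neural_style_transfer_widget(self):
    self.widget_neural_style_transfer.show()

# Emit the signal, which runs the function connected to it.
def signal_neural_style_emit(self):
    self.signal_neural_style_transfer.emit(self)

# Let the user pick a style image and show its path in the label.
# QFileDialog must be imported from PyQt5.QtWidgets in UI.py.
def open_file_and_change_name(self):
    self.style_file_transfer = QFileDialog.getOpenFileName(self, "Select file", self.root_path + "/part3/images", "photo(*.jpg *.png)")
    self.label_style_file.setText(self.style_file_transfer[0])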

二. 实现效果示例

在有风格学习图片的情况下,均可实现风格的迁移。

以下使用手绘插图与梵高抽象画风两种风格进行示例。

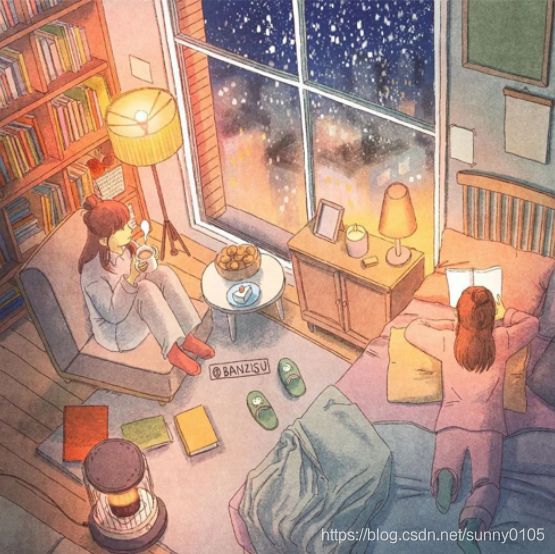

1. 手绘风格迁移

风格学习图片如下,即上文提到的style_image

等待处理图片如下,即上文提到的content_image

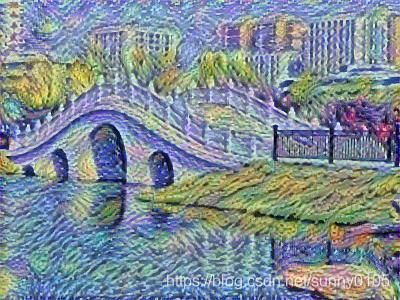

2. 梵高抽象画风迁移

风格学习图片如下,即上文提到的style_image

最终得到的结果图片如下

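Summary

I. Main work

- Set up the PyCharm development environment

- Studied and ported neural-network-based style transfer

- The image to process and the style reference image can both be chosen freely

- Built a UI for running the style transfer feature

- The finished image is displayed and saved automatically

II. Room for improvement

- Style transfer takes a long time

- The save path of the result image cannot be chosen

- The UI needs visual polish

III. Reference link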

https://blog.csdn.net/u013733326/article/details/80767079