Android AAudio Source Code Analysis (Part 1)

Tip: before reading this article, it helps to review Binder and MMAP first.

Contents

- Android AAudio Source Code Analysis (Part 1)

- Preface

- I. What Is AAudio

- II. AAudio Source Code Walkthrough

- 1. Startup

- 2. How It Works

- Summary

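Preface

At the time of writing I could not find any analysis of how Android's AAudio works internally, so I studied the Android source and wrote up some notes on the lower layers. I hope they are useful.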

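I. What Is AAudio

AAudio is a new Android C API introduced in Android O. It is designed for high-performance audio applications that require low latency. Apps communicate with AAudio by reading data from and writing data to streams.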

II. AAudio Source Code Walkthrough

1. Startup

(1) System service startup: main_audioserver.cpp

xref: /frameworks/av/media/audioserver/main_audioserver.cpp

// AAudioService should only be used in OC-MR1 and later.

// And only enable the AAudioService if the system MMAP policy explicitly allows it.

// This prevents a client from misusing AAudioService when it is not supported.

aaudio_policy_t mmapPolicy = property_get_int32(AAUDIO_PROP_MMAP_POLICY,

AAUDIO_POLICY_NEVER);

if (mmapPolicy == AAUDIO_POLICY_AUTO || mmapPolicy == AAUDIO_POLICY_ALWAYS) {

AAudioService::instantiate();

}

As the code shows, startup comes down to instantiating an AAudioService, and only when the MMAP policy is AUTO or ALWAYS.
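The gating logic above can be reduced to a small predicate. This is a minimal sketch rather than the audioserver code: `MmapPolicy` and `shouldStartAAudioService` are hypothetical names, and the numeric values are assumed to mirror `AAUDIO_POLICY_NEVER`, `AAUDIO_POLICY_AUTO` and `AAUDIO_POLICY_ALWAYS`.

```cpp
#include <cstdint>

// Hypothetical stand-ins for the aaudio_policy_t constants (values are assumed).
enum MmapPolicy : int32_t {
    kPolicyNever = 1,   // assumed to mirror AAUDIO_POLICY_NEVER
    kPolicyAuto = 2,    // assumed to mirror AAUDIO_POLICY_AUTO
    kPolicyAlways = 3,  // assumed to mirror AAUDIO_POLICY_ALWAYS
};

// Returns true when the service should be instantiated.
// Any other value keeps the service off, including the NEVER default
// that is used when the property is not set.
bool shouldStartAAudioService(int32_t mmapPolicy) {
    return mmapPolicy == kPolicyAuto || mmapPolicy == kPolicyAlways;
}
```

Because the property defaults to NEVER, a device that does not set it explicitly never starts the service.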

(2) What is AAudioService?

First, look at what it inherits from:

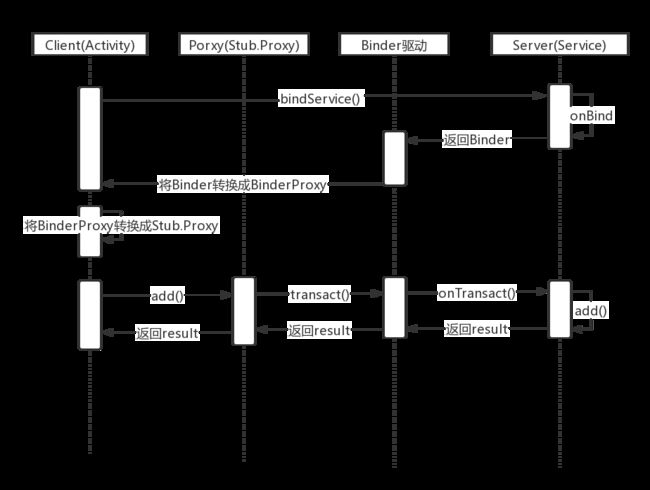

BinderService needs little explanation, but BnAAudioService and AAudioServiceInterface are worth a closer look.

xref: /frameworks/av/services/oboeservice/AAudioService.h

class AAudioService :

public BinderService<AAudioService>,

public BnAAudioService,

public aaudio::AAudioServiceInterface

{

friend class BinderService<AAudioService>;

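Start with BnAAudioService.

The Bn prefix marks it as the native (server) side of the Binder interface.

The class is declared in the IAAudioService.h header.

Every AAudio operation a client performs is wrapped into a Parcel and, after several layers of calls, arrives at onTransact(), which dispatches it to the actual target method.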

xref: /frameworks/av/media/libaaudio/src/binding/IAAudioService.h

class BnAAudioService : public BnInterface<IAAudioService> {

public:

virtual status_t onTransact(uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags = 0);

};

Which methods does the interface offer?

class IAAudioService : public IInterface {

public:

DECLARE_META_INTERFACE(AAudioService);

// Register an object to receive audio input/output change and track notifications.

// For a given calling pid, AAudio service disregards any registrations after the first.

// Thus the IAAudioClient must be a singleton per process.

virtual void registerClient(const sp<IAAudioClient>& client) = 0;

/**

* @param request info needed to create the stream

* @param configuration contains information about the created stream

* @return handle to the stream or a negative error

*/

virtual aaudio::aaudio_handle_t openStream(const aaudio::AAudioStreamRequest &request,

aaudio::AAudioStreamConfiguration &configurationOutput) = 0;

virtual aaudio_result_t closeStream(aaudio::aaudio_handle_t streamHandle) = 0;

/* Get an immutable description of the in-memory queues

* used to communicate with the underlying HAL or Service.

*/

virtual aaudio_result_t getStreamDescription(aaudio::aaudio_handle_t streamHandle,

aaudio::AudioEndpointParcelable &parcelable) = 0;

/**

* Start the flow of data.

* This is asynchronous. When complete, the service will send a STARTED event.

*/

virtual aaudio_result_t startStream(aaudio::aaudio_handle_t streamHandle) = 0;

/**

* Stop the flow of data such that start() can resume without loss of data.

* This is asynchronous. When complete, the service will send a PAUSED event.

*/

virtual aaudio_result_t pauseStream(aaudio::aaudio_handle_t streamHandle) = 0;

/**

* Stop the flow of data such that the data currently in the buffer is played.

* This is asynchronous. When complete, the service will send a STOPPED event.

*/

virtual aaudio_result_t stopStream(aaudio::aaudio_handle_t streamHandle) = 0;

/**

* Discard any data held by the underlying HAL or Service.

* This is asynchronous. When complete, the service will send a FLUSHED event.

*/

virtual aaudio_result_t flushStream(aaudio::aaudio_handle_t streamHandle) = 0;

/**

* Manage the specified thread as a low latency audio thread.

*/

virtual aaudio_result_t registerAudioThread(aaudio::aaudio_handle_t streamHandle,

pid_t clientThreadId,

int64_t periodNanoseconds) = 0;

virtual aaudio_result_t unregisterAudioThread(aaudio::aaudio_handle_t streamHandle,

pid_t clientThreadId) = 0;

};

These functions make it straightforward to use AAudio for playback, recording, and so on.

While we are here, what does IAAudioService.cpp do?

xref: /frameworks/av/media/libaaudio/src/binding/IAAudioService.cpp

/**

* This is used by the AAudio Client to talk to the AAudio Service.

*

* The order of parameters in the Parcels must match with code in AAudioService.cpp.

*/

class BpAAudioService : public BpInterface<IAAudioService>

{

public:

explicit BpAAudioService(const sp<IBinder>& impl)

: BpInterface<IAAudioService>(impl)

{

}

void registerClient(const sp<IAAudioClient>& client) override

{

Parcel data, reply;

data.writeInterfaceToken(IAAudioService::getInterfaceDescriptor());

data.writeStrongBinder(IInterface::asBinder(client));

remote()->transact(REGISTER_CLIENT, data, &reply);

}

aaudio_handle_t openStream(const aaudio::AAudioStreamRequest &request,

aaudio::AAudioStreamConfiguration &configurationOutput) override {

Parcel data, reply;

// send command

data.writeInterfaceToken(IAAudioService::getInterfaceDescriptor());

// request.dump();

request.writeToParcel(&data);

status_t err = remote()->transact(OPEN_STREAM, data, &reply);

if (err != NO_ERROR) {

ALOGE("BpAAudioService::client openStream transact failed %d", err);

return AAudioConvert_androidToAAudioResult(err);

}

// parse reply

aaudio_handle_t stream;

err = reply.readInt32(&stream);

if (err != NO_ERROR) {

ALOGE("BpAAudioService::client transact(OPEN_STREAM) readInt %d", err);

return AAudioConvert_androidToAAudioResult(err);

} else if (stream < 0) {

ALOGE("BpAAudioService::client OPEN_STREAM passed stream %d", stream);

return stream;

}

err = configurationOutput.readFromParcel(&reply);

if (err != NO_ERROR) {

ALOGE("BpAAudioService::client openStream readFromParcel failed %d", err);

closeStream(stream);

return AAudioConvert_androidToAAudioResult(err);

}

return stream;

}

...

};

// Implement an interface to the service.

// This is here so that you don't have to link with libaaudio static library.

IMPLEMENT_META_INTERFACE(AAudioService, "IAAudioService");

// The order of parameters in the Parcels must match with code in BpAAudioService

status_t BnAAudioService::onTransact(uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags) {

aaudio_handle_t streamHandle;

aaudio::AAudioStreamRequest request;

aaudio::AAudioStreamConfiguration configuration;

pid_t tid;

int64_t nanoseconds;

aaudio_result_t result;

status_t status = NO_ERROR;

ALOGV("BnAAudioService::onTransact(%i) %i", code, flags);

switch(code) {

case REGISTER_CLIENT: {

CHECK_INTERFACE(IAAudioService, data, reply);

sp<IAAudioClient> client = interface_cast<IAAudioClient>(

data.readStrongBinder());

registerClient(client);

return NO_ERROR;

} break;

case OPEN_STREAM: {

CHECK_INTERFACE(IAAudioService, data, reply);

request.readFromParcel(&data);

result = request.validate();

if (result != AAUDIO_OK) {

streamHandle = result;

} else {

//ALOGD("BnAAudioService::client openStream request dump --------------------");

//request.dump();

// Override the uid and pid from the client in case they are incorrect.

request.setUserId(IPCThreadState::self()->getCallingUid());

request.setProcessId(IPCThreadState::self()->getCallingPid());

streamHandle = openStream(request, configuration);

//ALOGD("BnAAudioService::onTransact OPEN_STREAM server handle = 0x%08X",

// streamHandle);

}

reply->writeInt32(streamHandle);

configuration.writeToParcel(reply);

return NO_ERROR;

} break;

...

default:

// ALOGW("BnAAudioService::onTransact not handled %u", code);

return BBinder::onTransact(code, data, reply, flags);

}

}

} /* namespace android */

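The file declares the BpAAudioService class, which implements these functions on the client side.

Each call ultimately goes through transact() to BnAAudioService, which dispatches on the transaction code.

The whole path relies on Binder for inter-process communication.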
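The proxy/stub split can be illustrated without Binder at all. The sketch below is a single-process imitation under stated assumptions: `FakeParcel`, `FakeService` and `FakeProxy` are hypothetical types, the transaction codes are made up, and the "transport" is a direct function call instead of a kernel driver. What it does preserve is the rule from the source comments that both sides must read and write parcel fields in the same order.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for android::Parcel: a FIFO of 32-bit values.
struct FakeParcel {
    std::vector<int32_t> values;
    size_t readPos = 0;
    void writeInt32(int32_t v) { values.push_back(v); }
    int32_t readInt32() { return values.at(readPos++); }
};

enum TransactionCode : uint32_t { OPEN_STREAM = 1, CLOSE_STREAM = 2 };

// Server side (the "Bn" role): unpack the parcel, call the real method.
class FakeService {
public:
    int32_t onTransact(uint32_t code, FakeParcel& data, FakeParcel* reply) {
        switch (code) {
            case OPEN_STREAM:
                reply->writeInt32(openStream(data.readInt32()));
                return 0;
            case CLOSE_STREAM:
                reply->writeInt32(closeStream(data.readInt32()));
                return 0;
            default:
                return -1;  // unknown transaction code
        }
    }

private:
    // Returns a positive handle, or a negative error for a bad sample rate.
    int32_t openStream(int32_t sampleRate) { return sampleRate > 0 ? mNextHandle++ : -1; }
    int32_t closeStream(int32_t handle) { return (handle > 0 && handle < mNextHandle) ? 0 : -1; }
    int32_t mNextHandle = 1;
};

// Client side (the "Bp" role): pack arguments, transact, parse the reply.
class FakeProxy {
public:
    explicit FakeProxy(FakeService& remote) : mRemote(remote) {}

    int32_t openStream(int32_t sampleRate) { return call(OPEN_STREAM, sampleRate); }
    int32_t closeStream(int32_t handle) { return call(CLOSE_STREAM, handle); }

private:
    int32_t call(uint32_t code, int32_t argument) {
        FakeParcel data, reply;
        data.writeInt32(argument);
        if (mRemote.onTransact(code, data, &reply) != 0) return -1;
        return reply.readInt32();
    }
    FakeService& mRemote;
};
```

A caller only ever sees `FakeProxy::openStream()`, just as an AAudio client only sees the BpAAudioService methods; the packing, dispatch and unpacking stay hidden behind that call.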

Now for the other base class of AAudioService: AAudioServiceInterface.

xref: /frameworks/av/media/libaaudio/src/binding/AAudioServiceInterface.h

/**

* This has the same methods as IAAudioService but without the Binder features.

*

* It allows us to abstract the Binder interface and use an AudioStreamInternal

* both in the client and in the service.

*/

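Only the comment is shown here, because the methods it declares are almost identical to those in IAAudioService, just without Binder.

It can therefore be treated as a plain interface. Both the client side and the service side inherit from AAudioServiceInterface, which gives them a common set of methods.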

(3) Other important classes

AAudioBinderClient:

xref: /frameworks/av/media/libaaudio/src/binding/AAudioBinderClient.h

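It implements the AAudioServiceInterface methods by talking to the service over Binder; this is the client side mentioned above.

Internally it obtains a reference to AAudioService from the ServiceManager (SM) and uses it once bound.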

class AAudioBinderClient : public virtual android::RefBase

, public AAudioServiceInterface

, public android::Singleton<AAudioBinderClient> {

Its base classes make its role clearer.

IAAudioClient:

xref: /frameworks/av/media/libaaudio/src/binding/IAAudioClient.h

This interface is how the service talks back to the client; its main content is stream state changes.

// Interface (our AIDL) - client methods called by service

class IAAudioClient : public IInterface {

public:

DECLARE_META_INTERFACE(AAudioClient);

virtual void onStreamChange(aaudio::aaudio_handle_t handle, int32_t opcode, int32_t value) = 0;

};

class BnAAudioClient : public BnInterface<IAAudioClient> {

public:

virtual status_t onTransact(uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags = 0);

};

} /* namespace android */

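Summary:

AAudioService leans heavily on Binder; once Binder is understood, the whole call flow follows.

2. How It Works

AAudioService exposes many methods. The following sections dissect the most important ones to show how they work.

(1) openStream:

Start with a sequence diagram:

The diagram shows the open flow, which splits into two main cases:

Shared mode: multiple audio streams can access the audio device at the same time. Latency is slightly higher than in exclusive mode.

Exclusive mode: only this stream can access the audio device and other streams are refused. The stream gets higher performance and lower latency.

Note: in exclusive mode, release the stream as soon as the device is no longer needed, so that other streams are not blocked from it.

Now for the code: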

xref: /frameworks/av/services/oboeservice/AAudioService.cpp

aaudio_handle_t AAudioService::openStream(const aaudio::AAudioStreamRequest &request,

aaudio::AAudioStreamConfiguration &configurationOutput) {

aaudio_result_t result = AAUDIO_OK;

sp<AAudioServiceStreamBase> serviceStream;

const AAudioStreamConfiguration &configurationInput = request.getConstantConfiguration();

bool sharingModeMatchRequired = request.isSharingModeMatchRequired();

aaudio_sharing_mode_t sharingMode = configurationInput.getSharingMode();

// Enforce limit on client processes.

// Each process has a maximum number of audio streams.

// Once that limit is reached, openStream() returns an error instead of continuing.

pid_t pid = request.getProcessId();

if (pid != mAudioClient.clientPid) {

int32_t count = AAudioClientTracker::getInstance().getStreamCount(pid);

if (count >= MAX_STREAMS_PER_PROCESS) {

ALOGE("openStream(): exceeded max streams per process %d >= %d",

count, MAX_STREAMS_PER_PROCESS);

return AAUDIO_ERROR_UNAVAILABLE;

}

}

// Validate the sharing mode: only SHARED or EXCLUSIVE is allowed.

if (sharingMode != AAUDIO_SHARING_MODE_EXCLUSIVE && sharingMode != AAUDIO_SHARING_MODE_SHARED) {

ALOGE("openStream(): unrecognized sharing mode = %d", sharingMode);

return AAUDIO_ERROR_ILLEGAL_ARGUMENT;

}

// EXCLUSIVE mode case.

if (sharingMode == AAUDIO_SHARING_MODE_EXCLUSIVE) {

// only trust audioserver for in service indication

// inService indicates whether the stream is opened by AAudioService itself (no external client).

bool inService = false;

if (mAudioClient.clientPid == IPCThreadState::self()->getCallingPid() &&

mAudioClient.clientUid == IPCThreadState::self()->getCallingUid()) {

inService = request.isInService();

}

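// Create the stream object dedicated to EXCLUSIVE mode and use it to open the stream.

// Two arguments are passed: a reference to this AAudioService, and inService.

// inService is used later by other operations on the stream.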

serviceStream = new AAudioServiceStreamMMAP(*this, inService);

result = serviceStream->open(request);

if (result != AAUDIO_OK) {

// Clear it so we can possibly fall back to using a shared stream.

ALOGW("openStream(), could not open in EXCLUSIVE mode");

serviceStream.clear();

}

}

// if SHARED requested or if EXCLUSIVE failed

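// Next, open the stream in SHARED mode.

// This happens when SHARED was requested, or when EXCLUSIVE failed and the request does not require the sharing mode to match.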

if (sharingMode == AAUDIO_SHARING_MODE_SHARED

|| (serviceStream.get() == nullptr && !sharingModeMatchRequired)) {

serviceStream = new AAudioServiceStreamShared(*this);

result = serviceStream->open(request);

}

// Open failed.

if (result != AAUDIO_OK) {

serviceStream.clear();

ALOGE("openStream(): failed, return %d = %s",

result, AAudio_convertResultToText(result));

return result;

} else {

// Open succeeded.

// Generate a handle for this AAudio service stream and return it for the client to use.

aaudio_handle_t handle = mStreamTracker.addStreamForHandle(serviceStream.get());

ALOGD("openStream(): handle = 0x%08X", handle);

serviceStream->setHandle(handle);

pid_t pid = request.getProcessId();

// Register the new stream with AAudioClientTracker under the client's pid.

AAudioClientTracker::getInstance().registerClientStream(pid, serviceStream);

configurationOutput.copyFrom(*serviceStream);

return handle;

}

}
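The EXCLUSIVE-then-SHARED fallback in openStream() is easy to lose among the error handling. Here is a minimal sketch of just that decision. `openWithFallback`, `OpenResult` and the two callbacks are hypothetical names; the callbacks stand in for `AAudioServiceStreamMMAP::open()` and `AAudioServiceStreamShared::open()`.

```cpp
#include <functional>

enum class SharingMode { Exclusive, Shared };

struct OpenResult {
    bool ok;
    SharingMode mode;  // meaningful only when ok is true
};

// tryExclusive / tryShared are hypothetical callbacks that return true on success.
OpenResult openWithFallback(SharingMode requested,
                            bool sharingModeMatchRequired,
                            const std::function<bool()>& tryExclusive,
                            const std::function<bool()>& tryShared) {
    if (requested == SharingMode::Exclusive && tryExclusive()) {
        return {true, SharingMode::Exclusive};
    }
    // Reached when SHARED was requested, or when EXCLUSIVE failed.
    // A failed EXCLUSIVE request may only fall back if a mode match is not required.
    if (requested == SharingMode::Shared || !sharingModeMatchRequired) {
        if (tryShared()) {
            return {true, SharingMode::Shared};
        }
    }
    return {false, requested};
}
```

The consequence for a client is that asking for EXCLUSIVE does not guarantee it: unless the request insists on a sharing mode match, the stream may come back as SHARED.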

Next, what happens in exclusive mode:

xref: /frameworks/av/services/oboeservice/AAudioServiceStreamMMAP.cpp

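// This class corresponds to an EXCLUSIVE-mode MMAP client stream.

// It has exclusive use of an AAudioServiceEndpointMMAP to communicate with the underlying device or port.

// The core of this service stream is its use of an MMAP buffer.

// It inherits from AAudioServiceStreamBase.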

class AAudioServiceStreamMMAP : public AAudioServiceStreamBase

// Open stream on HAL and pass information about the shared memory buffer back to the client.

aaudio_result_t AAudioServiceStreamMMAP::open(const aaudio::AAudioStreamRequest &request) {

sp<AAudioServiceStreamMMAP> keep(this);

// Call AAudioServiceStreamBase::open() with the EXCLUSIVE sharing mode.

aaudio_result_t result = AAudioServiceStreamBase::open(request,

AAUDIO_SHARING_MODE_EXCLUSIVE);

if (result != AAUDIO_OK) {

return result;

}

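// Promote mServiceEndpointWeak to a strong pointer to check that the endpoint is still alive.

// mServiceEndpoint can be accessed by multiple threads,

// so we access it by promoting the weak pointer to a local strong pointer, which is thread safe.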

sp<AAudioServiceEndpoint> endpoint = mServiceEndpointWeak.promote();

if (endpoint == nullptr) {

ALOGE("%s() has no endpoint", __func__);

return AAUDIO_ERROR_INVALID_STATE;

}

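// Register this AAudioServiceStreamMMAP with the endpoint.

// AAudioServiceEndpoint is used by subclasses of AAudioServiceStreamBase to communicate with the underlying audio device or port.

// Registration keeps operations on the stream thread safe.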

result = endpoint->registerStream(keep);

if (result != AAUDIO_OK) {

return result;

}

setState(AAUDIO_STREAM_STATE_OPEN);

return AAUDIO_OK;

}
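The promote-before-use idiom has a direct standard-library analogue: `std::weak_ptr::lock()` plays the role of `wp<>::promote()`. The following is a sketch with hypothetical `Endpoint` and `Stream` types, not the AAudio classes.

```cpp
#include <memory>

// Hypothetical endpoint with a single property.
struct Endpoint {
    int framesPerBurst = 192;
};

class Stream {
public:
    void attach(const std::shared_ptr<Endpoint>& endpoint) { mEndpointWeak = endpoint; }

    // Returns the endpoint's burst size, or -1 if the endpoint no longer exists.
    int framesPerBurst() const {
        // lock() yields a strong reference that keeps the endpoint alive
        // for the duration of this call, or nullptr if it is already gone.
        std::shared_ptr<Endpoint> endpoint = mEndpointWeak.lock();
        if (endpoint == nullptr) {
            return -1;
        }
        return endpoint->framesPerBurst;
    }

private:
    std::weak_ptr<Endpoint> mEndpointWeak;
};
```

Holding the strong pointer in a local variable is what makes this safe: another thread may drop its own reference at any moment, but the object cannot be destroyed while the local copy exists.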

Moving on to AAudioServiceStreamBase::open:

xref: /frameworks/av/services/oboeservice/AAudioServiceStreamBase.cpp

// Each instance of AAudioServiceStreamBase corresponds to one client stream.

// It uses a subclass of AAudioServiceEndpoint to communicate with the underlying device or port.

aaudio_result_t AAudioServiceStreamBase::open(const aaudio::AAudioStreamRequest &request,

aaudio_sharing_mode_t sharingMode) {

AAudioEndpointManager &mEndpointManager = AAudioEndpointManager::getInstance();

aaudio_result_t result = AAUDIO_OK;

mMmapClient.clientUid = request.getUserId();

mMmapClient.clientPid = request.getProcessId();

mMmapClient.packageName.setTo(String16("")); // TODO What should we do here?

// Limit scope of lock to avoid recursive lock in close().

// Check whether mUpMessageQueue is already set, i.e. whether open() has been called on this stream before.

{

std::lock_guard<std::mutex> lock(mUpMessageQueueLock);

if (mUpMessageQueue != nullptr) {

ALOGE("%s() called twice", __func__);

return AAUDIO_ERROR_INVALID_STATE;

}

// Create the shared-memory ring buffer.

mUpMessageQueue = new SharedRingBuffer();

result = mUpMessageQueue->allocate(sizeof(AAudioServiceMessage),

QUEUE_UP_CAPACITY_COMMANDS);

if (result != AAUDIO_OK) {

goto error;

}

// This is not protected by a lock because the stream cannot be

// referenced until the service returns a handle to the client.

// So only one thread can open a stream.

mServiceEndpoint = mEndpointManager.openEndpoint(mAudioService,

request,

sharingMode);

if (mServiceEndpoint == nullptr) {

ALOGE("%s() openEndpoint() failed", __func__);

result = AAUDIO_ERROR_UNAVAILABLE;

goto error;

}

// Save a weak pointer that we will use to access the endpoint.

// This weak pointer is later promoted to a strong pointer in AAudioServiceStreamMMAP.

mServiceEndpointWeak = mServiceEndpoint;

// Get the number of frames per burst.

mFramesPerBurst = mServiceEndpoint->getFramesPerBurst();

copyFrom(*mServiceEndpoint);

}

return result;

error:

close();

return result;

}

Moving on to mEndpointManager.openEndpoint:

xref: /frameworks/av/services/oboeservice/AAudioEndpointManager.cpp

sp<AAudioServiceEndpoint> AAudioEndpointManager::openEndpoint(AAudioService &audioService,

const aaudio::AAudioStreamRequest &request,

aaudio_sharing_mode_t sharingMode) {

if (sharingMode == AAUDIO_SHARING_MODE_EXCLUSIVE) {

return openExclusiveEndpoint(audioService, request);

} else {

return openSharedEndpoint(audioService, request);

}

}

This function branches on the sharingMode that was passed in.

Exclusive mode first, which means the openExclusiveEndpoint method:

sp<AAudioServiceEndpoint> AAudioEndpointManager::openExclusiveEndpoint(

AAudioService &aaudioService,

const aaudio::AAudioStreamRequest &request) {

std::lock_guard<std::mutex> lock(mExclusiveLock);

const AAudioStreamConfiguration &configuration = request.getConstantConfiguration();

// Try to find an existing endpoint.

// Search mExclusiveStreams for an endpoint that matches the current configuration.

sp<AAudioServiceEndpoint> endpoint = findExclusiveEndpoint_l(configuration);

// If we find an existing one then this one cannot be exclusive.

// If one is found, a second exclusive stream is not allowed, so return null.

if (endpoint.get() != nullptr) {

ALOGW("openExclusiveEndpoint() already in use");

// Already open so do not allow a second stream.

return nullptr;

// No matching endpoint exists, so a new one can be created.

} else {

sp<AAudioServiceEndpointMMAP> endpointMMap = new AAudioServiceEndpointMMAP(aaudioService);

ALOGV("openExclusiveEndpoint(), no match so try to open MMAP %p for dev %d",

endpointMMap.get(), configuration.getDeviceId());

// AAudioServiceEndpointMMAP inherits from AAudioServiceEndpoint.

endpoint = endpointMMap;

// Call AAudioServiceEndpointMMAP::open().

aaudio_result_t result = endpoint->open(request);

if (result != AAUDIO_OK) {

ALOGE("openExclusiveEndpoint(), open failed");

endpoint.clear();

} else {

// On success, add it to mExclusiveStreams.

mExclusiveStreams.push_back(endpointMMap);

mExclusiveOpenCount++;

}

}

if (endpoint.get() != nullptr) {

// Increment the reference count under this lock.

endpoint->setOpenCount(endpoint->getOpenCount() + 1);

}

return endpoint;

}
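The "already open means refuse" rule is the heart of exclusivity. The sketch below reproduces it with hypothetical types. As a simplification it matches endpoints by device id only, whereas the real `findExclusiveEndpoint_l()` matches against the whole stream configuration.

```cpp
#include <algorithm>
#include <memory>
#include <mutex>
#include <vector>

// Hypothetical endpoint record.
struct Endpoint {
    int deviceId = 0;
    int openCount = 0;
};

class ExclusiveEndpointManager {
public:
    // Returns a new endpoint, or nullptr if the device already has one.
    std::shared_ptr<Endpoint> open(int deviceId) {
        std::lock_guard<std::mutex> lock(mLock);
        for (const auto& existing : mEndpoints) {
            if (existing->deviceId == deviceId) {
                return nullptr;  // already in use, so it cannot be exclusive
            }
        }
        auto endpoint = std::make_shared<Endpoint>();
        endpoint->deviceId = deviceId;
        endpoint->openCount = 1;  // counted under the same lock
        mEndpoints.push_back(endpoint);
        return endpoint;
    }

    // Forgets the endpoint so the device can be opened exclusively again.
    void close(const std::shared_ptr<Endpoint>& endpoint) {
        std::lock_guard<std::mutex> lock(mLock);
        mEndpoints.erase(std::remove(mEndpoints.begin(), mEndpoints.end(), endpoint),
                         mEndpoints.end());
    }

private:
    std::mutex mLock;
    std::vector<std::shared_ptr<Endpoint>> mEndpoints;
};
```

A second `open()` for the same device returns nullptr until the first endpoint is closed, which is why an exclusive stream that is no longer needed should be released promptly.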

After several layers of calls we are nearly at the bottom.

AAudioServiceStreamMMAP uses AAudioServiceEndpointMMAP to access the MMAP device through AudioFlinger.

The open function of AAudioServiceEndpointMMAP:

xref: /frameworks/av/services/oboeservice/AAudioServiceEndpointMMAP.cpp

aaudio_result_t AAudioServiceEndpointMMAP::open(const aaudio::AAudioStreamRequest &request) {

aaudio_result_t result = AAUDIO_OK;

audio_config_base_t config;

audio_port_handle_t deviceId;

// Read a system property:

// the minimum duration of an MMAP burst, in microseconds.

int32_t burstMinMicros = AAudioProperty_getHardwareBurstMinMicros();

int32_t burstMicros = 0;

copyFrom(request.getConstantConfiguration());

// Get the direction of the requested stream: output or input.

aaudio_direction_t direction = getDirection();

// Convert to the internal value.

const audio_content_type_t contentType =

AAudioConvert_contentTypeToInternal(getContentType());

// Usage only used for OUTPUT

// Input and output are handled differently:

// for output, source takes its default value;

// for input, usage takes its default value.

const audio_usage_t usage = (direction == AAUDIO_DIRECTION_OUTPUT)

? AAudioConvert_usageToInternal(getUsage())

: AUDIO_USAGE_UNKNOWN;

const audio_source_t source = (direction == AAUDIO_DIRECTION_INPUT)

? AAudioConvert_inputPresetToAudioSource(getInputPreset())

: AUDIO_SOURCE_DEFAULT;

const audio_attributes_t attributes = {

.content_type = contentType,

.usage = usage,

.source = source,

.flags = AUDIO_FLAG_LOW_LATENCY,

.tags = ""

};

ALOGD("%s(%p) MMAP attributes.usage = %d, content_type = %d, source = %d",

__func__, this, attributes.usage, attributes.content_type, attributes.source);

// mMmapClient is of type AudioClient.

// It is set in open() and used in open() and startStream().

mMmapClient.clientUid = request.getUserId();

mMmapClient.clientPid = request.getProcessId();

mMmapClient.packageName.setTo(String16(""));

mRequestedDeviceId = deviceId = getDeviceId();

// Fill in config

// Gather the configuration of the current stream.

aaudio_format_t aaudioFormat = getFormat();

if (aaudioFormat == AAUDIO_UNSPECIFIED || aaudioFormat == AAUDIO_FORMAT_PCM_FLOAT) {

aaudioFormat = AAUDIO_FORMAT_PCM_I16;

}

config.format = AAudioConvert_aaudioToAndroidDataFormat(aaudioFormat);

int32_t aaudioSampleRate = getSampleRate();

if (aaudioSampleRate == AAUDIO_UNSPECIFIED) {

aaudioSampleRate = AAUDIO_SAMPLE_RATE_DEFAULT;

}

config.sample_rate = aaudioSampleRate;

int32_t aaudioSamplesPerFrame = getSamplesPerFrame();

if (direction == AAUDIO_DIRECTION_OUTPUT) {

config.channel_mask = (aaudioSamplesPerFrame == AAUDIO_UNSPECIFIED)

? AUDIO_CHANNEL_OUT_STEREO

: audio_channel_out_mask_from_count(aaudioSamplesPerFrame);

mHardwareTimeOffsetNanos = OUTPUT_ESTIMATED_HARDWARE_OFFSET_NANOS; // frames at DAC later

} else if (direction == AAUDIO_DIRECTION_INPUT) {

config.channel_mask = (aaudioSamplesPerFrame == AAUDIO_UNSPECIFIED)

? AUDIO_CHANNEL_IN_STEREO

: audio_channel_in_mask_from_count(aaudioSamplesPerFrame);

mHardwareTimeOffsetNanos = INPUT_ESTIMATED_HARDWARE_OFFSET_NANOS; // frames at ADC earlier

} else {

ALOGE("%s() invalid direction = %d", __func__, direction);

return AAUDIO_ERROR_ILLEGAL_ARGUMENT;

}

MmapStreamInterface::stream_direction_t streamDirection =

(direction == AAUDIO_DIRECTION_OUTPUT)

? MmapStreamInterface::DIRECTION_OUTPUT

: MmapStreamInterface::DIRECTION_INPUT;

aaudio_session_id_t requestedSessionId = getSessionId();

audio_session_t sessionId = AAudioConvert_aaudioToAndroidSessionId(requestedSessionId);

// Open HAL stream. Set mMmapStream

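// With the attributes and configuration filled in, the stream can now be opened.

// MmapStreamInterface is used to open the MMAP stream at the HAL layer.

// openMmapStream is declared in MmapStreamInterface and implemented in AudioFlinger.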

status_t status = MmapStreamInterface::openMmapStream(streamDirection,

&attributes,

&config,

mMmapClient,

&deviceId,

&sessionId,

this, // callback

mMmapStream,

&mPortHandle);

ALOGD("%s() mMapClient.uid = %d, pid = %d => portHandle = %d\n",

__func__, mMmapClient.clientUid, mMmapClient.clientPid, mPortHandle);

if (status != OK) {

ALOGE("%s() openMmapStream() returned status %d", __func__, status);

return AAUDIO_ERROR_UNAVAILABLE;

}

if (deviceId == AAUDIO_UNSPECIFIED) {

ALOGW("%s() openMmapStream() failed to set deviceId", __func__);

}

setDeviceId(deviceId);

if (sessionId == AUDIO_SESSION_ALLOCATE) {

ALOGW("%s() - openMmapStream() failed to set sessionId", __func__);

}

aaudio_session_id_t actualSessionId =

(requestedSessionId == AAUDIO_SESSION_ID_NONE)

? AAUDIO_SESSION_ID_NONE

: (aaudio_session_id_t) sessionId;

setSessionId(actualSessionId);

ALOGD("%s() deviceId = %d, sessionId = %d", __func__, getDeviceId(), getSessionId());

// Create MMAP/NOIRQ buffer.

int32_t minSizeFrames = getBufferCapacity();

if (minSizeFrames <= 0) {

// zero will get rejected

minSizeFrames = AAUDIO_BUFFER_CAPACITY_MIN;

}

// createMmapBuffer is likewise implemented in AudioFlinger.

status = mMmapStream->createMmapBuffer(minSizeFrames, &mMmapBufferinfo);

if (status != OK) {

ALOGE("%s() - createMmapBuffer() failed with status %d %s",

__func__, status, strerror(-status));

result = AAUDIO_ERROR_UNAVAILABLE;

goto error;

} else {

ALOGD("%s() createMmapBuffer() returned = %d, buffer_size = %d, burst_size %d"

", Sharable FD: %s",

__func__, status,

abs(mMmapBufferinfo.buffer_size_frames),

mMmapBufferinfo.burst_size_frames,

mMmapBufferinfo.buffer_size_frames < 0 ? "Yes" : "No");

}

setBufferCapacity(mMmapBufferinfo.buffer_size_frames);

// The audio HAL indicates if the shared memory fd can be shared outside of audioserver

// by returning a negative buffer size

// This is worth revisiting when createMmapBuffer itself is analyzed.

if (getBufferCapacity() < 0) {

// Exclusive mode can be used by client or service.

setBufferCapacity(-getBufferCapacity());

} else {

// Exclusive mode can only be used by the service because the FD cannot be shared.

uid_t audioServiceUid = getuid();

if ((mMmapClient.clientUid != audioServiceUid) &&

getSharingMode() == AAUDIO_SHARING_MODE_EXCLUSIVE) {

// Fallback is handled by caller but indicate what is possible in case

// this is used in the future

setSharingMode(AAUDIO_SHARING_MODE_SHARED);

ALOGW("%s() - exclusive FD cannot be used by client", __func__);

result = AAUDIO_ERROR_UNAVAILABLE;

goto error;

}

}

// Get information about the stream and pass it back to the caller.

setSamplesPerFrame((direction == AAUDIO_DIRECTION_OUTPUT)

? audio_channel_count_from_out_mask(config.channel_mask)

: audio_channel_count_from_in_mask(config.channel_mask));

// AAudio creates a copy of this FD and retains ownership of the copy.

// Assume that AudioFlinger will close the original shared_memory_fd.

mAudioDataFileDescriptor.reset(dup(mMmapBufferinfo.shared_memory_fd));

if (mAudioDataFileDescriptor.get() == -1) {

ALOGE("%s() - could not dup shared_memory_fd", __func__);

result = AAUDIO_ERROR_INTERNAL;

goto error;

}

mFramesPerBurst = mMmapBufferinfo.burst_size_frames;

setFormat(AAudioConvert_androidToAAudioDataFormat(config.format));

setSampleRate(config.sample_rate);

// Scale up the burst size to meet the minimum equivalent in microseconds.

// This is to avoid waking the CPU too often when the HW burst is very small

// or at high sample rates.

do {

if (burstMicros > 0) {

// skip first loop

mFramesPerBurst *= 2;

}

burstMicros = mFramesPerBurst * static_cast<int64_t>(1000000) / getSampleRate();

} while (burstMicros < burstMinMicros);

ALOGD("%s() original burst = %d, minMicros = %d, to burst = %d\n",

__func__, mMmapBufferinfo.burst_size_frames, burstMinMicros, mFramesPerBurst);

ALOGD("%s() actual rate = %d, channels = %d"

", deviceId = %d, capacity = %d\n",

__func__, getSampleRate(), getSamplesPerFrame(), deviceId, getBufferCapacity());

return result;

error:

close();

return result;

}
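Two pieces of arithmetic in this function deserve a worked example: the burst scaling loop and the negative-size convention for the buffer. The sketch below isolates both. `scaleBurst`, `decodeBufferSize` and `BufferInfo` are hypothetical names, and the do/while from the source is restructured as a plain while loop with the same result for the positive inputs asserted here.

```cpp
#include <cassert>
#include <cstdint>

// Doubles the hardware burst until it lasts at least burstMinMicros.
int32_t scaleBurst(int32_t hwBurstFrames, int32_t sampleRate, int32_t burstMinMicros) {
    assert(hwBurstFrames > 0 && sampleRate > 0);
    int32_t framesPerBurst = hwBurstFrames;
    int64_t burstMicros = framesPerBurst * static_cast<int64_t>(1000000) / sampleRate;
    while (burstMicros < burstMinMicros) {
        framesPerBurst *= 2;
        burstMicros = framesPerBurst * static_cast<int64_t>(1000000) / sampleRate;
    }
    return framesPerBurst;
}

struct BufferInfo {
    int32_t capacityFrames;    // always positive
    bool shareableWithClient;  // true if the fd may leave audioserver
};

// A negative size from the HAL means "this fd can be shared outside audioserver".
BufferInfo decodeBufferSize(int32_t bufferSizeFrames) {
    if (bufferSizeFrames < 0) {
        return {-bufferSizeFrames, true};
    }
    return {bufferSizeFrames, false};
}
```

For instance, a 48-frame hardware burst at 48000 Hz lasts 1000 microseconds. With a 2000 microsecond minimum it is doubled once to 96 frames, which halves how often the CPU has to wake up.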

Next, see how AudioFlinger opens the stream and creates the buffer.

xref: /frameworks/av/services/audioflinger/AudioFlinger.cpp

status_t AudioFlinger::openMmapStream(MmapStreamInterface::stream_direction_t direction,

const audio_attributes_t *attr,

audio_config_base_t *config,

const AudioClient& client,

audio_port_handle_t *deviceId,

audio_session_t *sessionId,

const sp<MmapStreamCallback>& callback,

sp<MmapStreamInterface>& interface,

audio_port_handle_t *handle)

{

......

if (direction == MmapStreamInterface::DIRECTION_OUTPUT) {

audio_config_t fullConfig = AUDIO_CONFIG_INITIALIZER;

fullConfig.sample_rate = config->sample_rate;

fullConfig.channel_mask = config->channel_mask;

fullConfig.format = config->format;

// Note the two flags AUDIO_OUTPUT_FLAG_MMAP_NOIRQ and AUDIO_OUTPUT_FLAG_DIRECT.

ret = AudioSystem::getOutputForAttr(attr, &io,

actualSessionId,

&streamType, client.clientPid, client.clientUid,

&fullConfig,

(audio_output_flags_t)(AUDIO_OUTPUT_FLAG_MMAP_NOIRQ |

AUDIO_OUTPUT_FLAG_DIRECT),

deviceId, &portId);

} else {

// Note the AUDIO_INPUT_FLAG_MMAP_NOIRQ flag.

ret = AudioSystem::getInputForAttr(attr, &io,

actualSessionId,

client.clientPid,

client.clientUid,

client.packageName,

config,

AUDIO_INPUT_FLAG_MMAP_NOIRQ, deviceId, &portId);

}

if (ret != NO_ERROR) {

return ret;

}

// at this stage, a MmapThread was created when openOutput() or openInput() was called by

// audio policy manager and we can retrieve it

// The io handle is used here to look up the MmapThread that was already created.

sp<MmapThread> thread = mMmapThreads.valueFor(io);

if (thread != 0) {

interface = new MmapThreadHandle(thread);

thread->configure(attr, streamType, actualSessionId, callback, *deviceId, portId);

*handle = portId;

*sessionId = actualSessionId;

} else {

// If no thread is found, release the resources.

if (direction == MmapStreamInterface::DIRECTION_OUTPUT) {

AudioSystem::releaseOutput(io, streamType, actualSessionId);

} else {

AudioSystem::releaseInput(portId);

}

ret = NO_INIT;

}

ALOGV("%s done status %d portId %d", __FUNCTION__, ret, portId);

return ret;

}

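As noted above, the MmapThread is created inside openOutput.

In short, getOutputForAttr not only returns the handle but also opens the output path along the way.

The call eventually lands in AudioFlinger's openOutput (back where we started).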

status_t AudioFlinger::openOutput(audio_module_handle_t module,

audio_io_handle_t *output,

audio_config_t *config,

audio_devices_t *devices,

const String8& address,

uint32_t *latencyMs,

audio_output_flags_t flags)

{

ALOGI("openOutput() this %p, module %d Device %#x, SamplingRate %d, Format %#08x, "

"Channels %#x, flags %#x",

this, module,

(devices != NULL) ? *devices : 0,

config->sample_rate,

config->format,

config->channel_mask,

flags);

if (devices == NULL || *devices == AUDIO_DEVICE_NONE) {

return BAD_VALUE;

}

Mutex::Autolock _l(mLock);

sp<ThreadBase> thread = openOutput_l(module, output, config, *devices, address, flags);

if (thread != 0) {

// Here is the flag again: AUDIO_OUTPUT_FLAG_MMAP_NOIRQ.

if ((flags & AUDIO_OUTPUT_FLAG_MMAP_NOIRQ) == 0) {

......

}

} else {

// Finally we get the thread that has already been created.

// But where was it created?

MmapThread *mmapThread = (MmapThread *)thread.get();

mmapThread->ioConfigChanged(AUDIO_OUTPUT_OPENED);

}

return NO_ERROR;

}

return NO_INIT;

}

The MmapThread is created in AudioFlinger's openOutput_l function.

Let's take a look:

sp<AudioFlinger::ThreadBase> AudioFlinger::openOutput_l(audio_module_handle_t module,

audio_io_handle_t *output,

audio_config_t *config,

audio_devices_t devices,

const String8& address,

audio_output_flags_t flags)

{

AudioHwDevice *outHwDev = findSuitableHwDev_l(module, devices);

if (outHwDev == NULL) {

return 0;

}

......

AudioStreamOut *outputStream = NULL;

status_t status = outHwDev->openOutputStream(

&outputStream,

*output,

devices,

flags,

config,

address.string());

mHardwareStatus = AUDIO_HW_IDLE;

if (status == NO_ERROR) {

// The same flag again.

// The MmapPlaybackThread is created here based on it.

if (flags & AUDIO_OUTPUT_FLAG_MMAP_NOIRQ) {

sp<MmapPlaybackThread> thread =

new MmapPlaybackThread(this, *output, outHwDev, outputStream,

devices, AUDIO_DEVICE_NONE, mSystemReady);

// Add it to the collection of MMAP threads.

mMmapThreads.add(*output, thread);

ALOGV("openOutput_l() created mmap playback thread: ID %d thread %p",

*output, thread.get());

return thread;

} else {

......

}

}

return 0;

}
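The branch on the output flags is an ordinary bitmask test. As a sketch, with flag values that are assumed to mirror `audio_output_flags_t` and a hypothetical `threadKindForFlags` helper:

```cpp
#include <cstdint>
#include <string>

// Illustrative constants; the values are assumptions, not taken from the article.
constexpr uint32_t kFlagDirect = 0x1;        // assumed AUDIO_OUTPUT_FLAG_DIRECT
constexpr uint32_t kFlagMmapNoIrq = 0x4000;  // assumed AUDIO_OUTPUT_FLAG_MMAP_NOIRQ

// Names the kind of thread that would be created for a set of output flags.
std::string threadKindForFlags(uint32_t flags) {
    if (flags & kFlagMmapNoIrq) {
        return "MmapPlaybackThread";
    }
    // The non-MMAP branches are elided in the listing above,
    // so they are lumped together here.
    return "other playback thread";
}
```

Since openMmapStream() always passes MMAP_NOIRQ together with DIRECT, an AAudio MMAP stream always takes the first branch.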

Opening the output stream in turn calls AudioHwDevice's openOutputStream method.

xref: /frameworks/av/services/audioflinger/AudioHwDevice.cpp

status_t AudioHwDevice::openOutputStream(

AudioStreamOut **ppStreamOut,

audio_io_handle_t handle,

audio_devices_t devices,

audio_output_flags_t flags,

struct audio_config *config,

const char *address)

{

struct audio_config originalConfig = *config;

AudioStreamOut *outputStream = new AudioStreamOut(this, flags);

// Call down into the HAL layer to continue opening the path.

status_t status = outputStream->open(handle, devices, config, address);

......

*ppStreamOut = outputStream;

return status;

}

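That covers the whole flow at a high level.

The flags to remember are:

AUDIO_OUTPUT_FLAG_MMAP_NOIRQ

AUDIO_INPUT_FLAG_MMAP_NOIRQ

They play a key role throughout the path-opening flow.

Only exclusive mode was analyzed above. Shared mode is similar, and readers can trace it themselves with the help of the sequence diagram.

The other AAudioService methods will be covered in later parts.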

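Summary

This article took a detailed look at the AAudioService service and its openStream method.

(1) On the MMAP path analyzed here, AAudio does not go through AudioTrack or AudioRecord.

(2) AAudio still relies on AudioFlinger to open the audio path and create the playback thread.

(3) The core idea of AAudio is memory mapping, which reduces the number of data copies, improves audio transfer efficiency, and lowers latency.

(4) After AAudioServiceStreamMMAP opens a stream it holds an AAudioServiceEndpointMMAP object, and all later operations on the stream go through that object.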
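To make point (3) concrete, here is a small POSIX sketch of why a shared mapping avoids copies. It is not AAudio code: it runs in a single process, uses a temporary file as the backing store, and `roundTripThroughSharedMapping` is a hypothetical helper. The `dup()` call loosely mirrors the way AAudioServiceEndpointMMAP duplicates `shared_memory_fd`.

```cpp
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <string>
#include <sys/mman.h>
#include <unistd.h>

// Writes `message` through one mapping and reads it back through a second
// mapping made from a dup()'ed descriptor. Returns "" on any failure.
std::string roundTripThroughSharedMapping(const std::string& message) {
    const size_t size = 4096;
    if (message.size() >= size) return "";

    FILE* file = tmpfile();  // anonymous backing file, opened read/write
    if (file == nullptr) return "";
    int fd = fileno(file);
    if (ftruncate(fd, size) != 0) {
        fclose(file);
        return "";
    }
    int fd2 = dup(fd);  // a second descriptor for the same memory
    if (fd2 < 0) {
        fclose(file);
        return "";
    }

    void* writer = mmap(nullptr, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    void* reader = mmap(nullptr, size, PROT_READ, MAP_SHARED, fd2, 0);

    std::string result;
    if (writer != MAP_FAILED && reader != MAP_FAILED) {
        // One write into the shared pages; the reader sees it with no
        // read()/write() system call and no intermediate buffer.
        memcpy(writer, message.c_str(), message.size() + 1);
        result = static_cast<const char*>(reader);
    }

    if (writer != MAP_FAILED) munmap(writer, size);
    if (reader != MAP_FAILED) munmap(reader, size);
    close(fd2);
    fclose(file);
    return result;
}
```

In the AAudio case the two mappings live in different places (the client process and the audio hardware side), but the principle is the same: both ends address the same pages, so audio data is written once and consumed in place.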