Reposted from: https://www.cnblogs.com/liugh/p/7482571.html

Reference: https://ci.apache.org/projects/flink/flink-docs-release-1.3/setup/jobmanager_high_availability.html#bootstrap-zookeeper

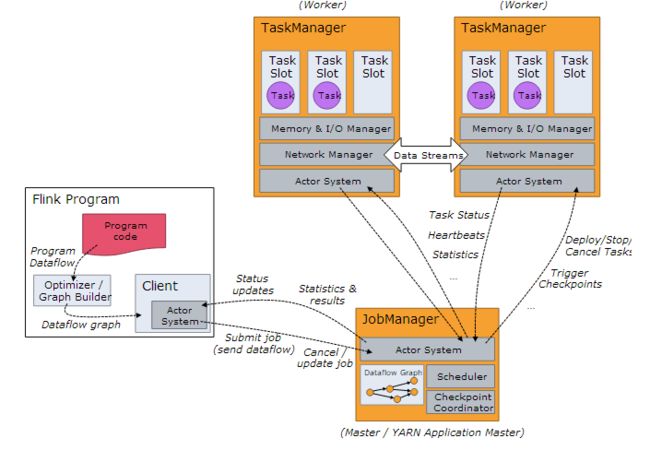

Flink典型的任务处理过程如下所示:

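It is easy to see that the JobManager is a single point of failure (SPOF). Making Flink highly available therefore mainly means making the JobManager highly available. How this is done depends on how the Flink cluster is deployed (Standalone or on YARN); this article covers Standalone mode.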

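JobManager HA is implemented with ZooKeeper, so a ZooKeeper cluster has to be set up first. The HA metadata is persisted in HDFS (ZooKeeper only keeps pointers to it), so a Hadoop cluster is needed as well. The last step is to edit Flink's configuration files.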

1. Deploy the ZooKeeper cluster

Reference post: http://www.cnblogs.com/liugh/p/6671460.html

2. Deploy the Hadoop cluster

Reference post: http://www.cnblogs.com/liugh/p/6624872.html

3. Deploy the Flink cluster

Reference post: http://www.cnblogs.com/liugh/p/7446295.html

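4. Edit conf/flink-conf.yaml

4.1 Required settings

```yaml
high-availability: zookeeper
high-availability.zookeeper.quorum: DEV-SH-MAP-01:2181,DEV-SH-MAP-02:2181,DEV-SH-MAP-03:2181
high-availability.zookeeper.storageDir: hdfs:///flink/ha
```

`high-availability: zookeeper` turns on ZooKeeper-based HA, the quorum lists the ZooKeeper servers, and the storage directory is the HDFS path where JobManager metadata is persisted.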
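When the same HA keys have to be applied on several machines, a small shell function can write them idempotently. This is a minimal sketch, not something shipped with Flink: the name `set_ha_conf` is hypothetical, and it assumes flink-conf.yaml is a flat `key: value` file like the default one. Besides the quorum and storage directory it also writes `high-availability: zookeeper`, the switch that enables ZooKeeper-based HA, and it removes earlier copies of those three keys first while leaving other settings (such as the optional cluster ID) alone.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Flink): write the ZooKeeper HA keys into a
# flink-conf.yaml file. Earlier copies of the same three keys are removed first,
# so running it twice still leaves exactly one copy of each.
set_ha_conf() {
  local conf="$1" quorum="$2" storage_dir="$3"
  local tmp
  tmp="$(mktemp)" || return 1
  # Keep every line except previous values of the keys we are about to set
  grep -v -E '^(high-availability|high-availability\.zookeeper\.quorum|high-availability\.zookeeper\.storageDir):' \
    "$conf" > "$tmp" || true
  {
    echo "high-availability: zookeeper"
    echo "high-availability.zookeeper.quorum: ${quorum}"
    echo "high-availability.zookeeper.storageDir: ${storage_dir}"
  } >> "$tmp"
  # Copy back with cat so the original file keeps its permissions
  cat "$tmp" > "$conf"
  rm -f "$tmp"
}
```

For the cluster in this article it would be called as `set_ha_conf "$FLINK_HOME/conf/flink-conf.yaml" DEV-SH-MAP-01:2181,DEV-SH-MAP-02:2181,DEV-SH-MAP-03:2181 hdfs:///flink/ha`.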

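4.2 Optional settings

```yaml
high-availability.zookeeper.path.cluster-id: /map_flink
```

This sets the ZooKeeper path (cluster ID) under which this cluster's HA data is kept, which keeps it separate from other Flink clusters sharing the same ZooKeeper quorum.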

After editing, copy flink-conf.yaml to the other nodes with scp.
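The same scp step comes up again for masters and zoo.cfg, so a loop over the remaining hosts saves typing. A minimal sketch under stated assumptions: passwordless SSH as root (the article works as root), Flink installed at the same absolute path on every node, and hypothetical names `sync_file` and `DRY_RUN`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy one file to the same absolute path on each given host.
# Assumes passwordless SSH for root and an identical install path on all nodes.
# With DRY_RUN=1 the scp commands are printed instead of executed.
sync_file() {
  local file="$1"; shift
  local host
  case "$file" in
    /*) ;;  # absolute path: the remote path will be identical
    *) echo "sync_file: please pass an absolute path" >&2; return 1 ;;
  esac
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp ${file} root@${host}:${file}"
    else
      scp "$file" "root@${host}:${file}" || return 1
    fi
  done
}
```

Run from DEV-SH-MAP-01, `sync_file "$FLINK_HOME/conf/flink-conf.yaml" DEV-SH-MAP-02 DEV-SH-MAP-03` pushes the file to the other two nodes; prefixing the call with `DRY_RUN=1` shows the commands first.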

5. Edit conf/masters

List the hosts that should run a JobManager, each with its web UI port:

```
dev-sh-map-01:8081
dev-sh-map-02:8081
```

After editing, copy the masters file to the other nodes with scp.
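Since conf/masters is just one `host:port` line per JobManager, it can also be generated from a host list. A minimal sketch; `write_masters` is a hypothetical helper, and 8081 is simply the web UI port used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: (re)write conf/masters with one "host:port" line per
# JobManager host. The port is the JobManager web UI port (8081 here).
write_masters() {
  local file="$1" port="$2"; shift 2
  local host
  : > "$file"   # truncate, or create if missing
  for host in "$@"; do
    printf '%s:%s\n' "$host" "$port" >> "$file"
  done
}
```

For example: `write_masters "$FLINK_HOME/conf/masters" 8081 dev-sh-map-01 dev-sh-map-02`.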

6. Edit conf/zoo.cfg

```
server.1=DEV-SH-MAP-01:2888:3888
server.2=DEV-SH-MAP-02:2888:3888
server.3=DEV-SH-MAP-03:2888:3888
```

After editing, copy the zoo.cfg file to the other nodes with scp.
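Each `server.N=host:2888:3888` entry gives a ZooKeeper peer its id N, the port followers use to talk to the leader (2888) and the port used for leader election (3888). A sketch that regenerates these entries from a host list, numbering hosts from 1 in the order given; `set_zoo_servers` is a hypothetical helper and the two ports are the values from the article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the server.N entries in Flink's conf/zoo.cfg.
# Hosts are numbered 1, 2, 3, ... in argument order; 2888 is the quorum
# (follower-to-leader) port and 3888 the leader-election port.
set_zoo_servers() {
  local cfg="$1"; shift
  local tmp host id=1
  tmp="$(mktemp)" || return 1
  grep -v '^server\.' "$cfg" > "$tmp" || true   # keep all other settings
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$id" "$host" >> "$tmp"
    id=$((id + 1))
  done
  cat "$tmp" > "$cfg"
  rm -f "$tmp"
}
```

For this cluster: `set_zoo_servers "$FLINK_HOME/conf/zoo.cfg" DEV-SH-MAP-01 DEV-SH-MAP-02 DEV-SH-MAP-03`.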

7. Start HDFS

```
[root@DEV-SH-MAP-01 conf]# start-dfs.sh
Starting namenodes on [DEV-SH-MAP-01]
DEV-SH-MAP-01: starting namenode, logging to /usr/hadoop-2.7.3/logs/hadoop-root-namenode-DEV-SH-MAP-01.out
DEV-SH-MAP-02: starting datanode, logging to /usr/hadoop-2.7.3/logs/hadoop-root-datanode-DEV-SH-MAP-02.out
DEV-SH-MAP-03: starting datanode, logging to /usr/hadoop-2.7.3/logs/hadoop-root-datanode-DEV-SH-MAP-03.out
DEV-SH-MAP-01: starting datanode, logging to /usr/hadoop-2.7.3/logs/hadoop-root-datanode-DEV-SH-MAP-01.out
Starting secondary namenodes [0.0.0.0]
0.0.0.0: starting secondarynamenode, logging to /usr/hadoop-2.7.3/logs/hadoop-root-secondarynamenode-DEV-SH-MAP-01.out
```
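Because the HA metadata goes to the storage directory configured in flink-conf.yaml, it can be worth checking, once HDFS is up, that this path is usable before Flink starts. A minimal sketch: `conf_value` and `check_ha_storage` are hypothetical helpers, only the standard `hdfs dfs -mkdir -p` and `hdfs dfs -test -d` commands are used, and it assumes `fs.defaultFS` points at this cluster's NameNode so that `hdfs:///flink/ha` resolves. Creating the directory in advance is a precaution, not a step the article requires.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a "key: value" entry in flink-conf.yaml.
conf_value() {
  local conf="$1" key="$2"
  # Match the line that starts with "<key>: " and print everything after it
  awk -v k="$key" 'index($0, k ": ") == 1 { print substr($0, length(k) + 3); exit }' "$conf"
}

# Hypothetical helper: make sure the HA storage directory exists on HDFS.
check_ha_storage() {
  local conf="$1" dir
  dir="$(conf_value "$conf" high-availability.zookeeper.storageDir)"
  [ -n "$dir" ] || { echo "storageDir not set in $conf" >&2; return 1; }
  hdfs dfs -mkdir -p "$dir" && hdfs dfs -test -d "$dir" && echo "HA storage OK: $dir"
}
```

On any node with the Hadoop client on the PATH: `check_ha_storage "$FLINK_HOME/conf/flink-conf.yaml"`.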

8. Start the ZooKeeper cluster

```
[root@DEV-SH-MAP-01 conf]# start-zookeeper-quorum.sh
Starting zookeeper daemon on host DEV-SH-MAP-01.
Starting zookeeper daemon on host DEV-SH-MAP-02.
Starting zookeeper daemon on host DEV-SH-MAP-03.
```

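Note: the start-zookeeper-quorum.sh used here is the script in FLINK_HOME/bin, not one shipped with ZooKeeper; it starts a ZooKeeper peer on each host listed in conf/zoo.cfg.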
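To confirm that every peer in the quorum is actually serving, each `host:port` from `high-availability.zookeeper.quorum` can be sent ZooKeeper's `ruok` four-letter command, to which a healthy server answers `imok`. A sketch under assumptions: `nc` (netcat) is installed, four-letter commands are enabled on the servers, and `zk_quorum_hosts`/`check_zk` are hypothetical helper names. Some netcat variants may need an extra flag to exit after the reply.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each "host:port" listed in
# high-availability.zookeeper.quorum, one per line.
zk_quorum_hosts() {
  local conf="$1"
  awk 'index($0, "high-availability.zookeeper.quorum: ") == 1 {
         sub(/^[^ ]* /, "")   # drop the key and the space after it
         gsub(/,/, "\n")      # one host:port per line
         print
         exit
       }' "$conf"
}

# Hypothetical helper: send "ruok" to every quorum member (requires nc).
check_zk() {
  local hp reply
  for hp in $(zk_quorum_hosts "$1"); do
    reply="$(echo ruok | nc "${hp%%:*}" "${hp##*:}" 2>/dev/null)"
    if [ "$reply" = imok ]; then
      echo "${hp} ok"
    else
      echo "${hp} not responding"
    fi
  done
}
```

Usage: `check_zk "$FLINK_HOME/conf/flink-conf.yaml"`.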

9. Start the Flink cluster

```
[root@DEV-SH-MAP-01 conf]# start-cluster.sh
Starting HA cluster with 2 masters.
Starting jobmanager daemon on host DEV-SH-MAP-01.
Starting jobmanager daemon on host DEV-SH-MAP-02.
Starting taskmanager daemon on host DEV-SH-MAP-01.
Starting taskmanager daemon on host DEV-SH-MAP-02.
Starting taskmanager daemon on host DEV-SH-MAP-03.
```

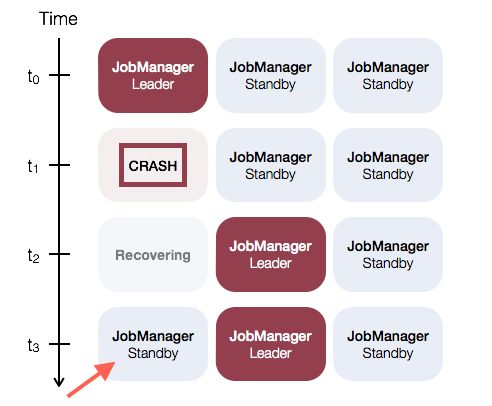

As shown, two JobManagers are running: one is the leader and the other is on standby.

10. Test HA

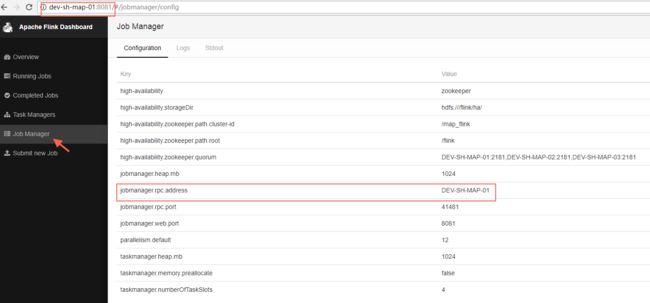

10.1 Open the leader's web UI:

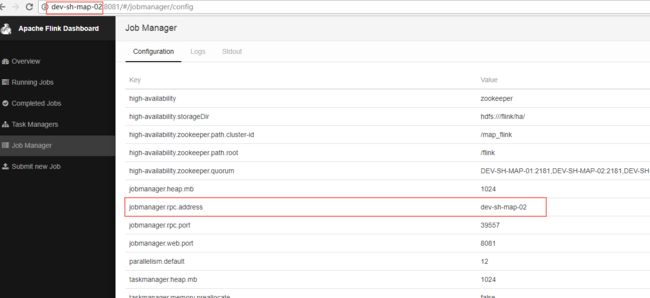

10.2 Open the standby's web UI

The browser is redirected to the leader's web UI.

10.3 Kill the leader

```
[root@DEV-SH-MAP-01 flink-1.3.2]# jps
14240 Jps
34929 TaskManager
33106 DataNode
33314 SecondaryNameNode
34562 JobManager
33900 FlinkZooKeeperQuorumPeer
32991 NameNode
[root@DEV-SH-MAP-01 flink-1.3.2]# kill -9 34562
[root@DEV-SH-MAP-01 flink-1.3.2]# jps
34929 TaskManager
33106 DataNode
33314 SecondaryNameNode
14275 Jps
33900 FlinkZooKeeperQuorumPeer
32991 NameNode
```

Open the Flink web UI again: the leader has switched to the other JobManager.
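The jps and kill steps of this test can be wrapped so the failover test is easy to repeat. A minimal sketch: `jobmanager_pid` and `kill_local_jobmanager` are hypothetical helpers; the first reads `jps` output (`<pid> <main class>`) on stdin and relies on jps listing the process as `JobManager`, as in the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output ("<pid> <main class>") on stdin and
# print the PID of every process whose main class is shown as "JobManager".
jobmanager_pid() {
  awk '$2 == "JobManager" { print $1 }'
}

# Hypothetical helper: kill the local JobManager (run on the current leader's host).
kill_local_jobmanager() {
  local pid
  pid="$(jps | jobmanager_pid)"
  if [ -z "$pid" ]; then
    echo "no JobManager running on $(hostname)" >&2
    return 1
  fi
  kill -9 $pid   # left unquoted on purpose: there may be more than one PID
}
```

After `kill_local_jobmanager` returns, `jps | jobmanager_pid` on that host should print nothing.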

10.4 Restart the killed JobManager

```
[root@DEV-SH-MAP-01 bin]# jobmanager.sh start cluster DEV-SH-MAP-01
Starting jobmanager daemon on host DEV-SH-MAP-01.
```

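Checking the web UI again shows that the previously killed JobManager is running again but stays on standby: the current leader does not switch back. This matches the Flink schematic below: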

11. Known issue

When the JobManager fails over, the TaskManagers are restarted along with it.