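How ELK distributed log collection works

- Install the Logstash log collector on every node in the server cluster

- Each node sends its logs to Logstash

- Logstash formats the logs as JSON, creates a separate index for each day, and writes them to Elasticsearch

- Logs are then queried from the browser through Kibana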
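To make the per-day index naming concrete, here is a minimal shell sketch that derives the index name for a given moment. It assumes the `es-` prefix and the `%{+YYYY.MM.dd}` pattern from the Logstash output configuration used later in this post, and it assumes GNU `date` (for `-d @epoch`). `daily_index_name` is a hypothetical helper, not part of Logstash. Logstash formats the date from the event's `@timestamp`, which is in UTC, so the sketch uses UTC as well.

```shell
#!/bin/sh
# Hypothetical helper: print the daily index name for a Unix timestamp
# (seconds since the epoch), or for the current time when no argument is given.
daily_index_name() {
  if [ -n "${1:-}" ]; then
    date -u -d "@$1" +es-%Y.%m.%d   # GNU date syntax (assumption)
  else
    date -u +es-%Y.%m.%d
  fi
}

# 1564643297 is 2019-08-01T07:08:17Z
daily_index_name 1564643297   # prints es-2019.08.01
```

Because the date is taken in UTC, an event logged shortly after local midnight in a UTC+8 zone still lands in the previous day's index.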

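Environment setup

- Install Elasticsearch

- Install Logstash

- Install Kibana

Logstash workflow

Logstash converts each server's log files to JSON and stores them in ES, creating the index and documents as it goes.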
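As a rough illustration of what "converting a log line to JSON" means, the sketch below wraps one raw line in a JSON document of the kind that ends up in an index. The field names `message`, `type`, and `path` mirror the Logstash output shown later in this post. `json_escape` and `to_event_json` are hypothetical helpers, the file path is made up, and the escaping covers only backslashes and double quotes, so this is not a general JSON encoder.

```shell
#!/bin/sh
# Hypothetical helper: escape backslashes and double quotes only.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Hypothetical helper: build a one-line JSON event from
# a raw log line ($1), an event type ($2), and a source path ($3).
to_event_json() {
  printf '{"message":"%s","type":"%s","path":"%s"}\n' \
    "$(json_escape "$1")" "$(json_escape "$2")" "$(json_escape "$3")"
}

# Example with an illustrative path (assumption, not from the original setup).
to_event_json 'recovered [3] indices into cluster_state' elasticsearch /var/log/app.log
```

Logstash adds further fields on its own, such as `@timestamp`, `@version`, and `host`, which the sketch leaves out.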

1. Setting up Elasticsearch

tar -zxvf elasticsearch-6.4.3.tar.gz

cd elasticsearch-6.4.3/config/

vi elasticsearch.yml

Change the following settings:

- network.host: 203.75.156.116 (replace with your own IP address)

- http.port: 9200

cd ../bin/

# Start elasticsearch

./elasticsearch

Note: Elasticsearch will not start when run as root; use a regular user account.

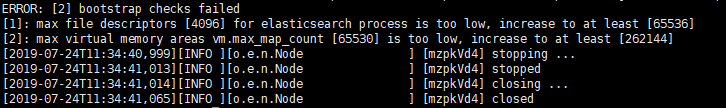

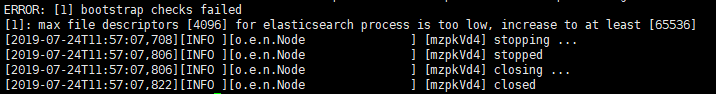

If startup fails with an error saying that vm.max_map_count is too low, raise it:

vi /etc/sysctl.conf

vm.max_map_count=655360

sysctl -p
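After `sysctl -p`, it can be worth confirming that the kernel picked up the new value. The sketch below reads the setting from `/proc` and compares it with 262144, which to my knowledge is the minimum the Elasticsearch bootstrap check asks for. Treat that threshold and the hypothetical helper `map_count_ok` as assumptions.

```shell
#!/bin/sh
REQUIRED_MAP_COUNT=262144   # assumed minimum; check it against your own error message

# Hypothetical helper: succeed when the current value ($1) reaches the minimum ($2).
map_count_ok() {
  [ "$1" -ge "$2" ]
}

# Fall back to 0 when /proc is unavailable (for example, outside Linux).
current=$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)

if map_count_ok "$current" "$REQUIRED_MAP_COUNT"; then
  echo "vm.max_map_count=$current is high enough"
else
  echo "vm.max_map_count=$current is below $REQUIRED_MAP_COUNT"
fi
```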

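If it then fails with the 4096 error (a resource limit that is too low), raise the limits:

vi /etc/security/limits.conf

# Add the following four lines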

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

Reboot the server so that the new limits take effect.
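Once the server is back up, the open-file limit can be checked from the shell of the account that runs Elasticsearch. This is a minimal sketch, assuming a required minimum of 65536 open files (the soft `nofile` value configured above). `limit_ok` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a limit ($1, a number or "unlimited")
# is at least the required minimum ($2). Non-numeric input counts as a failure.
limit_ok() {
  [ "$1" = "unlimited" ] && return 0
  [ "$1" -ge "$2" ] 2>/dev/null
}

soft=$(ulimit -Sn)   # soft limit on open files for this shell
hard=$(ulimit -Hn)   # hard limit on open files for this shell
echo "nofile soft=$soft hard=$hard"

if limit_ok "$soft" 65536; then
  echo "open-file limit looks sufficient"
else
  echo "open-file limit is still too low; re-check /etc/security/limits.conf"
fi
```

Run it as the Elasticsearch user rather than root, since limits are applied per login session.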

Open ip:9200 in a browser. If Elasticsearch returns its status response, the installation succeeded.
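Elasticsearch can take a little while to open port 9200, so the first request may fail even when nothing is wrong. The sketch below polls the HTTP endpoint with `curl` until it answers or the attempts run out. `wait_for_http` is a hypothetical helper, and the address in the usage comment is the example IP from this post; substitute your own.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers successfully.
# $1 = URL, $2 = number of attempts (default 30), $3 = seconds between attempts (default 2)
wait_for_http() {
  wf_url=$1
  wf_attempts=${2:-30}
  wf_delay=${3:-2}
  wf_i=0
  while [ "$wf_i" -lt "$wf_attempts" ]; do
    # -f: treat HTTP errors as failures, -sS: quiet but show errors, --max-time: per-request cap
    if curl -fsS --max-time 5 "$wf_url" >/dev/null 2>&1; then
      return 0
    fi
    wf_i=$((wf_i + 1))
    sleep "$wf_delay"
  done
  return 1
}

# Usage (replace the address with your own):
#   wait_for_http "http://203.75.156.116:9200/" && curl -s "http://203.75.156.116:9200/"
```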

2. Setting up Logstash

- Configuration

tar -zxvf logstash-6.4.3.tar.gz

cd logstash-6.4.3/config/

# Create a new conf file

vim myconf.conf

input {

# Read log entries from a file (they are echoed to the console by the output below)

file {

path => "/home/zhanhao/software/ELK/elasticsearch-6.4.3/logs/elasticsearch.log"

codec => "json" # Read the log as JSON

type => "elasticsearch"

start_position => "beginning"

}

}

# filter {

#

# }

output {

# Standard output

# stdout {}

# Pretty-print each event using the rubydebug codec

stdout { codec => rubydebug }

elasticsearch {

hosts => ["203.75.156.116:9200"]

index => "es-%{+YYYY.MM.dd}"

}

}

- Start

cd logstash-6.4.3/bin/

./logstash -f ../config/myconf.conf

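If output like the following appears, Logstash is working (Elasticsearch must already be running). The _jsonparsefailure tag appears because elasticsearch.log is plain text rather than JSON while the input uses the json codec; the raw line is still kept in the message field.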

...

{

"tags" => [

[0] "_jsonparsefailure"

],

"@version" => "1",

"path" => "/home/zhanhao/software/ELK/elasticsearch-6.4.3/logs/elasticsearch.log",

"message" => "[2019-08-01T15:08:17,819][INFO ][o.e.g.GatewayService ] [node-1] recovered [3] indices into cluster_state",

"@timestamp" => 2019-08-01T07:08:17.998Z,

"type" => "elasticsearch",

"host" => "203-75-156-116.HINET-IP.hinet.net"

}

{

"tags" => [

[0] "_jsonparsefailure"

],

"@version" => "1",

"path" => "/home/zhanhao/software/ELK/elasticsearch-6.4.3/logs/elasticsearch.log",

"message" => "[2019-08-01T15:08:45,399][INFO ][o.e.c.m.MetaDataIndexTemplateService] [node-1] adding template [logstash] for index patterns [logstash-*]",

"@timestamp" => 2019-08-01T07:08:46.055Z,

"type" => "elasticsearch",

"host" => "203-75-156-116.HINET-IP.hinet.net"

}

{

"tags" => [

[0] "_jsonparsefailure"

],

"@version" => "1",

"path" => "/home/zhanhao/software/ELK/elasticsearch-6.4.3/logs/elasticsearch.log",

"message" => "[2019-08-01T15:08:47,960][INFO ][o.e.c.m.MetaDataCreateIndexService] [node-1] [es-2019.08.01] creating index, cause [auto(bulk api)], templates [], shards [5]/[1], mappings []",

"@timestamp" => 2019-08-01T07:08:48.065Z,

"type" => "elasticsearch",

"host" => "203-75-156-116.HINET-IP.hinet.net"

}

{

"tags" => [

[0] "_jsonparsefailure"

],

"@version" => "1",

"path" => "/home/zhanhao/software/ELK/elasticsearch-6.4.3/logs/elasticsearch.log",

"message" => "[2019-08-01T15:08:48,339][INFO ][o.e.c.m.MetaDataMappingService] [node-1] [es-2019.08.01/hstmRsyvT5qG6NSIf-474w] create_mapping [doc]",

"@timestamp" => 2019-08-01T07:08:49.069Z,

"type" => "elasticsearch",

"host" => "203-75-156-116.HINET-IP.hinet.net"

}
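Each `message` above follows the same bracketed layout: timestamp, level, logger, node name, then free text. The shell sketch below splits one such line into those five fields to illustrate the structure. Inside Logstash the same job would normally go in the commented-out `filter` block (for example with a grok filter), which this post does not configure. `parse_es_log_line` is a hypothetical helper, and the pattern is an assumption based only on the four sample lines.

```shell
#!/bin/sh
# Hypothetical helper: split an Elasticsearch log line into
# timestamp|level|logger|node|message. Prints nothing if the line does not match.
parse_es_log_line() {
  printf '%s\n' "$1" | sed -n -E \
    's/^\[([^]]+)\]\[([A-Z]+) *\]\[([^] ]+) *\] \[([^]]+)\] (.*)$/\1|\2|\3|\4|\5/p'
}

parse_es_log_line '[2019-08-01T15:08:17,819][INFO ][o.e.g.GatewayService ] [node-1] recovered [3] indices into cluster_state'
# prints: 2019-08-01T15:08:17,819|INFO|o.e.g.GatewayService|node-1|recovered [3] indices into cluster_state
```

With fields separated like this, the level or logger could be queried directly in Kibana instead of searching the raw text.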

3. Setting up Kibana

tar -zxvf kibana-6.4.3-linux-x86_64.tar.gz

cd kibana-6.4.3-linux-x86_64/config/

vi kibana.yml

# Change the following settings

server.port: 5601

server.host: "<your-ip>"

elasticsearch.url: "http://<your-ip>:9200"

cd ../bin/

# Start

./kibana

Open ip:5601 in a browser

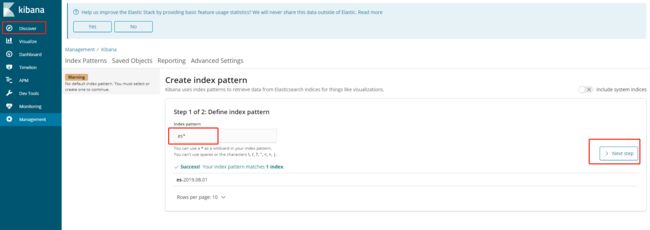

四、视图化界面

以时间戳创建

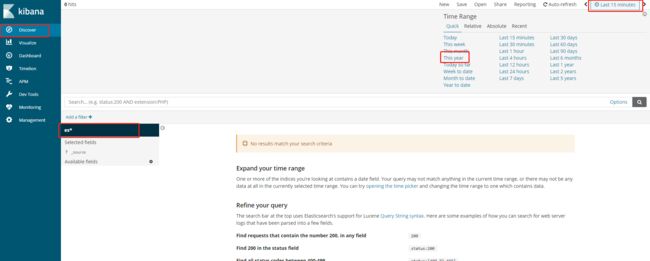

创建完成效果

没有找到日志数据,扩大查询范围