Introduction to Multilayer Perceptrons

The Multilayer Perceptron Model

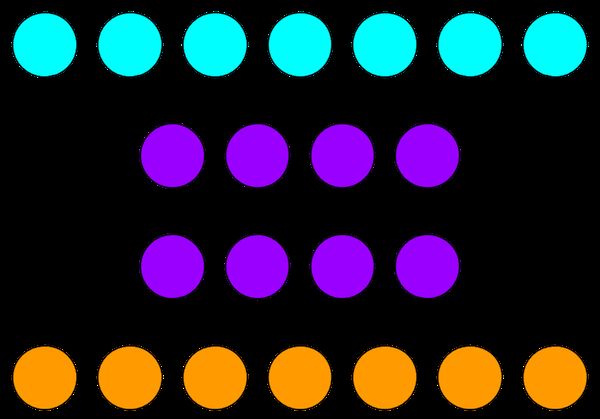

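Here we define a model with two hidden layers of 256 units each. The input has 784 features (a flattened 28*28 MNIST image) and the output has 10 units, one per digit class.

Activation function

ReLU is a common choice: relu(x) = max(x, 0). This example does not apply any activation function to the hidden layers.

softmax (same as in the earlier logistic regression example)

Loss function: cross-entropy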
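To make the three building blocks concrete, here is a minimal NumPy sketch of ReLU, softmax, and cross-entropy. The helper names and the toy logits are illustrative assumptions and are not part of the TensorFlow code in this post.

```python
import numpy as np

def relu(x):
    """Element-wise max(x, 0)."""
    return np.maximum(x, 0)

def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(probs, labels):
    """Mean negative log-probability of the true class (labels are integer ids)."""
    rows = np.arange(len(labels))
    return -np.log(probs[rows, labels]).mean()

# Toy batch: two samples, three classes (made-up numbers).
logits = np.array([[2.0, 1.0, 0.1],
                   [0.0, 0.0, 0.0]])
labels = np.array([0, 2])

relu_out = relu(np.array([-1.5, 0.0, 2.0]))
probs = softmax(logits)
loss = cross_entropy(probs, labels)

print(relu_out)
print(probs.sum(axis=1))  # each row of probabilities sums to 1
print(loss)
```

The second row of logits is all zeros, so softmax assigns each class a probability of 1/3 there.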

Implementing a Multilayer Perceptron in TensorFlow

from __future__ import print_function

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

Import the dataset

# Import MNIST data

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("./data/", one_hot=False)

Extracting ./data/train-images-idx3-ubyte.gz

Extracting ./data/train-labels-idx1-ubyte.gz

Extracting ./data/t10k-images-idx3-ubyte.gz

Extracting ./data/t10k-labels-idx1-ubyte.gz

Set the parameters

# Parameters

learning_rate = 0.1

num_steps = 1000

batch_size = 128

display_step = 100

# Network Parameters

n_hidden_1 = 256 # 1st layer number of neurons

n_hidden_2 = 256 # 2nd layer number of neurons

num_input = 784 # MNIST data input (img shape: 28*28)

num_classes = 10 # MNIST total classes (0-9 digits)
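As a quick sanity check on these settings, the arithmetic below counts the trainable parameters of the 784-256-256-10 network and estimates how many passes over the training data 1000 steps amount to. The training-set size of 55,000 is an assumption about the split that read_data_sets returns; the helper name is hypothetical.

```python
# Values copied from the parameter block above.
num_input, n_hidden_1, n_hidden_2, num_classes = 784, 256, 256, 10
num_steps, batch_size = 1000, 128

def dense_params(fan_in, fan_out):
    """Weights plus one bias per output unit."""
    return fan_in * fan_out + fan_out

layer_sizes = [num_input, n_hidden_1, n_hidden_2, num_classes]
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
total_params = sum(per_layer)

# Assumption: the training split holds 55,000 images.
train_size = 55000
examples_seen = num_steps * batch_size
epochs = examples_seen / train_size

print(per_layer, total_params)          # [200960, 65792, 2570] 269322
print(examples_seen, round(epochs, 2))  # 128000 2.33
```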

Define the multilayer perceptron model

# Define the neural network

def neural_net(x_dict):
    # TF Estimator input is a dict, in case of multiple inputs
    x = x_dict['images']
    # Hidden fully connected layer with 256 neurons
    layer_1 = tf.layers.dense(x, n_hidden_1)
    # Hidden fully connected layer with 256 neurons
    layer_2 = tf.layers.dense(layer_1, n_hidden_2)
    # Output fully connected layer with a neuron for each class
    out_layer = tf.layers.dense(layer_2, num_classes)
    return out_layer

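Note: dense [^2]

dense(inputs, units, activation=None, use_bias=True, kernel_initializer=None, bias_initializer=tf.zeros_initializer(), kernel_regularizer=None, bias_regularizer=None, activity_regularizer=None, kernel_constraint=None, bias_constraint=None, trainable=True, name=None, reuse=None)

The parameters are as follows:

- inputs: required; the input data to operate on.

- units: required; the number of neurons.

- activation: optional, defaults to None; if None, the layer uses a linear activation.

- use_bias: optional, defaults to True; whether to use a bias.

- kernel_initializer: optional, defaults to None; the initializer for the weights. If None, the default Xavier initializer is used.

- bias_initializer: optional, defaults to zero initialization; the initializer for the bias.

- kernel_regularizer: optional, defaults to None; the regularizer applied to the weights.

- bias_regularizer: optional, defaults to None; the regularizer applied to the bias.

- activity_regularizer: optional, defaults to None; the regularizer applied to the output.

- kernel_constraint: optional, defaults to None; the constraint applied to the weights.

- bias_constraint: optional, defaults to None; the constraint applied to the bias.

- trainable: optional, defaults to True; a boolean. If True, the variables are added to GraphKeys.TRAINABLE_VARIABLES.

- name: optional, defaults to None; the name of the layer.

- reuse: optional, defaults to None; a boolean. If True, the weights of an existing layer with the same name are reused.

- Return value: the Tensor produced by the fully connected layer.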
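The following NumPy sketch shows roughly what a dense layer computes for the parameters listed above: a matrix product, an optional bias, and an optional activation. It is an illustration under stated assumptions and not TensorFlow's implementation. The names glorot_uniform and dense are hypothetical stand-ins, and the initializer uses the Xavier/Glorot uniform bound sqrt(6 / (fan_in + fan_out)).

```python
import numpy as np

def glorot_uniform(fan_in, fan_out, rng):
    """Xavier/Glorot uniform: U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out))."""
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

def dense(inputs, kernel, bias=None, activation=None):
    """Hypothetical NumPy stand-in: activation(inputs @ kernel + bias)."""
    outputs = inputs @ kernel
    if bias is not None:          # corresponds to use_bias=True
        outputs = outputs + bias
    if activation is not None:    # activation=None leaves the output linear
        outputs = activation(outputs)
    return outputs

rng = np.random.default_rng(0)
x = rng.random((4, 784))                 # a batch of 4 flattened images (random stand-ins)
kernel = glorot_uniform(784, 256, rng)
bias = np.zeros(256)                     # zeros, mirroring the default bias_initializer

linear_out = dense(x, kernel, bias)
relu_out = dense(x, kernel, bias, activation=lambda z: np.maximum(z, 0))

print(linear_out.shape, relu_out.shape)
```

Passing an activation only changes the last step, which is why leaving it as None gives a purely linear layer.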

In the neural_net code above, the third argument (activation) is left empty, so all three layers use a linear activation.
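One consequence of purely linear layers is worth spelling out: stacking them without a nonlinearity is mathematically equivalent to a single linear layer. The NumPy sketch below checks this with random weights (illustrative values, not the trained model) and shows that inserting ReLU between layers breaks the equivalence.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 784))

# Small random weights and biases, for illustration only.
W1, b1 = rng.standard_normal((784, 256)) * 0.05, rng.standard_normal(256)
W2, b2 = rng.standard_normal((256, 256)) * 0.05, rng.standard_normal(256)
W3, b3 = rng.standard_normal((256, 10)) * 0.05, rng.standard_normal(10)

def relu(z):
    return np.maximum(z, 0)

# Three stacked linear layers, as in neural_net with activation=None.
stacked = ((x @ W1 + b1) @ W2 + b2) @ W3 + b3

# The same map written as one linear layer.
W = W1 @ W2 @ W3
b = (b1 @ W2 + b2) @ W3 + b3
collapsed = x @ W + b

# With ReLU after each hidden layer the network is no longer a single linear map.
with_relu = relu(relu(x @ W1 + b1) @ W2 + b2) @ W3 + b3

same_as_single_layer = np.allclose(stacked, collapsed)
relu_changes_output = not np.allclose(stacked, with_relu)
print(same_as_single_layer, relu_changes_output)
```

In tf.layers.dense, a nonlinearity would be added by passing an activation such as tf.nn.relu as the third argument.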

Define the model function

# Define the model function (following TF Estimator Template)

def model_fn(features, labels, mode):
    # Build the neural network
    logits = neural_net(features)

    # Predictions
    pred_classes = tf.argmax(logits, axis=1)
    pred_probas = tf.nn.softmax(logits)

    # If prediction mode, early return
    if mode == tf.estimator.ModeKeys.PREDICT:
        return tf.estimator.EstimatorSpec(mode, predictions=pred_classes)

    # Define loss and optimizer
    loss_op = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=logits, labels=tf.cast(labels, dtype=tf.int32)))
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=learning_rate)
    train_op = optimizer.minimize(loss_op, global_step=tf.train.get_global_step())

    # Evaluate the accuracy of the model
    acc_op = tf.metrics.accuracy(labels=labels, predictions=pred_classes)

    # TF Estimators require the model function to return an EstimatorSpec
    # that specifies the different ops for training, evaluating, ...
    estim_specs = tf.estimator.EstimatorSpec(
        mode=mode,
        predictions=pred_classes,
        loss=loss_op,
        train_op=train_op,
        eval_metric_ops={'accuracy': acc_op})

    return estim_specs
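The loss in model_fn takes integer class labels (the data was loaded with one_hot=False) instead of one-hot vectors. The NumPy sketch below, using made-up logits, illustrates that picking the true-class log-probability directly gives the same value as the one-hot form, and how argmax and accuracy are computed. It mirrors the idea only, not TensorFlow's internals.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable row-wise log-softmax."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

# Toy batch: three samples, three classes (made-up numbers).
logits = np.array([[1.0, 3.0, 0.5],
                   [2.0, 0.1, 0.3],
                   [0.2, 0.4, 2.5]])
labels = np.array([1, 2, 2])            # integer class ids

log_probs = log_softmax(logits)

# Sparse form: index the log-probability of the true class.
sparse_loss = -log_probs[np.arange(len(labels)), labels].mean()

# One-hot form: same value, but needs a (batch, classes) label matrix.
one_hot = np.eye(logits.shape[1])[labels]
dense_loss = -(one_hot * log_probs).sum(axis=1).mean()

# Predicted class is the index of the largest logit; accuracy is the match rate.
pred_classes = logits.argmax(axis=1)
accuracy = (pred_classes == labels).mean()

print(sparse_loss, dense_loss)
print(pred_classes, accuracy)
```

Here the second sample is misclassified (predicted 0, true class 2), so the accuracy is 2/3.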

Build the Estimator

# Build the Estimator

model = tf.estimator.Estimator(model_fn)

INFO:tensorflow:Using default config.

WARNING:tensorflow:Using temporary folder as model directory: C:\Users\xywang\AppData\Local\Temp\tmp995gddib

INFO:tensorflow:Using config: {'_model_dir': 'C:\\Users\\xywang\\AppData\\Local\\Temp\\tmp995gddib', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': None, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_service': None, '_cluster_spec': , '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

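Note: tf.estimator

tf.estimator is a predefined model wrapper that comes with train, evaluate, and predict methods.

Its programming paradigm is:

- Define the model, for example a multilayer perceptron or a CNN;

- Define the model function (model_fn), which builds the graph, defines the loss function and optimizer, computes the accuracy, and so on; what it returns depends on the mode (training versus testing);

- Build the Estimator;

model = tf.estimator.Estimator(model_fn)

- Build an input_fn with tf.estimator.inputs.numpy_input_fn and pass it to the model; you can then call model.train, model.evaluate, and model.predict.

input_fn = tf.estimator.inputs.numpy_input_fn(...)
model.train(input_fn)
model.evaluate(input_fn)
model.predict(input_fn)

Estimator is a higher-level wrapper. For some basic algorithms, the model and the model function are already predefined, so you only need to pass in parameters.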
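The pattern can be sketched in plain Python: one model_fn that branches on the mode, and a thin wrapper that calls it from train, evaluate, and predict. Everything here (ToyEstimator, the mode constants, the one-weight model, the params dict used as mutable state) is a hypothetical stand-in for illustration and is not TensorFlow's implementation.

```python
TRAIN, EVAL, PREDICT = "train", "eval", "predict"

def model_fn(features, labels, mode, params):
    """Hypothetical model: predict w * x and return only what the mode needs."""
    predictions = [params["w"] * x for x in features]
    if mode == PREDICT:
        return {"predictions": predictions}
    loss = sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)
    if mode == TRAIN:
        # One gradient-descent step on the mean squared error.
        grad = sum(2 * (p - y) * x
                   for p, y, x in zip(predictions, labels, features)) / len(labels)
        params["w"] -= 0.1 * grad
    return {"loss": loss}

class ToyEstimator:
    """Illustrative wrapper that owns the parameters and dispatches by mode."""

    def __init__(self, model_fn):
        self.model_fn = model_fn
        self.params = {"w": 0.0}

    def train(self, input_fn, steps):
        for _ in range(steps):
            features, labels = input_fn()
            self.model_fn(features, labels, TRAIN, self.params)
        return self

    def evaluate(self, input_fn):
        features, labels = input_fn()
        return self.model_fn(features, labels, EVAL, self.params)

    def predict(self, input_fn):
        features, _ = input_fn()
        return self.model_fn(features, None, PREDICT, self.params)["predictions"]

def input_fn():
    # Toy data following y = 2 * x.
    return [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]

model = ToyEstimator(model_fn).train(input_fn, steps=50)
eval_result = model.evaluate(input_fn)
predictions = model.predict(input_fn)
print(eval_result, predictions)
```

The point of the design is that the training loop, evaluation, and prediction plumbing live in the wrapper, while the user supplies only the model function and the input function.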

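input_fn [^1]

In general, an input_fn does two things:

1. Preprocess the data, for example cleaning dirty records and normalizing values. If no preprocessing is needed, this step is empty.

2. Return feature_cols and labels.

- feature_cols: a dict whose keys are feature names and whose values are the feature values.

- labels: the corresponding class labels.

It can convert several kinds of objects into TensorFlow objects; the most common case is converting NumPy arrays. For example:

import tensorflow as tf
import numpy as np

# numpy input_fn: x is a dict mapping each feature name to an array of values
x_data = {"feature1": np.array([2, 1, 4]), "features2": np.array([6, 5, 8])}
y_data = np.array([2, 3, 4])
my_input_fn = tf.estimator.inputs.numpy_input_fn(
    x=x_data, y=y_data, shuffle=True)
# The result is a function object named my_input_fn.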
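To show what such an input function does conceptually, here is a simplified NumPy stand-in that returns a function yielding (features, labels) batches, with optional shuffling and an epoch limit. The name toy_numpy_input_fn and its seed argument are hypothetical, and the real numpy_input_fn differs in its details. The example feeds the same three rows as one array per feature.

```python
import numpy as np

def toy_numpy_input_fn(x, y=None, batch_size=128, num_epochs=1, shuffle=True, seed=0):
    """Hypothetical stand-in: returns a function that yields (features, labels) batches."""
    n = len(next(iter(x.values())))

    def input_fn():
        rng = np.random.default_rng(seed)
        epoch = 0
        # num_epochs=None means "repeat forever", as used for training.
        while num_epochs is None or epoch < num_epochs:
            order = rng.permutation(n) if shuffle else np.arange(n)
            for start in range(0, n, batch_size):
                idx = order[start:start + batch_size]
                features = {name: values[idx] for name, values in x.items()}
                labels = None if y is None else y[idx]
                yield features, labels
            epoch += 1

    return input_fn

x = {"feature1": np.array([2, 1, 4]), "features2": np.array([6, 5, 8])}
y = np.array([2, 3, 4])

my_input_fn = toy_numpy_input_fn(x, y, batch_size=2, num_epochs=1, shuffle=False)
batches = list(my_input_fn())
for features, labels in batches:
    print(features, labels)
```

With three rows and a batch size of 2, one epoch yields a full batch followed by a final batch of one row.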

Train the model

# Define the input function for training

input_fn = tf.estimator.inputs.numpy_input_fn(

x={'images': mnist.train.images}, y=mnist.train.labels,

batch_size=batch_size, num_epochs=None, shuffle=True)

#Train the Model

model.train(input_fn, steps=num_steps)

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Saving checkpoints for 1 into C:\Users\xywang\AppData\Local\Temp\tmp995gddib\model.ckpt.

INFO:tensorflow:loss = 2.4631722, step = 1

INFO:tensorflow:global_step/sec: 248.501

INFO:tensorflow:loss = 0.42414927, step = 101 (0.388 sec)

INFO:tensorflow:global_step/sec: 301.862

INFO:tensorflow:loss = 0.48539022, step = 201 (0.331 sec)

INFO:tensorflow:global_step/sec: 310.3

INFO:tensorflow:loss = 0.2779913, step = 301 (0.323 sec)

INFO:tensorflow:global_step/sec: 303.697

INFO:tensorflow:loss = 0.20052063, step = 401 (0.329 sec)

INFO:tensorflow:global_step/sec: 322.311

INFO:tensorflow:loss = 0.5092098, step = 501 (0.309 sec)

INFO:tensorflow:global_step/sec: 337.555

INFO:tensorflow:loss = 0.28386787, step = 601 (0.297 sec)

INFO:tensorflow:global_step/sec: 322.309

INFO:tensorflow:loss = 0.36957514, step = 701 (0.309 sec)

INFO:tensorflow:global_step/sec: 334.17

INFO:tensorflow:loss = 0.28504127, step = 801 (0.300 sec)

INFO:tensorflow:global_step/sec: 319.222

INFO:tensorflow:loss = 0.37339848, step = 901 (0.312 sec)

INFO:tensorflow:Saving checkpoints for 1000 into C:\Users\xywang\AppData\Local\Temp\tmp995gddib\model.ckpt.

INFO:tensorflow:Loss for final step: 0.2043538.

tensorflow.python.estimator.estimator.Estimator at 0x24bbee72160

Evaluate the model

# Evaluate the Model

# Define the input function for evaluating

input_fn = tf.estimator.inputs.numpy_input_fn(

x={'images': mnist.test.images}, y=mnist.test.labels,

batch_size=batch_size, shuffle=False)

# Use the Estimator 'evaluate' method

model.evaluate(input_fn)

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2018-04-11-08:04:38

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from C:\Users\xywang\AppData\Local\Temp\tmp995gddib\model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Finished evaluation at 2018-04-11-08:04:38

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.9149, global_step = 1000, loss = 0.29386005

{'accuracy': 0.9149, 'global_step': 1000, 'loss': 0.29386005}
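A small back-of-the-envelope calculation helps read this result. Assuming the standard MNIST test split of 10,000 images (an assumption, since the size is not printed above), the evaluation input function yields 79 batches in its single pass, and an accuracy of 0.9149 corresponds to 9149 correct predictions.

```python
import math

test_size = 10000      # assumption: size of the standard MNIST test split
batch_size = 128
accuracy = 0.9149      # value reported by model.evaluate above

num_batches = math.ceil(test_size / batch_size)
last_batch = test_size - (num_batches - 1) * batch_size
correct = round(accuracy * test_size)
wrong = test_size - correct

print(num_batches, last_batch, correct, wrong)  # 79 16 9149 851
```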

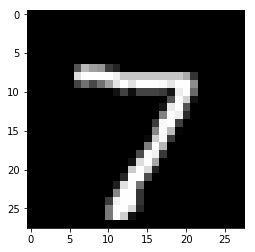

Test

# Predict single images

n_images = 1

# Get images from test set

test_images = mnist.test.images[:n_images]

# Prepare the input data

input_fn = tf.estimator.inputs.numpy_input_fn(

x={'images': test_images}, shuffle=False)

# Use the model to predict the images class

preds = list(model.predict(input_fn))

# Display

for i in range(n_images):
    plt.imshow(np.reshape(test_images[i], [28, 28]), cmap='gray')
    plt.show()
    print("Model prediction:", preds[i])

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from C:\Users\xywang\AppData\Local\Temp\tmp995gddib\model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

Model prediction: 7
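The display step relies on reshaping each 784-value row back into a 28*28 grid. The sketch below uses a synthetic vector as a stand-in for a test image to show how flat indices map to pixel positions in row-major order.

```python
import numpy as np

# Synthetic stand-in for one flattened test image (values in [0, 1]).
flat = np.arange(784, dtype=np.float32) / 783.0
image = flat.reshape(28, 28)

# In row-major order, pixel (row, col) sits at flat index row * 28 + col.
row, col = 3, 5
same_pixel = image[row, col] == flat[row * 28 + col]

# Flattening the grid again recovers the original vector.
round_trip = np.array_equal(image.reshape(-1), flat)

print(image.shape, same_pixel, round_trip)
```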

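References

[1] 在tf.estimator中构建input_fn解读 (Understanding how to build an input_fn in tf.estimator)

[2] TensorFlow layers模块用法 (Usage of the TensorFlow layers module)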