Ops in Practice: Kubernetes (k8s) Services


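1. Introduction to Services

- A Service can be seen as the access point for a group of Pods that provide the same service. With Services, applications get service discovery and load balancing with little effort.

- By default a Service only provides layer-4 load balancing; it has no layer-7 capability (that can be added with Ingress).

- Service types:

ClusterIP: the default. Kubernetes automatically assigns the Service a virtual IP that is reachable only from inside the cluster.

NodePort: exposes the Service on a port of the nodes, so that a request to any NodeIP:nodePort is routed to the ClusterIP.

LoadBalancer: builds on NodePort and uses the cloud provider to create an external load balancer that forwards requests to <NodeIP>:NodePort. This type only works out of the box on a cloud platform.

ExternalName: maps the Service to a given domain name through a DNS CNAME record (set with spec.externalName).

- Services are implemented by the kube-proxy component together with iptables.

- When kube-proxy handles Services through iptables, it has to install a large number of iptables rules on the host. On a host with many Pods, constantly refreshing those rules consumes a lot of CPU.

- Services in IPVS mode let a Kubernetes cluster scale to a much larger number of Pods.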

Start the registry first, then set the KUBECONFIG variable and check the cluster state with kubectl:

[root@server1 ~]# cd harbor/

[root@server1 harbor]# docker-compose start

[root@server2 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

[root@server2 ~]# kubectl get pod -n kube-system

2. Enabling IPVS mode for kube-proxy

Make sure a package repository is available; the local repository is used here.

[root@server2 k8s]# cd /etc/yum.repos.d/

[root@server2 yum.repos.d]# ls

docker.repo dvd.repo k8s.repo redhat.repo

[root@server2 yum.repos.d]# vim k8s.repo

[root@server2 yum.repos.d]# cat k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=0 ## set to 0 to disable this repo; packages are installed from the local repo instead

gpgcheck=0

Install ipvsadm on every node with yum install -y ipvsadm.

After installation:

[root@server2 ~]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@server2 ~]# ipvsadm -ln

## List the IPVS rules; IPVS is a kernel feature

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@server2 ~]# lsmod | grep ip_vs

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133095 10 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_nat_masquerade_ipv6,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

[root@server2 ~]# kubectl -n kube-system get cm

## List the ConfigMaps

NAME DATA AGE

coredns 1 25h

extension-apiserver-authentication 6 25h

kube-flannel-cfg 2 24h

kube-proxy 2 25h

kube-root-ca.crt 1 25h

kubeadm-config 2 25h

kubelet-config-1.21 1 25h

[root@server2 ~]# kubectl -n kube-system edit cm kube-proxy

## Edit the configuration and set the mode to ipvs; when mode is left empty, iptables is used by default

configmap/kube-proxy edited

    ipvs:
      excludeCIDRs: null
      minSyncPeriod: 0s
      scheduler: ""
      strictARP: false
      syncPeriod: 0s
      tcpFinTimeout: 0s
      tcpTimeout: 0s
      udpTimeout: 0s
    kind: KubeProxyConfiguration
    metricsBindAddress: ""
    mode: "ipvs"

configmap/kube-proxy edited
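The interactive edit can also be scripted. The following is a minimal sketch, not the author's method: `set_ipvs_mode` is a hypothetical helper name, and the commented pipeline assumes a kubeadm-style cluster where the kube-proxy ConfigMap and DaemonSet live in kube-system.

```shell
#!/usr/bin/env bash
# Rewrite the `mode:` field of a KubeProxyConfiguration read from stdin.
# Indentation is preserved; whatever value was there is replaced by "ipvs".
# Fields such as detectLocalMode are untouched because the match is anchored
# to a line that starts (after indentation) with `mode:`.
set_ipvs_mode() {
  sed -e 's/^\([[:space:]]*\)mode:.*$/\1mode: "ipvs"/'
}

# Intended use against a live cluster (assumption; not executed here):
#   kubectl -n kube-system get cm kube-proxy -o yaml \
#     | set_ipvs_mode \
#     | kubectl apply -f -
#   kubectl -n kube-system rollout restart daemonset kube-proxy
```

The filter is kept separate from the kubectl calls so it can be checked on sample text before it touches a cluster.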

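After changing the configuration it has to be reloaded. The kube-proxy pods are managed by a controller (a DaemonSet), so deleting the existing pods is enough: the replacement pods read the updated configuration when they start.

kube-proxy uses the Linux IPVS module to schedule traffic to a Service's Pods with the rr (round-robin) scheduler.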

[root@server2 ~]# kubectl -n kube-system get daemonsets.apps

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-flannel-ds 3 3 3 3 3 <none> 24h

kube-proxy 3 3 3 3 3 kubernetes.io/os=linux 25h

[root@server2 ~]# kubectl -n kube-system get pod | grep kube-proxy | awk '{system("kubectl -n kube-system delete pod "$1"")}'

pod "kube-proxy-866lg" deleted

pod "kube-proxy-hxgbt" deleted

pod "kube-proxy-jrc9z" deleted

[root@server2 ~]# ipvsadm -ln

## After the restart, the IPVS rules are visible on every node. 10.96.0.10 is the CLUSTER-IP of kube-dns; 10.244.0.8 and 10.244.0.9 are the addresses of the DNS pods.

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.25.25.2:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.8:9153 Masq 1 0 0

-> 10.244.0.9:9153 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

[root@server2 ~]# kubectl -n kube-system get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 8d

[root@server2 ~]# kubectl -n kube-system get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-85ffb569d4-85kp7 1/1 Running 3 8d 10.244.0.9 server2 <none> <none>

coredns-85ffb569d4-bd579 1/1 Running 3 8d 10.244.0.8 server2 <none> <none>

etcd-server2 1/1 Running 3 8d 172.25.25.2 server2 <none> <none>

kube-apiserver-server2 1/1 Running 3 8d 172.25.25.2 server2 <none> <none>

kube-controller-manager-server2 1/1 Running 3 8d 172.25.25.2 server2 <none> <none>

kube-flannel-ds-f8qhr 1/1 Running 2 8d 172.25.25.4 server4 <none> <none>

kube-flannel-ds-hvfwp 1/1 Running 2 8d 172.25.25.3 server3 <none> <none>

kube-flannel-ds-mppbp 1/1 Running 3 8d 172.25.25.2 server2 <none> <none>

kube-proxy-6f78h 1/1 Running 0 4m10s 172.25.25.2 server2 <none> <none>

kube-proxy-7jvkr 1/1 Running 0 4m12s 172.25.25.4 server4 <none> <none>

kube-proxy-9d5s7 1/1 Running 0 4m5s 172.25.25.3 server3 <none> <none>

kube-scheduler-server2 1/1 Running 3 8d 172.25.25.2 server2 <none> <none>

In IPVS mode, after a Service is created kube-proxy adds a virtual interface, kube-ipvs0, on the host and assigns the Service IP to it.

[root@server2 ~]# ip addr show kube-ipvs0

10: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether 52:54:5e:c0:51:56 brd ff:ff:ff:ff:ff:ff

inet 10.96.0.10/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.96.0.1/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

Create a new Deployment to observe the effect:

[root@server2 k8s]# kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8d

[root@server2 k8s]# ls

cronjob.yaml daemonset.yaml deployment.yaml job.yaml pod.yaml rs.yaml svc.yaml

[root@server2 k8s]# vim deployment.yaml

[root@server2 k8s]# kubectl apply -f deployment.yaml

deployment.apps/deployment-example created

[root@server2 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deployment-example-5b768f7647-9wlvc 1/1 Running 0 4s

deployment-example-5b768f7647-j6bvs 1/1 Running 0 4s

deployment-example-5b768f7647-ntmk7 1/1 Running 0 4s

[root@server2 k8s]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

deployment-example-5b768f7647-9wlvc 1/1 Running 0 52s app=nginx,pod-template-hash=5b768f7647

deployment-example-5b768f7647-j6bvs 1/1 Running 0 52s app=nginx,pod-template-hash=5b768f7647

deployment-example-5b768f7647-ntmk7 1/1 Running 0 52s app=nginx,pod-template-hash=5b768f7647

[root@server2 k8s]# ipvsadm -ln

## The pods exist, but they do not appear in IPVS yet because there is no Service.

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.25.25.2:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.8:9153 Masq 1 0 0

-> 10.244.0.9:9153 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

Expose the Deployment with a command, then dump the resulting Service as YAML:

[root@server2 k8s]# kubectl expose deployment deployment-example --port=80 --target-port=80

service/deployment-example exposed

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deployment-example ClusterIP 10.105.194.76 <none> 80/TCP 8s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8d

[root@server2 k8s]# kubectl describe svc deployment-example

Name: deployment-example

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.105.194.76

IPs: 10.105.194.76

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.26:80,10.244.2.33:80,10.244.2.34:80

Session Affinity: None

Events: <none>

[root@server2 k8s]# kubectl get svc deployment-example -o yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2021-06-12T13:30:52Z"
  name: deployment-example
  namespace: default
  resourceVersion: "60216"
  uid: 7729b22e-4e26-4e6e-afa1-7c4e0a37e019
spec:
  clusterIP: 10.105.194.76
  clusterIPs:
  - 10.105.194.76
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

[root@server2 k8s]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.25.25.2:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.8:9153 Masq 1 0 0

-> 10.244.0.9:9153 Masq 1 0 0

TCP 10.105.194.76:80 rr

## The new Service now has three pods behind it

-> 10.244.1.26:80 Masq 1 0 0

-> 10.244.2.33:80 Masq 1 0 0

-> 10.244.2.34:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

Requests are now load-balanced across the three backend pods:

[root@server2 k8s]# curl 10.105.194.76

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@server2 k8s]# curl 10.105.194.76/hostname.html

deployment-example-5b768f7647-j6bvs

[root@server2 k8s]# curl 10.105.194.76/hostname.html

deployment-example-5b768f7647-9wlvc

[root@server2 k8s]# curl 10.105.194.76/hostname.html

deployment-example-5b768f7647-ntmk7
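The manual curl calls above can be tallied to see how requests are spread. This is a small sketch: `tally` is a hypothetical helper, and the commented loop assumes the ClusterIP 10.105.194.76 from the transcript is still valid and that each response is a single line.

```shell
#!/usr/bin/env bash
# Count how many times each line (here: a pod name) appears on stdin
# and print "name count" pairs, sorted by name.
tally() {
  sort | uniq -c | awk '{print $2, $1}'
}

# Example against the Service (assumption; not executed here):
#   for i in $(seq 1 9); do
#     curl -s 10.105.194.76/hostname.html
#   done | tally
```

With the rr scheduler shown by ipvsadm, the counts should come out roughly even across the three pods.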

[root@server2 k8s]# ipvsadm -ln

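After testing, this command shows how many connections were scheduled to each pod.

Deleting the Service with kubectl delete svc deployment-example also removes its entries from IPVS.

Besides generating the Service with a command, the YAML file can be written by hand: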

[root@server2 k8s]# vim svc.yaml

[root@server2 k8s]# cat svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 k8s]# kubectl apply -f svc.yaml

service/myservice created

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8d

myservice ClusterIP 10.104.41.30 <none> 80/TCP 5s

[root@server2 k8s]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.25.25.2:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.8:9153 Masq 1 0 0

-> 10.244.0.9:9153 Masq 1 0 0

TCP 10.104.41.30:80 rr

-> 10.244.1.26:80 Masq 1 0 0

-> 10.244.2.33:80 Masq 1 0 0

-> 10.244.2.34:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.0.8:53 Masq 1 0 0

-> 10.244.0.9:53 Masq 1 0 0

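3. Creating a Service (NodePort)

NodePort is the first way to reach a Service from outside the cluster: it binds a port on the nodes for external clients to use.

Everything so far used ClusterIP; now change the type: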

[root@server2 k8s]# vim svc.yaml

[root@server2 k8s]# cat svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 k8s]# kubectl apply -f svc.yaml

service/myservice created

[root@server2 k8s]# kubectl get svc

## The port is now exposed; external clients must specify it when connecting

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8d

myservice NodePort 10.100.227.116 <none> 80:32204/TCP 6s
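The PORT(S) column packs the Service port and the node port into one string such as 80:32204/TCP. The sketch below pulls the node port out and checks it against the default NodePort range of 30000-32767; both function names are hypothetical, and the range check assumes the API server's default --service-node-port-range has not been changed.

```shell
#!/usr/bin/env bash
# Extract the node port from a PORT(S) value like "80:32204/TCP".
# Prints nothing when the value has no node port (for example "80/TCP").
nodeport_of() {
  printf '%s\n' "$1" | sed -n 's|^[0-9]*:\([0-9]*\)/.*$|\1|p'
}

# Succeeds when the port lies in the default NodePort range (30000-32767).
in_default_nodeport_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Example (assumption; 172.25.25.3 is one of the node IPs in this post):
#   port=$(nodeport_of "80:32204/TCP")
#   curl "http://172.25.25.3:${port}/hostname.html"
```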

When writing a YAML file, kubectl explain gives help on any field, for example kubectl explain service.spec; deeper field paths work the same way.

4. The DNS add-on Service

Kubernetes provides a DNS add-on Service.

Inside the cluster, a Service can be reached through its DNS record, so clients do not need to know its VIP.

[root@server2 k8s]# yum install -y bind-utils.x86_64

## Install bind-utils, which provides the dig command

[root@server2 k8s]# cat /etc/resolv.conf

nameserver 114.114.114.114

[root@server2 k8s]# cat svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  type: ClusterIP
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 k8s]# kubectl apply -f svc.yaml

service/myservice created

[root@server2 k8s]# dig -t A myservice.default.svc.cluster.local @10.96.0.10

; <<>> DiG 9.9.4-RedHat-9.9.4-72.el7 <<>> -t A myservice.default.svc.cluster.local @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 4147

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;myservice.default.svc.cluster.local. IN A

;; ANSWER SECTION:

myservice.default.svc.cluster.local. 30 IN A 10.108.31.117

## The name resolves to the ClusterIP of myservice

;; Query time: 0 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Sun Jun 13 21:13:34 CST 2021

;; MSG SIZE rcvd: 115

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 9d

myservice ClusterIP 10.108.31.117 <none> 80/TCP 10h

[root@server2 k8s]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.25.25.2:6443 Masq 1 3 0

TCP 10.96.0.10:53 rr

-> 10.244.179.65:53 Masq 1 0 0

-> 10.244.179.66:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.179.65:9153 Masq 1 0 0

-> 10.244.179.66:9153 Masq 1 0 0

TCP 10.108.31.117:80 rr

-> 10.244.1.31:80 Masq 1 0 0

-> 10.244.2.40:80 Masq 1 0 0

-> 10.244.2.41:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.179.65:53 Masq 1 0 1

-> 10.244.179.66:53 Masq 1 0 1

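In the steps above, IP addresses change as pods are recreated, but the domain name stays the same. Clients can address the Service by name, and requests are still load-balanced.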

[root@server2 k8s]# kubectl run demo --image=busyboxplus -it --restart=Never

If you don't see a command prompt, try pressing enter.

/ # cat /etc/resolv.conf

nameserver 10.96.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

/ # curl myservice

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

/ # curl myservice/hostname.html

deployment-example-5b768f7647-rkpgr

/ #

/ # curl myservice/hostname.html

deployment-example-5b768f7647-czchr

/ # curl myservice/hostname.html

deployment-example-5b768f7647-brg9n

/ # curl myservice/hostname.html

deployment-example-5b768f7647-rkpgr
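The short name myservice works inside the pod because of the search list and ndots:5 in its /etc/resolv.conf. The sketch below is a simplified model of how a typical stub resolver expands a name, not the actual resolver code; `expand_candidates` is a hypothetical helper, and real resolvers differ in details.

```shell
#!/usr/bin/env bash
# Print the names a resolver would try, in order, for a query name.
# Usage: expand_candidates NAME NDOTS SEARCH_DOMAIN...
# Simplified rule: a name with fewer dots than NDOTS is tried with each
# search domain first and as-is last; otherwise it is tried as-is first.
expand_candidates() {
  local name=$1 ndots=$2 d
  shift 2
  local stripped=${name//./}
  local dots=$(( ${#name} - ${#stripped} ))
  if [ "$dots" -lt "$ndots" ]; then
    for d in "$@"; do printf '%s.%s\n' "$name" "$d"; done
    printf '%s\n' "$name"
  else
    printf '%s\n' "$name"
    for d in "$@"; do printf '%s.%s\n' "$name" "$d"; done
  fi
}

# Example with the values from the pod's resolv.conf:
#   expand_candidates myservice 5 \
#     default.svc.cluster.local svc.cluster.local cluster.local
```

The first candidate for myservice is myservice.default.svc.cluster.local, which is the record CoreDNS serves for the Service.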

- Headless Service

A headless Service is not assigned a VIP; instead, its DNS record resolves directly to the IP addresses of the Pods it fronts.

Domain name format: $(servicename).$(namespace).svc.cluster.local

[root@server2 k8s]# kubectl delete -f svc.yaml

service "myservice" deleted

[root@server2 k8s]# vim svc.yaml

[root@server2 k8s]# cat svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  type: ClusterIP
  clusterIP: None
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 k8s]# kubectl apply -f svc.yaml

service/myservice created

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 28h

myservice ClusterIP None <none> 80/TCP 20s

No VIP has been allocated, but the pods can still be reached through the pod addresses in the DNS records:

[root@server2 k8s]# dig -t A myservice.default.svc.cluster.local @10.96.0.10

; <<>> DiG 9.9.4-RedHat-9.9.4-72.el7 <<>> -t A myservice.default.svc.cluster.local @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 11968

;; flags: qr aa rd; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;myservice.default.svc.cluster.local. IN A

;; ANSWER SECTION:

myservice.default.svc.cluster.local. 30 IN A 10.244.2.41

myservice.default.svc.cluster.local. 30 IN A 10.244.1.31

myservice.default.svc.cluster.local. 30 IN A 10.244.2.40

;; Query time: 0 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Sun Jun 13 21:24:28 CST 2021

;; MSG SIZE rcvd: 217
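For a headless Service the answer section carries one A record per pod. The sketch below filters just the addresses out of dig's output; `a_records` is a hypothetical helper, and it assumes the five-column answer layout shown above (name, TTL, class, type, address).

```shell
#!/usr/bin/env bash
# Print the address of every A record found in dig output on stdin.
# Question-section lines (";name. IN A") have no TTL column, so their
# third field is "A" rather than "IN" and they are skipped.
a_records() {
  awk '$3 == "IN" && $4 == "A" { print $5 }'
}

# Example (assumption; not executed here):
#   dig -t A myservice.default.svc.cluster.local @10.96.0.10 | a_records
```

dig's own +short option gives similar output; the filter is mainly useful when the full answer has already been captured.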

[root@server2 k8s]# kubectl describe svc myservice

Name: myservice

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: None

IPs: None

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.90:80,10.244.2.77:80,10.244.2.78:80

Session Affinity: None

Events: <none>

5. Pod rolling updates

With the headless Service above, the Endpoints follow the pods automatically when the pods are replaced:

[root@server2 k8s]# kubectl delete pod --all

pod "demo" deleted

pod "deployment-example-5b768f7647-brg9n" deleted

pod "deployment-example-5b768f7647-czchr" deleted

pod "deployment-example-5b768f7647-rkpgr" deleted

[root@server2 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deployment-example-5b768f7647-2psc6 1/1 Running 0 21s

deployment-example-5b768f7647-cdfdk 1/1 Running 0 21s

deployment-example-5b768f7647-q76rp 1/1 Running 0 21s

[root@server2 k8s]# kubectl describe svc myservice

Name: myservice

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: None

IPs: None

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.94:80,10.244.1.95:80,10.244.2.79:80

Session Affinity: None

Events: <none>

6. Creating a Service (LoadBalancer)

The second way to reach a Service from outside is meant for Kubernetes on a public cloud: specify a Service of type LoadBalancer.

[root@server2 k8s]# kubectl delete -f svc.yaml

service "myservice" deleted

[root@server2 k8s]# vim svc.yaml

[root@server2 k8s]# cat svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  type: LoadBalancer
  #clusterIP: None
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 k8s]# kubectl apply -f svc.yaml

service/myservice created

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 28h

myservice LoadBalancer 10.99.186.132 <pending> 80:31894/TCP 37s

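This builds on NodePort: the cloud provider is expected to allocate an external IP. Without a cloud provider, as here, EXTERNAL-IP stays in the <pending> state.

- In a cloud environment, the provider's driver allocates an IP for external access.

- This is a bare-metal environment, so what allocates the IP?

MetalLB

Official site: https://metallb.universe.tf/installation/

With kube-proxy in IPVS mode, MetalLB requires strict ARP, so set strictARP to true in the kube-proxy ConfigMap: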

[root@server2 k8s]# kubectl edit configmaps -n kube-system kube-proxy

configmap/kube-proxy edited

37 strictARP: true

44 mode: "ipvs"

[root@server2 k8s]# kubectl -n kube-system get pod | grep kube-proxy | awk '{system("kubectl -n kube-system delete pod "$1"")}'

pod "kube-proxy-6f78h" deleted

pod "kube-proxy-7jvkr" deleted

pod "kube-proxy-9d5s7" deleted

## Recreate the kube-proxy pods so the change takes effect

Deployment: download the manifests first.

[root@server2 k8s]# mkdir metallb

[root@server2 k8s]# cd metallb/

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/namespace.yaml

[root@server2 metallb]# cat namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: metallb-system
  labels:
    app: metallb

[root@server2 metallb]# kubectl apply -f namespace.yaml

namespace/metallb-system created

## Create the namespace

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.9.6/manifests/metallb.yaml

The images referenced in metallb.yaml have to be pulled in advance and pushed to the private registry:

[root@server1 ~]# docker pull metallb/speaker:v0.9.6

[root@server1 ~]# docker pull metallb/controller:v0.9.6

[root@server1 harbor]# docker tag metallb/speaker:v0.9.6 reg.westos.org/metallb/speaker:v0.9.6

[root@server1 harbor]# docker tag metallb/controller:v0.9.6 reg.westos.org/metallb/controller:v0.9.6

[root@server1 harbor]# docker push reg.westos.org/metallb/speaker:v0.9.6

[root@server1 harbor]# docker push reg.westos.org/metallb/controller:v0.9.6
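The pull, tag and push steps repeat for every image, so they can be wrapped in a loop. This is a sketch under assumptions: `private_ref` and `mirror_images` are hypothetical helper names, and the registry host reg.westos.org is taken from the transcript.

```shell
#!/usr/bin/env bash
# Private registry used in this post (adjust for another environment).
REGISTRY=reg.westos.org

# Map an upstream image reference to its name in the private registry.
private_ref() {
  printf '%s/%s\n' "$REGISTRY" "$1"
}

# Pull each image, retag it for the private registry, and push it.
# Stops at the first docker command that fails.
mirror_images() {
  local img
  for img in "$@"; do
    docker pull "$img" || return 1
    docker tag "$img" "$(private_ref "$img")" || return 1
    docker push "$(private_ref "$img")" || return 1
  done
}

# Example (assumption; not executed here):
#   mirror_images metallb/speaker:v0.9.6 metallb/controller:v0.9.6
```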

Once the images are pushed, check that the image paths in the manifest match the private registry, then deploy:

[root@server2 metallb]# kubectl apply -f metallb.yaml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

On the first deployment only, a secret has to be generated:

[root@server2 metallb]# kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

secret/memberlist created

[root@server2 metallb]# kubectl -n metallb-system get all

NAME READY STATUS RESTARTS AGE

pod/controller-64f86798cc-qgbsw 1/1 Running 0 2m59s

pod/speaker-f7vtr 1/1 Running 0 2m59s

pod/speaker-jdqv4 1/1 Running 0 2m59s

pod/speaker-t8675 1/1 Running 0 2m59s

## One controller pod is created, and a speaker agent runs on each of the three nodes

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/speaker 3 3 3 3 3 kubernetes.io/os=linux 2m59s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/controller 1/1 1 1 2m59s

NAME DESIRED CURRENT READY AGE

replicaset.apps/controller-64f86798cc 1 1 1 2m59s

[root@server2 metallb]# kubectl -n metallb-system get secrets

NAME TYPE DATA AGE

controller-token-hk6k2 kubernetes.io/service-account-token 3 3m18s

default-token-22jtp kubernetes.io/service-account-token 3 9m39s

memberlist Opaque 1 105s

speaker-token-phglk kubernetes.io/service-account-token 3 3m18s

With that in place, write a config file that defines the range of IPs MetalLB may allocate:

[root@server2 metallb]# vim config.yaml

[root@server2 metallb]# cat config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.25.25.100-172.25.25.200

[root@server2 metallb]# kubectl apply -f config.yaml

configmap/config created
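Each LoadBalancer Service consumes one address from the pool, so it helps to know how many the range holds. The sketch below computes that for a first-last range as used in config.yaml; `ip_to_int` and `pool_size` are hypothetical helpers and only handle IPv4 ranges written in that form.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to its 32-bit integer value.
ip_to_int() {
  local IFS=.
  # Word splitting on "." turns the address into four positional parameters.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Number of addresses in an inclusive "first-last" range.
pool_size() {
  local first=${1%-*} last=${1#*-}
  echo $(( $(ip_to_int "$last") - $(ip_to_int "$first") + 1 ))
}

# Example: pool_size 172.25.25.100-172.25.25.200
```

For the range configured above this gives 101 addresses, because both ends are included.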

[root@server2 metallb]# kubectl -n metallb-system get cm

NAME DATA AGE

config 1 10s

kube-root-ca.crt 1 11m

[root@server2 metallb]# kubectl -n metallb-system describe cm config

Name: config

Namespace: metallb-system

Labels: <none>

Annotations: <none>

Data

====

config:

----

address-pools:

- name: default

protocol: layer2

addresses:

- 172.25.15.100-172.25.15.200

Events: <none>

Now test whether the Service obtains an external VIP:

[root@server2 metallb]# cd ..

[root@server2 k8s]# vim svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  #type: ClusterIP
  #type: NodePort
  #clusterIP: None
  type: LoadBalancer
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 k8s]# kubectl apply -f svc.yaml

service/myservice unchanged

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 29h

myservice LoadBalancer 10.99.186.132 172.25.15.100 80:31894/TCP 29m

A VIP has now been allocated, and external clients can reach the Service directly:

[root@foundation15 ~]# curl 172.25.15.100

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@foundation15 ~]# curl 172.25.15.100/hostname.html

deployment-example-5b768f7647-cdfdk

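7. Creating a Service (ExternalName)

The third type is ExternalName, which resolves the Service name to an external domain name. It is commonly used to give in-cluster clients a stable name for a resource outside the cluster.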

[root@server2 k8s]# vim ex-svc.yaml

[root@server2 k8s]# cat ex-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: ex-svc
spec:
  type: ExternalName
  externalName: www.baidu.com

[root@server2 k8s]# kubectl apply -f ex-svc.yaml

service/ex-svc created

[root@server2 k8s]# kubectl get svc

## No IP is allocated here; the Service is reached by domain name

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ex-svc ExternalName <none> www.baidu.com <none> 10s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 29h

myservice LoadBalancer 10.99.186.132 172.25.15.100 80:31894/TCP 33m

[root@server2 k8s]# kubectl describe svc ex-svc

Name: ex-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: <none>

Type: ExternalName

IP Families: <none>

IP:

IPs: <none>

External Name: www.baidu.com

Session Affinity: None

Events: <none>

[root@server2 k8s]# dig -t A ex-svc.default.svc.cluster.local @10.96.0.10

;; ANSWER SECTION:

ex-svc.default.svc.cluster.local. 30 IN CNAME www.baidu.com.

www.baidu.com. 30 IN CNAME www.a.shifen.com.

www.a.shifen.com. 30 IN A 36.152.44.95

www.a.shifen.com. 30 IN A 36.152.44.96

Suppose the domain name of the external resource changes:

[root@server2 k8s]# vim ex-svc.yaml

[root@server2 k8s]# cat ex-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: ex-svc
spec:
  type: ExternalName
  externalName: www.westos.org

[root@server2 k8s]# kubectl apply -f ex-svc.yaml

service/ex-svc configured

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ex-svc ExternalName <none> www.westos.org <none> 2m44s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 9d

myservice LoadBalancer 10.99.186.132 172.25.25.100 80:31981/TCP 7m45s

[root@server2 k8s]# dig -t A ex-svc.default.svc.cluster.local @10.96.0.10

;; ANSWER SECTION:

ex-svc.default.svc.cluster.local. 30 IN CNAME www.westos.org.

www.westos.org. 30 IN CNAME applkdmhnt09730.pc-cname.xiaoe-tech.com.

applkdmhnt09730.pc-cname.xiaoe-tech.com. 30 IN A 118.25.119.100

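Even when the external domain name changes, the Service name stays the same. In-cluster clients can be configured with the Service address, and only the mapping has to be updated, so no larger change is needed.

The approaches above allocate an address for the Service; a Service can also be assigned a public IP.

8. Ingress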

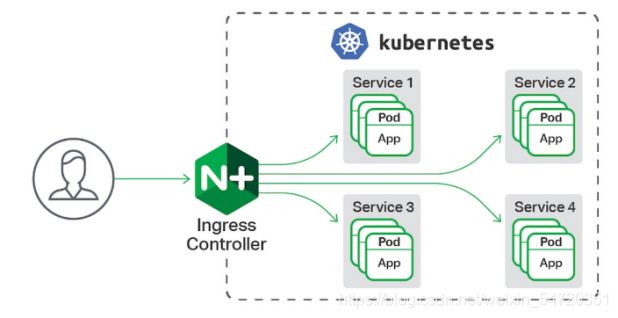

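In Kubernetes, Ingress is a cluster-wide load-balancing service set up to proxy different backend Services.

Ingress consists of two parts: the Ingress controller and the Ingress resource.

The Ingress controller provides the proxying described by the Ingress objects you define. The reverse proxies widely used in the industry, such as Nginx, HAProxy, Envoy and Traefik, all maintain a dedicated Ingress controller for Kubernetes.

8.1 Configuring Ingress

Official site: https://kubernetes.github.io/ingress-nginx/

Apply the ingress controller manifest: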

[root@server2 k8s]# mkdir ingress

[root@server2 k8s]# cd ingress/

[root@server2 ingress]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

The manifest needs two images. Here, a pre-packaged image archive is loaded and pushed to the private registry.

[root@server1 ~]# docker load -i ingress-nginx-v0.46.0.tar

[root@server1 ~]# docker push reg.westos.org/ingress-nginx/controller:v0.46.0

[root@server1 ~]# docker push reg.westos.org/ingress-nginx/kube-webhook-certgen:v1.5.1

Then update the image references in the file and deploy:

[root@server2 ingress]# vim deploy.yaml

324 image: ingress-nginx/controller:v0.46.0

589 image: ingress-nginx/kube-webhook-certgen:v1.5.1

635 image: ingress-nginx/kube-webhook-certgen:v1.5.1

[root@server2 ingress]# kubectl apply -f deploy.yaml

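Once the ingress controller is deployed, inspect it. There is a NodePort Service for access from outside the cluster and a ClusterIP Service for access from inside the cluster: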

[root@server2 ingress]# kubectl -n ingress-nginx get all

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-n49ww 0/1 Completed 0 10s

pod/ingress-nginx-admission-patch-mqmxq 0/1 Completed 1 10s

pod/ingress-nginx-controller-56c7fc94cb-dzvft 0/1 ContainerCreating 0 10s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller NodePort 10.103.186.206 <none> 80:32594/TCP,443:32643/TCP 10s

service/ingress-nginx-controller-admission ClusterIP 10.103.30.139 <none> 443/TCP 10s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 0/1 1 0 10s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-56c7fc94cb 1 1 0 10s

NAME COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create 1/1 4s 10s

job.batch/ingress-nginx-admission-patch 1/1 5s 10s

[root@server2 ingress]# kubectl -n ingress-nginx get pod

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-n49ww 0/1 Completed 0 62s

ingress-nginx-admission-patch-mqmxq 0/1 Completed 1 62s

ingress-nginx-controller-56c7fc94cb-dzvft 1/1 Running 0 62s

[root@server2 ingress]# kubectl -n ingress-nginx describe svc ingress-nginx-controller

Name: ingress-nginx-controller

Namespace: ingress-nginx

Labels: app.kubernetes.io/component=controller

app.kubernetes.io/instance=ingress-nginx

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=ingress-nginx

app.kubernetes.io/version=0.47.0

helm.sh/chart=ingress-nginx-3.33.0

Annotations: <none>

Selector: app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.103.186.206

IPs: 10.103.186.206

Port: http 80/TCP

TargetPort: http/TCP

NodePort: http 32594/TCP

Endpoints: 10.244.1.35:80

Port: https 443/TCP

TargetPort: https/TCP

NodePort: https 32643/TCP

Endpoints: 10.244.1.35:443

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

[root@server2 ingress]# kubectl -n ingress-nginx get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.103.186.206 <none> 80:32594/TCP,443:32643/TCP 107s

ingress-nginx-controller-admission ClusterIP 10.103.30.139 <none> 443/TCP 107s

[root@server2 ingress]# curl 10.103.186.206

## Inside the cluster this returns 404 for now, because no Ingress has been defined yet

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

To make it reachable, a working Service is needed:

[root@server2 k8s]# vim svc.yaml

[root@server2 k8s]# cat svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  #type: LoadBalancer
  type: ClusterIP
  #clusterIP: None
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 k8s]# kubectl apply -f svc.yaml

[root@server2 k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 9d

myservice ClusterIP 10.97.144.118 <none> 80/TCP 13s

[root@server2 k8s]# kubectl describe svc myservice

## The three pods are ready

Name: myservice

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.94:80,10.244.1.95:80,10.244.2.79:80

Session Affinity: None

Events: <none>

[root@server2 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deployment-example-5b768f7647-2psc6 1/1 Running 0 129m

deployment-example-5b768f7647-cdfdk 1/1 Running 0 129m

deployment-example-5b768f7647-q76rp 1/1 Running 0 129m

Next, expose the three backend pods to external clients. A ClusterIP Service cannot be reached from outside, so Ingress is used for that:

[root@server2 ingress]# vim ingress.yaml

[root@server2 ingress]# cat ingress.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www1
spec:
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80

[root@server2 ingress]# kubectl apply -f ingress.yaml

[root@server2 ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-www1 <none> www1.westos.org 80 13s

[root@server2 ingress]# kubectl describe ingress ingress-www1

Name: ingress-www1

Namespace: default

Address:

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

Rules:

Host Path Backends

---- ---- --------

www1.westos.org

/ myservice:80 (10.244.1.94:80,10.244.1.95:80,10.244.2.79:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 26s nginx-ingress-controller Scheduled for sync
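The manifest above uses networking.k8s.io/v1beta1, which the 1.21 cluster in this post still serves but which was removed in Kubernetes 1.22. As a sketch of the same rule in the networking.k8s.io/v1 schema, the script below writes an equivalent manifest; the file name ingress-v1.yaml is an assumption, and the choice of pathType: Prefix is mine, since v1 requires a pathType and the original does not state one.

```shell
#!/usr/bin/env bash
# Write a networking.k8s.io/v1 version of the ingress-www1 rule.
# In v1 the backend is expressed as service.name / service.port.number,
# and every path needs an explicit pathType.
cat > ingress-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-www1
spec:
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myservice
            port:
              number: 80
EOF

# Apply it on a cluster that serves the v1 API (not executed here):
#   kubectl apply -f ingress-v1.yaml
```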

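Requests for www1.westos.org are now routed to the backend pods. The name cannot be resolved yet, so add a hosts entry on the client that points it at a cluster node. Because the ingress-nginx controller is exposed through a NodePort Service, the port number must be included in the request: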

[root@westos ~]# tail -n 1 /etc/hosts

172.25.25.2 server2 www1.westos.org

[root@westos ~]# curl www1.westos.org

curl: (7) Failed to connect to www1.westos.org port 80: Connection refused

[root@westos ~]# curl www1.westos.org:32594

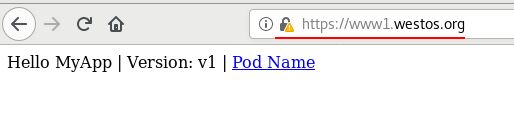

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@westos ~]# curl www1.westos.org:32594/hostname.html

deployment-example-5b768f7647-t769f

[root@westos ~]# curl www1.westos.org:32594/hostname.html

deployment-example-5b768f7647-rlvlw

[root@westos ~]# curl www1.westos.org:32594/hostname.html

deployment-example-5b768f7647-jv7kf
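The Ingress rule matches on the Host header, so the same test can be run without editing /etc/hosts. The sketch below builds the value for curl's --resolve option, which has the form host:port:address; `resolve_arg` is a hypothetical helper, and the node IP and port are the ones from this transcript.

```shell
#!/usr/bin/env bash
# Build the HOST:PORT:ADDRESS value expected by `curl --resolve`.
resolve_arg() {
  printf '%s:%s:%s\n' "$1" "$2" "$3"
}

# Examples (assumptions; not executed here):
#   curl --resolve "$(resolve_arg www1.westos.org 32594 172.25.25.2)" \
#     http://www1.westos.org:32594/hostname.html
# Setting the Host header by hand is another option:
#   curl -H 'Host: www1.westos.org' http://172.25.25.2:32594/hostname.html
```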

Besides NodePort, a LoadBalancer Service can be used to allocate an external IP:

[root@server2 ingress]# kubectl -n ingress-nginx edit svc

49 type: LoadBalancer

50 status:

51 loadBalancer: {}

service/ingress-nginx-controller edited

service/ingress-nginx-controller-admission skipped

[root@server2 ingress]# kubectl -n ingress-nginx get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.103.186.206 172.25.25.100 80:32594/TCP,443:32643/TCP 91m

ingress-nginx-controller-admission ClusterIP 10.103.30.139 <none> 443/TCP

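An external IP has been allocated. Now update the hosts entry: if the name keeps pointing at the single node IP used before, access fails as soon as that host goes down. Pointing it at the allocated IP avoids that problem, and no port is needed in the request: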

[root@westos ~]# vim /etc/hosts

[root@westos ~]# tail -n1 /etc/hosts

172.25.25.100 www1.westos.org

[root@westos ~]# ping -c1 -w1 www1.westos.org

PING www1.westos.org (172.25.25.100) 56(84) bytes of data.

64 bytes from www1.westos.org (172.25.25.100): icmp_seq=1 ttl=64 time=0.542 ms

--- www1.westos.org ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.542/0.542/0.542/0.000 ms

[root@westos ~]# curl www1.westos.org

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@westos ~]# curl www1.westos.org/hostname.html

deployment-example-5b768f7647-t769f

[root@westos ~]# curl www1.westos.org/hostname.html

deployment-example-5b768f7647-jv7kf

[root@westos ~]# curl www1.westos.org/hostname.html

deployment-example-5b768f7647-t769f

Add a second Service to observe the effect:

[root@server2 ingress]# cp ../svc.yaml .

[root@server2 ingress]# vim svc.yaml

[root@server2 ingress]# cat svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  #type: LoadBalancer
  type: ClusterIP
  #clusterIP: None
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    app: myapp
  type: ClusterIP
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@server2 ingress]# kubectl apply -f svc.yaml

service/myservice unchanged

service/nginx-svc created

[root@server2 ingress]# kubectl describe svc nginx-svc

## At this point the Service has no Endpoints, because no pod matches its selector

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=myapp

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.108.174.65

IPs: 10.108.174.65

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: <none>

Session Affinity: None

Events: <none>

[root@server2 ingress]# kubectl get pod --show-labels

## No pod carries the app=myapp label either

NAME READY STATUS RESTARTS AGE LABELS

deployment-example-5b768f7647-2psc6 1/1 Running 0 144m app=nginx,pod-template-hash=5b768f7647

deployment-example-5b768f7647-cdfdk 1/1 Running 0 144m app=nginx,pod-template-hash=5b768f7647

deployment-example-5b768f7647-q76rp 1/1 Running 0 144m app=nginx,pod-template-hash=5b768f7647

Add a Deployment whose pods carry the matching label:

[root@server2 ingress]# cp ../deployment.yaml .

[root@server2 ingress]# cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-www2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v2
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 2
          periodSeconds: 3
          timeoutSeconds: 1
        readinessProbe:
          httpGet:
            path: /hostname.html
            port: 80
          initialDelaySeconds: 1
          periodSeconds: 3
          timeoutSeconds: 1

[root@server2 ingress]# kubectl apply -f deployment.yaml

[root@server2 ingress]# kubectl get pod --show-labels

## The pods now show the app=myapp label, and the Service lists their addresses as Endpoints

[root@server2 ingress]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.97:80,10.244.1.98:80,10.244.2.83:80

Session Affinity: None

Events: <none>
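The link between a Service and its Endpoints is the label selector: a pod becomes an endpoint only when its labels contain every key/value pair of the selector. The following is a minimal Python sketch of that equality-based matching, using the pod names and labels from the earlier `kubectl get pod --show-labels` output. The helpers `selects` and `endpoints_for` are hypothetical names used for illustration; they model the behaviour and are not a Kubernetes API.

```python
def selects(selector: dict, labels: dict) -> bool:
    """Return True when every selector key/value pair is present in the pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Pod labels copied from the `kubectl get pod --show-labels` output shown earlier.
pods = {
    "deployment-example-5b768f7647-2psc6": {"app": "nginx", "pod-template-hash": "5b768f7647"},
    "deployment-example-5b768f7647-cdfdk": {"app": "nginx", "pod-template-hash": "5b768f7647"},
    "deployment-example-5b768f7647-q76rp": {"app": "nginx", "pod-template-hash": "5b768f7647"},
}


def endpoints_for(selector: dict, pods: dict) -> list:
    """List the pods that a Service with this selector would pick up."""
    return sorted(name for name, labels in pods.items() if selects(selector, labels))


print(endpoints_for({"app": "myapp"}, pods))  # selector of nginx-svc before the new Deployment
print(endpoints_for({"app": "nginx"}, pods))  # selector of myservice
```

This is why `nginx-svc` showed `Endpoints: <none>` until a Deployment labelled `app: myapp` was created.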

Now edit the Ingress to add a second host; the ingress controller picks up the change dynamically.

[root@server2 ingress]# vim ingress.yaml

[root@server2 ingress]# cat ingress.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www1
spec:
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
  - host: www2.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-svc
          servicePort: 80

[root@server2 ingress]# kubectl apply -f ingress.yaml

[root@server2 ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-www1 <none> www1.westos.org,www2.westos.org 172.25.15.100 80 18m

[root@server2 ingress]# kubectl describe ingress ingress-www1

Name: ingress-www1

Namespace: default

Address: 172.25.15.100

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

Rules:

Host Path Backends

---- ---- --------

www1.westos.org

/ myservice:80 (10.244.1.94:80,10.244.1.95:80,10.244.2.79:80)

www2.westos.org

/ nginx-svc:80 (10.244.1.97:80,10.244.1.98:80,10.244.2.83:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 31s (x3 over 18m) nginx-ingress-controller Scheduled for sync

8.2 Ingress TLS configuration

Before enabling encryption, generate a private key and a self-signed certificate:

[root@server2 ingress]# openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"

Generating a 2048 bit RSA private key

..........................+++

...+++

writing new private key to 'tls.key'

-----

[root@server2 ingress]# kubectl create secret tls tls-secret --key tls.key --cert tls.crt

secret/tls-secret created

## The command above stores the generated certificate and key in a Secret

[root@server2 ingress]# kubectl get secrets

NAME TYPE DATA AGE

default-token-z4gbr kubernetes.io/service-account-token 3 9d

tls-secret kubernetes.io/tls 2 9s
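The `DATA 2` column above reflects the two entries a Secret of type `kubernetes.io/tls` holds: `tls.crt` and `tls.key`, each base64-encoded. As a rough illustration, the Python sketch below builds an equivalent manifest as a dictionary. The function name `tls_secret_manifest` is hypothetical, and the certificate and key bytes are placeholders, not real PEM data.

```python
import base64
import json


def tls_secret_manifest(name: str, cert_pem: bytes, key_pem: bytes) -> dict:
    """Build a Secret object shaped like the one `kubectl create secret tls` creates."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/tls",
        "data": {
            # Secret data values are stored base64-encoded.
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


# Placeholder bytes, NOT a real certificate or key.
manifest = tls_secret_manifest("tls-secret", b"PLACEHOLDER CERT", b"PLACEHOLDER KEY")
print(json.dumps(manifest, indent=2))
```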

Enable TLS for the site www1.westos.org:

[root@server2 ingress]# vim ingress.yaml

[root@server2 ingress]# cat ingress.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www1
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www2
spec:
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-svc
          servicePort: 80

[root@server2 ingress]# kubectl apply -f ingress.yaml

[root@server2 ingress]# kubectl describe ingress

Name: ingress-www1

Namespace: default

Address: 172.25.25.3

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

TLS:

tls-secret terminates www1.westos.org

Rules:

Host Path Backends

---- ---- --------

www1.westos.org

/ myservice:80 (10.244.1.34:80,10.244.2.42:80,10.244.2.43:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 13s (x2 over 13s) nginx-ingress-controller Scheduled for sync

Name: ingress-www2

Namespace: default

Address: 172.25.25.3

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

Rules:

Host Path Backends

---- ---- --------

www2.westos.org

/ nginx-svc:80 (10.244.1.38:80,10.244.2.46:80,10.244.2.47:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 13s (x2 over 13s) nginx-ingress-controller Scheduled for sync

[root@server2 ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-www1 <none> www1.westos.org 172.25.25.3 80, 443 47s

ingress-www2 <none> www2.westos.org 172.25.25.3 80 47s

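Test: because TLS (port 443) is now enabled for www1, plain HTTP requests are redirected to HTTPS with a 308; if port 443 were not reachable, the site could not be accessed at all, since it is served encrypted. www2 has no TLS configured and returns its content directly.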

[root@westos ~]# curl -I www1.westos.org

HTTP/1.1 308 Permanent Redirect

Date: Mon, 14 Jun 2021 03:32:05 GMT

Content-Type: text/html

Content-Length: 164

Connection: keep-alive

Location: https://www1.westos.org

[root@westos ~]# curl -I www2.westos.org

HTTP/1.1 200 OK

Date: Mon, 14 Jun 2021 03:55:54 GMT

Content-Type: text/html

Content-Length: 65

Connection: keep-alive

Last-Modified: Sun, 25 Feb 2018 06:04:32 GMT

ETag: "5a9251f0-41"

Accept-Ranges: bytes

8.3 Ingress authentication configuration

[root@server2 ingress]# yum install -y httpd-tools

[root@server2 ingress]# htpasswd -c auth admin

New password:

Re-type new password:

Adding password for user admin

[root@server2 ingress]# htpasswd auth zxk

New password:

Re-type new password:

Adding password for user zxk

[root@server2 ingress]# cat auth

admin:$apr1$NAtpYX/0$bqGqb.8Vo7DqDCoILmUpv1

zxk:$apr1$zuNeydPF$nbL1qU65BmtgMMp9DGeAg0

[root@server2 ingress]# kubectl create secret generic basic-auth --from-file=auth

secret/basic-auth created

[root@server2 ingress]# kubectl get secrets

NAME TYPE DATA AGE

basic-auth Opaque 1 4m13s

default-token-z4gbr kubernetes.io/service-account-token 3 10d

tls-secret kubernetes.io/tls 2 42m

Edit the file to enable authentication:

[root@server2 ingress]# cat ingress.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www1
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - westps'
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www2
spec:
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-svc
          servicePort: 80

[root@server2 ingress]# kubectl apply -f ingress.yaml
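With `auth-type: basic`, a client has to send an HTTP Basic `Authorization` header, which is simply `user:password` encoded in base64. The sketch below shows how that header is formed; the function name `basic_auth_header` and the password `example-password` are placeholders chosen for illustration, since the real passwords entered into `htpasswd` are not shown in this article.

```python
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Return the value of the Authorization header for HTTP Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


# "admin" is one of the users created above; the password here is a placeholder.
header = basic_auth_header("admin", "example-password")
print("Authorization:", header)
```

On the command line, curl's `-u` option builds the same header, so a test along the lines of `curl -k -u admin https://www1.westos.org` should prompt for the password and then return the page (`-k` because the certificate is self-signed).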

8.4 Ingress address rewriting

- When www2.westos.org is accessed, redirect it straight to www2.westos.org/hostname.html:

[root@server2 ingress]# cat ingress.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www1
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - westps'
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: myservice
          servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-www2
  annotations:
    ## requests for www2.westos.org/ are redirected to www2.westos.org/hostname.html
    nginx.ingress.kubernetes.io/app-root: /hostname.html
spec:
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-svc
          servicePort: 80

[root@server2 ingress]# kubectl apply -f ingress.yaml

Warning: networking.k8s.io/v1beta1 Ingress is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.networking.k8s.io/ingress-www1 configured

ingress.networking.k8s.io/ingress-www2 configured
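The warning above means these manifests stop working on v1.22 and later. In `networking.k8s.io/v1`, the backend is written as `service.name` plus `service.port.number` (or `service.port.name`), and every path needs a `pathType`. The Python sketch below converts a manifest loaded as a dictionary; the helper names are hypothetical, and `Prefix` as the default `pathType` is an assumption that fits the plain `/` paths used here, not necessarily regex paths.

```python
import copy


def backend_to_v1(backend: dict) -> dict:
    """Translate a v1beta1 backend (serviceName/servicePort) to the v1 layout."""
    port = backend["servicePort"]
    port_ref = {"number": port} if isinstance(port, int) else {"name": port}
    return {"service": {"name": backend["serviceName"], "port": port_ref}}


def ingress_to_v1(ingress: dict, path_type: str = "Prefix") -> dict:
    """Return a converted copy; the input manifest is left untouched."""
    converted = copy.deepcopy(ingress)
    converted["apiVersion"] = "networking.k8s.io/v1"
    for rule in converted["spec"].get("rules", []):
        for path in rule["http"]["paths"]:
            path["backend"] = backend_to_v1(path["backend"])
            path.setdefault("pathType", path_type)  # pathType is required in v1
    return converted


old = {
    "apiVersion": "networking.k8s.io/v1beta1",
    "kind": "Ingress",
    "metadata": {"name": "ingress-www2"},
    "spec": {"rules": [{"host": "www2.westos.org", "http": {"paths": [
        {"path": "/", "backend": {"serviceName": "nginx-svc", "servicePort": 80}},
    ]}}]},
}
new = ingress_to_v1(old)
print(new["apiVersion"])
print(new["spec"]["rules"][0]["http"]["paths"][0])
```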

- Path rewriting

[root@server2 ingress]# vim ingress-rewrite.yaml

[root@server2 ingress]# cat ingress-rewrite.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
  name: ingress-rewrite
  namespace: default
spec:
  rules:
  - host: rewrite.westos.org
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80
        path: /westos(/|$)(.*)

[root@server2 ingress]# kubectl apply -f ingress-rewrite.yaml

Warning: networking.k8s.io/v1beta1 Ingress is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

[root@server2 ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 10d

myservice ClusterIP 10.97.144.118 <none> 80/TCP 136m

nginx-svc ClusterIP 10.108.174.65 <none> 80/TCP 85m

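The external access path is: traffic first reaches the ingress controller pod through the Service in the ingress-nginx namespace, and the controller then forwards it to the backend Service.

Test: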

[root@westos ~]# curl -I rewrite.westos.org/westos

HTTP/1.1 200 OK

Date: Mon, 14 Jun 2021 04:38:52 GMT

Content-Type: text/html

Content-Length: 65

Connection: keep-alive

Last-Modified: Sun, 25 Feb 2018 06:04:32 GMT

ETag: "5a9251f0-41"

Accept-Ranges: bytes

[root@westos ~]# curl -I rewrite.westos.org/westos/hostname.html

HTTP/1.1 200 OK

Date: Mon, 14 Jun 2021 04:39:03 GMT

Content-Type: text/html

Content-Length: 32

Connection: keep-alive

Last-Modified: Mon, 14 Jun 2021 03:11:07 GMT

ETag: "60c6c8cb-20"

Accept-Ranges: bytes

[root@westos ~]# curl -I rewrite.westos.org/westos/test.html

HTTP/1.1 404 Not Found

Date: Mon, 14 Jun 2021 04:39:11 GMT

Content-Type: text/html

Content-Length: 169

Connection: keep-alive

[root@westos ~]# curl rewrite.westos.org/westos/

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

[root@westos ~]# curl rewrite.westos.org/westos/hostname.html

deployment-www2-6bb947d8b-tnlhf

[root@westos ~]# curl rewrite.westos.org/westos/hostname.html

deployment-www2-6bb947d8b-9cbfp
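The results above follow from the pairing of `path: /westos(/|$)(.*)` with `rewrite-target: /$2`: whatever the second capture group matches becomes the path sent to the backend. The Python sketch below reproduces that substitution with the `re` module. Anchoring the pattern at the start of the path and the function name `rewrite` are assumptions made for this illustration; it is not the controller's actual code.

```python
import re

# Same expression as the Ingress path, anchored at the start for this sketch.
LOCATION = re.compile(r"^/westos(/|$)(.*)")


def rewrite(path: str):
    """Return the path forwarded to the backend, or None if the rule does not match."""
    match = LOCATION.match(path)
    if match is None:
        return None
    return "/" + match.group(2)  # equivalent of rewrite-target: /$2


for requested in ["/westos", "/westos/", "/westos/hostname.html", "/westos/test.html", "/other"]:
    print(requested, "->", rewrite(requested))
```

So `/westos/hostname.html` reaches the pod as `/hostname.html`, while `/westos/test.html` becomes `/test.html`, which the backend does not have, hence the 404.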

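9. k8s network communication

- k8s plugs in third-party plugins through the CNI interface to implement networking. Popular plugins currently include flannel and calico.

- Location of the CNI plugin configuration: # cat /etc/cni/net.d/10-flannel.conflist

The plugins rely on the following approaches:

Virtual bridge: containers attach through virtual NICs to a shared virtual bridge and communicate over it.

Multiplexing: MacVLAN, where multiple containers share one physical NIC.

Hardware switching: SR-IOV, where one physical NIC is virtualized into multiple interfaces; this gives the best performance.

- Container-to-container communication: containers in the same pod communicate over lo.

- Pod-to-pod communication:

Pods on the same node have their packets forwarded by the cni bridge.

Pods on different nodes need the network plugin to carry the traffic.

- Pod-to-Service communication: implemented with iptables or ipvs. ipvs cannot fully replace iptables, because ipvs only handles the load balancing; kube-proxy still relies on iptables for tasks such as SNAT/masquerading and packet filtering.

- Pod to external network: iptables MASQUERADE.

- Service to clients outside the cluster: ingress, NodePort, LoadBalancer.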

9.1 flannel network

- Communication structure

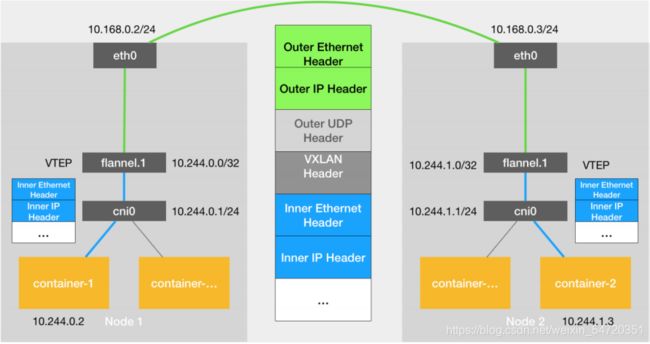

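Everything so far has used flannel. This is how flannel's vxlan mode handles cross-host communication:

- VXLAN (Virtual Extensible LAN) is a network virtualization technology supported natively by Linux. VXLAN can perform encapsulation and decapsulation entirely in kernel space, building an overlay network through a "tunnel" mechanism.

- VTEP: VXLAN Tunnel End Point. In flannel the default VNI is 1, which is why the VTEP device on every host is named flannel.1.

- cni0: a bridge device. A veth pair is created for each pod: one end is eth0 inside the pod, the other end is a port on the cni0 bridge.

- flannel.1: a VXLAN device (virtual NIC) that processes vxlan packets (encapsulation and decapsulation). Pod traffic between different nodes is sent from this overlay device to the peer through a tunnel.

- flanneld: flannel runs flanneld as an agent on every host. It obtains a small subnet for its host from the cluster's network address space, and the IP addresses of all containers on that host are allocated from it. flanneld also watches the K8s cluster datastore and supplies flannel.1 with the MAC, IP, and other network information it needs when encapsulating packets.

- How the communication works:

When a container sends an IP packet, it travels over the veth pair to the cni bridge and is then routed to the local flannel.1 device for processing.

VTEP devices talk to each other using layer-2 frames. After the source VTEP receives the original IP packet, it prepends the destination VTEP's MAC address, producing an inner frame addressed to the destination VTEP.

The inner frame cannot travel over the hosts' layer-2 network on its own. The Linux kernel has to wrap it further into an ordinary frame of the host network, which carries the inner frame out through the host's eth0.

Linux prepends a VXLAN header to the inner frame. The header contains an important field, the VNI, which a VTEP uses to decide whether a frame is meant for it.

The flannel.1 device only knows the MAC address of the flannel.1 device at the other end, not the address of the host behind it. In the Linux kernel, forwarding decisions for a network device come from the FDB (forwarding database); the FDB entries for flannel.1 are maintained by the flanneld process.

The Linux kernel then prepends a layer-2 frame header to the outer IP packet and fills in the MAC address of the destination node, which it obtains from the host's ARP table.

At this point flannel.1 can send the frame out through eth0, and it crosses the host network to the eth0 device of the destination node. The network stack on the destination host sees that the frame carries a VXLAN header with VNI 1, so the kernel unpacks it, extracts the inner frame, and hands it to the local flannel.1 device according to the VNI. flannel.1 decapsulates it and, following the routing table, sends the packet to the cni bridge, from where it reaches the target container.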
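To make the VXLAN header described above concrete, the Python sketch below packs and parses the 8-byte header laid out in RFC 7348: one flags byte with the "VNI valid" bit set, 24 reserved bits, the 24-bit VNI, and a final reserved byte. The function names and the placeholder inner frame are illustrative only; in flannel the kernel does this work, not user-space code.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag defined in RFC 7348


def vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header for the given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # First word: flags byte followed by 24 reserved bits.
    # Second word: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(packet: bytes) -> int:
    """Read the VNI back from the start of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return vni_word >> 8


inner_frame = b"placeholder for the inner Ethernet frame"
udp_payload = vxlan_header(1) + inner_frame  # carried inside the outer UDP datagram

print(vxlan_header(1).hex())
print(parse_vni(udp_payload))
```

The receiving side reads the VNI first, which is how the kernel knows the frame belongs to flannel.1 (VNI 1).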

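flannel supports several backends:

vxlan: packet encapsulation; the default.

DirectRouting (an option of the vxlan backend): direct routing; vxlan is used across subnets and host-gw style routing within the same subnet.

host-gw: host gateway; performs well, but only works when the nodes share a layer-2 network and does not work across networks. With thousands of pods it is prone to broadcast storms, so it is not recommended.

UDP: poor performance, not recommended.

Configure flannel: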

[root@server2 ~]# kubectl -n kube-system edit cm kube-flannel-cfg

    "Backend": {
      "Type": "host-gw"
    }

configmap/kube-flannel-cfg edited

[root@server2 ~]# kubectl -n kube-system get pod | grep kube-flannel | awk '{system("kubectl -n kube-system delete pod "$1"")}'

[root@server2 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.25.25.250 0.0.0.0 UG 0 0 0 eth0

10.244.0.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.244.1.0 172.25.25.3 255.255.255.0 UG 0 0 0 eth0

10.244.2.0 172.25.25.4 255.255.255.0 UG 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.25.25.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

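Traffic for the local pod subnet goes directly through cni0; the 10.244.1.0 subnet is reached via eth0 with 172.25.25.3 as the gateway, and the 10.244.2.0 subnet via eth0 with 172.25.25.4.

Every node gets such host-gateway routes. This mode requires all nodes to be in the same VLAN.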
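The way the kernel picks a route from this table can be sketched as a longest-prefix match. The Python example below encodes the relevant rows of the `route -n` output above with the standard `ipaddress` module and looks up a few destinations. The `lookup` helper is hypothetical, and the pod addresses used in the calls are only examples.

```python
import ipaddress

# (destination, gateway, interface) rows taken from the `route -n` output above.
ROUTES = [
    ("0.0.0.0/0", "172.25.25.250", "eth0"),
    ("10.244.0.0/24", None, "cni0"),
    ("10.244.1.0/24", "172.25.25.3", "eth0"),
    ("10.244.2.0/24", "172.25.25.4", "eth0"),
    ("172.25.25.0/24", None, "eth0"),
]


def lookup(destination: str):
    """Return (gateway, interface) of the most specific route containing the address."""
    addr = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(cidr), gateway, iface)
        for cidr, gateway, iface in ROUTES
        if addr in ipaddress.ip_network(cidr)
    ]
    _, gateway, iface = max(candidates, key=lambda route: route[0].prefixlen)
    return gateway, iface


print(lookup("10.244.0.5"))   # local pod subnet: straight to cni0
print(lookup("10.244.1.97"))  # pod on another node: via that node's address
print(lookup("8.8.8.8"))      # anything else: default gateway
```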

Modify the configuration:

[root@server2 ~]# kubectl -n kube-system edit cm kube-flannel-cfg

  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan",
        "DirectRouting": true
      }
    }

This means host-gw style routing is used between nodes on the same subnet, and vxlan between nodes on different subnets.
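The decision that DirectRouting implies can be sketched in a few lines of Python: if the peer node sits in the local node's subnet, a plain route is enough; otherwise the packet is encapsulated. The function name `choose_path`, the local address `172.25.25.2/24`, and the off-subnet peer `172.25.26.3` are assumptions made for illustration and do not come from the cluster shown here.

```python
import ipaddress


def choose_path(local_iface: str, peer_ip: str) -> str:
    """Pick the forwarding method for traffic to a peer node."""
    local = ipaddress.ip_interface(local_iface)
    if ipaddress.ip_address(peer_ip) in local.network:
        return "direct route (host-gw style)"
    return "vxlan encapsulation"


# Hypothetical node address; 172.25.25.3 is a peer from the routing table above.
print(choose_path("172.25.25.2/24", "172.25.25.3"))
# Hypothetical peer on another subnet.
print(choose_path("172.25.25.2/24", "172.25.26.3"))
```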

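9.2 calico network plugin

Official site: https://docs.projectcalico.org/getting-started/kubernetes/self-managed-onprem/onpremises

- About calico:

flannel provides network connectivity; calico's distinguishing feature is isolation between pods.

It routes with BGP, although topology computation and convergence for a large number of endpoints can take noticeable time and computing resources.

It does pure layer-3 forwarding with no NAT or overlay in between, which gives the best forwarding efficiency.

Calico depends only on layer-3 reachability. Having so few dependencies lets it fit VM, container, white-box, or mixed environments.

Installing calico: before installing, clean up what the previous plugin left behind to avoid conflicts between the two: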

[root@server2 ~]# kubectl delete -f kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds" deleted

[root@server2 ~]# kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

coredns-85ffb569d4-85kp7 1/1 Running 4 9d

coredns-85ffb569d4-bd579 1/1 Running 4 9d

etcd-server2 1/1 Running 4 9d

kube-apiserver-server2 1/1 Running 4 9d

kube-controller-manager-server2 1/1 Running 4 9d

kube-proxy-6f78h 1/1 Running 1 17h

kube-proxy-7jvkr 1/1 Running 1 17h

kube-proxy-9d5s7 1/1 Running 1 17h

kube-scheduler-server2 1/1 Running 4 9d

## Perform this cleanup on every node

[root@server2 ~]# cd /etc/cni/net.d/

[root@server2 net.d]# ls

10-flannel.conflist

[root@server2 net.d]# mv 10-flannel.conflist /mnt/

Use the command arp -an to inspect the host's ARP table (the MAC addresses it has learned).

- Deploy the calico plugin

Download the calico manifest:

[root@server2 ~]# mkdir calico/ && cd calico/

[root@server2 calico]# wget https://docs.projectcalico.org/manifests/calico.yaml

[root@server2 calico]# vim calico.yaml

Based on the manifest, pull the required images and push them to the private registry:

[root@server1 ~]# docker pull docker.io/calico/cni:v3.19.1

[root@server1 ~]# docker pull docker.io/calico/pod2daemon-flexvol:v3.19.1

[root@server1 ~]# docker pull docker.io/calico/node:v3.19.1

[root@server1 ~]# docker pull docker.io/calico/kube-controllers:v3.19.1

[root@server1 ~]# docker images | grep calico

calico/node v3.19.1 c4d75af7e098 3 weeks ago 168MB

calico/pod2daemon-flexvol v3.19.1 5660150975fb 3 weeks ago 21.7MB

calico/cni v3.19.1 5749e8b276f9 3 weeks ago 146MB

calico/kube-controllers v3.19.1 5d3d5ddc8605 3 weeks ago 60.6MB

[root@server1 ~]# docker images | grep calico | awk '{system("docker tag "$1":"$2" reg.westos.org/"$1":"$2"")}'

## Retag the images

[root@server1 ~]# docker images | grep reg.westos.org\/calico | awk '{system("docker push "$1":"$2"")}'

## Push them

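Then edit the manifest to point the image paths at the private registry, and adjust the following settings.

IPIP mode: for cases where the nodes hosting the communicating pods are on different subnets, i.e. cross-subnet access.

BGP mode: for cases where the nodes hosting the communicating pods are on the same subnet; suited to large networks.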

[root@server2 calico]# vim calico.yaml

            - name: CALICO_IPV4POOL_IPIP
              value: "off"
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"

After applying the manifest, the calico pods show up:

[root@server2 calico]# kubectl apply -f calico.yaml

[root@server2 calico]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-784b4f4c9-c9w6c 1/1 Running 0 41s

calico-node-4rn49 1/1 Running 0 41s

calico-node-6nmvk 1/1 Running 0 41s

calico-node-9st7d 1/1 Running 0 41s

coredns-85ffb569d4-85kp7 1/1 Running 4 9d

coredns-85ffb569d4-bd579 1/1 Running 4 9d

etcd-server2 1/1 Running 4 9d

kube-apiserver-server2 1/1 Running 4 9d

kube-controller-manager-server2 1/1 Running 4 9d

kube-proxy-6f78h 1/1 Running 1 17h

kube-proxy-7jvkr 1/1 Running 1 17h

kube-proxy-9d5s7 1/1 Running 1 17h

kube-scheduler-server2 1/1 Running 4 9d

[root@server2 calico]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.25.25.250 0.0.0.0 UG 0 0 0 eth0

10.244.0.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.244.1.0 172.25.25.3 255.255.255.255 UGH 0 0 0 eth0

10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 flannel.1

10.244.2.0 172.25.25.4 255.255.255.255 UGH 0 0 0 eth0

10.244.2.0 10.244.2.0 255.255.255.0 UG 0 0 0 flannel.1

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.25.25.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

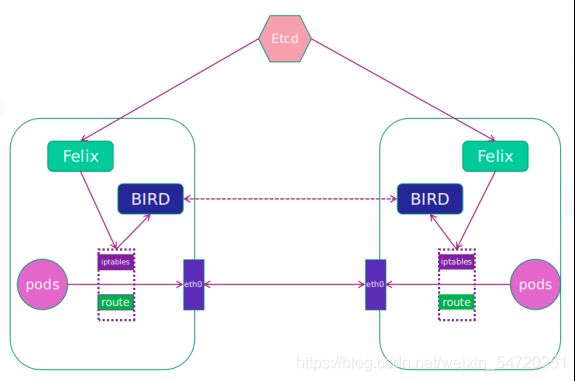

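- calico network architecture

Felix: watches the central etcd datastore for events. After a user creates a pod, Felix sets up its NIC, IP, and MAC, then writes an entry into the kernel routing table stating that this IP is reached through that interface. If the user has defined an isolation policy, Felix also programs it into ACLs to enforce the isolation.

BIRD: a standard routing daemon. It learns from the kernel which IP routes have changed and propagates them to all other hosts through standard BGP, so the rest of the cluster knows that this IP lives here and routes traffic accordingly.

- Network policy

NetworkPolicy model: controls the inbound and outbound network rules of the pods in a namespace.

Official documentation: https://kubernetes.io/zh/docs/concepts/services-networking/network-policies/

- Restrict access to a specific service: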

[root@server2 ingress]# vim ingress.yaml

## Remove the authentication settings from it, for testing

[root@server2 ingress]# kubectl apply -f ingress.yaml

[root@server2 k8s]# vim deployment.yaml

[root@server2 k8s]# cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-example
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1

[root@server2 k8s]# kubectl apply -f deployment.yaml

deployment.apps/deployment-example created

[root@server2 k8s]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

demo 1/1 Running 1 7m27s run=demo

deployment-example-6456d7c676-4d52s 1/1 Running 0 20s app=nginx,pod-template-hash=6456d7c676

deployment-example-6456d7c676-b8wcl 1/1 Running 0 20s app=nginx,pod-template-hash=6456d7c676

deployment-example-6456d7c676-bf67v 1/1 Running 0 20s app=nginx,pod-template-hash=6456d7c676

[root@server2 k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo 1/1 Running 1 7m59s 10.244.22.0 server4 <none> <none>

deployment-example-6456d7c676-4d52s 1/1 Running 0 52s 10.244.22.2 server4 <none> <none>

deployment-example-6456d7c676-b8wcl 1/1 Running 0 52s 10.244.141.192 server3 <none> <none>

deployment-example-6456d7c676-bf67v 1/1 Running 0 52s 10.244.22.1 server4 <none> <none>

Note: pods created earlier under flannel cannot be used for this test.

[root@server2 calico]# vim policy.yaml

[root@server2 calico]# cat policy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-nginx
spec:
  podSelector:
    matchLabels:
      app: nginx

[root@server2 calico]# kubectl apply -f policy.yaml

networkpolicy.networking.k8s.io/deny-nginx created

[root@server2 calico]# kubectl get networkpolicies.

NAME POD-SELECTOR AGE

deny-nginx app=nginx 3m24s

[root@server2 calico]# kubectl describe networkpolicies. deny-nginx

Name: deny-nginx

Namespace: default

Created on: 2021-06-14 15:44:05 +0800 CST

Labels: <none>

Annotations: <none>

Spec:

PodSelector: app=nginx

Allowing ingress traffic:

<none> (Selected pods are isolated for ingress connectivity)

Not affecting egress traffic

Policy Types: Ingress

In the test below, pods labelled app=nginx are now restricted:

[root@server2 calico]# curl 10.244.22.0

^C

[root@server2 calico]#

[root@server2 calico]# kubectl run web --image=nginx

pod/web created

[root@server2 calico]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

deployment-example-6456d7c676-4d52s 1/1 Running 0 6m5s app=nginx,pod-template-hash=6456d7c676

deployment-example-6456d7c676-b8wcl 1/1 Running 0 6m5s app=nginx,pod-template-hash=6456d7c676

deployment-example-6456d7c676-bf67v 1/1 Running 0 6m5s app=nginx,pod-template-hash=6456d7c676

web 1/1 Running 0 11s run=web

[root@server2 calico]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-example-6456d7c676-4d52s 1/1 Running 0 6m27s 10.244.22.2 server4 <none> <none>

deployment-example-6456d7c676-b8wcl 1/1 Running 0 6m27s 10.244.141.192 server3 <none> <none>

deployment-example-6456d7c676-bf67v 1/1 Running 0 6m27s 10.244.22.1 server4 <none> <none>

web 1/1 Running 0 33s 10.244.22.3 server4 <none> <none>

[root@server2 calico]# curl 10.244.22.3

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

- Allow a specific pod to access the service:

[root@server2 calico]# vim policy.yaml

[root@server2 calico]# cat policy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-nginx
spec:
  podSelector:
    matchLabels:
      app: nginx
---
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: access-demo
spec:
  podSelector:
    matchLabels:
      app: nginx
  ingress:
  - from:
    - podSelector:
        matchLabels:
          run: demo

[root@server2 calico]# kubectl apply -f policy.yaml

networkpolicy.networking.k8s.io/deny-nginx unchanged

networkpolicy.networking.k8s.io/access-demo created

[root@server2 calico]# curl 10.244.141.192

^C

[root@server2 calico]# kubectl run demo --image=busyboxplus -it

If you don't see a command prompt, try pressing enter.

/ # curl 10.244.141.192

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

/ #

Now only pods labelled run: demo can reach the nginx service; everything else is still denied by default.
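The combined effect of `deny-nginx` and `access-demo` follows from two NetworkPolicy rules: a pod is isolated for ingress as soon as any policy selects it, and traffic is then allowed if at least one selecting policy has a matching `from` entry. The Python sketch below models just that, for pods within a single namespace. The data layout and the helper names are hypothetical simplifications, not how calico implements policy.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Equality-based label match; an empty selector matches every pod."""
    return all(labels.get(key) == value for key, value in selector.items())


# Simplified view of the two policies above (same namespace, podSelector peers only).
POLICIES = [
    {"name": "deny-nginx", "podSelector": {"app": "nginx"}, "ingress_from": []},
    {"name": "access-demo", "podSelector": {"app": "nginx"}, "ingress_from": [{"run": "demo"}]},
]


def ingress_allowed(source_labels: dict, target_labels: dict, policies=POLICIES) -> bool:
    selecting = [p for p in policies if matches(p["podSelector"], target_labels)]
    if not selecting:
        return True  # pods selected by no policy are not isolated
    # Policies are additive: one matching allow rule is enough.
    return any(
        matches(peer, source_labels)
        for policy in selecting
        for peer in policy["ingress_from"]
    )


print(ingress_allowed({"run": "demo"}, {"app": "nginx"}))  # demo pod -> nginx pod
print(ingress_allowed({"run": "web"}, {"app": "nginx"}))   # web pod -> nginx pod
print(ingress_allowed({"run": "demo"}, {"run": "web"}))    # web pod is not selected by any policy
```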

- Deny all traffic between pods in a namespace

[root@server2 calico]# cat policy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-nginx
spec:
  podSelector:
    matchLabels:
      app: nginx
---
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: access-demo
spec:
  podSelector:
    matchLabels:
      app: nginx
  ingress:
  - from:
    - podSelector:
        matchLabels:
          run: demo
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny
  namespace: default
spec:
  podSelector: {}

[root@server2 calico]# kubectl apply -f policy.yaml

networkpolicy.networking.k8s.io/deny-nginx unchanged

networkpolicy.networking.k8s.io/access-demo unchanged

networkpolicy.networking.k8s.io/default-deny created

[root@server2 calico]# kubectl get networkpolicies.

NAME POD-SELECTOR AGE

access-demo app=nginx 5m19s

default-deny <none> 16s

deny-nginx app=nginx 13m

[root@server2 calico]# kubectl create namespace demo

namespace/demo created

[root@server2 calico]# kubectl get ns

NAME STATUS AGE

default Active 10d

demo Active 6s

ingress-nginx Active 6h50m

kube-node-lease Active 10d

kube-public Active 10d

kube-system Active 10d

metallb-system Active 18h

[root@server2 calico]# kubectl run demo --image=busyboxplus -it -n demo

If you don't see a command prompt, try pressing enter.

/ # curl 10.244.141.192

^C

/ # 10.244.22.3

[root@server2 calico]# kubectl delete -f policy.yaml

networkpolicy.networking.k8s.io "deny-nginx" deleted

networkpolicy.networking.k8s.io "access-demo" deleted

networkpolicy.networking.k8s.io "default-deny" deleted

[root@server2 calico]# kubectl -n demo attach demo -it

If you don't see a command prompt, try pressing enter.

/ # curl 10.244.22.3

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

- Deny access from other namespaces

Policies can interfere with one another, so delete the earlier ones before running this experiment.

[root@server2 calico]# kubectl delete -f policy.yaml

networkpolicy.networking.k8s.io "deny-nginx" deleted

networkpolicy.networking.k8s.io "access-demo" deleted

networkpolicy.networking.k8s.io "default-deny" deleted

[root@server2 calico]# vim policy2.yaml

[root@server2 calico]# cat policy2.yaml

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-namespace
spec:
  podSelector:
    matchLabels:
  ingress:
  - from:
    - podSelector: {}

[root@server2 calico]# kubectl apply -f policy2.yaml

networkpolicy.networking.k8s.io/deny-namespace created

[root@server2 calico]# kubectl -n demo attach demo -it

If you don't see a command prompt, try pressing enter.

Access from the demo namespace is now denied.

- Allow only a specific namespace to access the service

[root@server2 calico]# vim policy2.yaml

[root@server2 calico]# cat policy2.yaml

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-namespace
spec:
  podSelector:
    matchLabels:
  ingress:
  - from:
    - podSelector: {}
---
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: access-namespace
spec:
  podSelector:
    matchLabels:
      run: web
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          role: prod

[root@server2 calico]# kubectl label namespaces demo role=prod

namespace/demo labeled

[root@server2 calico]# kubectl apply -f policy2.yaml

networkpolicy.networking.k8s.io/deny-namespace configured

networkpolicy.networking.k8s.io/access-namespace created

[root@server2 calico]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-example-6456d7c676-4d52s 1/1 Running 0 39m 10.244.22.2 server4 <none> <none>

deployment-example-6456d7c676-b8wcl 1/1 Running 0 39m 10.244.141.192 server3 <none> <none>

deployment-example-6456d7c676-bf67v 1/1 Running 0 39m 10.244.22.1 server4 <none> <none>

web 1/1 Running 0 33m 10.244.22.3 server4 <none> <none>

[root@server2 calico]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

deployment-example-6456d7c676-4d52s 1/1 Running 0 39m app=nginx,pod-template-hash=6456d7c676

deployment-example-6456d7c676-b8wcl 1/1 Running 0 39m app=nginx,pod-template-hash=6456d7c676

deployment-example-6456d7c676-bf67v 1/1 Running 0 39m app=nginx,pod-template-hash=6456d7c676

web 1/1 Running 0 33m run=web

[root@server2 calico]# kubectl -n demo attach demo -it

If you don't see a command prompt, try pressing enter.

/ # curl 10.244.22.2

^C

/ # curl 10.244.22.3

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

/ #
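The different results for 10.244.22.2 and 10.244.22.3 come from how `from` peers are scoped: a bare `podSelector` only matches pods in the policy's own namespace, while a `namespaceSelector` matches pods in any namespace carrying the given labels. The Python sketch below models `deny-namespace` and `access-namespace` under that rule. The data layout and helper names are hypothetical, and the namespace label table only contains the `role=prod` label added above.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Equality-based label match; an empty selector matches everything."""
    return all(labels.get(key) == value for key, value in selector.items())


# Namespace labels relevant here; demo was labelled role=prod above.
NAMESPACE_LABELS = {"default": {}, "demo": {"role": "prod"}}

POLICY_NAMESPACE = "default"  # both policies live in the default namespace
POLICIES = [
    # deny-namespace: selects all pods, allows peers from the same namespace only.
    {"podSelector": {}, "from": [{"podSelector": {}}]},
    # access-namespace: selects run=web, allows peers from namespaces labelled role=prod.
    {"podSelector": {"run": "web"}, "from": [{"namespaceSelector": {"role": "prod"}}]},
]


def peer_matches(peer: dict, src_ns: str, src_labels: dict) -> bool:
    if "namespaceSelector" in peer:
        if not matches(peer["namespaceSelector"], NAMESPACE_LABELS[src_ns]):
            return False
        return matches(peer.get("podSelector", {}), src_labels)
    # A bare podSelector is limited to the policy's own namespace.
    return src_ns == POLICY_NAMESPACE and matches(peer["podSelector"], src_labels)


def allowed(src_ns: str, src_labels: dict, target_labels: dict) -> bool:
    selecting = [p for p in POLICIES if matches(p["podSelector"], target_labels)]
    if not selecting:
        return True
    return any(peer_matches(peer, src_ns, src_labels) for p in selecting for peer in p["from"])


print(allowed("demo", {"run": "demo"}, {"app": "nginx"}))  # demo ns -> deployment pod
print(allowed("demo", {"run": "demo"}, {"run": "web"}))    # demo ns -> web pod
print(allowed("default", {"run": "web"}, {"app": "nginx"}))  # same namespace
```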

- Allow external access to the service

[root@server2 calico]# cat policy2.yaml

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-namespace
spec:
  podSelector:
    matchLabels:
  ingress:
  - from:
    - podSelector: {}
---
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: access-namespace
spec:
  podSelector:
    matchLabels:
      run: web
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          role: prod
---
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: web-allow-external
spec:
  podSelector:
    matchLabels:
      app: nginx
  ingress:
  - ports:
    - port: 80
    from: []

[root@server2 calico]# kubectl apply -f policy2.yaml

networkpolicy.networking.k8s.io/deny-namespace configured

networkpolicy.networking.k8s.io/access-namespace unchanged

networkpolicy.networking.k8s.io/web-allow-external created

[root@westos ~]# curl www1.westos.org/hostname.html

deployment-example-6456d7c676-4d52s

[root@westos ~]# curl www1.westos.org/hostname.html

deployment-example-6456d7c676-b8wcl

For more on policies, see the official documentation: https://docs.projectcalico.org/getting-started/kubernetes/self-managed-onprem/onpremises