1. Environment

Three CentOS 7.9 virtual machines:

192.168.56.102 node1 (2 CPUs; kubeadm expects at least 2 on the master node)

192.168.56.103 node2

192.168.56.104 node3
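The ssh-copy-id and scp commands later in this post address the machines as node1, node2 and node3, so every machine has to resolve those names. The post does not show how that was done. A minimal sketch, assuming the mapping goes into /etc/hosts (add_host_entry is a helper name made up for illustration):

```shell
#!/usr/bin/env bash
# Append "ip name" to a hosts file unless the name is already mapped there.
# add_host_entry is a hypothetical helper, not part of any tool.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Look for the name as a whole whitespace-delimited field on a non-comment line.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root, on each of the three machines):
#   add_host_entry /etc/hosts 192.168.56.102 node1
#   add_host_entry /etc/hosts 192.168.56.103 node2
#   add_host_entry /etc/hosts 192.168.56.104 node3
```

Because the helper checks before appending, it can be run again without creating duplicate lines.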

Set up passwordless SSH login between the three machines

ssh-keygen -t rsa

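The key pair is written to ~/.ssh/. Add the public key to authorized_keys (>> appends, so existing entries are kept):

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys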

chmod 700 ~/.ssh

chmod 600 ~/.ssh/authorized_keys

ssh-copy-id -i ~/.ssh/id_rsa.pub -p 22 root@node1 # repeat for node2 and node3

Change the hostname

hostname node1 # temporary, lost on reboot

hostnamectl set-hostname node1 # permanent

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux

setenforce 0 # temporary

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config # permanent

Disable swap

swapoff -a
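swapoff -a only lasts until the next reboot; the persistent setting lives in /etc/fstab. A sketch of commenting out the swap entry with sed, assuming swap entries are the uncommented lines that contain swap as a whole field (comment_out_swap is a name made up here; a .bak copy of the file is kept):

```shell
#!/usr/bin/env bash
# Prefix '#' to every uncommented fstab line that has "swap" as a
# whitespace-delimited field. The original file is saved as <file>.bak.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Usage (as root):
#   comment_out_swap /etc/fstab
```

Lines that are already commented are skipped, so running it twice does not stack up # characters.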

/etc/fstab # comment out the swap entry here to make this permanent

Install Docker

Install the tools and storage driver dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the yum repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Enable the nightly repository (optional; docker-ce-stable is already enabled by default)

yum-config-manager --enable docker-ce-nightly

Remove old versions

yum remove docker docker-common docker-selinux docker-engine docker-io

Install Docker

yum install docker-ce-19.03.15 docker-ce-cli-19.03.15 containerd.io -y # Docker pinned to 19.03.15; containerd.io is versioned separately, so it is left unpinned

Start Docker

systemctl start docker

systemctl enable docker

Edit /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors":["https://docker.mirrors.ustc.edu.cn"]

}

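Copy the file to the other two nodes, then restart Docker on every node (systemctl restart docker) so the change takes effect:

scp /etc/docker/daemon.json root@node2:/etc/docker/

scp /etc/docker/daemon.json root@node3:/etc/docker/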
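Once Docker has been restarted, it is worth confirming on each node that the systemd cgroup driver is really in use, since kubelet and Docker have to agree on it. A sketch of such a check built on docker info --format (check_cgroup_driver is a made-up name):

```shell
#!/usr/bin/env bash
# Succeed only if Docker reports "systemd" as its cgroup driver.
check_cgroup_driver() {
    local driver
    driver="$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)"
    if [ "$driver" = "systemd" ]; then
        echo "cgroup driver OK: systemd"
    else
        echo "unexpected cgroup driver: '${driver}' (expected systemd)" >&2
        return 1
    fi
}

# Usage (on each node, after systemctl restart docker):
#   check_cgroup_driver
```

An empty driver in the error message usually means the Docker daemon is not running at all.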

Add the Kubernetes yum repository

/etc/yum.repos.d/k8s.repo

[kubernetes]

name=kubernetes

enabled=1

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

yum clean all

yum makecache

2. Install Kubernetes

yum search kubeadm --showduplicates

Run on each of the three machines:

yum install kubeadm-1.20.9-0 kubectl-1.20.9-0 kubelet-1.20.9-0 -y

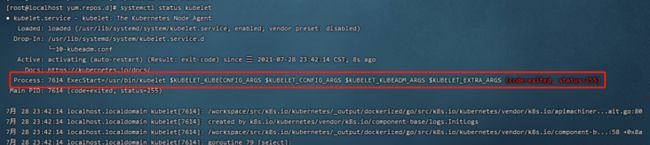

systemctl start kubelet

systemctl enable kubelet

Initialize on the master node

kubeadm init \
--apiserver-advertise-address 192.168.56.102 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.20.9 \
--service-cidr 10.1.0.0/16 \
--pod-network-cidr 10.244.0.0/16
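The same settings can also be kept in a kubeadm configuration file, which is easier to keep under version control than a long command line. A sketch, assuming the kubeadm.k8s.io/v1beta2 config API that ships with kubeadm 1.20; the function name and the output file name are made up:

```shell
#!/usr/bin/env bash
# Write a kubeadm v1beta2 config holding the same values as the kubeadm init flags.
write_kubeadm_config() {
    local out="${1:-kubeadm-config.yaml}"
    cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.102
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.9
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.244.0.0/16
EOF
}

# Usage on the master node:
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```

kubeadm does not allow most of these flags to be combined with --config, so the file replaces the flags rather than supplementing them.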

---------------------------------------------------------------------

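--apiserver-advertise-address 192.168.56.102 # IP address of the master node

--image-repository registry.aliyuncs.com/google_containers # registry the component images are pulled from

--kubernetes-version v1.20.9 # Kubernetes version

--service-cidr 10.1.0.0/16 # Service network range

--pod-network-cidr 10.244.0.0/16 # Pod network range (10.244.0.0/16 is flannel's default)

After kubeadm init completes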

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubeadm init also prints the join command for the worker nodes; run it on node2 and node3:

kubeadm join 192.168.56.102:6443 --token 9ei6kq.imr068aa8mvphhvt \
--discovery-token-ca-cert-hash sha256:07093c3ae6cddb40a32b9648522248d4b02bd27a0e4338f525031663c4fd2aa2

kubectl get nodes

[root@localhost kubernetes]# kubectl get nodes

NAME                    STATUS     ROLES                  AGE   VERSION
localhost.localdomain   NotReady   control-plane,master   17m   v1.20.9

kubectl get pod -A

NAMESPACE     NAME                                            READY   STATUS    RESTARTS   AGE
kube-system   coredns-7f89b7bc75-76xh8                        0/1     Pending   0          19m
kube-system   coredns-7f89b7bc75-pkzzm                        0/1     Pending   0          19m
kube-system   etcd-localhost.localdomain                      1/1     Running   0          19m
kube-system   kube-apiserver-localhost.localdomain            1/1     Running   0          19m
kube-system   kube-controller-manager-localhost.localdomain   1/1     Running   0          19m
kube-system   kube-proxy-7k48f                                1/1     Running   0          19m
kube-system   kube-scheduler-localhost.localdomain            1/1     Running   0          19m

The node is NotReady and the coredns pods are Pending because no pod network plugin is installed yet.

Install the flannel network plugin

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

3. Done

[root@localhost kubelet]# kubectl get nodes

NAME                    STATUS     ROLES                  AGE     VERSION
localhost.localdomain   Ready      control-plane,master   31m     v1.20.9
node2                   NotReady   <none>                 3m39s   v1.20.9
node3                   Ready      <none>                 3m19s   v1.20.9

4. Errors encountered

A. kubelet would not start

I added the following to Docker's daemon.json, but kubelet still would not start:

"exec-opts": ["native.cgroupdriver=systemd"],

journalctl -xeu kubelet | less

Error log:

localhost.localdomain kubelet[32128]: F0729 00:04:15.112170 32128 server.go:198] failed to load Kubelet config file /var/lib/kubelet/config.yaml, error failed to read kubelet config file "/var/lib/kubelet/config.yaml", error: open /var/lib/kubelet/config.yaml: no such file or directory

This is expected before kubeadm init has run, because kubeadm init is what creates /var/lib/kubelet/config.yaml. kubelet starts normally afterwards.
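The same file is written by kubeadm join on a worker node, so a quick look at it tells an expected crash loop apart from a real problem. A small sketch; the function name and the messages are made up:

```shell
#!/usr/bin/env bash
# Report whether the kubelet config that kubeadm generates is in place.
kubelet_config_status() {
    local cfg="${1:-/var/lib/kubelet/config.yaml}"
    if [ -f "$cfg" ]; then
        echo "present: $cfg"
    else
        echo "missing: $cfg (run kubeadm init or kubeadm join first)"
        return 1
    fi
}

# Usage:
#   kubelet_config_status || echo "kubelet restarts are expected for now"
```

If the file is present and kubelet still fails, the journalctl output needs a closer look.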

B. Worker nodes have no admin.conf file

mkdir -p $HOME/.kube

[root@localhost ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

cp: cannot stat '/etc/kubernetes/admin.conf': No such file or directory

[root@localhost ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

chown: cannot access '/root/.kube/config': No such file or directory

Copy the file over from the master node with scp.
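A sketch of that copy, run on the master node. It assumes root SSH access to the workers under the names node2 and node3 from section 1. RUN defaults to echo, so the commands are only printed until RUN is set to an empty string. Keep in mind that admin.conf carries full cluster-admin credentials.

```shell
#!/usr/bin/env bash
# Dry run by default: with RUN=echo the commands are printed, not executed.
RUN="${RUN-echo}"

# Copy the admin kubeconfig to each worker given as an argument.
copy_kubeconfig() {
    local node
    for node in "$@"; do
        $RUN ssh "root@${node}" "mkdir -p /root/.kube"
        $RUN scp /etc/kubernetes/admin.conf "root@${node}:/root/.kube/config"
    done
}

# Usage:
#   copy_kubeconfig node2 node3        # print the commands
#   RUN= copy_kubeconfig node2 node3   # actually run them
```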

C. If kube-flannel.yml cannot be fetched, download the file and apply the local copy

kubectl apply then failed with:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

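source /etc/profile

That fixes it: kubectl had no kubeconfig and fell back to localhost:8080, and KUBECONFIG now points it at admin.conf.

D. Docker would not start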

The mistake:

"exec-opts": ["native.cgroupdriver=systemd"], had been typed as "exe-opts": ["native.cgroupdriver=systemd"],

E. The kubeadm join information from the master node was lost

Either print a complete new join command:

kubeadm token create --print-join-command

Or rebuild it from a token and the CA certificate hash:

kubeadm token list

kubeadm token create --ttl 0

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
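The token and the hash then combine into the join command. A sketch that assembles it; both helper names are made up, and ca_cert_hash simply wraps the openssl pipeline shown above:

```shell
#!/usr/bin/env bash
# Print the sha256 hash of the CA public key (same pipeline as above).
ca_cert_hash() {
    local cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Assemble the join command from endpoint, token and hash.
build_join_command() {
    local endpoint="$1" token="$2" hash="$3"
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$endpoint" "$token" "$hash"
}

# Usage on the master node:
#   build_join_command 192.168.56.102:6443 "$(kubeadm token create)" "$(ca_cert_hash)"
```

The printed line is what gets run on the worker node.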

F. Error on join

kubeadm join 192.168.56.105:6443 --token kby4kb.8pk6i96jyuyqy9q1 --discovery-token-ca-cert-hash sha256:55a6876fb90e37679db74823ab8d469dabbe59ffa5ec927f1e6dc2ea16483732

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

error execution phase kubelet-start: a Node with name "localhost.localdomain" and status "Ready" already exists in the cluster. You must delete the existing Node or change the name of this new joining Node

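To see the stack trace of this error execute with --v=5 or higher

My virtual machines were all cloned from the same machine, so they shared one hostname. Giving each node a unique hostname (hostnamectl set-hostname, as in section 1) fixes this.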
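With cloned VMs it helps to look for duplicate hostnames before joining. A sketch: find_duplicate_names (a made-up helper) reads one hostname per line and prints every name that occurs more than once.

```shell
#!/usr/bin/env bash
# Print each input line that appears more than once.
find_duplicate_names() {
    sort | uniq -d
}

# Usage, assuming passwordless SSH to the three IPs from section 1:
#   for ip in 192.168.56.102 192.168.56.103 192.168.56.104; do
#       ssh "root@${ip}" hostname
#   done | find_duplicate_names
```

Any output means at least two machines share a name, and one of them needs a new hostname before kubeadm join.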