Implementing the KNN Algorithm in Python to Solve a Simple Classification Problem

KNN Classification

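KNN is a supervised learning algorithm that can solve both classification and regression problems. Given a set of labeled data, KNN classifies a new point by reference to its neighbors: it looks at the labels of the $K$ points closest to the new point and assigns the label that fits best. There are two ways to do this. In what follows, assume $K = k$.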

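The first approach classifies by the label proportions among the $k$ nearest points. We find the $k$ points closest to the new point, and the new point takes the label that occurs most often among them.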

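The second approach weights each neighbor by the reciprocal of its distance. Each of the $k$ nearest points votes for its own label with weight $w_i = \frac{1}{d_i}$, giving the weights $\left[\frac{1}{d_1}, \frac{1}{d_2}, \frac{1}{d_3}, \dots\right]$. The weights are summed per label, and the new point takes the label with the largest total, so closer neighbors count for more.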
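The two voting schemes can give different answers for the same neighbors. The sketch below is a minimal illustration, not the article's implementation: the neighbor list, the function names `majority_vote` and `weighted_vote`, and the small `eps` guard against a zero distance are all assumptions made for the example.

```python
from collections import Counter, defaultdict

# Hypothetical neighbours of a new point: (distance, label), already limited to k = 3.
neighbors = [(0.5, "B"), (2.0, "A"), (2.5, "A")]

def majority_vote(neighbors):
    """Approach 1: every neighbour counts as one vote."""
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

def weighted_vote(neighbors, eps=1e-12):
    """Approach 2: every neighbour votes with weight 1 / distance."""
    totals = defaultdict(float)
    for distance, label in neighbors:
        totals[label] += 1.0 / (distance + eps)  # eps avoids division by zero
    return max(totals, key=totals.get)

majority_label = majority_vote(neighbors)  # "A": two votes against one
weighted_label = weighted_vote(neighbors)  # "B": 1/0.5 = 2.0 beats 1/2.0 + 1/2.5 = 0.9
```

Here the single very close "B" neighbor outweighs the two more distant "A" neighbors under inverse-distance weighting, while plain majority voting picks "A".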

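We start with a simple KNN classifier. KNN classification consists of the following steps:

- Split the data into a training set X_train, y_train and a test set X_test, y_test using the train_test_split function.
- Create a classifier object and specify the $k$ value to use: knn_clf = KNNClassifier(k=3)
- Fit on the training data with knn_clf.fit(X_train, y_train); in practice this just hands the training data to the object.
- Predict, labeling each new point with one of the two approaches described above.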

In scikit-learn, dataset splitting is imported with from sklearn.model_selection import train_test_split. Here we write a simple model_selection.py file that imitates it.

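Two points need attention:

- The number of samples must equal the number of labels: X.shape[0] == y.shape[0]
- The test-set proportion test_size must lie between $0$ and $1$

Here X is the matrix of all sample data and y is the vector of all labels.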

Complete code for splitting the data into training and test sets

import numpy as np

def train_test_split(X, y, test_size=0.2, random_state=None):
    """Split X and y into X_train, X_test, y_train, y_test according to test_size."""
    assert X.shape[0] == y.shape[0], \
        "the size of X must be equal to the size of y"
    assert 0.0 <= test_size <= 1.0, \
        "test_size must be valid"

    # Compare against None so that random_state=0 also seeds the generator
    if random_state is not None:
        np.random.seed(random_state)

    # Shuffle the row indices, then cut them into a test part and a training part
    shuffled_indexes = np.random.permutation(len(X))
    test_size = int(len(X) * test_size)
    test_indexes = shuffled_indexes[:test_size]
    train_indexes = shuffled_indexes[test_size:]

    X_train = X[train_indexes]
    y_train = y[train_indexes]
    X_test = X[test_indexes]
    y_test = y[test_indexes]

    return X_train, X_test, y_train, y_test
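The shuffle-then-slice idea behind the split can be checked on a toy array. This is a sketch under stated assumptions: the data is made up, and it uses `np.random.default_rng` for a repeatable shuffle instead of the `np.random.seed` call in the function above.

```python
import numpy as np

# Toy data: 10 samples with 2 features each, and one label per sample.
X = np.arange(20).reshape(10, 2)
y = np.arange(10)

rng = np.random.default_rng(42)     # seeded generator, so the split is repeatable
shuffled = rng.permutation(len(X))  # a random ordering of the row indices 0..9

n_test = int(len(X) * 0.2)          # 20% of 10 samples gives 2 test samples
test_idx, train_idx = shuffled[:n_test], shuffled[n_test:]

# Indexing X and y with the same index arrays keeps samples and labels aligned.
X_train, X_test = X[train_idx], X[test_idx]
y_train, y_test = y[train_idx], y[test_idx]

print(X_train.shape, X_test.shape)  # (8, 2) (2, 2)
```

Because both arrays are indexed with the same shuffled indices, every row of X stays paired with its own label after the split.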

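The code above implements the split into training and test sets. Next we implement a simple KNN algorithm that labels points by distance. KNN can use several distance measures: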

- Manhattan distance, $p = 1$

- Euclidean distance, $p = 2$

- Minkowski distance, $d = \left(\sum\limits_{i=1}^{n} |x_i - y_i|^p\right)^{\frac{1}{p}}$, of which the two distances above are the special cases $p = 1$ and $p = 2$
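The relationship between the three distances can be sketched with a single function. The name `minkowski_distance` and the sample points are assumptions made for this illustration.

```python
def minkowski_distance(x, y, p=2):
    """Minkowski distance between two equal-length sequences of numbers."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)

a, b = (0, 0), (3, 4)
manhattan = minkowski_distance(a, b, p=1)  # |3| + |4| = 7
euclidean = minkowski_distance(a, b, p=2)  # sqrt(9 + 16) = 5
```

Setting p=1 sums the absolute coordinate differences, and p=2 gives the familiar straight-line distance.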

Our implementation uses the Euclidean distance, computed as follows:

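import numpy as np
from math import sqrt

# x_train is a single training sample; X_train holds all training samples
# Euclidean distance from x_predict to every training sample
distances = [sqrt(np.sum((x_train - x_predict)**2)) for x_train in X_train]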
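The same distances can also be computed without a Python loop. The following is a possible vectorized variant, not the article's code, using NumPy broadcasting and `np.linalg.norm`; the arrays are toy values chosen for the example.

```python
import numpy as np

X_train = np.array([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])  # toy training samples
x_predict = np.array([0.0, 0.0])                           # toy query point

# Broadcasting subtracts x_predict from every row; the norm is then taken per row.
distances = np.linalg.norm(X_train - x_predict, axis=1)    # distances 0, 5 and 10
```

On large training sets this usually runs faster than the list comprehension, since the arithmetic happens inside NumPy.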

Next comes a Top-K problem: we need the labels of the $K$ points with the smallest distances. We use:

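nearest = np.argsort(distances)  # indices of the training points, sorted by ascending distance
topK_y = [self._y_train[i] for i in nearest[:self.k]]  # labels of the k nearest training points
votes = Counter(topK_y)  # count how often each label occurs among the k nearest points
return votes.most_common(1)[0][0]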
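A full sort is more work than a Top-K selection strictly needs. As an alternative sketch, the standard-library `heapq.nsmallest` picks the k smallest distances directly; the distances and labels below are made-up values.

```python
import heapq

distances = [4.2, 0.7, 3.1, 0.2, 5.0]  # toy distances to five training points
labels = ["A", "B", "A", "B", "A"]     # label of each training point
k = 3

# Indices of the k smallest distances, closest first.
nearest = heapq.nsmallest(k, range(len(distances)), key=distances.__getitem__)
topK_y = [labels[i] for i in nearest]  # labels of the k nearest points
```

For a small training set the difference is negligible, so np.argsort remains a reasonable choice for readability.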

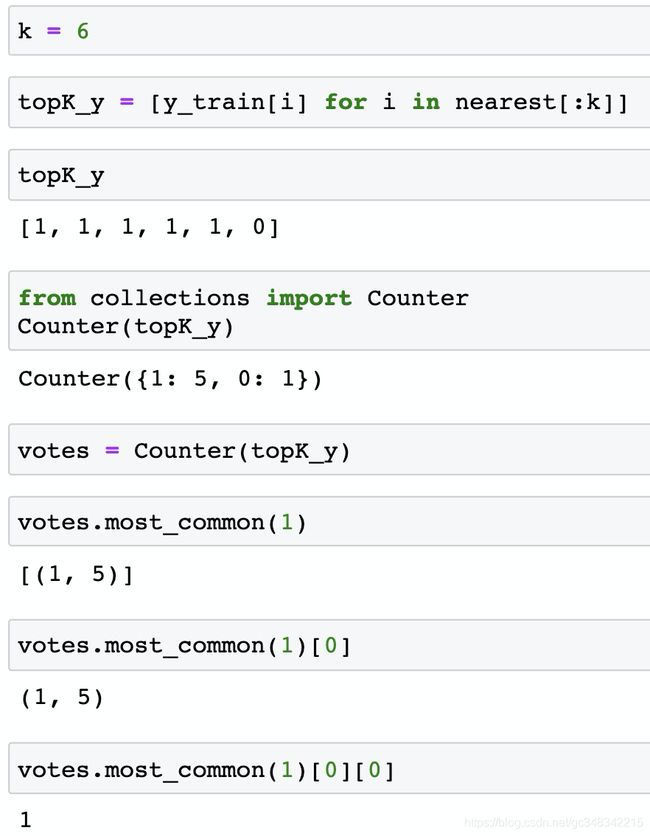

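most_common(n) returns the n entries with the most votes as a list of n key-value pairs, i.e. [(), (), ...]. most_common(1)[0] is the tuple for the single top entry, i.e. (). most_common(1)[0][0] is the key of that entry, i.e. the first element of the tuple. The accompanying figure illustrates this.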
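The three indexing steps can be traced on a small example. The label list is a toy assumption; `Counter.most_common` is the standard-library call used in the article.

```python
from collections import Counter

topK_y = ["cat", "dog", "cat"]  # toy labels of the 3 nearest neighbours
votes = Counter(topK_y)

pairs = votes.most_common(1)    # [('cat', 2)]: a list with one (label, count) tuple
top_pair = pairs[0]             # ('cat', 2): the tuple itself
winner = top_pair[0]            # 'cat': the label
```

When two labels have the same count, most_common orders them by first occurrence, so with neighbors listed closest first a tie goes to the label that appears earliest in the list.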


Complete code for the KNN prediction functions

# Methods of KNNClassifier; they rely on numpy (np), math.sqrt and collections.Counter

def predict(self, X_predict):
    """Given a dataset X_predict, return the vector of predicted labels."""
    assert self._X_train is not None and self._y_train is not None, \
        "must fit before predict!"
    assert X_predict.shape[1] == self._X_train.shape[1], \
        "the feature number of X_predict must be equal to X_train"

    y_predict = [self._predict(x) for x in X_predict]
    return np.array(y_predict)

def _predict(self, x):
    """Given a single sample x, return its predicted label."""
    assert x.shape[0] == self._X_train.shape[1], \
        "the feature number of x must be equal to X_train"

    # Euclidean distance from x to every training sample
    distances = [sqrt(np.sum((x_train - x)**2)) for x_train in self._X_train]
    nearest = np.argsort(distances)

    # Majority vote among the labels of the k nearest samples
    topK_y = [self._y_train[i] for i in nearest[:self.k]]
    votes = Counter(topK_y)

    return votes.most_common(1)[0][0]

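Once the new points have been classified by distance, we have the predicted values y_predict. How do we evaluate how accurate the predictions are? In scikit-learn, the following code computes the accuracy.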

from sklearn.metrics import accuracy_score

accuracy_score(y_test, y_predict)

Next we implement our own accuracy function. The core is a single line, sum(y_test == y_predict) / len(y_test), which computes the fraction of predictions that are correct.

Complete code for the accuracy function

import numpy as np

# Evaluation metric for classification problems

def accuracy_score(y_true, y_predict):
    """Compute the accuracy of y_predict against y_true."""
    assert y_true.shape[0] == y_predict.shape[0], \
        "the size of y_true must be equal to the size of y_predict"

    # Element-wise comparison gives a boolean array; its sum is the number of hits
    return np.sum(y_true == y_predict) / len(y_true)
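A small worked example shows what the comparison produces. The label arrays are made up for illustration, and the inputs are assumed to be NumPy arrays, since comparing two plain Python lists with == yields a single boolean rather than an element-wise result.

```python
import numpy as np

y_true = np.array([0, 1, 1, 2])     # toy ground-truth labels
y_predict = np.array([0, 1, 2, 2])  # toy predictions, one of them wrong

matches = (y_true == y_predict)     # element-wise: [True, True, False, True]
accuracy = np.sum(matches) / len(y_true)  # 3 correct out of 4 gives 0.75
```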

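With the steps above, we have a rough picture of how to split the data into training and test sets, how to classify new points with KNN, and how to evaluate the accuracy of a KNN model. Finally, we wrap the KNN algorithm up into a complete class.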

KNN implementation code

kNN.py:

import numpy as np
from math import sqrt
from collections import Counter
from .metrics import accuracy_score

class KNNClassifier:

    def __init__(self, k):
        """Initialize the kNN classifier."""
        assert k >= 1, "k must be valid"
        self.k = k
        self._X_train = None
        self._y_train = None

    def fit(self, X_train, y_train):
        """Fit the kNN classifier on the training set X_train, y_train."""
        assert X_train.shape[0] == y_train.shape[0], \
            "the size of X_train must be equal to the size of y_train"
        assert self.k <= X_train.shape[0], \
            "the size of X_train must be at least k."

        # kNN has no training phase beyond storing the data
        self._X_train = X_train
        self._y_train = y_train
        return self

    def predict(self, X_predict):
        """Given a dataset X_predict, return the vector of predicted labels."""
        assert self._X_train is not None and self._y_train is not None, \
            "must fit before predict!"
        assert X_predict.shape[1] == self._X_train.shape[1], \
            "the feature number of X_predict must be equal to X_train"

        y_predict = [self._predict(x) for x in X_predict]
        return np.array(y_predict)

    def _predict(self, x):
        """Given a single sample x, return its predicted label."""
        assert x.shape[0] == self._X_train.shape[1], \
            "the feature number of x must be equal to X_train"

        # Euclidean distance from x to every training sample
        distances = [sqrt(np.sum((x_train - x)**2)) for x_train in self._X_train]
        nearest = np.argsort(distances)

        # Majority vote among the labels of the k nearest samples
        topK_y = [self._y_train[i] for i in nearest[:self.k]]
        votes = Counter(topK_y)

        return votes.most_common(1)[0][0]

    def score(self, X_test, y_test):
        """Return the accuracy of the current model on the test set X_test, y_test."""
        y_predict = self.predict(X_test)
        return accuracy_score(y_test, y_predict)

    def __repr__(self):
        return "KNN(k=%d)" % self.k

metrics.py:

import numpy as np

# Evaluation metric for classification problems

def accuracy_score(y_true, y_predict):
    """Compute the accuracy of y_predict against y_true."""
    assert y_true.shape[0] == y_predict.shape[0], \
        "the size of y_true must be equal to the size of y_predict"

    # Element-wise comparison gives a boolean array; its sum is the number of hits
    return np.sum(y_true == y_predict) / len(y_true)

This completes the implementation of KNN for classification. KNN can also be used to solve regression problems.
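For regression, one common variant replaces the vote with an average: the prediction is the mean target value of the k nearest training points. The sketch below assumes that variant; the function name `knn_regress` and the data are hypothetical and are not taken from the article.

```python
from math import dist

def knn_regress(X_train, y_train, x, k=3):
    """Predict a numeric target as the mean target of the k nearest training points."""
    if not 1 <= k <= len(X_train):
        raise ValueError("k must be between 1 and the number of training points")
    # Sort training indices by Euclidean distance to x and keep the k closest.
    order = sorted(range(len(X_train)), key=lambda i: dist(X_train[i], x))
    nearest = order[:k]
    return sum(y_train[i] for i in nearest) / k

X_train = [(1.0,), (2.0,), (3.0,), (10.0,)]  # toy one-feature samples
y_train = [1.0, 2.0, 3.0, 10.0]              # toy numeric targets
prediction = knn_regress(X_train, y_train, (2.0,), k=3)  # mean of 1.0, 2.0, 3.0
```

The distant point at 10.0 is not among the three nearest neighbors, so it has no influence on the prediction.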