Hand-Deriving Backpropagation, Part 2: A Multi-Layer Neural Network in Python (PyTorch-Style Framework)

Dataset download link:

Link: cat vs. non-cat dataset in h5 format

Extraction code: s4kp

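How the BCELoss gradient is derived (chain rule):

Linear1 --> relu --> Linear2 --> sigmoid --> Loss;

Differentiating the loss with respect to the network output A gives dA2 = (1-Y)/(1-A) - Y/A;

Differentiating sigmoid gives dA2_dZ2 = A * (1 - A);

Multiplying the two gives dZ2 = dA2 * dA2_dZ2;

To get dW2 and dB2 for Linear2, we need A1, the output of the relu activation,

A1 is pulled out with next(), which gives dW2 and dB2;

To get the gradient with respect to A1 (the relu output), we need W2, the weight of Linear2, because dZ2/dA1 = W2,

W2 is taken from the dynamic-graph nodes: each next() call yields one layer, and here W2 = layer.weight

This gives dA1 = dZ2 * W2 (a matrix product, np.dot(dZ2, W2) in the code);

Next comes the relu derivative relu_grad, which is the boolean matrix A1 > 0;

(the relu derivative is 1 for positive inputs and 0 for negative inputs)

Then dZ1 = dA1 * relu_grad;

Finally we get dW1 and dB1 for Linear1.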
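The steps above can be checked numerically. The following is a minimal sketch, not the code from this post: it rebuilds the same Linear1 -> relu -> Linear2 -> sigmoid -> BCE chain with plain NumPy arrays and compares the hand-derived gradients against central finite differences. The helper names (`forward`, `bce`, `backward`), the tiny layer sizes, and the random data are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(params, x):
    W1, b1, W2, b2 = params
    A1 = np.maximum(x @ W1.T + b1.T, 0)   # Linear1 -> relu
    A2 = sigmoid(A1 @ W2.T + b2.T)        # Linear2 -> sigmoid
    return A1, A2

def bce(A, Y):
    return -np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A)) / Y.shape[0]

def backward(params, x, Y):
    W1, b1, W2, b2 = params
    m = Y.shape[0]
    A1, A2 = forward(params, x)
    dA2 = (1 - Y) / (1 - A2) - Y / A2     # derivative of the loss w.r.t. A2
    dZ2 = dA2 * (A2 * (1 - A2))           # times the sigmoid derivative
    dW2 = dZ2.T @ A1 / m
    dB2 = np.sum(dZ2.T, axis=1, keepdims=True) / m
    dA1 = dZ2 @ W2                        # dZ2/dA1 = W2
    dZ1 = dA1 * (A1 > 0)                  # relu derivative as a boolean mask
    dW1 = dZ1.T @ x / m
    dB1 = np.sum(dZ1.T, axis=1, keepdims=True) / m
    return [dW1, dB1, dW2, dB2]

# Hypothetical toy problem: 7 samples, 5 features, 4 hidden units, 1 output.
rng = np.random.default_rng(0)
x = rng.normal(size=(7, 5))
Y = rng.integers(0, 2, size=(7, 1)).astype(float)
params = [0.5 * rng.normal(size=(4, 5)), 0.5 * rng.normal(size=(4, 1)),
          0.5 * rng.normal(size=(1, 4)), np.zeros((1, 1))]

grads = backward(params, x, Y)
eps = 1e-6
max_err = 0.0
for p, g in zip(params, grads):
    for idx in np.ndindex(p.shape):
        old = p[idx]
        p[idx] = old + eps
        loss_plus = bce(forward(params, x)[1], Y)
        p[idx] = old - eps
        loss_minus = bce(forward(params, x)[1], Y)
        p[idx] = old                      # restore the parameter
        numeric = (loss_plus - loss_minus) / (2 * eps)
        max_err = max(max_err, abs(numeric - g[idx]))

print("largest gap between analytic and numeric gradient:", max_err)
```

If the derivation is right, the printed gap should be tiny compared with the gradients themselves. A check like this is a cheap way to catch a transposed matrix or a missing 1/m factor before training.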

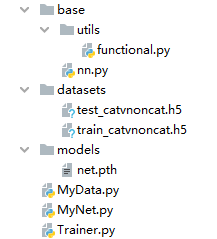

base\utils\functional.py

import numpy as np


def relu(z):
    return np.maximum(z, 0)


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def tanh(z):
    # same function as (e^z - e^-z) / (e^z + e^-z), without overflow for large |z|
    return np.tanh(z)
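The backward pass in this post takes the relu derivative from the activation output (A1 > 0) rather than from the pre-activation Z1. A small sketch of why the two masks agree; the array values are made up for illustration:

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0)

# Hypothetical pre-activations, including exact zeros.
Z1 = np.array([[-2.0, 0.0, 3.0],
               [1.5, -0.5, 0.0]])
A1 = relu(Z1)

mask_from_A1 = A1 > 0   # what the backward pass uses
mask_from_Z1 = Z1 > 0   # the usual definition of the relu derivative

print(mask_from_A1.astype(int))
print(np.array_equal(mask_from_A1, mask_from_Z1))
```

Because relu maps every non-positive input to 0 and leaves positive inputs unchanged, storing only the layer inputs (as the framework does) is enough to rebuild the mask.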

base\nn.py

import numpy as np


class Module:
    # Dynamic graph nodes, stored at class level and shared by every module
    node_parameters = []  # layers (nodes) recorded during the forward pass
    x_inputs = []  # input of each layer, needed for backprop (the first layer needs x, ...)

    def __call__(self, x):
        return self.forward(x)

    def layercount(self):
        return len(Module.node_parameters)

    def add_node(self, node):  # register a node
        Module.node_parameters.append(node)

    def clear_node(self):
        """
        clear network data
        :return:
        """
        Module.node_parameters.clear()
        Module.x_inputs.clear()

    def add_inputOfLayer(self, x_input):
        Module.x_inputs.append(x_input)

    def parameters(self):  # the weights are needed too (layer 1, layer 2, ...)
        for e in reversed(Module.node_parameters):
            # a for loop over this generator is equivalent to calling next() repeatedly
            yield e  # yield pauses here and hands out one layer per iteration

    def inputs(self):
        """
        :return: generator
        """
        for x in reversed(Module.x_inputs):
            yield x

    def forward(self, x):
        return x

class Linear(Module):
    """
    A node of the graph.
    With relu as the activation, weights are usually scaled by np.sqrt(2 / in_features).
    With tanh as the activation, weights are usually scaled by np.sqrt(1 / in_features).
    """

    def __init__(self, in_features, out_features, bias=True):
        self.weight = np.random.randn(out_features, in_features) * np.sqrt(2 / in_features)
        if bias:
            self.bias = np.zeros((out_features, 1))  # column vector, so that it broadcasts

    def forward(self, x):
        """
        x : (209, 12288)
        w : (out_features, 12288)
        :param x:
        :return:
        """
        self.add_node(self)  # self is the node: a linear layer is one node
        self.add_inputOfLayer(x)
        z = np.dot(x, self.weight.T)
        if hasattr(self, "bias"):
            z += self.bias.T
        return z

class MSELoss(Module):
    """
    Mean squared error
    loss = 1/m * Σ(A - Y)^2
    """

    def __call__(self, output, target):
        self.m = target.shape[0]  # target: (209, 1)
        self.A = output
        self.Y = target
        return self.forward(output, target)

    def forward(self, A, Y):
        self.loss = np.sum(np.square(A - Y)) / self.m
        return self

    def float(self):
        return self.loss

    def backward(self):
        """
        Core of backpropagation: the chain rule from calculus.
        dA -> dZ -> dW
        dA -> dZ -> dB
        Z = W * X + B
        A = g(Z)
        the loss is built from A - Y
        Z2 = W2 * A1 + B2
        :return:
        """
        dA2 = 2 * (self.A - self.Y)
        dA2_dZ2 = self.A * (1 - self.A)
        dZ2 = dA2 * dA2_dZ2

        inputs = self.inputs()
        A1 = next(inputs)
        dZ2_dW2 = A1
        dW2 = np.dot(dZ2.T, dZ2_dW2) / self.m
        dB2 = np.sum(dZ2.T, axis=1, keepdims=True) / self.m

        params = self.parameters()
        layer = next(params)
        W2 = layer.weight
        dA1 = np.dot(dZ2, W2)  # dloss/dA1
        relu_grad = A1 > 0
        dZ1 = dA1 * relu_grad  # dloss/dZ1

        x = next(inputs)
        dW1 = np.dot(dZ1.T, x) / self.m
        dB1 = np.sum(dZ1.T, axis=1, keepdims=True) / self.m
        # axis=1 is required: the hidden layer has 16 neurons, so we sum over
        # the samples and keep one bias gradient per neuron
        # keepdims keeps the result two-dimensional
        return {"DW2": dW2, "DB2": dB2, "DW1": dW1, "DB1": dB1}

class BCELoss(Module):
    """
    Binary cross entropy
    loss = -Σ(y*log(A) + (1-y)*log(1-A)) / m
    A is the output and y is the label; binary cross entropy is paired with sigmoid
    dA = (1-y)/(1-A) - y/A
    dZ = A - y no longer contains the sigmoid derivative, so the
    output-layer gradient does not vanish
    """

    def __call__(self, output, target):
        self.m = target.shape[0]
        self.A = output
        self.Y = target
        return self.forward(output, target)

    def forward(self, A, Y):
        self.loss = -np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A)) / self.m
        return self

    def float(self):
        return self.loss

    def backward(self):
        dA2 = (1 - self.Y) / (1 - self.A) - self.Y / self.A
        dA2_dZ2 = self.A * (1 - self.A)
        dZ2 = dA2 * dA2_dZ2

        inputs = self.inputs()
        A1 = next(inputs)
        dW2 = np.dot(dZ2.T, A1) / self.m
        dB2 = np.sum(dZ2.T, axis=1, keepdims=True) / self.m

        params = self.parameters()
        layer = next(params)
        W2 = layer.weight  # W2: (1, hidden_units)
        dA1 = np.dot(dZ2, W2)
        relu_grad = A1 > 0
        dZ1 = dA1 * relu_grad

        x = next(inputs)
        dW1 = np.dot(dZ1.T, x) / self.m
        dB1 = np.sum(dZ1.T, axis=1, keepdims=True) / self.m
        return {"DW2": dW2, "DB2": dB2, "DW1": dW1, "DB1": dB1}

class Optimizer:
    def __init__(self, net, lr=0.1):
        self.lr = lr
        self.net = net

    def step(self, grads):
        try:
            params = self.net.parameters()
            i = self.net.layercount()
            for param in params:  # update parameters from the last layer to the first
                param.weight = param.weight - self.lr * grads["DW" + str(i)]
                param.bias = param.bias - self.lr * grads["DB" + str(i)]
                i -= 1
        finally:
            self.net.clear_node()
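The optimizer walks the layers from last to first while counting the index down, so each layer picks up the gradient stored under its own number. A stripped-down sketch of that bookkeeping, where `TinyLayer` and all the numbers are hypothetical stand-ins rather than part of the framework:

```python
import numpy as np

class TinyLayer:
    """Hypothetical stand-in for a Linear layer: just holds weight and bias."""
    def __init__(self, weight, bias):
        self.weight = weight
        self.bias = bias

# Two layers in forward order, as the forward pass would record them.
layers = [TinyLayer(np.ones((2, 3)), np.zeros((2, 1))),
          TinyLayer(np.ones((1, 2)), np.zeros((1, 1)))]

# Gradients keyed the same way as the dict returned by backward().
grads = {"DW1": np.full((2, 3), 0.5), "DB1": np.full((2, 1), 0.5),
         "DW2": np.full((1, 2), 2.0), "DB2": np.full((1, 1), 2.0)}

lr = 0.1
i = len(layers)                      # start with the index of the last layer
for layer in reversed(layers):       # same order as Module.parameters()
    layer.weight = layer.weight - lr * grads["DW" + str(i)]
    layer.bias = layer.bias - lr * grads["DB" + str(i)]
    i -= 1

print(layers[0].weight[0, 0], layers[1].weight[0, 0])
```

Since the node list lives at class level, it has to be cleared after every step; otherwise the next forward pass would append the same layers again and the index would run past the keys in `grads`.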

MyData.py

import h5py


class MyDataset:
    def __init__(self):
        # open both files read-only
        self.train = h5py.File("datasets/train_catvnoncat.h5", "r")
        self.test = h5py.File("datasets/test_catvnoncat.h5", "r")

    def get_train_set(self):
        # scale pixel values to [0, 1]
        return self.train["train_set_x"][:] / 255., self.train["train_set_y"][:]

    def get_test_set(self):
        return self.test["test_set_x"][:] / 255., self.test["test_set_y"][:]


if __name__ == '__main__':
    data = MyDataset()
    print(data.get_train_set()[1].shape)
    print(data.get_test_set()[0].shape)
    # 209 training samples

MyNet.py

import base.nn as nn
import base.utils.functional as F


class MyNet(nn.Module):
    def __init__(self):
        """
        N    V
        209  12288   input
        209  16      hidden layer
        209  1       output
        """
        self.layer1 = nn.Linear(64*64*3, 16)
        # a single sigmoid output, so the result lines up with labels of shape (N, 1)
        self.layer2 = nn.Linear(16, 1)

    def forward(self, x):
        x = self.layer1(x)
        x = F.relu(x)
        x = self.layer2(x)
        x = F.sigmoid(x)
        return x
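To see how the shapes flow through this network, here is a rough standalone sketch of the same forward pass with plain arrays. It uses small made-up sizes in place of 209, 12288 and 16, and it assumes a single sigmoid output unit:

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, hidden = 5, 12, 4            # small stand-ins for 209, 12288, 16

x = rng.random((n, d))             # inputs scaled to [0, 1), like pixels / 255
W1 = rng.normal(size=(hidden, d)) * np.sqrt(2 / d)
b1 = np.zeros((hidden, 1))
W2 = rng.normal(size=(1, hidden)) * np.sqrt(2 / hidden)
b2 = np.zeros((1, 1))

z1 = x @ W1.T + b1.T               # (5, 12) @ (12, 4) + (1, 4) -> (5, 4)
a1 = np.maximum(z1, 0)             # relu
z2 = a1 @ W2.T + b2.T              # (5, 4) @ (4, 1) + (1, 1) -> (5, 1)
a2 = 1 / (1 + np.exp(-z2))         # sigmoid: one probability per sample

for name, arr in [("x", x), ("a1", a1), ("a2", a2)]:
    print(name, arr.shape)
```

Storing the weight as (out_features, in_features) and multiplying by its transpose mirrors the layout used by the Linear class in this post; the bias is a column vector, so it is transposed to broadcast across samples.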

Trainer.py

from MyNet import MyNet
import numpy as np
from MyData import MyDataset
import matplotlib.pyplot as plt
import pickle
import base.nn as nn


class Trainer:
    def __init__(self):
        self.net = MyNet()
        self.loss_func = nn.BCELoss()
        self.opt = nn.Optimizer(self.net, lr=0.01)
        self.dataset = MyDataset()

    def train(self):
        x, y = self.dataset.get_train_set()
        x = x.reshape(-1, 64 * 64 * 3)
        y = y[:, np.newaxis]  # (209, 1)
        epochs = 5000
        losses = []
        for i in range(epochs):
            out = self.net(x)
            loss = self.loss_func(out, y)
            if i % 100 == 0:
                losses.append(loss.float())
                print("{}/{},loss:{}".format(i, epochs, loss.float()))
                plt.clf()
                plt.plot(losses)
                plt.pause(0.1)
            grads = loss.backward()
            self.opt.step(grads)
        self.save(self.net, "models/net.pth")

    def save(self, net, path):
        with open(path, "wb") as f:
            pickle.dump(net, f)
        # print("saved successfully")

    def load(self, path):
        with open(path, "rb") as f:
            net = pickle.load(f)
        return net

    def test(self):
        x, y = self.dataset.get_test_set()
        net = self.load("models/net.pth")
        x_data = x.reshape(-1, 64*64*3)
        out = net(x_data)
        out = out.round()  # threshold the sigmoid output at 0.5
        y = y[:, np.newaxis]
        x = (x*255).astype(np.uint8)  # back to displayable pixel values
        accuracy = (out == y).mean()  # accuracy
        print(accuracy * 100)
        for i in range(out.shape[0]):
            plt.clf()
            plt.imshow(x[i])
            plt.text(0, 0, "{}".format("Cat" if out[i, 0] == 1 else "No-Cat"), fontsize=16, color="red")
            plt.pause(1)


if __name__ == '__main__':
    t = Trainer()
    # t.train()
    t.test()
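The accuracy line in test() only works because the labels are reshaped to a column first. A small sketch with made-up outputs and labels shows both the intended comparison and the broadcasting trap when the reshape is skipped:

```python
import numpy as np

out = np.array([[0.91], [0.08], [0.55], [0.40]])   # hypothetical sigmoid outputs, shape (4, 1)
y_flat = np.array([1, 0, 0, 0])                    # hypothetical labels, shape (4,)
y = y_flat[:, np.newaxis]                          # shape (4, 1), as in test()

pred = out.round()                                 # threshold at 0.5
accuracy = (pred == y).mean()
print(accuracy * 100)

# Without the reshape, (4, 1) == (4,) broadcasts to a (4, 4) matrix,
# and its mean is no longer the accuracy.
wrong = pred == y_flat
print(wrong.shape)
```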

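MSE works poorly with sigmoid: the sigmoid derivative is small, at most 0.25, and it stays in the gradient as a factor.

BCE works well with sigmoid, because in the derivative dZ2 = dA2 * dA2_dZ2 = A2 - Y,

so the sigmoid derivative cancels out and the gradient depends only on the output and the label.

Differentiation path: Linear1 -> relu -> Linear2 -> sigmoid -> A, compared against the label y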
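The difference between the two losses is easy to see with numbers. This is an illustrative sketch with made-up pre-activations and a true label of 1, looking at the per-sample gradient with respect to the output layer's Z:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([-5.0, -1.0, 0.0, 2.0])   # hypothetical pre-activations of the output unit
y = 1.0                                 # true label for every sample

A = sigmoid(z)
sig_grad = A * (1 - A)                  # sigmoid derivative, never above 0.25

dZ_mse = 2 * (A - y) * sig_grad                     # MSE: the sigmoid derivative stays in
dZ_bce = ((1 - y) / (1 - A) - y / A) * sig_grad     # BCE: it cancels, leaving A - y

for zi, gm, gb in zip(z, dZ_mse, dZ_bce):
    print(f"z={zi:+.1f}  MSE dZ={gm:+.4f}  BCE dZ={gb:+.4f}")
```

At z = -5 the prediction is confidently wrong, yet the MSE gradient is close to zero because the sigmoid is saturated, while the BCE gradient is close to -1. That is the vanishing-gradient effect the remarks above refer to.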

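What keepdims does

import numpy as np

a = np.array([[1, 2], [3, 4]])

# sum along each row and keep the result two-dimensional, shape (2, 1)
print(np.sum(a, axis=1, keepdims=True))

# sum along each row without keeping two dimensions, shape (2,)
print(np.sum(a, axis=1))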
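Why this matters for the bias gradient: the bias is stored as a column of shape (out_features, 1), and a gradient of shape (out_features,) would silently broadcast against it. A small sketch with made-up sizes (3 output units, 4 samples):

```python
import numpy as np

bias = np.zeros((3, 1))                           # column vector, like Linear.bias
dZ = np.arange(12, dtype=float).reshape(4, 3)     # hypothetical (samples, out_features)

dB_keep = np.sum(dZ.T, axis=1, keepdims=True) / 4   # shape (3, 1)
dB_flat = np.sum(dZ.T, axis=1) / 4                  # shape (3,)

print((bias - 0.1 * dB_keep).shape)   # stays (3, 1)
print((bias - 0.1 * dB_flat).shape)   # broadcasts to (3, 3)
```

With keepdims=True the update keeps the bias shape; without it, the subtraction produces a square matrix and the next forward pass would add the wrong values or fail on shape.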

Full code download link: full code download

Extraction code: tgil