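4. Preparing to Migrate Business Systems

A few preparations are needed before business systems can be migrated to Kubernetes.

Highly Available Harbor Deployment

Harbor is an enterprise-grade registry service for storing Docker images.

Harbor is officially deployed with docker-compose. The steps below use docker-compose plus primary/backup replication between two instances to build a highly available Harbor. This approach suits environments that do not place heavy access load on Harbor.

- Hosts: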

| IP | Role | Domain |
|---|---|---|
| 192.168.1.59 | harbor1 | hub.lzxlinux.cn |
| 192.168.1.60 | harbor2 | harbor.lzxlinux.cn |

Deploy Harbor on each of the two nodes.

- Configure hosts:

# echo '192.168.1.59 hub.lzxlinux.cn' >> /etc/hosts

# echo '192.168.1.60 harbor.lzxlinux.cn' >> /etc/hosts
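Running the two `echo ... >> /etc/hosts` commands again appends duplicate lines. A minimal sketch of an idempotent alternative follows. The function name `add_host_entry` and its optional third argument (the hosts file path, defaulting to `/etc/hosts`) are illustrative assumptions, not part of the original procedure.

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
# add_host_entry is a hypothetical helper; the third argument lets you try it on a scratch file.
add_host_entry() (
  ip=$1; name=$2; file=${3:-/etc/hosts}
  # Look for NAME as an exact hostname field on any non-comment line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    exit 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
)
# Example (as root):
#   add_host_entry 192.168.1.59 hub.lzxlinux.cn
#   add_host_entry 192.168.1.60 harbor.lzxlinux.cn
```

The body runs in a subshell, so the helper leaves no variables behind in the calling shell.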

Add local DNS entries to the hosts file on the Windows machine:

192.168.1.59 hub.lzxlinux.cn

192.168.1.60 harbor.lzxlinux.cn

- Install Docker:

# curl http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker.repo

# yum makecache fast

# yum install -y docker-ce

# systemctl enable docker && systemctl start docker

# cat > /etc/docker/daemon.json <<EOF
{
  "insecure-registries": ["hub.lzxlinux.cn", "harbor.lzxlinux.cn"]
}
EOF

# curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io

# systemctl restart docker

- Download the docker-compose binary (version 1.24.1 here):

# curl -L https://github.com/docker/compose/releases/download/1.24.1/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose

# chmod +x /usr/local/bin/docker-compose

- Download the Harbor offline installer:

GitHub: https://github.com/goharbor/harbor/releases

# mkdir /software && cd /software

# wget https://storage.googleapis.com/harbor-releases/release-1.9.0/harbor-offline-installer-v1.9.1.tgz

# tar zxf harbor-offline-installer-v1.9.1.tgz

- Install Harbor:

Using the harbor1 node as an example,

# cd harbor/

# vim harbor.yml

hostname: 192.168.1.59          # IP address; do not use the domain name here

harbor_admin_password: Harbor12345          # initial password for the admin user

data_volume: /data          # data storage path, created automatically

log:
  level: info
  local:
    rotate_count: 50
    rotate_size: 200M
    location: /var/log/harbor          # log path

# sh install.sh
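When the same installation is repeated on harbor2, the `hostname` edit can be scripted instead of made in vim. The following is a minimal sketch. The function name `set_harbor_hostname` is an assumption for illustration, and it only rewrites the top-level `hostname:` line, leaving the other settings to be edited as shown above.

```shell
# Rewrite the top-level "hostname:" line of a harbor.yml in place.
# set_harbor_hostname is a hypothetical helper name; it changes nothing else in the file.
set_harbor_hostname() (
  file=$1; host=$2
  tmp="$file.tmp.$$"
  # Only a line that starts at column 0 with "hostname:" is replaced.
  sed "s|^hostname:.*|hostname: $host|" "$file" > "$tmp" && mv "$tmp" "$file"
)
# Example (on harbor2, before running install.sh):
#   set_harbor_hostname /software/harbor/harbor.yml 192.168.1.60
```

Writing to a temporary file and renaming it avoids relying on `sed -i`, whose syntax differs between sed implementations.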

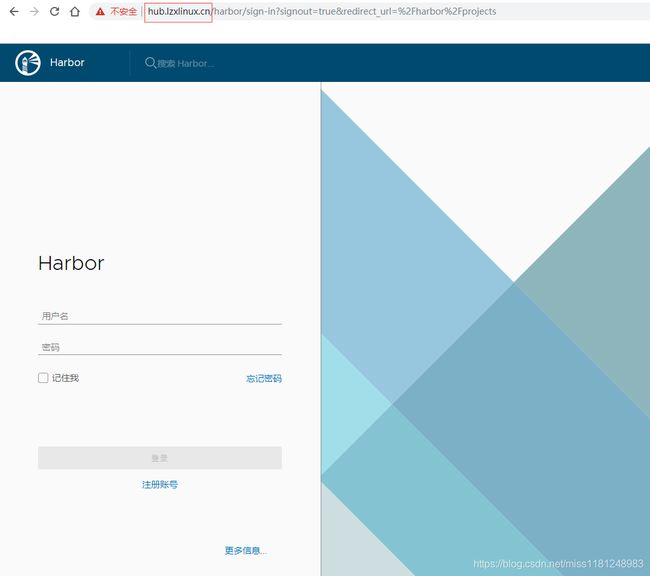

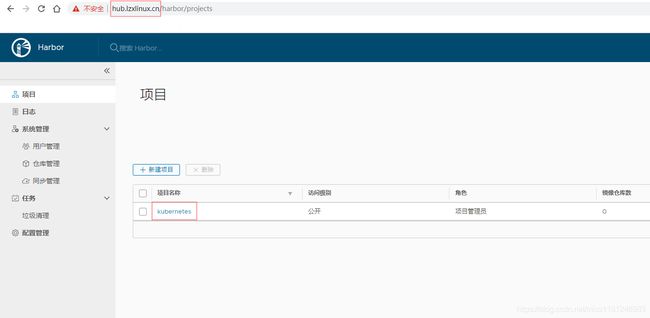

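- Access Harbor:

Open hub.lzxlinux.cn in a browser and log in with the account admin and the password Harbor12345,

Delete the default project library and create a new project named kubernetes,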

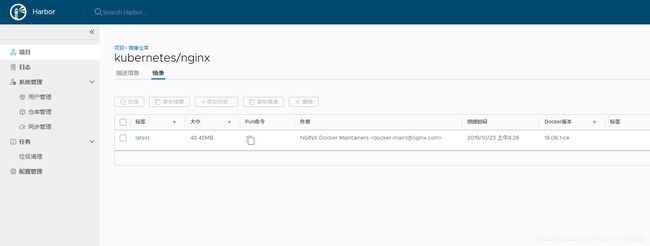

Push an nginx image from the harbor1 node,

# docker login hub.lzxlinux.cn

# docker pull nginx

# docker tag nginx:latest hub.lzxlinux.cn/kubernetes/nginx:latest

# docker push !$
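`!$` is bash history expansion, which works at an interactive prompt but is off by default inside scripts. A scripted version of the pull, tag, and push steps could look like the sketch below. The helper names `image_ref` and `push_to_harbor` and the `DOCKER` override variable are assumptions made for this example.

```shell
# Build a "registry/project/name:tag" reference for a Harbor project.
# image_ref and push_to_harbor are hypothetical helper names used only in this sketch.
image_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "${4:-latest}"
}

# Pull an upstream image, retag it for the registry, and push it.
# DOCKER can be overridden (for example DOCKER=echo) to preview the commands.
push_to_harbor() (
  registry=$1; project=$2; name=$3; tag=${4:-latest}
  target=$(image_ref "$registry" "$project" "$name" "$tag")
  ${DOCKER:-docker} pull "$name:$tag" &&
  ${DOCKER:-docker} tag "$name:$tag" "$target" &&
  ${DOCKER:-docker} push "$target"
)
# Example (after docker login hub.lzxlinux.cn):
#   push_to_harbor hub.lzxlinux.cn kubernetes nginx latest
```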

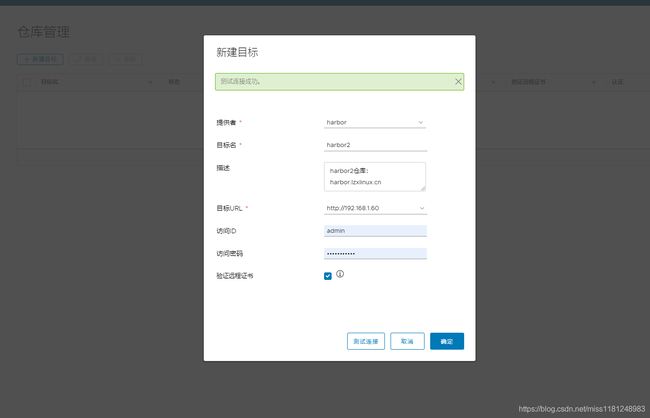

- harbor1配置同步规则:

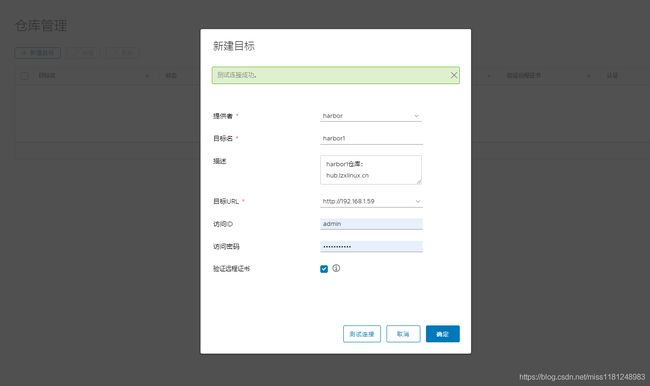

系统管理 → 仓库管理 → 新建目标,

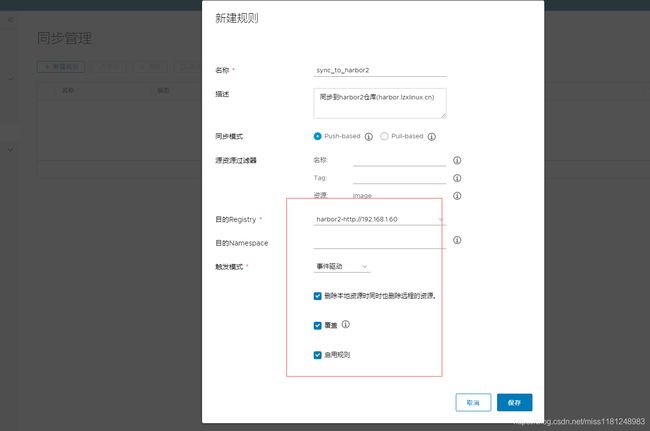

系统管理 → 同步管理 → 新建规则,

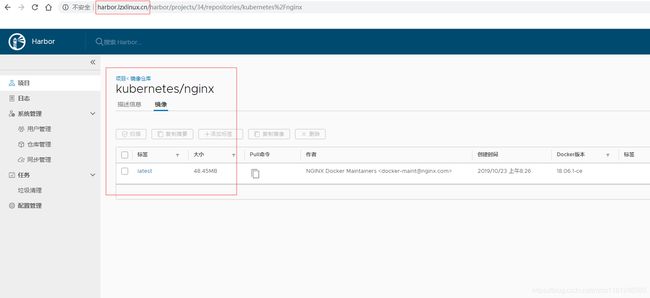

选中刚新建的同步规则,点击同步,登录harbor.lzxlinux.cn查看,

可以看到,kubernetes项目已经同步到harbor.lzxlinux.cn上。

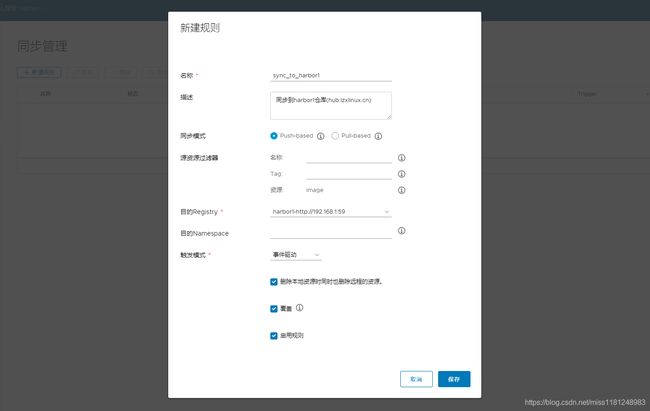

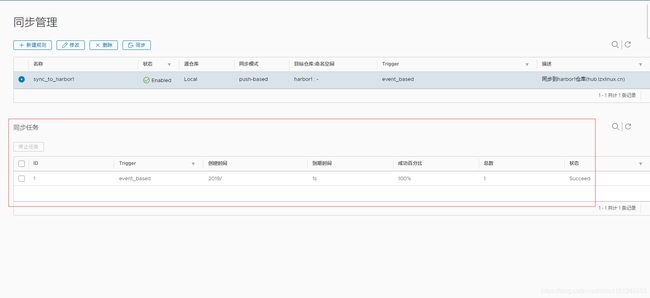

- harbor2配置同步规则:

系统管理 → 仓库管理 → 新建目标,

系统管理 → 同步管理 → 新建规则,

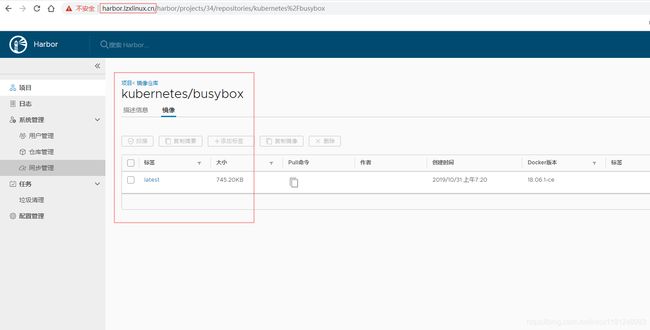

- Push an image from harbor2:

# docker login harbor.lzxlinux.cn

# docker pull busybox

# docker tag busybox:latest harbor.lzxlinux.cn/kubernetes/busybox:latest

# docker push !$

Check on harbor.lzxlinux.cn,

Check the replication job,

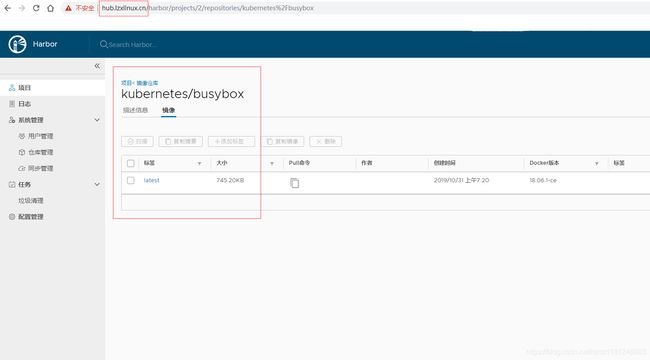

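Check on hub.lzxlinux.cn,

This completes the highly available Harbor deployment based on primary/backup replication.

The two Harbor registries mirror each other. In a DevOps workflow, use one as the primary registry and the other as the backup. When the primary fails, switch to the backup promptly so that business updates are not interrupted.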
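The switch from primary to backup can be automated in a pipeline. The sketch below prints the first registry that responds on the Docker Registry v2 endpoint (`/v2/`). The names `registry_alive` and `pick_registry` and the `PROBE` override are assumptions for illustration, and plain HTTP is assumed because both hosts were configured as insecure registries earlier.

```shell
# Succeed if the registry answers on the Docker Registry v2 endpoint.
# registry_alive and pick_registry are hypothetical helper names.
registry_alive() {
  code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "http://$1/v2/") || return 1
  # 200 (anonymous access) or 401 (login required) both mean the registry is serving.
  [ "$code" = 200 ] || [ "$code" = 401 ]
}

# Print the first registry, in argument order, that passes the probe.
# PROBE can be overridden with another command that takes the hostname as its argument.
pick_registry() {
  for r in "$@"; do
    if ${PROBE:-registry_alive} "$r"; then
      printf '%s\n' "$r"
      return 0
    fi
  done
  return 1
}
# Example: REGISTRY=$(pick_registry hub.lzxlinux.cn harbor.lzxlinux.cn) || exit 1
```

Listing the primary first keeps it preferred whenever it is healthy, and the backup is used only when the primary does not answer.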

Deploying ingress-nginx

Official Ingress documentation

- Hosts:

| IP | Role | Hostname |
|---|---|---|
| 192.168.1.51 | master | master1 |
| 192.168.1.52 | master | master2 |
| 192.168.1.53 | master | master3 |
| 192.168.1.54 | node | node1 |
| 192.168.1.55 | node | node2 |
| 192.168.1.56 | node | node3 |

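- What is Ingress:

Typically, Services and Pods have IP addresses that are routable only within the cluster network. All traffic that ends up at an edge router is either dropped or forwarded elsewhere. Conceptually, this might look like: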

internet

|

------------

[ Services ]

An Ingress is a collection of rules that allow inbound connections to reach the cluster's Services.

internet

|

[ Ingress ]

--|-----|--

[ Services ]

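It can be configured to give Services externally reachable URLs, load-balance traffic, terminate SSL, offer name-based virtual hosting, and so on.

- A minimal Ingress example: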

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /testpath
        backend:
          serviceName: test
          servicePort: 80

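Like other Kubernetes object configurations, an Ingress needs apiVersion, kind, and metadata fields. The spec holds all the information needed to configure a load balancer or proxy server. Most importantly, it contains a list of rules that are matched against all incoming requests.

Each HTTP rule contains the following information: a host (for example foo.bar.com; it defaults to * in this example), a list of paths (for example /testpath), and a backend associated with each path (test:80). Both the host and the path must match an incoming request before the load balancer routes traffic to the backend.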
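To make the "host and path must both match" rule concrete, here is a deliberately simplified sketch. It is a teaching aid with plain string-prefix matching, not how ingress-nginx is implemented. The function name `match_backend` and the "HOST PATH BACKEND" line format are assumptions made for this example.

```shell
# Simplified Ingress routing: print the backend of the first rule whose host and path
# both match the request. Rules are "HOST PATH BACKEND" lines on stdin; HOST "*" matches any host.
# match_backend is a hypothetical helper; it exits non-zero when no rule matches.
match_backend() {
  awk -v host="$1" -v path="$2" '
    ($1 == "*" || $1 == host) && index(path, $2) == 1 { print $3; found = 1; exit }
    END { exit !found }
  '
}
# Example, using the rule from the minimal Ingress above:
#   printf '* /testpath test:80\n' | match_backend foo.bar.com /testpath
```

A request whose path does not start with `/testpath` matches no rule here, which corresponds to the case where the controller hands the request to its default backend.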

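- Ingress controller:

For an Ingress resource to work, the cluster must have an Ingress controller running. Unlike most other controllers, which run as part of the kube-controller-manager binary, Ingress controllers are not started automatically with the cluster and must be deployed separately. ingress-nginx is one of the more commonly used Ingress controllers.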

- Deploy ingress-nginx (on master1):

First pull the images on each of the 3 node hosts,

# docker pull quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.19.0

# docker pull registry.cn-hangzhou.aliyuncs.com/liuyi01/defaultbackend-amd64:1.5

# docker tag registry.cn-hangzhou.aliyuncs.com/liuyi01/defaultbackend-amd64:1.5 k8s.gcr.io/defaultbackend-amd64:1.5

ingress-nginx will run on the 3 node hosts, so the nodes need to be labeled.

# cd /software

# mkdir ingress-nginx && cd ingress-nginx

# kubectl label node node1 app=ingress

# kubectl label node node2 app=ingress

# kubectl label node node3 app=ingress
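The three label commands can be written as a loop that is safe to re-run. This is a small sketch. The function name `label_ingress_nodes` and the `KUBECTL` override variable are assumptions for illustration, while `--overwrite` is the standard `kubectl label` flag that allows re-applying an existing label.

```shell
# Label every ingress node, tolerating nodes that already carry the label.
# label_ingress_nodes is a hypothetical helper name.
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the commands.
label_ingress_nodes() {
  for node in "$@"; do
    ${KUBECTL:-kubectl} label node "$node" app=ingress --overwrite || return 1
  done
}
# Example:
#   label_ingress_nodes node1 node2 node3
#   kubectl get nodes -l app=ingress
```

The `app=ingress` label is what the `nodeSelector` in the controller Deployment matches, so the final `kubectl get nodes -l app=ingress` should list exactly the nodes intended to run the controller.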

# vim mandatory.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: default-http-backend
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: default-http-backend
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      terminationGracePeriodSeconds: 60
      containers:
        - name: default-http-backend
          image: k8s.gcr.io/defaultbackend-amd64:1.5
          livenessProbe:
            httpGet:
              path: /healthz
              port: 8080
              scheme: HTTP
            initialDelaySeconds: 30
            timeoutSeconds: 5
          ports:
            - containerPort: 8080
          resources:
            limits:
              cpu: 10m
              memory: 20Mi
            requests:
              cpu: 10m
              memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
spec:
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      hostNetwork: true
      nodeSelector:
        app: ingress
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.19.0
          args:
            - /nginx-ingress-controller
            - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1

# kubectl apply -f mandatory.yaml

# kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/default-http-backend-5c9bb94849-mlvjq 1/1 Running 0 30s

pod/nginx-ingress-controller-65ccbbc7bb-7b77t 1/1 Running 0 31s

pod/nginx-ingress-controller-65ccbbc7bb-85xh2 1/1 Running 0 31s

pod/nginx-ingress-controller-65ccbbc7bb-pgdbg 1/1 Running 0 31s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/default-http-backend ClusterIP 10.102.235.24 <none> 80/TCP 31s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/default-http-backend 1/1 1 1 31s

deployment.apps/nginx-ingress-controller 3/3 3 3 31s

NAME DESIRED CURRENT READY AGE

replicaset.apps/default-http-backend-5c9bb94849 1 1 1 31s

replicaset.apps/nginx-ingress-controller-65ccbbc7bb 3 3 3 31s
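Instead of reading the listing by eye, the rollout can be checked from a script. The sketch below counts pods that are not in the Running state from `kubectl get pods` output. The function name `not_running_count` is an assumption for illustration, and it relies on STATUS being the third column of the default output.

```shell
# Count pods that are not in the Running state, reading "kubectl get pods" output on stdin.
# not_running_count is a hypothetical helper; the header line (first line) is skipped.
not_running_count() {
  awk 'NR > 1 && NF >= 3 && $3 != "Running" { n++ } END { print n + 0 }'
}
# Example:
#   kubectl -n ingress-nginx rollout status deployment/nginx-ingress-controller --timeout=120s
#   kubectl -n ingress-nginx get pods | not_running_count
```

`kubectl rollout status` blocks until the Deployment has finished rolling out or the timeout expires, so it is a convenient gate before running the test in the next step.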

- Test:

# vim ingress-demo.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-demo
spec:
  selector:
    matchLabels:
      app: tomcat-demo
  replicas: 1
  template:
    metadata:
      labels:
        app: tomcat-demo
    spec:
      containers:
      - name: tomcat-demo
        image: registry.cn-hangzhou.aliyuncs.com/liuyi01/tomcat:8.0.51-alpine
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat-demo
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: tomcat-demo
spec:
  rules:
  - host: tomcat.lzxlinux.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: tomcat-demo
          servicePort: 80

# kubectl create -f ingress-demo.yaml

# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

tomcat-demo-6bc7d5b6f4-rnqlm 1/1 Running 0 36s 172.10.4.6 node1 <none> <none>

As shown, the pod is running on node1. Add local DNS entries to the hosts file on the Windows machine:

192.168.1.54 tomcat.lzxlinux.cn

192.168.1.54 api.lzxlinux.cn

- Access:

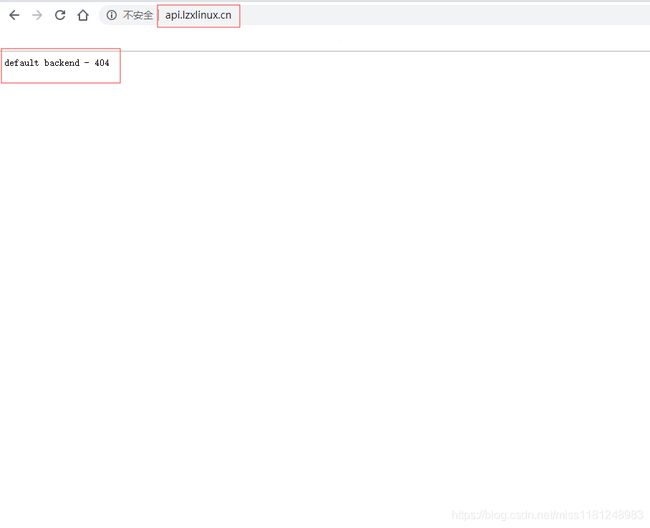

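First visit api.lzxlinux.cn,

This is the response from the default backend, because the YAML file defines neither the api.lzxlinux.cn host nor a backend service and port for it.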

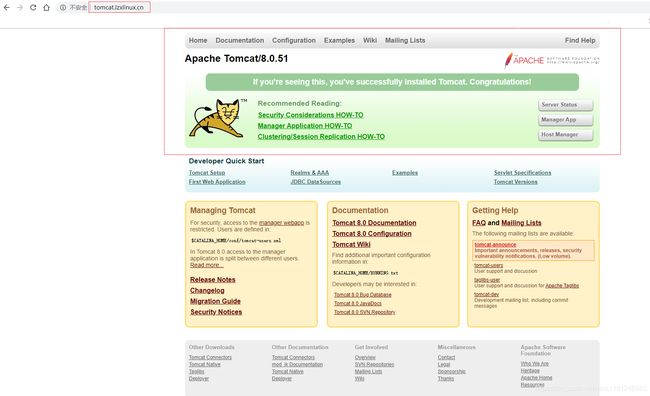

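Then visit tomcat.lzxlinux.cn,

As shown, because the host is defined in the YAML file, the in-cluster service named tomcat-demo (port 80) can be reached through the domain name. This is what ingress-nginx provides.

With ingress-nginx, service discovery for a k8s cluster becomes convenient, and services inside the cluster are easy to reach from outside it.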
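The same check can be made from a shell without editing any hosts file, by sending the request to a node IP and setting the Host header explicitly. The following is a minimal sketch. The function name `ingress_status` and the `CURL` override variable are assumptions for illustration.

```shell
# Request a path through one ingress node while presenting a chosen Host header,
# and print only the HTTP status code. ingress_status is a hypothetical helper name.
# Arguments: NODE_IP HOST [PATH]; CURL can be overridden to substitute another client.
ingress_status() {
  ${CURL:-curl} -s -o /dev/null -m 5 -w '%{http_code}\n' -H "Host: $2" "http://$1${3:-/}"
}
# Example:
#   ingress_status 192.168.1.54 tomcat.lzxlinux.cn /   # routed to the tomcat-demo service
#   ingress_status 192.168.1.54 api.lzxlinux.cn /      # no matching rule, served by the default backend
```

Because the controller runs with `hostNetwork: true` on every labeled node, the same request can be repeated against 192.168.1.55 and 192.168.1.56 to confirm that each node serves the rule.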