《Python 深度学习》刷书笔记 Chapter 5 Part-2 案例dogs-vs-cats

文章目录

- 卷积运算的原理

-

- 输出特征图与输入的宽度和高度不同的原因

- 最大池化运算

- 使用MaxPooling层的原因

- 猫狗识别案例

-

- 下面是一个Kaggle上的猫狗识别案例

- 5-4 数据预处理

- 构建深度学习网络

- 5-5 将猫狗分类的小心卷积神经网络实例化

- 5-6 配置模型用于训练

- 5-7 使用ImageDataGenerator从目录读取图像

- 5-8 利用批量生成器拟合模型

- 5-9 保存模型

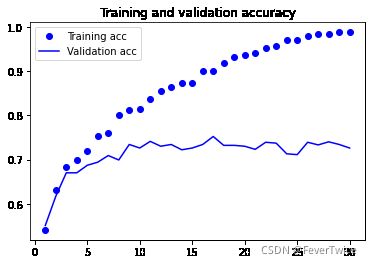

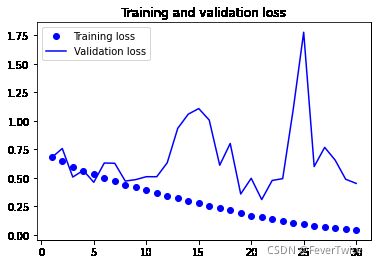

- 5-10 绘制训练过程中的损失曲线和进度曲线

- 模型准确度准确曲线

- 模型损失曲线

- 总结

- 写在最后

卷积运算的原理

输出特征图与输入的宽度和高度不同的原因

- 边界效应,有一部分小框框超出了边界(可以通过对输入进行填充解决)

- 步幅

边界效应解决方法

通过调节padding = ‘same’(默认值为’valid’表示不用填充)对原输入图像进行填充

最大池化运算

-

在每个MaxPooling2D之后,特征图的尺寸都会减半(如从26 * 26 变为13 * 13)这是原于我们会对图片进行下采样

-

最大池化层的下采样通常使用2 * 2的窗口和步幅2, 与此相对的卷积通常使用3 * 3的窗口和步幅1

使用MaxPooling层的原因

- 通过对全局样本进行下采样,可以使得学习模型能够从一个更大的维度去看待整个样本,从局部 --> 全局

- 减少运算开支,防止过拟合

当然,实现下采样的方式有许多种,MaxPooling层的使用只是其中的一种方案,也可以使用平均池化层来代替最大池化,平均池化层志求方块中的平均值而不是最大值,但是最大池化层的效果明显要更好(平均池化层使得区域的特征变得不那么明显,即淡化了图像原有的特征信息)

猫狗识别案例

下面是一个Kaggle上的猫狗识别案例

5-4 数据预处理

import os, shutil

# 读入数据

# 数据原始路径

original_dataset_dir = r'E:\code\PythonDeep\DataSet\dogs-vs-cats\train'

# 我们需要创建的文件根目录

base_dir = r'E:\code\PythonDeep\DataSet\sampledata'

os.mkdir(base_dir)

# 训练集数据文件夹

train_dir = os.path.join(base_dir, "train")

os.mkdir(train_dir)

# 验证集根目录

validation_dir = os.path.join(base_dir, "validation")

os.mkdir(validation_dir)

# 测试集根目录

test_dir = os.path.join(base_dir, "test")

os.mkdir(test_dir)

# 训练集目录下

# 猫图像训练集目录

train_cats_dir = os.path.join(train_dir, 'cats')

os.mkdir(train_cats_dir)

# 狗图像训练集目录

train_dogs_dir = os.path.join(train_dir, 'dogs')

os.mkdir(train_dogs_dir)

# 验证集目录下

# 猫验证集图像目录

validation_cats_dir = os.path.join(validation_dir, 'cats')

os.mkdir(validation_cats_dir)

# 狗验证集图像目录

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

os.mkdir(validation_dogs_dir)

# 测试集目录下

# 猫测试集图像目录

test_cats_dir = os.path.join(test_dir, 'cats')

os.mkdir(test_cats_dir)

# 狗测试集图像目录

test_dogs_dir = os.path.join(test_dir, 'dogs')

os.mkdir(test_dogs_dir)

# =========================================================

# 将前1000张猫的图像复制到train_cats_dir

# 文件名:cat.0.jpg

fnames = ['cat.{}.jpg'.format(i) for i in range(1000)] # 正则表达式

for fname in fnames:

src = os.path.join(original_dataset_dir, fname) # 源地址

dst = os.path.join(train_cats_dir, fname) # 目标地址

shutil.copyfile(src, dst)

# 将500张猫的图片复制到validation_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_cats_dir, fname)

shutil.copyfile(src, dst)

# 将500张猫的图片复制到test_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_cats_dir, fname)

shutil.copyfile(src, dst)

# 将1000张狗的图片复制到train_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(train_dogs_dir, fname)

shutil.copyfile(src, dst)

# 将500张狗的图片复制到validation_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_dogs_dir, fname)

shutil.copyfile(src, dst)

# 将500张狗的图片复制到test_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_dogs_dir, fname)

shutil.copyfile(src, dst)

# 打印,看数据集是否正确

print('total training cat images:', len(os.listdir(train_cats_dir)))

print('total training dog images:', len(os.listdir(train_dogs_dir)))

print('total validation cat images:', len(os.listdir(validation_cats_dir)))

print('total validation dog images:', len(os.listdir(validation_dogs_dir)))

print('total test cat images:', len(os.listdir(test_cats_dir)))

print('total test dog images:', len(os.listdir(test_dogs_dir)))

total training cat images: 1000

total training dog images: 1000

total validation cat images: 500

total validation dog images: 500

total test cat images: 500

total test dog images: 500

构建深度学习网络

- 在卷积深度学习网络中,特征图的深度在逐渐增大,从32到128,而尺寸在逐渐减小,从150 * 150 到 7 * 7

- 由于我们这个问题是一个研究二分类的问题(区别猫和狗),最后的激活层我们使用sigmoid

5-5 将猫狗分类的小心卷积神经网络实例化

# 构建卷积神经网络模型

from keras import layers

from keras import models

model = models.Sequential()

# 卷积层

model.add(layers.Conv2D(32, (3, 3), activation = 'relu', input_shape = (150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation = 'relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation = 'relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation = 'relu'))

model.add(layers.MaxPooling2D((2, 2)))

# 全连接层

model.add(layers.Flatten()) # 将输出数据变为一维向量

model.add(layers.Dense(512, activation = 'relu'))

model.add(layers.Dense(1, activation = 'sigmoid'))

# 查看模型概况

model.summary()

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_5 (Conv2D) (None, 148, 148, 32) 896

_________________________________________________________________

max_pooling2d_5 (MaxPooling2 (None, 74, 74, 32) 0

_________________________________________________________________

conv2d_6 (Conv2D) (None, 72, 72, 64) 18496

_________________________________________________________________

max_pooling2d_6 (MaxPooling2 (None, 36, 36, 64) 0

_________________________________________________________________

conv2d_7 (Conv2D) (None, 34, 34, 128) 73856

_________________________________________________________________

max_pooling2d_7 (MaxPooling2 (None, 17, 17, 128) 0

_________________________________________________________________

conv2d_8 (Conv2D) (None, 15, 15, 128) 147584

_________________________________________________________________

max_pooling2d_8 (MaxPooling2 (None, 7, 7, 128) 0

_________________________________________________________________

flatten_2 (Flatten) (None, 6272) 0

_________________________________________________________________

dense_3 (Dense) (None, 512) 3211776

_________________________________________________________________

dense_4 (Dense) (None, 1) 513

=================================================================

Total params: 3,453,121

Trainable params: 3,453,121

Non-trainable params: 0

_________________________________________________________________

5-6 配置模型用于训练

from keras import optimizers

model.compile(loss = 'binary_crossentropy',

optimizer = optimizers.RMSprop(lr = 1e-4),

metrics = ['acc']) # 注意区分l和1,Lr = 一(中文)e减4

5-7 使用ImageDataGenerator从目录读取图像

接下来我们减数据个书画为经过预处理的浮点数张量

- 读取图像文件

- 将JEPG转换为RGB

- 将像素网格转换为浮点数

- 将0到255的数据转换为小数点[0, 1]区间

from keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(rescale = 1./255) # 让数据每个元素除以255

test_datagen = ImageDataGenerator(rescale = 1./255)

# 调整图像大小

train_generator = train_datagen.flow_from_directory(

train_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

# 打印生成器有关信息

for data_batch, labels_batch in train_generator:

print('data batch shape: ', data_batch.shape)

print('labels batch shape: ', labels_batch.shape)

break

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

data batch shape: (20, 150, 150, 3)

labels batch shape: (20,)

5-8 利用批量生成器拟合模型

# 训练模型

history = model.fit_generator(train_generator, # 一个不断生成数据的训练迭代器

steps_per_epoch = 100, # 每个batch有20个样本,读完2000个要100次

epochs = 30,

validation_data = validation_generator,

validation_steps = 50)

Epoch 1/30

100/100 [==============================] - 42s 418ms/step - loss: 0.6863 - acc: 0.5410 - val_loss: 0.6788 - val_acc: 0.5510

Epoch 2/30

100/100 [==============================] - 39s 389ms/step - loss: 0.6441 - acc: 0.6320 - val_loss: 0.7571 - val_acc: 0.6170

Epoch 3/30

100/100 [==============================] - 39s 385ms/step - loss: 0.5943 - acc: 0.6820 - val_loss: 0.5072 - val_acc: 0.6700

Epoch 4/30

100/100 [==============================] - 39s 394ms/step - loss: 0.5617 - acc: 0.6995 - val_loss: 0.5658 - val_acc: 0.6700

Epoch 5/30

100/100 [==============================] - 39s 392ms/step - loss: 0.5326 - acc: 0.7185 - val_loss: 0.4614 - val_acc: 0.6870

Epoch 6/30

100/100 [==============================] - 39s 394ms/step - loss: 0.5012 - acc: 0.7535 - val_loss: 0.6301 - val_acc: 0.6940

Epoch 7/30

100/100 [==============================] - 40s 397ms/step - loss: 0.4751 - acc: 0.7590 - val_loss: 0.6279 - val_acc: 0.7090

Epoch 8/30

100/100 [==============================] - 40s 398ms/step - loss: 0.4399 - acc: 0.8010 - val_loss: 0.4719 - val_acc: 0.6990

Epoch 9/30

100/100 [==============================] - 42s 419ms/step - loss: 0.4167 - acc: 0.8125 - val_loss: 0.4850 - val_acc: 0.7340

Epoch 10/30

100/100 [==============================] - 43s 426ms/step - loss: 0.3905 - acc: 0.8150 - val_loss: 0.5103 - val_acc: 0.7260

Epoch 11/30

100/100 [==============================] - 40s 397ms/step - loss: 0.3642 - acc: 0.8365 - val_loss: 0.5101 - val_acc: 0.7410

Epoch 12/30

100/100 [==============================] - 42s 416ms/step - loss: 0.3384 - acc: 0.8555 - val_loss: 0.6325 - val_acc: 0.7300

Epoch 13/30

100/100 [==============================] - 42s 416ms/step - loss: 0.3246 - acc: 0.8635 - val_loss: 0.9336 - val_acc: 0.7340

Epoch 14/30

100/100 [==============================] - 39s 389ms/step - loss: 0.2980 - acc: 0.8725 - val_loss: 1.0578 - val_acc: 0.7220

Epoch 15/30

100/100 [==============================] - 39s 390ms/step - loss: 0.2808 - acc: 0.8725 - val_loss: 1.1070 - val_acc: 0.7260

Epoch 16/30

100/100 [==============================] - 39s 390ms/step - loss: 0.2523 - acc: 0.8990 - val_loss: 1.0064 - val_acc: 0.7340

Epoch 17/30

100/100 [==============================] - 40s 400ms/step - loss: 0.2322 - acc: 0.9000 - val_loss: 0.6108 - val_acc: 0.7520

Epoch 18/30

100/100 [==============================] - 40s 396ms/step - loss: 0.2151 - acc: 0.9190 - val_loss: 0.8014 - val_acc: 0.7320

Epoch 19/30

100/100 [==============================] - 39s 391ms/step - loss: 0.1902 - acc: 0.9305 - val_loss: 0.3588 - val_acc: 0.7320

Epoch 20/30

100/100 [==============================] - 39s 391ms/step - loss: 0.1704 - acc: 0.9370 - val_loss: 0.4965 - val_acc: 0.7300

Epoch 21/30

100/100 [==============================] - 39s 388ms/step - loss: 0.1577 - acc: 0.9415 - val_loss: 0.3101 - val_acc: 0.7230

Epoch 22/30

100/100 [==============================] - 39s 392ms/step - loss: 0.1363 - acc: 0.9510 - val_loss: 0.4775 - val_acc: 0.7390

Epoch 23/30

100/100 [==============================] - 39s 389ms/step - loss: 0.1243 - acc: 0.9570 - val_loss: 0.4934 - val_acc: 0.7370

Epoch 24/30

100/100 [==============================] - 41s 413ms/step - loss: 0.1063 - acc: 0.9710 - val_loss: 1.0973 - val_acc: 0.7130

Epoch 25/30

100/100 [==============================] - 40s 396ms/step - loss: 0.0952 - acc: 0.9710 - val_loss: 1.7752 - val_acc: 0.7110

Epoch 26/30

100/100 [==============================] - 39s 390ms/step - loss: 0.0787 - acc: 0.9780 - val_loss: 0.5990 - val_acc: 0.7390

Epoch 27/30

100/100 [==============================] - 41s 411ms/step - loss: 0.0687 - acc: 0.9830 - val_loss: 0.7672 - val_acc: 0.7330

Epoch 28/30

100/100 [==============================] - 44s 437ms/step - loss: 0.0608 - acc: 0.9825 - val_loss: 0.6554 - val_acc: 0.7400

Epoch 29/30

100/100 [==============================] - 41s 407ms/step - loss: 0.0514 - acc: 0.9875 - val_loss: 0.4879 - val_acc: 0.7340

Epoch 30/30

100/100 [==============================] - 40s 399ms/step - loss: 0.0443 - acc: 0.9870 - val_loss: 0.4517 - val_acc: 0.7260

5-9 保存模型

model.save('cats_and_dogs_small_1.h5')

5-10 绘制训练过程中的损失曲线和进度曲线

import matplotlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

# 绘图

plt.plot(epochs, acc, 'bo', label = 'Training acc')

plt.plot(epochs, val_acc, 'b', label = 'Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

# 绘制图像2

plt.plot(epochs, loss, 'bo', label = 'Training loss')

plt.plot(epochs, val_loss, 'b', label = 'Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

模型准确度准确曲线

模型损失曲线

总结

从上图来看,我们训练的模型从整体上还是有过拟合的特征,训练精度直接逼近100,而验证精度则一直在70左右,训练精度应该在第5轮左右达到了最小值,为解决过拟合问题,我们在下一节中将使用数据增强的方法。

写在最后

注:本文代码来自《Python 深度学习》,做成电子笔记的方式上传,仅供学习参考,作者均已运行成功,如有遗漏请练习本文作者

各位看官,都看到这里了,麻烦动动手指头给博主来个点赞8,您的支持作者最大的创作动力哟!

<(^-^)>

才疏学浅,若有纰漏,恳请斧正

本文章仅用于各位同志作为学习交流之用,不作任何商业用途,若涉及版权问题请速与作者联系,望悉知