1-4 李宏毅 (Hung-yi Lee) Spring 2021 Machine Learning Course - PyTorch Tutorial - TA 许湛然

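The previous post, 1-3 李宏毅 (Hung-yi Lee) Spring 2021 Machine Learning Course - Google Colab Tutorial - TA 许湛然, covered how to use Colab. This post summarizes TA 许湛然's brief introduction to the PyTorch framework.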

For more tensor operations, see: https://pytorch.org/docs/stable/tensors.html

Contents

Prerequisites

What is PyTorch?

PyTorch v.s. TensorFlow

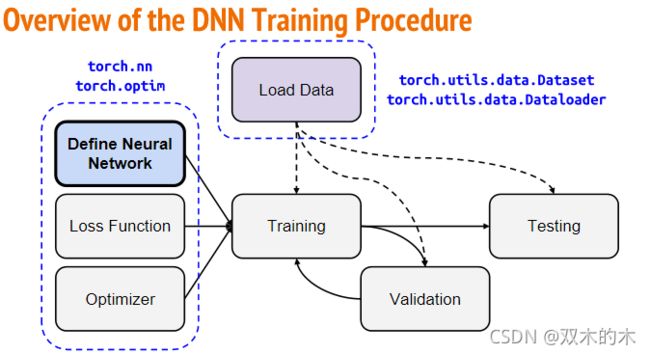

Overview of the DNN Training Procedure

Tensor

Tensor -- Data Type

Tensor -- Shape of Tensors

Tensor -- Constructor

Tensor -- Operators

Tensor -- PyTorch v.s. NumPy

Tensor -- Device

Tensor -- Device(GPU)

How to Calculate Gradient?

Load Data

Dataset & Dataloader

Define Neural Network

torch.nn -- Neural Network Layers

torch.nn -- Activation Functions

Loss Function

torch.nn -- Loss Functions

torch.nn -- Build your own neural network

Optimizer

torch.optim

Neural Network Training

Setup

Multiple Epochs

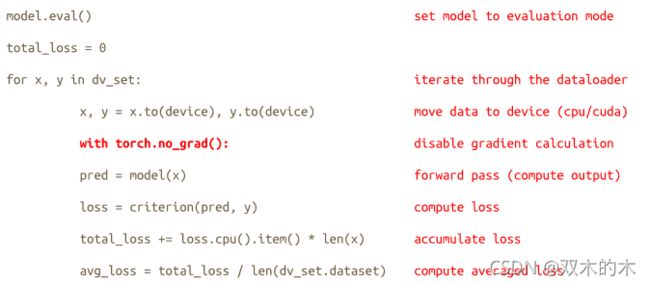

Neural Network Evaluation (Validation Set)

Neural Network Evaluation (Testing Set)

Save/Load a Neural Network

More About PyTorch

Reference

Prerequisites

Familiarity with Python 3 basics (if-else, loops, etc.) and with NumPy (arrays and array operations).

What is PyTorch?

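- An open-source machine learning framework

- A Python package that provides two high-level features:
  - Tensor computation (like NumPy) with strong GPU acceleration
  - Deep neural networks built on a tape-based autograd system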

PyTorch v.s. TensorFlow

Overview of the DNN Training Procedure

Tensor

Tensor -- Data Type

ref: torch.Tensor — PyTorch 1.9.1 documentation
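A quick sketch of how dtypes show up in code. It relies only on standard PyTorch facts: torch.float is an alias of torch.float32, torch.long is an alias of torch.int64, and Python floats and ints default to those two types. The tensor values are arbitrary.

```python
import torch

# torch.float is an alias for torch.float32; torch.long is an alias for torch.int64
x = torch.tensor([1.0, 2.0], dtype=torch.float)
y = torch.tensor([1, 2], dtype=torch.long)
print(x.dtype)                    # torch.float32
print(y.dtype)                    # torch.int64

# Without an explicit dtype, Python floats give float32 and Python ints give int64
print(torch.tensor([1.5]).dtype)  # torch.float32
print(torch.tensor([1]).dtype)    # torch.int64

# Convert between types with .to()
z = y.to(torch.float)
print(z.dtype)                    # torch.float32
```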

Tensor -- Shape of Tensors
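A minimal illustration of inspecting a tensor's shape; the (5, 3) size is an arbitrary choice.

```python
import torch

x = torch.zeros(5, 3)   # 5 rows, 3 columns
print(x.shape)          # torch.Size([5, 3])
print(x.size())         # same information as .shape
print(x.dim())          # 2, the number of dimensions
print(x.shape[0])       # 5, the size of dimension 0
```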

Tensor -- Constructor
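A sketch of common ways to construct a tensor: from a Python list, from a NumPy array, or filled with a constant. The particular values and shapes are illustrative only.

```python
import numpy as np
import torch

# From a (nested) Python list
a = torch.tensor([[1, -1], [-1, 1]])

# From a NumPy array (the tensor shares memory with the array)
b = torch.from_numpy(np.array([[1, -1], [-1, 1]]))

# Filled with a constant, given only a shape
zeros = torch.zeros([2, 2])
ones = torch.ones([1, 2, 5])

print(a)
print(zeros.shape, ones.shape)  # torch.Size([2, 2]) torch.Size([1, 2, 5])
```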

Tensor -- Operators

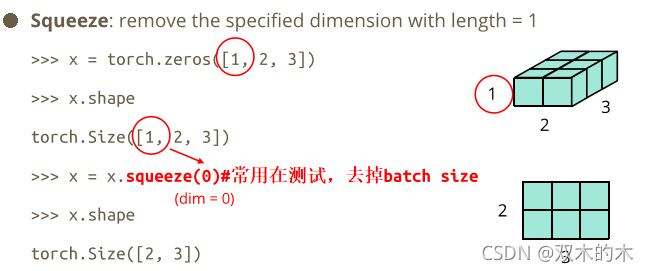

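squeeze(): compresses a tensor's shape by removing dimensions of size 1, such as a single row or a single column. For example, a one-row, three-column tensor of shape (1, 3) becomes shape (3,) once its leading size-1 dimension is removed. It comes in three forms: ① torch.squeeze(a) removes every size-1 dimension of a and leaves the other dimensions untouched; ② a.squeeze(N) removes dimension N of a if that dimension has size 1 (otherwise the shape is left unchanged); ③ b = torch.squeeze(a, N) is the function form of ② and likewise removes the specified size-1 dimension N of a.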
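A short sketch of the three squeeze forms described above, using arbitrary zero tensors:

```python
import torch

a = torch.zeros(1, 3)               # one row, three columns
b = torch.squeeze(a)                # form 1: drop every size-1 dimension
print(a.shape, b.shape)             # torch.Size([1, 3]) torch.Size([3])

c = torch.zeros(1, 2, 1)
print(c.squeeze(0).shape)           # form 2 on dim 0: torch.Size([2, 1])
print(torch.squeeze(c, 2).shape)    # form 3 on dim 2: torch.Size([1, 2])
print(c.squeeze(1).shape)           # dim 1 has size 2, so the shape stays torch.Size([1, 2, 1])
```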

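unsqueeze(): pads a tensor's shape by inserting a size-1 dimension at the given position. For example, a tensor of three elements with shape (3,) becomes one row of three columns, shape (1, 3), after a dimension is added at position 0.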
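The same (3,) to (1, 3) example as code, plus the position-1 case for comparison:

```python
import torch

x = torch.zeros(3)           # shape (3,)
row = x.unsqueeze(0)         # new size-1 dimension at position 0
col = x.unsqueeze(1)         # new size-1 dimension at position 1
print(row.shape)             # torch.Size([1, 3]): one row, three columns
print(col.shape)             # torch.Size([3, 1]): three rows, one column
```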

transpose(): swaps two dimensions of a tensor. Its signature is transpose(dim0, dim1) → Tensor, and it behaves the same as the function torch.transpose().
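A sketch showing that the method and function forms of transpose agree; the 2×3 input is arbitrary:

```python
import torch

x = torch.arange(6).reshape(2, 3)   # [[0, 1, 2], [3, 4, 5]]
y = x.transpose(0, 1)               # method form: swap dim 0 and dim 1
z = torch.transpose(x, 0, 1)        # function form, same result

print(y.shape)                      # torch.Size([3, 2])
print(y.tolist())                   # [[0, 3], [1, 4], [2, 5]]
print(torch.equal(y, z))            # True
```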

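cat(): concatenation. It joins a sequence of tensors seq along a given dimension; the tensors must have the same shape in every other dimension. torch.cat() can be viewed as the inverse of torch.split() and torch.chunk().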
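A sketch of torch.cat along dim 1, followed by torch.split with the original section sizes to recover the pieces, which illustrates the inverse relationship mentioned above. The shapes are arbitrary:

```python
import torch

x = torch.zeros(2, 1, 3)
y = torch.ones(2, 3, 3)
z = torch.zeros(2, 2, 3)

w = torch.cat([x, y, z], dim=1)   # sizes along dim 1 add up: 1 + 3 + 2
print(w.shape)                    # torch.Size([2, 6, 3])

# torch.split with the original sizes undoes the concatenation
parts = torch.split(w, [1, 3, 2], dim=1)
print([tuple(p.shape) for p in parts])   # [(2, 1, 3), (2, 3, 3), (2, 2, 3)]
```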

more operators: torch.Tensor — PyTorch 1.9.1 documentation

Tensor -- PyTorch v.s. NumPy

ref: https://github.com/wkentaro/pytorch-for-numpy-users
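A few NumPy/PyTorch correspondences as code. The pairs below are a small illustrative selection, not the full mapping from the referenced repository:

```python
import numpy as np
import torch

n = np.zeros((2, 3), dtype=np.float32)   # NumPy array
t = torch.zeros(2, 3)                    # PyTorch tensor (float32 by default)

# Shape and data type
print(n.shape, t.shape)                  # (2, 3) torch.Size([2, 3])
print(n.dtype, t.dtype)                  # float32 torch.float32

# Reshape: x.reshape in both (PyTorch also offers x.view)
print(n.reshape(3, 2).shape, t.reshape(3, 2).shape)

# Add a size-1 dimension: np.expand_dims(x, 1) vs. x.unsqueeze(1)
print(np.expand_dims(n, 1).shape, t.unsqueeze(1).shape)

# Convert between the two (CPU tensors only)
back = t.numpy()            # Tensor -> ndarray
fwd = torch.from_numpy(n)   # ndarray -> Tensor, shares memory with n
```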

Tensor -- Device

Tensor -- Device(GPU)

Source of the figure on this slide:

https://towardsdatascience.com/what-is-a-gpu-and-do-you-need-one-in-deep-learning-718b9597aa0d
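A sketch of checking for a GPU and moving tensors between devices with .to(). It falls back to the CPU when no CUDA GPU is present, so it runs on either kind of machine:

```python
import torch

# Tensors are created on the CPU by default
x = torch.zeros(2, 2)
print(x.device)   # cpu

# Use the GPU if one is available, otherwise stay on the CPU
device = 'cuda' if torch.cuda.is_available() else 'cpu'
x = x.to(device)
print(x.device)   # cuda:0 on a GPU machine, cpu otherwise

# With several GPUs, a specific one can be selected as 'cuda:0', 'cuda:1', ...
```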

How to Calculate Gradient?
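A minimal autograd example: mark a tensor with requires_grad=True, build a scalar from it, and call backward(). Since z = Σ x_ij², the gradient is ∂z/∂x_ij = 2·x_ij, which is what ends up in x.grad. The input values are illustrative.

```python
import torch

# requires_grad=True asks autograd to record operations on x
x = torch.tensor([[1., 0.], [-1., 1.]], requires_grad=True)

z = x.pow(2).sum()   # z = sum of x_ij^2
z.backward()         # compute dz/dx and store it in x.grad

print(x.grad)        # equals 2 * x: [[2., 0.], [-2., 2.]]
```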

Load Data

Dataset & Dataloader

Note the shuffle argument: set it to True for training and False for testing.
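A minimal Dataset/DataLoader sketch. In the setup code later in this post, MyDataset is constructed from a file; this hypothetical version wraps in-memory synthetic tensors instead, so it runs without any data file. The tensors x, y and the batch size 4 are assumptions for illustration.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class MyDataset(Dataset):
    """Hypothetical dataset: stores in-memory tensors instead of reading a file."""

    def __init__(self, x, y):
        # read / preprocess data here; this sketch just keeps the tensors
        self.x = x
        self.y = y

    def __getitem__(self, index):
        # return one sample (features, target) at a time
        return self.x[index], self.y[index]

    def __len__(self):
        # number of samples in the dataset
        return len(self.x)


x = torch.arange(10, dtype=torch.float).unsqueeze(1)   # 10 samples, 1 feature each
y = 2 * x + 1                                          # synthetic targets
dataset = MyDataset(x, y)

tr_loader = DataLoader(dataset, batch_size=4, shuffle=True)    # training: shuffle
tt_loader = DataLoader(dataset, batch_size=4, shuffle=False)   # testing: keep order

for xb, yb in tt_loader:
    print(xb.shape, yb.shape)   # batches of 4, 4, then 2 samples
```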

Define Neural Network

torch.nn -- Neural Network Layers

Linear layer (fully connected layer): nn.Linear(in_features, out_features)
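A sketch of a single fully connected layer, checking its parameter shapes and that it computes x·Wᵀ + b. The sizes 32 and 64 and the batch of 16 are arbitrary:

```python
import torch
import torch.nn as nn

layer = nn.Linear(32, 64)     # in_features=32, out_features=64
print(layer.weight.shape)     # torch.Size([64, 32])
print(layer.bias.shape)       # torch.Size([64])

x = torch.randn(16, 32)       # a batch of 16 vectors with 32 features each
out = layer(x)                # computes x @ W^T + b
print(out.shape)              # torch.Size([16, 64])
```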

torch.nn -- Activation Functions
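A sketch of two common activation modules, nn.Sigmoid (used in the model later in this post) and nn.ReLU, applied to a few hand-picked values:

```python
import torch
import torch.nn as nn

x = torch.tensor([-1.0, 0.0, 2.0])

sigmoid = nn.Sigmoid()   # 1 / (1 + exp(-x)), squashes values into (0, 1)
relu = nn.ReLU()         # max(0, x), zeroes out negative values

print(sigmoid(x))        # the middle entry is sigmoid(0) = 0.5
print(relu(x))           # tensor([0., 0., 2.])
```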

Loss Function

torch.nn -- Loss Functions

- Mean Squared Error (for linear regression): nn.MSELoss()

- Cross Entropy (for classification): nn.CrossEntropyLoss()
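A sketch of both losses on inputs that can be checked by hand. nn.CrossEntropyLoss takes raw scores (logits) of shape (batch, classes) plus integer class indices, and applies log-softmax internally:

```python
import math

import torch
import torch.nn as nn

# Regression: mean of squared differences
mse = nn.MSELoss()
pred = torch.tensor([1.0, 2.0])
target = torch.tensor([1.0, 4.0])
print(mse(pred, target))        # ((1-1)^2 + (2-4)^2) / 2 = 2.0

# Classification: logits of shape (batch, classes) and integer class labels
ce = nn.CrossEntropyLoss()
logits = torch.tensor([[0.0, 0.0]])   # equal scores for 2 classes
label = torch.tensor([0])             # index of the correct class
print(ce(logits, label))              # -log(0.5) = log 2, about 0.6931
print(math.log(2))
```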

torch.nn -- Build your own neural network

Code:

```python
import torch.nn as nn

class MyModel(nn.Module):
    def __init__(self):
        # initialize the model and define its layers
        super(MyModel, self).__init__()
        self.net = nn.Sequential(
            nn.Linear(10, 32),
            nn.Sigmoid(),
            nn.Linear(32, 1)
        )

    def forward(self, x):
        # compute the output of the network
        return self.net(x)
```

Optimizer

torch.optim

Code:

```python
# Stochastic Gradient Descent; params are typically model.parameters()
torch.optim.SGD(params, lr, momentum=0)
```

Neural Network Training
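torch.optim.SGD with momentum=0 updates each parameter as p ← p − lr · p.grad. For every batch, the usual order is optimizer.zero_grad(), loss.backward(), optimizer.step(). The toy loss (2w − 6)² below is chosen only so that a single step can be checked by hand:

```python
import torch

w = torch.tensor([1.0], requires_grad=True)          # a single trainable parameter
optimizer = torch.optim.SGD([w], lr=0.1, momentum=0)

optimizer.zero_grad()                  # 1. reset gradients left over from earlier steps
loss = ((2.0 * w - 6.0) ** 2).sum()    # toy loss: (2w - 6)^2 = 16 at w = 1
loss.backward()                        # 2. dloss/dw = 4 * (2w - 6) = -16, stored in w.grad
optimizer.step()                       # 3. w <- w - lr * grad = 1 - 0.1 * (-16) = 2.6

print(w.grad)   # -16
print(w)        # about 2.6
```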

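Setup

Code:

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader

device = 'cuda' if torch.cuda.is_available() else 'cpu'    # pick GPU if available
dataset = MyDataset(file)                                  # read data via MyDataset
tr_set = DataLoader(dataset, 16, shuffle=True)             # put dataset into DataLoader
model = MyModel().to(device)                               # construct model and move it to device
criterion = nn.MSELoss()                                   # set loss function
optimizer = torch.optim.SGD(model.parameters(), 0.1)       # set optimizer (lr = 0.1)
```

Multiple Epochs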

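Code:

```python
for epoch in range(n_epochs):               # iterate over n_epochs
    model.train()                           # set model to training mode
    for x, y in tr_set:                     # iterate through the DataLoader
        optimizer.zero_grad()               # reset gradients to zero
        x, y = x.to(device), y.to(device)   # move data to device (cpu/cuda)
        pred = model(x)                     # forward pass (compute output)
        loss = criterion(pred, y)           # compute loss
        loss.backward()                     # compute gradients (backpropagation)
        optimizer.step()                    # update model parameters
```

Neural Network Evaluation (Validation Set)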

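Code:

```python
model.eval()                                    # set model to evaluation mode
total_loss = 0
for x, y in dv_set:                             # iterate through the DataLoader
    x, y = x.to(device), y.to(device)           # move data to device (cpu/cuda)
    with torch.no_grad():                       # disable gradient calculation
        pred = model(x)                         # forward pass (compute output)
        loss = criterion(pred, y)               # compute (batch-mean) loss
    total_loss += loss.cpu().item() * len(x)    # accumulate loss weighted by batch size
avg_loss = total_loss / len(dv_set.dataset)     # average loss over all samples
```

Neural Network Evaluation (Testing Set)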

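Code:

```python
model.eval()                          # set model to evaluation mode
preds = []
for x in tt_set:                      # iterate through the DataLoader (inputs only)
    x = x.to(device)                  # move data to device (cpu/cuda)
    with torch.no_grad():             # disable gradient calculation
        pred = model(x)               # forward pass (compute output)
        preds.append(pred.cpu())      # collect predictions on the CPU
```

Save/Load a Neural Network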
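A self-contained round trip of the state_dict save/load pattern. The stand-in model nn.Linear(10, 1) and the temporary checkpoint path are assumptions for illustration:

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(10, 1)   # hypothetical stand-in for a trained model

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'model.pth')   # hypothetical checkpoint location

    # Save only the parameters (state_dict), not the whole Python object
    torch.save(model.state_dict(), path)

    # Load into a freshly constructed model with the same architecture
    new_model = nn.Linear(10, 1)
    ckpt = torch.load(path)
    new_model.load_state_dict(ckpt)

print(torch.equal(model.weight, new_model.weight))   # True
```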

Code:

```python
# Save
torch.save(model.state_dict(), path)

# Load
ckpt = torch.load(path)
model.load_state_dict(ckpt)
```

More About PyTorch

- torchaudio: speech/audio processing

- torchtext: natural language processing

- torchvision: computer vision

- skorch: scikit-learn + PyTorch

- Useful GitHub repositories using PyTorch
  - Huggingface Transformers (transformer models: BERT, GPT, ...)
  - Fairseq (sequence modeling for NLP & speech)
  - ESPnet (speech recognition, translation, synthesis, ...)
  - Many implementations of papers
  - ...

Reference

- PyTorch
- GitHub - pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration
- GitHub - wkentaro/pytorch-for-numpy-users: PyTorch for Numpy users (https://pytorch-for-numpy-users.wkentaro.com)
- Pytorch vs. TensorFlow: What You Need to Know | Udacity
- TensorFlow: https://www.tensorflow.org/
- NumPy

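Note: these are my personal study notes; corrections are welcome if you spot any mistakes! Writing takes effort, so please contact me before reposting.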