Python 3 data visualization: what I found after scraping and analyzing 25,000 chat messages from a gambling chat room...

文章目录

- 一.整体思路

- 二.效果展示

-

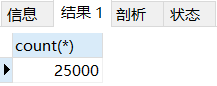

- 1. 数据库

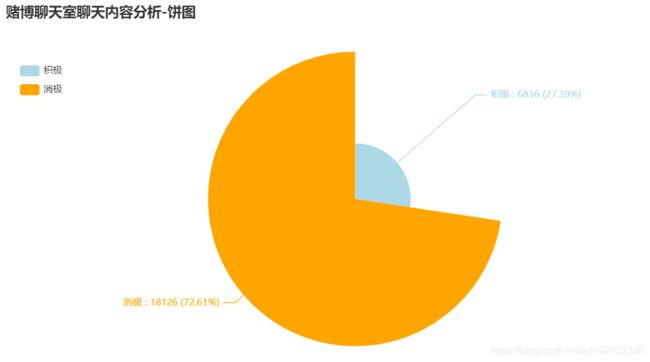

- 2. 赌博聊天室聊天内容分析-饼图

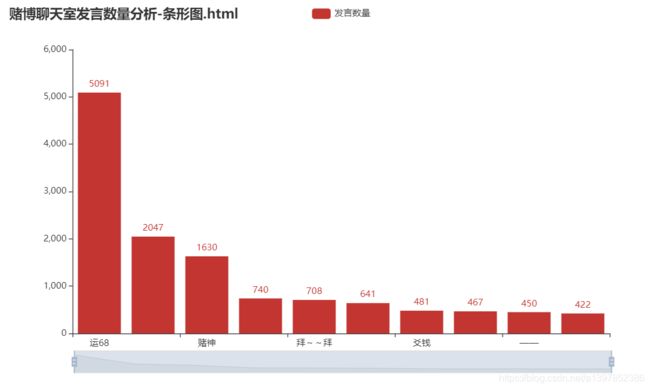

- 3. 赌博聊天室发言数量分析-条形图

- 4. 赌博用户聊天内容分析-词云图

- 三.源代码

-

- 1. 爬虫

- 2. 数据可视化

- 四.总结

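With nothing better to do, I was browsing the web when a gambling site popped up in the bottom-right corner of my screen. I clicked through out of boredom and found that it had a chat room, so I scraped all of the site's chat history, ran word segmentation and sentiment analysis on it, and visualized the results as Echarts charts.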

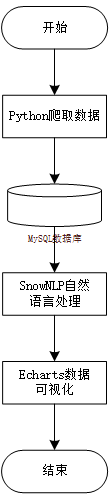

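I. Overall approach

II. Results

1. Database

The table stores the user ID, the user name, a flag for system messages, the time the message was posted, and the message content.

In total, about 25,000 chat messages were scraped.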
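To make the five columns concrete, here is a minimal sketch of how one raw chat row could be turned into a database record. The keys `id`, `spoker`, `system`, `stamp`, and `content` are the ones the crawler reads; the sample row is made up, `row_to_record` is a hypothetical helper, and the timestamp is formatted in UTC here only so the result is reproducible (the crawler itself uses local time).

```python
from datetime import datetime, timezone


def row_to_record(row):
    """Map one raw chat row (a dict) to the five stored columns."""
    # 'stamp' is assumed to be a Unix timestamp in milliseconds.
    posted_at = datetime.fromtimestamp(row["stamp"] / 1000, tz=timezone.utc)
    return (
        row.get("id"),
        row.get("spoker"),
        row.get("system"),
        posted_at.strftime("%Y-%m-%d %H:%M:%S"),
        row.get("content"),
    )


# Made-up sample row, for illustration only.
sample = {"id": "u1", "spoker": "demo", "system": False,
          "stamp": 1625097600000, "content": "hello"}
record = row_to_record(sample)
print(record)
```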

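2. Gambling chat room content analysis - pie chart

This pie chart comes from running sentiment analysis on the chat messages. The ratio of negative to positive messages is roughly 7:3.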
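The 7:3 figure can be checked with a few lines of arithmetic. The sketch below assumes the 0.6 threshold described in the source code comments and uses the counts recorded there (6836 positive, 18126 negative); `label_sentiment` and `sentiment_shares` are hypothetical helper names, not part of the original script.

```python
def label_sentiment(score, threshold=0.6):
    """Return 1 (positive) or -1 (negative); None if there is no score."""
    if score is None:
        return None
    return 1 if score >= threshold else -1


def sentiment_shares(counts):
    """Convert raw label counts into percentages, rounded to one decimal."""
    total = sum(counts.values())
    return {label: round(n / total * 100, 1) for label, n in counts.items()}


# Counts taken from the commented-out result in the visualization script.
counts = {"positive": 6836, "negative": 18126}
shares = sentiment_shares(counts)
print(shares)
```

Under these counts the negative share comes out a little above 70%, which matches the rough 7:3 split.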

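3. Gambling chat room message count analysis - bar chart

This bar chart shows the top 10 users by number of messages. 运68, you really do have a lot to say.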
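Ranking users by message count is a plain frequency count. A minimal sketch with `collections.Counter`, using made-up user names and a hypothetical `top_speakers` helper; it strips `*` from names the same way the script does:

```python
from collections import Counter


def top_speakers(user_names, n=10):
    """Return the n most frequent user names as (name, count) pairs."""
    # Drop empty names and strip '*' before counting.
    cleaned = (name.replace("*", "") for name in user_names if name)
    return Counter(cleaned).most_common(n)


# Made-up names, for illustration only.
names = ["a*b", "ab", "cd", "ab", "cd*", "ef"]
top2 = top_speakers(names, n=2)
print(top2)
```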

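4. Gambling user chat content analysis - word cloud

This chart is built by segmenting the chat content into words and removing stop words. The word 尴尬 ("awkward") appears 1,322 times, which is awkward in capital letters.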
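The counting step behind the word cloud can be sketched without the segmenter. The example below assumes the sentences are already split into words (the script uses SnowNLP for that) and applies the same three filters: the word starts with Chinese characters, is longer than one character, and is not a stop word. The sample data and the `count_words` name are made up.

```python
import re
from collections import Counter

# Matches words that begin with one or more common CJK characters.
CHINESE_PREFIX = re.compile(r"[\u4e00-\u9fa5]+")


def count_words(tokenized_sentences, stop_words):
    """Count words that pass the length, stop-word, and Chinese-text filters."""
    counts = Counter()
    for tokens in tokenized_sentences:
        for word in tokens:
            if len(word) > 1 and word not in stop_words and CHINESE_PREFIX.match(word):
                counts[word] += 1
    return counts


# Made-up, pre-segmented sentences, for illustration only.
sentences = [["尴尬", "了", "今天"], ["真", "尴尬", "ok"], ["今天", "我们"]]
stop = {"我们"}
freq = count_words(sentences, stop)
print(freq.most_common(2))
```

Keeping the stop words in a `set` makes each membership test constant time, which matters once the message count reaches the tens of thousands.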

III. Source code

1. Crawler

import json
import time

import pymysql
import requests


class DuBo_Chat_Spider(object):
    def __init__(self):
        self.conn = pymysql.connect(
            host='127.0.0.1',
            port=3306,
            user='root',
            password='root',
            db='Du_Bo_chat',
            charset='utf8'
        )
        self.cursor = self.conn.cursor()
        # Create the chat table on the first run.
        sql = '''create table if not exists chat(user_id varchar (100),user_name varchar(255),is_system varchar(50),time varchar (255),content varchar (255)) '''
        self.cursor.execute(sql)
        self.conn.commit()

    def get_content(self, page_no):
        """Request one page of chat messages; return the parsed JSON, or None on failure."""
        # The URL and the Host/Cookie/Origin/Referer values are redacted in the original post.
        chat_room_url = '########################'
        headers = {
            'Accept': 'application/json, text/plain, */*',
            'Connection': 'keep-alive',
            'Content-Type': 'application/json;charset=UTF-8',
            'Host': '########################',
            'Cookie': '########################',
            'Origin': '########################',
            'Referer': '########################',
            'token': '',
            'sec-ch-ua': '" Not;A Brand";v="99", "Google Chrome";v="91", "Chromium";v="91"',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.75 Safari/537.36'
        }
        payload_data = {
            'Action': "GetMsg",
            'isChat': 1,
            'pageNo': page_no,
            'pageSize': 20
        }
        # The timeout keeps a stalled connection from hanging the crawl.
        r = requests.post(chat_room_url, headers=headers, data=json.dumps(payload_data), timeout=10)
        if r.status_code == 200:
            return json.loads(r.text)
        else:
            return None

    def parse(self, json_data):
        """Insert one page of chat rows into MySQL; return the number of rows on the page."""
        chat_rows = (json_data.get('data') or {}).get('rows') or []
        sql = """INSERT INTO chat values(%s,%s,%s,%s,%s)"""
        for row in chat_rows:
            item = {}
            item['user_id'] = row.get('id')
            item['user'] = row.get('spoker')
            item['is_system'] = row.get('system')
            # 'stamp' is in milliseconds; convert it to a local-time string.
            pub_time = int(row.get('stamp') / 1000)
            timeArray = time.localtime(pub_time)
            otherStyleTime = time.strftime("%Y-%m-%d %H:%M:%S", timeArray)
            item['time'] = otherStyleTime
            item['content'] = row.get('content')
            print(item)
            try:
                self.cursor.execute(sql, (item['user_id'], item['user'], item['is_system'], item['time'], item['content']))
                self.conn.commit()
            except pymysql.err.DataError:
                # Skip rows the table cannot store, such as content longer than 255 characters.
                pass
        return len(chat_rows)


if __name__ == '__main__':
    p_n = 1
    spider = DuBo_Chat_Spider()
    while True:
        _json = spider.get_content(p_n)
        # Stop when a request fails. An empty page is also assumed to mean the history is exhausted.
        if _json is None or spider.parse(_json) == 0:
            break
        p_n += 1
        print(p_n)
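The crawl loop above is a page-by-page pagination pattern. Here is a minimal sketch of that pattern on its own, with a fake fetcher standing in for the HTTP request. The `data` and `rows` keys follow the response shape the crawler reads; treating an empty page as the end of the history, the `max_pages` cap, and the names `crawl_pages` and `fake_fetch` are assumptions for illustration.

```python
def crawl_pages(fetch_page, max_pages=10000):
    """Yield rows page by page until a fetch fails or a page comes back empty."""
    page_no = 1
    while page_no <= max_pages:
        payload = fetch_page(page_no)
        if payload is None:
            # The request failed (for example a non-200 status).
            break
        rows = (payload.get("data") or {}).get("rows") or []
        if not rows:
            # Assumed end-of-history signal: a page with no rows.
            break
        yield from rows
        page_no += 1


def fake_fetch(page_no):
    """Stand-in for the real request: two pages of data, then empty pages."""
    pages = {1: ["m1", "m2"], 2: ["m3"]}
    return {"data": {"rows": pages.get(page_no, [])}}


messages = list(crawl_pages(fake_fetch))
print(messages)
```

Separating the loop from the fetch function also makes it easy to test the stop conditions without touching the network.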

2. Data visualization

# -*- coding: utf-8 -*-
import re
from collections import Counter

import pymysql
from pyecharts import options as opts
from pyecharts.charts import Bar, Pie, WordCloud
from snownlp import SnowNLP


def get_data_from_mysql():
    """
    Fetch all chat rows from the database.
    :return: a tuple of rows, or an empty tuple if the query fails
    """
    conn = None
    try:
        # The spider connects as 'Du_Bo_chat'. MySQL database names are
        # case-sensitive on most Unix systems, so make the two match there.
        conn = pymysql.connect(
            host='127.0.0.1',
            port=3306,
            user='root',
            password='root',
            db='du_bo_chat',
            charset='utf8'
        )
        cursor = conn.cursor()
        sql = "select * from chat"
        cursor.execute(sql)
        data = cursor.fetchall()
        return data
    except pymysql.Error:
        print("Database error!")
        return ()
    finally:
        # conn is still None if connect() itself failed.
        if conn is not None:
            conn.close()

#____________________

def score_sentence(line):
    """
    Score the sentiment of a sentence with snownlp's sentiments property.
    :param line: the message text
    :return: probability that the text is positive, or None if it cannot be scored
    """
    try:
        # s.sentiments is the predicted probability that the text is positive.
        # A probability of 0.6 or higher is treated as positive and anything
        # below 0.6 as negative; get_emotion() labels them 1 and -1.
        s = SnowNLP(line)
        score = s.sentiments
        return score
    except TypeError:
        return None


def get_emotion(score):
    """
    Turn a score into one of two labels: positive (1) or negative (-1).
    :param score: probability from score_sentence()
    :return: 1, -1, or None if there is no score
    """
    if score is None:
        return None
    if score >= 0.6:
        # positive
        return 1
    # negative
    return -1


def get_pie_data(data):
    pos_count = 0
    negv_count = 0
    for line in data:
        # Column 4 holds the message content.
        pingjia = line[4]
        emotion_score = get_emotion(score_sentence(pingjia))
        if emotion_score == 1:
            pos_count += 1
        elif emotion_score == -1:
            negv_count += 1
    item = {"positive": pos_count, "negative": negv_count}
    return item
    # Result on the scraped data: {'positive': 6836, 'negative': 18126}


def draw_pie(data):
    """
    Draw a pie chart from the data.
    :param data: dict mapping label to count
    :return:
    """
    c = (
        Pie()
        .add("Gambling chat room content analysis", [(k, v) for k, v in data.items()], color="green", rosetype="radius")
        .set_colors(["lightblue", "orange", "yellow", "blue", "pink", "orange", "purple"])
        .set_global_opts(
            title_opts=opts.TitleOpts(title="Gambling chat room content analysis - pie chart"),
            legend_opts=opts.LegendOpts(
                orient="vertical",  # stack the legend vertically
                pos_top="15%",  # adjust the legend position
                pos_left="2%"),
        )
        .set_series_opts(label_opts=opts.LabelOpts(formatter="{b} : {c} ({d}%)"))
        .render("gambling-chat-content-analysis-pie.html")
    )

#____________________

def get_line_data(data):
    """Count messages per user and return the top 10 as (user_name, count) pairs."""
    counts = Counter()
    for data_ in data:
        user_name = data_[1] or ''
        # Some user names contain '*'; strip it before counting.
        counts[user_name.replace("*", '')] += 1
    return counts.most_common(10)


def draw_line(data):
    bar = (
        Bar()
        .add_xaxis([name for name, _ in data])
        .add_yaxis("Messages", [count for _, count in data])
        .set_global_opts(
            title_opts=opts.TitleOpts(title="Gambling chat room message count analysis - bar chart"),
            datazoom_opts=opts.DataZoomOpts(),
        )
    )
    bar.render("gambling-chat-message-count-bar.html")

#____________________

def get_wordCloud_data(data):
    word_list = []
    # Load the stop-word list once; a set makes the membership test fast.
    with open('stopwords.txt', 'r', encoding='utf-8') as f:
        stop_words = {word.strip() for word in f}
    for data_ in data:
        sentence = data_[4]
        try:
            cut_results = SnowNLP(sentence).words
            for word in cut_results:
                # Keep words that start with Chinese characters, are not
                # stop words, and are longer than one character.
                if re.match(r'[\u4e00-\u9fa5]+', word) and word not in stop_words and len(word) > 1:
                    word_list.append(word)
        except TypeError:
            # Rows without content cannot be segmented; skip them.
            pass
    item = Counter(word_list)
    return dict(item)


def draw_wordColud(data):
    c = (
        WordCloud()
        .add(
            "",
            [(k, v) for k, v in data.items()],
            word_size_range=[20, 100],
            # Use a custom font family.
            textstyle_opts=opts.TextStyleOpts(font_family="cursive"),
        )
        .set_global_opts(title_opts=opts.TitleOpts(title="Gambling user chat content analysis - word cloud"))
        .render("gambling-chat-content-analysis-wordcloud.html")
    )

if __name__ == '__main__':
    data = get_data_from_mysql()

    print("Drawing the top 10 bar chart...")
    user_data = get_line_data(data)
    draw_line(user_data)
    print("Top 10 bar chart done!")

    print("Drawing the pie chart...")
    pie_data = get_pie_data(data)
    draw_pie(pie_data)
    print("Pie chart done!")

    print("Drawing the word cloud...")
    wordCloud_data = get_wordCloud_data(data)
    draw_wordColud(wordCloud_data)
    print("Word cloud done!")

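IV. Summary

Online gambling is not much different from taking drugs. People who get hooked and win want to win more: win ten thousand and they start dreaming of a hundred thousand. People who lose want to win it back: lose ten thousand and they keep topping up. By the time you are in too deep, the back end already has its eye on you, and every bet you place loses. Please don't gamble.

For this project I wrote the crawler in Python, used SnowNLP for Chinese word segmentation and sentiment analysis, and used Pyecharts to build the Echarts charts that visualize the data. I have put the stop-word list on Lanzou Cloud (蓝奏云). If you see any shortcomings in the approach or the code, corrections and criticism are welcome! And if you think it's decent, could you give it a like?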