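[Hadoop] Data Compression

HDFS Data Compression

- Overview of compression
- Whether to use compression
- Compression formats supported by MapReduce
  - Codecs bundled with Hadoop
  - Compression performance comparison
- Choosing a compression format for each MapReduce stage
- Compression parameters
  - MR input stage
  - Map output stage
  - Reduce output stage
- Hands-on compression examples
  - Mapper output
  - Reducer output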

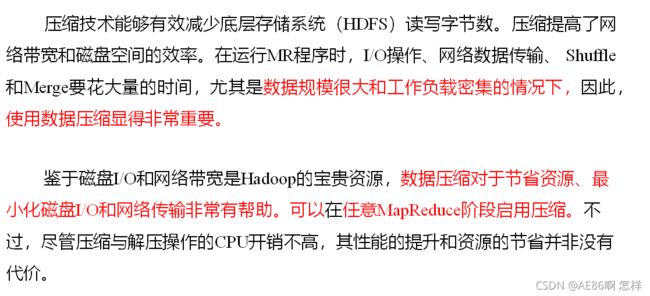

Overview of compression

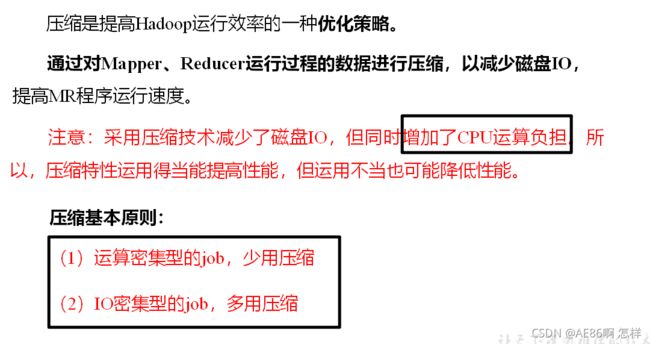

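Whether to use compression

Compression formats supported by MapReduce

Keep in mind:

1. Only bzip2 and LZO support splitting.

2. LZO does not ship with Hadoop. It must be installed separately, and an index file has to be built for each LZO file before the file can actually be split.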
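To make the effect of splittability concrete, here is a minimal sketch of how it changes the number of map tasks that can work on one file. It is a simplified model, not Hadoop's actual split logic: the class name `SplitEstimator`, the 300 MB file size, and the 128 MB split size are all assumptions chosen for illustration.

```java
/** Simplified model of how splittability affects the number of input splits. */
class SplitEstimator {

    /**
     * Estimates the number of input splits for one non-empty file.
     * A non-splittable compressed file is always read by a single map task.
     */
    static long estimateSplits(long fileBytes, long splitBytes, boolean splittable) {
        if (fileBytes <= 0 || splitBytes <= 0) {
            throw new IllegalArgumentException("sizes must be positive");
        }
        if (!splittable) {
            return 1;
        }
        // Ceiling division: every started chunk of splitBytes becomes one split.
        return (fileBytes + splitBytes - 1) / splitBytes;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long fileBytes = 300 * mb;   // hypothetical compressed file size
        long splitBytes = 128 * mb;  // assumed split size

        System.out.println("not splittable (e.g. gzip): "
                + estimateSplits(fileBytes, splitBytes, false));
        System.out.println("splittable (e.g. bzip2):    "
                + estimateSplits(fileBytes, splitBytes, true));
    }
}
```

With a non-splittable format the whole file is handled by one map task no matter how large it is, which is why splittability matters for large inputs.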

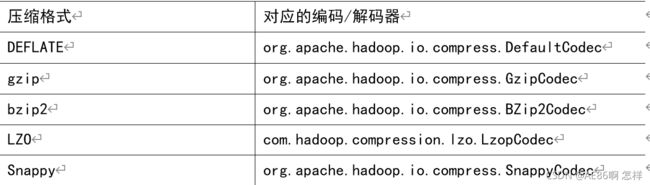

Codecs bundled with Hadoop

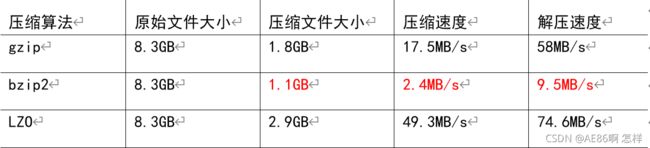

Compression performance comparison

Snappy:

http://google.github.io/snappy/

Snappy is a compression/decompression library. It does not aim for maximum compression, or compatibility with any other compression library; instead, it aims for very high speeds and reasonable compression. For instance, compared to the fastest mode of zlib, Snappy is an order of magnitude faster for most inputs, but the resulting compressed files are anywhere from 20% to 100% bigger. On a single core of a Core i7 processor in 64-bit mode, Snappy compresses at about 250 MB/sec or more and decompresses at about 500 MB/sec or more.

In short, Snappy's strength is raw compression speed, at the cost of a lower compression ratio.
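The speed-versus-ratio trade-off can be observed with nothing but the JDK. The sketch below is only an analogy: Snappy is not part of the JDK, so it uses zlib's DEFLATE at its fastest and strongest levels as a stand-in. The class name `SpeedVsRatioDemo` and the synthetic sample data are assumptions, and timings will vary by machine.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

class SpeedVsRatioDemo {

    /** Builds repetitive text so that compression has something to work with. */
    static byte[] sampleData() {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 5000; i++) {
            sb.append("hadoop mapreduce compression sample line ").append(i % 50).append('\n');
        }
        return sb.toString().getBytes(StandardCharsets.UTF_8);
    }

    /** Compresses the input with DEFLATE at the given level (1 = fastest, 9 = smallest). */
    static byte[] compress(byte[] input, int level) {
        Deflater deflater = new Deflater(level);
        deflater.setInput(input);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[8192];
        while (!deflater.finished()) {
            int n = deflater.deflate(buffer);
            out.write(buffer, 0, n);
        }
        deflater.end();
        return out.toByteArray();
    }

    /** Restores the original bytes; the caller supplies the original length. */
    static byte[] decompress(byte[] compressed, int originalLength) {
        Inflater inflater = new Inflater();
        inflater.setInput(compressed);
        byte[] result = new byte[originalLength];
        int offset = 0;
        try {
            while (offset < originalLength) {
                int n = inflater.inflate(result, offset, originalLength - offset);
                if (n == 0) {
                    // No progress means the stream ended early or is corrupt.
                    throw new IllegalStateException("compressed stream ended early");
                }
                offset += n;
            }
        } catch (DataFormatException e) {
            throw new IllegalStateException("corrupt compressed data", e);
        } finally {
            inflater.end();
        }
        return result;
    }

    public static void main(String[] args) {
        byte[] data = sampleData();
        for (int level : new int[] {Deflater.BEST_SPEED, Deflater.BEST_COMPRESSION}) {
            long start = System.nanoTime();
            byte[] packed = compress(data, level);
            long micros = (System.nanoTime() - start) / 1_000;
            System.out.printf("level %d: %d -> %d bytes in %d us%n",
                    level, data.length, packed.length, micros);
        }
    }
}
```

Running it on your own data gives a rough feel for how much extra time a higher compression ratio costs, which is the same question you face when choosing between Snappy, gzip, and bzip2.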

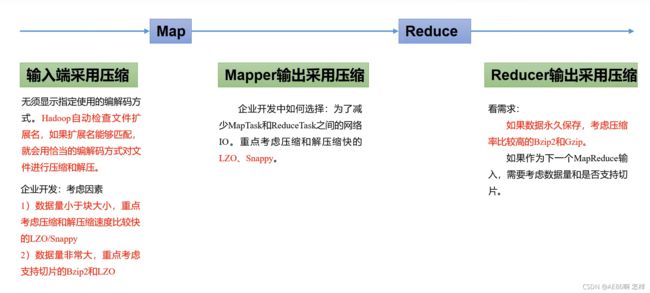

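Choosing a compression format for each MapReduce stage

Compression can be enabled at any stage of a MapReduce job.

(1) Map input

Considerations:

1. Block size: if the data is smaller than one block, prioritize compression speed; otherwise prioritize compression ratio.

2. Splitting: if the file is so large that it cannot be compressed down to within one block, you must choose a format that supports splitting, i.e. bzip2 or LZO. Snappy does not support splitting.

(2) Map output

The output file of a single map task is usually about the size of one split, so the data volume is small; prioritize speed.

(3) Reduce output

This depends on the requirement:

(1) Data kept permanently: favor a format with a high compression ratio.

(2) Data used as the input of a downstream MR job: favor a format that supports splitting.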
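The rules of thumb above can be written down as a small decision helper. This is only a sketch of one reading of those rules: the class `StageCodecChooser`, its method names, and the idea of passing in an estimated compressed size are assumptions made for illustration, and the suggested formats simply repeat the ones named in these notes.

```java
class StageCodecChooser {

    /** What to optimize for when picking a compression format. */
    enum Priority {
        SPEED("Snappy or LZO"),
        RATIO("gzip or bzip2"),
        SPLITTABLE("bzip2 or indexed LZO");

        final String suggestion;

        Priority(String suggestion) {
            this.suggestion = suggestion;
        }
    }

    /** Map input: small data favors speed; data that cannot fit in one block needs splitting. */
    static Priority forMapInput(long rawBytes, long estimatedCompressedBytes, long blockBytes) {
        if (rawBytes <= blockBytes) {
            return Priority.SPEED;
        }
        if (estimatedCompressedBytes <= blockBytes) {
            return Priority.RATIO;
        }
        return Priority.SPLITTABLE;
    }

    /** Map output: roughly one split per task, so speed wins. */
    static Priority forMapOutput() {
        return Priority.SPEED;
    }

    /** Reduce output: archive favors ratio; input to another job favors splitting. */
    static Priority forReduceOutput(boolean feedsAnotherJob) {
        return feedsAnotherJob ? Priority.SPLITTABLE : Priority.RATIO;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        Priority input = forMapInput(5000 * mb, 1000 * mb, 128 * mb);
        System.out.println("map input:     " + input + " -> " + input.suggestion);
        System.out.println("map output:    " + forMapOutput() + " -> " + forMapOutput().suggestion);
        Priority output = forReduceOutput(false);
        System.out.println("reduce output: " + output + " -> " + output.suggestion);
    }
}
```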

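Compression parameters

MR input stage

Configuration file: core-site.xml

Parameter: io.compression.codecs

Default: none

Note: this parameter can be left unset. Hadoop uses the file extension to determine which codec, if any, applies to an input file.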
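The extension-based lookup can be pictured as a simple suffix table. In Hadoop itself this job is done by `CompressionCodecFactory`; the class below is a simplified, hard-coded stand-in written for illustration (the name `CodecByExtension` is an assumption), not Hadoop's implementation. The extensions listed are the default ones of the bundled codecs.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

class CodecByExtension {

    // Default file extension -> codec class name (hard-coded for illustration).
    private static final Map<String, String> CODECS = new LinkedHashMap<>();

    static {
        CODECS.put(".deflate", "org.apache.hadoop.io.compress.DefaultCodec");
        CODECS.put(".gz", "org.apache.hadoop.io.compress.GzipCodec");
        CODECS.put(".bz2", "org.apache.hadoop.io.compress.BZip2Codec");
        CODECS.put(".snappy", "org.apache.hadoop.io.compress.SnappyCodec");
        CODECS.put(".lz4", "org.apache.hadoop.io.compress.Lz4Codec");
    }

    /** Returns the codec class name matching the file's suffix, or empty for plain files. */
    static Optional<String> codecFor(String fileName) {
        for (Map.Entry<String, String> entry : CODECS.entrySet()) {
            if (fileName.endsWith(entry.getKey())) {
                return Optional.of(entry.getValue());
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        for (String name : new String[] {"words.txt", "words.txt.gz", "part-r-00000.bz2"}) {
            System.out.println(name + " -> " + codecFor(name).orElse("(no codec, read as plain data)"));
        }
    }
}
```

This is why a compressed input file usually needs no extra job configuration: a recognized suffix is enough for the input side to pick the right decompressor.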

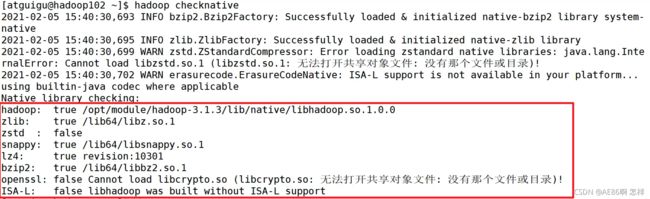

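You can run hadoop checknative on the command line to see which native compression libraries your Hadoop installation supports.

Snappy is supported out of the box when Hadoop 3.x runs on CentOS 7.5; a local Windows environment does not support it.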

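Map output stage

Configuration file: mapred-site.xml

Parameter 1: mapreduce.map.output.compress

Default: false

Note: set this parameter to true to enable compression.

Parameter 2: mapreduce.map.output.compress.codec

Description: the codec used to compress map output.

Default: org.apache.hadoop.io.compress.DefaultCodec

Recommendation: production clusters commonly use the LZO or Snappy codec at this stage.

Reduce output stage

Configuration file: mapred-site.xml

Parameter 1: mapreduce.output.fileoutputformat.compress

Default: false

Note: set this parameter to true to enable compression.

Parameter 2: mapreduce.output.fileoutputformat.compress.codec

Description: the codec used to compress reduce output.

Default: org.apache.hadoop.io.compress.DefaultCodec

Recommendation: use a standard tool or codec, such as gzip or bzip2.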
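One practical benefit of a standard format: gzip output can be read back with plain JDK classes, without any Hadoop dependency. The sketch below assumes the reduce output was written in gzip format; since no cluster output is available here, it first writes a small gzip file locally as a stand-in for a part file. The class name `GzipPartFileDemo` and the sample lines are illustrative assumptions.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

class GzipPartFileDemo {

    /** Creates a temporary file that plays the role of a gzip-compressed part file. */
    static Path tempFile() {
        try {
            Path file = Files.createTempFile("part-r-00000", ".gz");
            file.toFile().deleteOnExit();
            return file;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Writes the lines as gzip-compressed UTF-8 text. */
    static void writeGzip(Path file, List<String> lines) {
        try (BufferedWriter writer = new BufferedWriter(new OutputStreamWriter(
                new GZIPOutputStream(Files.newOutputStream(file)), StandardCharsets.UTF_8))) {
            for (String line : lines) {
                writer.write(line);
                writer.newLine();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Reads a gzip-compressed UTF-8 text file line by line. */
    static List<String> readGzip(Path file) {
        List<String> lines = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(
                new GZIPInputStream(Files.newInputStream(file)), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) {
        Path file = tempFile();
        writeGzip(file, Arrays.asList("hadoop\t2", "hdfs\t1"));
        for (String line : readGzip(file)) {
            System.out.println(line);
        }
    }
}
```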

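Hands-on compression examples

Even if the input and output files of your MapReduce job are uncompressed, you can still compress the intermediate output of the map tasks. That output is written to disk and transferred over the network to the reduce nodes, so compressing it can improve performance considerably. Only two properties need to be set; the code below shows how.

Mapper output

The settings only need to be made in the Driver class.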

    package com.atguigu.mapreduce.compress;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.compress.BZip2Codec;
    import org.apache.hadoop.io.compress.CompressionCodec;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    // WordCountMapper and WordCountReducer are expected to exist in the same package.
    public class WordCountDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

            Configuration conf = new Configuration();

            // Enable compression of the map output
            conf.setBoolean("mapreduce.map.output.compress", true);

            // Set the codec used for the map output
            conf.setClass("mapreduce.map.output.compress.codec", BZip2Codec.class, CompressionCodec.class);

            Job job = Job.getInstance(conf);

            job.setJarByClass(WordCountDriver.class);

            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // args[0] is the input path, args[1] is the output path
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            boolean result = job.waitForCompletion(true);

            System.exit(result ? 0 : 1);
        }
    }

Compressing the mapper output does not affect the format of the final output files.

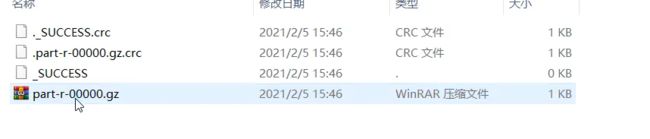

Reducer output

The settings only need to be made in the Driver class.

    package com.atguigu.mapreduce.compress;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.compress.BZip2Codec;
    import org.apache.hadoop.io.compress.DefaultCodec;
    import org.apache.hadoop.io.compress.GzipCodec;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    // WordCountMapper and WordCountReducer are expected to exist in the same package.
    public class WordCountDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

            Configuration conf = new Configuration();

            Job job = Job.getInstance(conf);

            job.setJarByClass(WordCountDriver.class);

            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // args[0] is the input path, args[1] is the output path
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // Enable compression of the reduce output
            FileOutputFormat.setCompressOutput(job, true);

            // Set the codec; uncomment one of the alternatives to switch formats
            FileOutputFormat.setOutputCompressorClass(job, BZip2Codec.class);
            // FileOutputFormat.setOutputCompressorClass(job, GzipCodec.class);
            // FileOutputFormat.setOutputCompressorClass(job, DefaultCodec.class);

            boolean result = job.waitForCompletion(true);

            System.exit(result ? 0 : 1);
        }
    }