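Data Persistence

Table of contents

- I. Data persistence
  - 1. emptyDir (not suitable for data persistence)
  - 2. hostPath
    - Notes on the type values
    - hostPath type reference
  - 3. NFS
  - 4. PV/PVC
    - 1) PV access modes (accessModes)
    - 2) PV reclaim policy (persistentVolumeReclaimPolicy)
    - 3) PV status
    - Key PV configuration parameters
    - 4) Creating a PV (cluster-scoped resource)
    - 4) Binding a PVC to a PV (namespace-scoped resource)
    - Test
- III. Hands-on: deploying Discuz (NFS managed through PV/PVC)
  - 4. StorageClass
    - Downloading Helm
    - Installing the storage class
    - Testing the storage class
- IV. Lifecycle
- V. Configuration storage (the Kubernetes configuration center)
  - 1. ConfigMap
    - 1. Creating a ConfigMap
    - 2. Test
    - 3. Using a ConfigMap
    - 4. Creating a ConfigMap
      - Editing the ConfigMap so the site becomes reachable
    - 5. Mounting a ConfigMap
    - 6. ConfigMap hot updates
    - Using a ConfigMap as environment variables
    - 4. Restrictions on using ConfigMaps
    - 7. Using the subPath parameter
- VI. Secret
  - Option 1: mounting a Secret with plain login credentials
  - Option 2: mounting a Secret with encoded login credentials
  - Option 3: storing private Docker registry credentials in a Secret
    - 1. kubernetes.io/dockerconfigjson
    - 2. Example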
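I. Data persistence

A Pod is made up of containers, and when a container crashes or stops, the data written inside it is lost. Any Kubernetes cluster therefore has to deal with storage, and volumes exist so that Pods can keep their data. There are many volume types; the ones used most often are emptyDir, hostPath, NFS, and cloud or distributed storage (Ceph, GlusterFS, ...).

Kubernetes volumes come in many types. The common ones are:

- Simple storage: emptyDir, hostPath, NFS
- Advanced storage: PV, PVC
- Configuration storage: ConfigMap, Secret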

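1. emptyDir (not suitable for data persistence)

An emptyDir volume is an empty directory created when the Pod is scheduled onto a node. When the Pod is deleted, the data in the emptyDir is deleted with it. It is typically used to share files between the containers of one Pod or as scratch space.

Note: emptyDir cannot be used for data persistence.

# Example
# Write the manifest
[root@k8s-master1 ~]# vim emptydir.yaml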

apiVersion: apps/v1
kind: Deployment
metadata:
  name: emptydir
spec:
  selector:
    matchLabels:
      app: emptydir
  template:
    metadata:
      labels:
        app: emptydir
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts: # mount point in the nginx container
            - mountPath: /usr/share/nginx/nginx
              name: test-emptydir
        - name: mysql
          image: mysql:5.7
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
          volumeMounts: # mount point in the MySQL container
            - mountPath: /usr/share/nginx
              name: test-emptydir
      volumes:
        - name: test-emptydir
          emptyDir: {}

# Verify that the containers share data
# Enter the directory mounted in the MySQL container and create some files

[root@k8s-master1 ~]# kubectl exec -it emptydir-5dc7dcd9fd-zrb99 -c mysql -- bash

root@emptydir-5dc7dcd9fd-zrb99:/# cd /usr/share/nginx/

root@emptydir-5dc7dcd9fd-zrb99:/usr/share/nginx# ls

root@emptydir-5dc7dcd9fd-zrb99:/usr/share/nginx# touch {1..10}

root@emptydir-5dc7dcd9fd-zrb99:/usr/share/nginx# ls

1 10 2 3 4 5 6 7 8 9

root@emptydir-5dc7dcd9fd-zrb99:/usr/share/nginx# exit

# Enter the directory mounted in the nginx container and check that the files are there too

[root@k8s-master1 ~]# kubectl exec -it emptydir-5dc7dcd9fd-zrb99 -c nginx -- bash

root@emptydir-5dc7dcd9fd-zrb99:/# cd /usr/share/nginx/nginx/

root@emptydir-5dc7dcd9fd-zrb99:/usr/share/nginx/nginx# ls

1 10 2 3 4 5 6 7 8 9

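2. hostPath

A hostPath volume maps a file or directory from the node's filesystem into the Pod. An optional type field controls what must exist at the path; the supported values are DirectoryOrCreate, Directory, FileOrCreate, File, Socket, CharDevice, and BlockDevice.

hostPath is similar to the docker -v option: it mounts a file or directory from the host into the Pod, with the extra type check described below. The volume is always taken from whichever node the Pod is scheduled to, so the data does not follow the Pod to another node, which makes it inconvenient.

Data in an emptyDir is not persisted; it is destroyed when the Pod ends. If you simply want to persist data onto the host, use hostPath. hostPath mounts an actual directory on the node into the Pod for the containers to use, so the data stays on the node even after the Pod is destroyed.

Notes on the type values:

DirectoryOrCreate: use the directory if it exists; otherwise create it first
Directory: the directory must exist
FileOrCreate: use the file if it exists; otherwise create it first
File: the file must exist
Socket: a UNIX socket must exist
CharDevice: a character device must exist
BlockDevice: a block device must exist

# Example
# Write the manifest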

kind: Deployment
apiVersion: apps/v1
metadata:
  name: hostpath
spec:
  selector:
    matchLabels:
      app: hostpath
  template:
    metadata:
      labels:
        app: hostpath
    spec:
      nodeName: k8s-m-01 # node the Pod is pinned to
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /usr/share/nginx/nginx
              name: test-hostpath
        - name: mysql
          image: mysql:5.7
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
          volumeMounts:
            - mountPath: /usr/share/nginx
              name: test-hostpath
      volumes:
        - name: test-hostpath
          hostPath:
            path: /opt/hostpath
            type: DirectoryOrCreate

hostPath type reference

Field reference: kubectl explain deployment.spec.template.spec.volumes

Documentation: https://kubernetes.io/zh/docs/concepts/storage/volumes/#hostpath
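The hostPath example in this section uses DirectoryOrCreate. As a contrast, here is a minimal sketch of the stricter File type, which only succeeds when the file already exists on the node. The Pod name and the choice of /etc/localtime are assumptions made for illustration and do not come from the article.

```yaml
# Hypothetical Pod for illustration; the name and path are not from the article.
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-file-demo
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: localtime
          mountPath: /etc/localtime
          readOnly: true   # the container only needs to read the file
  volumes:
    - name: localtime
      hostPath:
        path: /etc/localtime
        type: File         # the mount fails if this file is missing on the node
```

With type: File, a missing path shows up as a mount failure in the Pod's events instead of an empty directory being created silently, which is usually the safer behaviour for files the application depends on.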

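3. NFS

An nfs volume lets an existing NFS share be mounted into a Pod.

Unlike emptyDir, which is deleted together with the Pod, the contents of an NFS volume are kept when the Pod is deleted; the volume is only unmounted. This means data can be prepared on the share in advance and handed from one Pod to another, and the same share can be mounted and written to by several Pods at once.

hostPath solves persistence on a single node, but if that node fails and the Pod is moved to another node, the data is no longer there. A separate network storage system is needed for that case, commonly NFS or CIFS. NFS is a network file storage system: set up an NFS server and connect the Pod's storage directly to it. However the Pod moves between nodes, the data stays reachable as long as the node can reach the NFS server, and Pods added by scaling out see the same data.

Field reference: kubectl explain deployment.spec.template.spec.volumes

# 1. Install NFS (on all nodes)
[root@k8s-master1 ~]# yum install nfs-utils.x86_64 -y

# 2. Configure the exports (for simplicity the NFS server runs on the master node)
[root@kubernetes-master-01 nfs]# mkdir -p /nfs/v{1..10}
[root@kubernetes-master-01 nfs]# cat > /etc/exports <<EOF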

/nfs/v1 172.16.0.0/16(rw,no_root_squash)

/nfs/v2 172.16.0.0/16(rw,no_root_squash)

/nfs/v3 172.16.0.0/16(rw,no_root_squash)

/nfs/v4 172.16.0.0/16(rw,no_root_squash)

/nfs/v5 172.16.0.0/16(rw,no_root_squash)

/nfs/v6 172.16.0.0/16(rw,no_root_squash)

/nfs/v7 172.16.0.0/16(rw,no_root_squash)

/nfs/v8 172.16.0.0/16(rw,no_root_squash)

/nfs/v9 172.16.0.0/16(rw,no_root_squash)

/nfs/v10 172.16.0.0/16(rw,no_root_squash)

EOF

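Alternatively, write the entries with the local subnet address (with a /16 mask, 172.16.1.0/16 covers the same range as 172.16.0.0/16):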

/nfs/v1 172.16.1.0/16(rw,no_root_squash)

/nfs/v2 172.16.1.0/16(rw,no_root_squash)

/nfs/v3 172.16.1.0/16(rw,no_root_squash)

/nfs/v4 172.16.1.0/16(rw,no_root_squash)

/nfs/v5 172.16.1.0/16(rw,no_root_squash)

/nfs/v6 172.16.1.0/16(rw,no_root_squash)

/nfs/v7 172.16.1.0/16(rw,no_root_squash)

/nfs/v8 172.16.1.0/16(rw,no_root_squash)

/nfs/v9 172.16.1.0/16(rw,no_root_squash)

/nfs/v10 172.16.1.0/16(rw,no_root_squash)


# Re-export the shares and check the result

[root@k8s-master1 ~]# exportfs -arv

exporting 172.16.0.0/16:/nfs/v10

exporting 172.16.0.0/16:/nfs/v9

exporting 172.16.0.0/16:/nfs/v8

exporting 172.16.0.0/16:/nfs/v7

exporting 172.16.0.0/16:/nfs/v6

exporting 172.16.0.0/16:/nfs/v5

exporting 172.16.0.0/16:/nfs/v4

exporting 172.16.0.0/16:/nfs/v3

exporting 172.16.0.0/16:/nfs/v2

exporting 172.16.0.0/16:/nfs/v1

Or check with showmount -e:

[root@k8s-master-01 nfs]# showmount -e

Export list for k8s-master-01:

/nfs/v10 172.16.1.0/16

/nfs/v9 172.16.1.0/16

/nfs/v8 172.16.1.0/16

/nfs/v7 172.16.1.0/16

/nfs/v6 172.16.1.0/16

/nfs/v5 172.16.1.0/16

/nfs/v4 172.16.1.0/16

/nfs/v3 172.16.1.0/16

/nfs/v2 172.16.1.0/16

/nfs/v1 172.16.1.0/16

# Start NFS and enable it at boot (on all nodes)
systemctl enable --now nfs

# 3. Use NFS from Kubernetes

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs
spec:
  selector:
    matchLabels:
      app: nfs
  template:
    metadata:
      labels:
        app: nfs
    spec:
      nodeName: gdx3
      containers:
        - name: mysql
          image: mysql:5.7
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
          volumeMounts:
            - mountPath: /var/lib/mysql
              name: nfs
      volumes:
        - name: nfs
          nfs:
            path: /nfs/v1
            server: 172.16.1.11

# 4. Verify the NFS mount
# Open a shell in the nfs Pod and log in to MySQL

[root@k8s-master1 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

emptydir-5dc7dcd9fd-zrb99 2/2 Running 0 9h

nfs-85dff7bb6b-8pgrp 1/1 Running 0 60m

statefulset-test-0 1/1 Running 0 27h

test-6799fc88d8-t6jn6 1/1 Running 0 142m

test-tag 1/1 Running 0 3d15h

wordpress-test-0 2/2 Running 0 26h

[root@k8s-master1 v1]# kubectl exec -it nfs-85dff7bb6b-8pgrp -- bash

root@nfs-85dff7bb6b-8pgrp:/# mysql -u root -p123456

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.33 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database discuz; # create a database

Query OK, 1 row affected (0.01 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| discuz |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

# Check the exported directory on the host

[root@k8s-master1 ~]# cd /nfs/v1

[root@k8s-master1 v1]# ll

总用量 188484

-rw-r----- 1 polkitd ssh_keys 56 4月 4 19:06 auto.cnf

-rw------- 1 polkitd ssh_keys 1680 4月 4 19:06 ca-key.pem

-rw-r--r-- 1 polkitd ssh_keys 1112 4月 4 19:06 ca.pem

-rw-r--r-- 1 polkitd ssh_keys 1112 4月 4 19:06 client-cert.pem

-rw------- 1 polkitd ssh_keys 1680 4月 4 19:06 client-key.pem

drwxr-x--- 2 polkitd ssh_keys 20 4月 4 21:07 discuz # the database directory has been created

-rw-r----- 1 polkitd ssh_keys 692 4月 4 20:04 ib_buffer_pool

-rw-r----- 1 polkitd ssh_keys 79691776 4月 4 20:04 ibdata1

-rw-r----- 1 polkitd ssh_keys 50331648 4月 4 20:04 ib_logfile0

-rw-r----- 1 polkitd ssh_keys 50331648 4月 4 19:06 ib_logfile1

-rw-r----- 1 polkitd ssh_keys 12582912 4月 4 20:05 ibtmp1

drwxr-x--- 2 polkitd ssh_keys 4096 4月 4 19:06 mysql

drwxr-x--- 2 polkitd ssh_keys 8192 4月 4 19:06 performance_schema

-rw------- 1 polkitd ssh_keys 1680 4月 4 19:06 private_key.pem

-rw-r--r-- 1 polkitd ssh_keys 452 4月 4 19:06 public_key.pem

-rw-r--r-- 1 polkitd ssh_keys 1112 4月 4 19:06 server-cert.pem

-rw------- 1 polkitd ssh_keys 1680 4月 4 19:06 server-key.pem

drwxr-x--- 2 polkitd ssh_keys 8192 4月 4 19:06 sys

# Delete the Pod as a test
[root@k8s-master1 discuz]# kubectl delete pods nfs-85dff7bb6b-8pgrp
pod "nfs-85dff7bb6b-8pgrp" deleted

# Back on the host, the discuz database directory is still present in the exported directory

4.PV/PVC

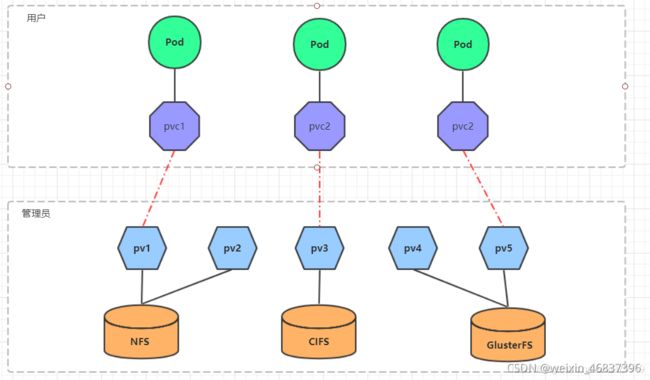

使用NFS提供存储,此时就要求用户会搭建NFS系统,并且会在yaml配置nfs。由于kubernetes支持的存储系统有很多,要求客户全都掌握,显然不现实。为了能够屏蔽底层存储实现的细节,方便用户使用, kubernetes引入PV和PVC两种资源对象。

PersistentVolume(PV)是集群中已由管理员配置的一段网络存储。 集群中的资源就像一个节点是一个集群资源。 PV是诸如卷之类的卷插件,但是具有独立于使用PV的任何单个pod的生命周期。 该API对象捕获存储的实现细节,即NFS,iSCSI或云提供商特定的存储系统 。

PersistentVolumeClaim(PVC)是用户存储的请求。PVC的使用逻辑:在pod中定义一个存储卷(该存储卷类型为PVC),定义的时候直接指定大小,pvc必须与对应的pv建立关系,pvc会根据定义去pv申请,而pv是由存储空间创建出来的。pv和pvc是kubernetes抽象出来的一种存储资源。

pv : 相当于磁盘分区

pvc: 相当于磁盘请求

PV: 持久化卷的意思,是对底层的共享存储的一种抽象PVC(Persistent Volume Claim)是持久卷请求于存储需求的一种声明(PVC其实就是用户向kubernetes系统发出的一种资源需求申请。)

参考语句:kubectl explain pv.spec.nfs

参考官网:https://kubernetes.io/zh/docs/concepts/storage/persistent-volumes/

使用了PV和PVC之后,工作可以得到进一步的细分:

- 存储:存储工程师维护

- PV: kubernetes管理员维护

- PVC:kubernetes用户维护

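1) PV access modes (accessModes)

| Mode | Description |
|---|---|
| ReadWriteOnce (RWO) | Read-write, but can be mounted by only a single node. |
| ReadOnlyMany (ROX) | Read-only; can be mounted by many nodes. |
| ReadWriteMany (RWX) | Read-write; can be mounted and shared by many nodes. |

Not every storage backend supports all three modes. Shared read-write access in particular is supported by few backends; NFS is the one most commonly used for it. When a PVC is bound to a PV, the match is normally made on two criteria: the requested storage size and the access mode.

In the command-line interface (CLI), the access modes are abbreviated as follows:

- RWO - ReadWriteOnce
- ROX - ReadOnlyMany
- RWX - ReadWriteMany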

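2) PV reclaim policy (persistentVolumeReclaimPolicy)

| Policy | Description |
|---|---|
| Retain | Nothing is cleaned up; the volume is kept and must be cleaned manually. |
| Recycle | Basic scrub: the data in the volume is removed (equivalent to rm -rf /thevolume/*) so that the PV can be claimed again. |
| Delete | The backing storage asset is deleted, for example the AWS EBS volume (supported only by AWS EBS, GCE PD, Azure Disk, and Cinder). |

Currently only NFS and hostPath support Recycle. AWS EBS, GCE PD, Azure Disk, and Cinder volumes support Delete.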

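3) PV status

| Status | Description |
|---|---|
| Available | Free; not yet bound to any PVC. |
| Bound | Bound to a PVC. |
| Released | The PVC has been deleted, but the reclaim policy has not been carried out yet. |
| Failed | An error occurred (automatic reclamation failed). |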

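Key PV configuration parameters:

- Storage type
  The type of the actual underlying storage. Kubernetes supports many storage types, and each is configured differently.
- Capacity (capacity)
  Currently only the storage size can be set (storage=1Gi); metrics such as IOPS and throughput may be added in the future.
- Access modes (accessModes)
  Describe the access rights an application has to the storage resource:
  - ReadWriteOnce (RWO): read-write, mountable by a single node only
  - ReadOnlyMany (ROX): read-only, mountable by many nodes
  - ReadWriteMany (RWX): read-write, mountable by many nodes

  Note that different underlying storage types may support different access modes.
- Reclaim policy (persistentVolumeReclaimPolicy)
  What happens to the PV once it is no longer in use. Three policies are supported:
  - Retain: keep the data; an administrator has to clean it up manually
  - Recycle: remove the data in the PV, equivalent to running rm -rf /thevolume/*
  - Delete: the backend storage attached to the PV deletes the volume; this is mostly seen with cloud providers' storage services

  Note that different underlying storage types may support different reclaim policies.
- Storage class
  A PV can name a storage class through the storageClassName parameter.
  - A PV with a particular class can only be bound to a PVC that requests that class.
  - A PV with no class can only be bound to a PVC that requests no class.
- Status (status)
  During its lifecycle a PV is in one of four phases:
  - Available: free, not yet bound to any PVC
  - Bound: the PV is bound to a PVC
  - Released: the PVC has been deleted, but the resource has not yet been reclaimed by the cluster
  - Failed: automatic reclamation of the PV failed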
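The storage class rule above can be illustrated with a statically provisioned pair. This is a minimal sketch, assuming an NFS export like the ones configured earlier; the names manual, pv-manual, and pvc-manual and the sizes are hypothetical. No StorageClass object called manual has to exist for this kind of static binding, because the name is only compared between the PV and the PVC.

```yaml
# Hypothetical names (manual, pv-manual, pvc-manual); server and path follow the article's NFS setup.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-manual
spec:
  storageClassName: manual        # class carried by this PV
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /nfs/v5
    server: 172.16.1.11
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-manual
spec:
  storageClassName: manual        # must equal the PV's class for the two to bind
  accessModes:
    - ReadWriteMany               # must be one of the PV's access modes
  resources:
    requests:
      storage: 2Gi                # must not exceed the PV's capacity
```

If the storageClassName line were dropped from only one of the two objects, the claim would be expected to stay Pending, since a classless PVC only matches classless PVs (unless the cluster defines a default storage class).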

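4) Creating a PV (a PV is a cluster-scoped resource)

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
spec:
  nfs: # storage type; matches the real underlying storage
    path: /nfs/v2
    server: 172.16.1.11 # internal IP of the NFS server (the master node here, because NFS was set up on the master)
  capacity: # storage capacity; only the storage size can be set at present
    storage: 20Gi # size
  persistentVolumeReclaimPolicy: Retain # reclaim policy: keep the volume, no cleanup
  accessModes: # access modes offered by the PV
    - "ReadWriteOnce" # read-write, mountable by a single node only
    - "ReadWriteMany" # read-write, mountable by many nodes

# List the PV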

[root@k8s-master1 ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv1 20Gi RWO,RWX Retain Available 53s

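PVC field reference: kubectl explain PersistentVolumeClaim.spec.resources

4) Binding a PVC to a PV (a PVC is a namespace-scoped resource)

In short, a PVC is the request through which a user claims and consumes a PV.

# Create the PVC
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  accessModes:
    - "ReadWriteMany" # must be one of the PV's access modes
  resources:
    requests:
      storage: "6Gi" # must not exceed the PV's capacity

# List the PV again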

[root@k8s-master1 ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv1 20Gi RWO,RWX Retain Bound default/pvc1 29m

# Note: pv1 is now Bound to the claim default/pvc1

# List the PVC

[root@k8s-master1 ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc1 Bound pv1 20Gi RWO,RWX 2m42s

Test

Create the PV:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
spec:
  nfs: # mount over NFS
    path: /nfs/v2
    server: 172.16.1.11 # internal IP of the NFS server (the master node here, because NFS was set up on the master)
  capacity: # capacity
    storage: 20Gi # size
  persistentVolumeReclaimPolicy: Retain # reclaim policy: keep the volume, no cleanup
  accessModes: # access modes offered by the PV
    - "ReadWriteOnce" # read-write, mountable by a single node only
    - "ReadWriteMany" # read-write, mountable by many nodes

Create the PVC:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  accessModes:
    - "ReadWriteMany" # must be one of the PV's access modes
  resources:
    requests:
      storage: "6Gi" # must not exceed the PV's capacity

[root@k8s-master-01 ~]# cat pv-pvc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pv-pvc
spec:
  selector:
    matchLabels:
      app: pv-pvc
  template:
    metadata:
      labels:
        app: pv-pvc
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: pv-pvc
      volumes:
        - name: pv-pvc
          persistentVolumeClaim:
            claimName: pvc1 # must match the name of the PVC created above

[root@k8s-master-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 0 122m

pv-pvc-7c967f745d-gxqp6 1/1 Running 0 25s

wordpress-0 2/2 Running 2 30h

[root@k8s-master-01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-58774b94b7-5tl6k 1/1 Running 0 122m 10.244.1.69 k8s-node-01 <none> <none>

pv-pvc-7c967f745d-gxqp6 1/1 Running 0 37s 10.244.1.74 k8s-node-01 <none> <none>

wordpress-0 2/2 Running 2 30h 10.244.2.80 k8s-nonde-02 <none> <none>

Access the Pod:

[root@k8s-master-01 ~]# curl 10.244.1.74

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx/1.21.1</center>

</body>

</html>

[root@k8s-master-01 ~]# cd /nfs/v2

Write an index.html:

[root@k8s-master-01 v2]# echo "string" > index.html

[root@k8s-master-01 v2]# curl 10.244.1.74

string

Scale the Deployment out to 6 replicas:

[root@k8s-master-01 v2]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-58774b94b7-5tl6k 1/1 Running 0 131m 10.244.1.69 k8s-node-01 <none> <none>

pv-pvc-7c967f745d-4pp2t 0/1 ContainerCreating 0 7s <none> k8s-node-01 <none> <none>

pv-pvc-7c967f745d-bb8rf 0/1 ContainerCreating 0 7s <none> k8s-nonde-02 <none> <none>

pv-pvc-7c967f745d-gxqp6 1/1 Running 0 9m50s 10.244.1.74 k8s-node-01 <none> <none>

pv-pvc-7c967f745d-nwrhs 0/1 ContainerCreating 0 7s <none> k8s-nonde-02 <none> <none>

pv-pvc-7c967f745d-pc9mb 0/1 ContainerCreating 0 7s <none> k8s-node-01 <none> <none>

pv-pvc-7c967f745d-v42sc 0/1 ContainerCreating 0 7s <none> k8s-nonde-02 <none> <none>

wordpress-0 2/2 Running 2 30h 10.244.2.80 k8s-nonde-02 <none> <none>

Requesting the IP of any of the Pods returns "string":

....

[root@k8s-master-01 v2]# curl 10.244.1.76

string

....

III. Hands-on: deploying Discuz (NFS managed through PV/PVC)

# 1. Write the manifest
[root@k8s-master1 discuz]# vim discuz-pv-pvc.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-mysql
spec:
  nfs:
    path: /nfs/v3
    server: 172.16.1.11
  capacity:
    storage: 20Gi
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - "ReadWriteOnce"
    - "ReadWriteMany"
---
apiVersion: v1
kind: Namespace
metadata:
  name: mysql
---
kind: Service
apiVersion: v1
metadata:
  name: mysql-svc
  namespace: mysql
spec:
  ports:
    - port: 3306
      targetPort: 3306
      name: mysql
      protocol: TCP
  selector:
    app: mysql
    deploy: discuz
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-mysql-pvc
  namespace: mysql
spec:
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "20Gi"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-deployment
  namespace: mysql
spec:
  selector:
    matchLabels:
      app: mysql
      deploy: discuz
  template:
    metadata:
      labels:
        app: mysql
        deploy: discuz
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          livenessProbe:
            tcpSocket:
              port: 3306
          readinessProbe:
            tcpSocket:
              port: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
            - name: MYSQL_DATABASE
              value: "discuz"
          volumeMounts:
            - mountPath: /var/lib/mysql
              name: mysql-data
      volumes:
        - name: mysql-data
          persistentVolumeClaim:
            claimName: pv-mysql-pvc
---
kind: Namespace
apiVersion: v1
metadata:
  name: discuz
---
kind: Service
apiVersion: v1
metadata:
  name: discuz-svc
  namespace: discuz
spec:
  clusterIP: None
  ports:
    - port: 80
      targetPort: 80
      name: http
  selector:
    app: discuz
    deploy: discuz
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-discuz
spec:
  nfs:
    path: /nfs/v4
    server: 172.16.1.11
  capacity:
    storage: 20Gi
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - "ReadWriteOnce"
    - "ReadWriteMany"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-discuz-pvc
  namespace: discuz
spec:
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "18Gi"
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: discuz-deployment
  namespace: discuz
spec:
  replicas: 5
  selector:
    matchLabels:
      app: discuz
      deploy: discuz
  template:
    metadata:
      labels:
        app: discuz
        deploy: discuz
    spec:
      containers:
        - name: php
          image: elaina0808/lnmp-php:v6
          livenessProbe:
            tcpSocket:
              port: 9000
          readinessProbe:
            tcpSocket:
              port: 9000
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: discuz-data
        - name: nginx
          image: elaina0808/lnmp-nginx:v9
          livenessProbe:
            httpGet:
              port: 80
              path: /
          readinessProbe:
            httpGet:
              port: 80
              path: /
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: discuz-data
      volumes:
        - name: discuz-data
          persistentVolumeClaim:
            claimName: pv-discuz-pvc
---
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: discuz-ingress
  namespace: discuz
spec:
  tls:
    - hosts:
        - www.discuz.cluster.local.com
      secretName: discuz-secret
  rules:
    - host: www.discuz.cluster.local.com
      http:
        paths:
          - backend:
              serviceName: discuz-svc
              servicePort: 80

# 2. Deploy Discuz
[root@k8s-master1 discuz]# kubectl apply -f discuz-pv-pvc.yaml

# Move the Discuz archive (it contains the upload directory) to /nfs/v4
[root@k8s-master1 discuz]# mv Discuz_X3.4_SC_UTF8_20210320.zip /nfs/v4

# Unpack the archive

[root@k8s-master1 discuz]# cd /nfs/v4

[root@k8s-master1 v4]# unzip Discuz_X3.4_SC_UTF8_20210320.zip

[root@k8s-master1 v4]# ll

总用量 12048

-rw-r--r-- 1 root root 12330468 4月 7 2021 Discuz_X3.4_SC_UTF8_20210320.zip

drwxr-xr-x 13 root root 4096 3月 22 19:44 upload

[root@k8s-master1 v4]# mv upload/* .

[root@k8s-master1 v4]# ll

总用量 12112

-rw-r--r-- 1 root root 2834 3月 22 19:44 admin.php

drwxr-xr-x 9 root root 135 3月 22 19:44 api

-rw-r--r-- 1 root root 727 3月 22 19:44 api.php

drwxr-xr-x 2 root root 23 3月 22 19:44 archiver

drwxr-xr-x 2 root root 90 3月 22 19:44 config

-rw-r--r-- 1 root root 1040 3月 22 19:44 connect.php

-rw-r--r-- 1 root root 106 3月 22 19:44 crossdomain.xml

drwxr-xr-x 12 root root 178 3月 22 19:44 data

-rw-r--r-- 1 root root 5558 3月 20 10:36 favicon.ico

-rw-r--r-- 1 root root 2245 3月 22 19:44 forum.php

-rw-r--r-- 1 root root 821 3月 22 19:44 group.php

-rw-r--r-- 1 root root 1280 3月 22 19:44 home.php

-rw-r--r-- 1 root root 6472 3月 22 19:44 index.php

drwxr-xr-x 5 root root 64 3月 22 19:44 install

drwxr-xr-x 2 root root 23 3月 22 19:44 m

-rw-r--r-- 1 root root 1025 3月 22 19:44 member.php

-rw-r--r-- 1 root root 2371 3月 22 19:44 misc.php

-rw-r--r-- 1 root root 1788 3月 22 19:44 plugin.php

-rw-r--r-- 1 root root 977 3月 22 19:44 portal.php

-rw-r--r-- 1 root root 582 3月 22 19:44 robots.txt

-rw-r--r-- 1 root root 1155 3月 22 19:44 search.php

drwxr-xr-x 10 root root 168 3月 22 19:44 source

drwxr-xr-x 7 root root 86 3月 22 19:44 static

drwxr-xr-x 3 root root 38 3月 22 19:44 template

drwxr-xr-x 7 root root 106 3月 22 19:44 uc_client

drwxr-xr-x 13 root root 241 3月 22 19:44 uc_server

drwxr-xr-x 2 root root 6 4月 4 18:15 upload

[root@k8s-master1 v4]# chmod -R o+w ../v4 # give other users write permission on every file

# Check the Ingress host name

[root@k8s-master1 discuz]# kubectl get ingress -n discuz

NAME CLASS HOSTS ADDRESS PORTS AGE

discuz-ingress <none> www.discuz.cluster.local.com 192.168.12.12 80, 443 4m25s

# Check the ingress controller's NodePort

[root@k8s-master1 discuz]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.101.179.113 <none> 80:30655/TCP,443:30480/TCP 3h29m

ingress-nginx-controller-admission ClusterIP 10.98.44.67 <none> 443/TCP 3h29m

# Check that the Pods started normally

[root@k8s-master1 discuz]# kubectl get pods -n discuz

NAME READY STATUS RESTARTS AGE

discuz-deployment-577d4d9b9-2phtj 2/2 Running 0 106m

discuz-deployment-577d4d9b9-g4pv4 2/2 Running 0 106m

discuz-deployment-577d4d9b9-mcptb 2/2 Running 0 106m

discuz-deployment-577d4d9b9-v8hrw 2/2 Running 0 106m

discuz-deployment-577d4d9b9-wcqzn 2/2 Running 0 106m

# 3. Test access in a browser

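4. StorageClass

A StorageClass automatically creates a PV that meets the requirements of a PVC:

1. PVs are created on demand from PVCs.
2. Less storage is wasted.

Helm is the package manager for Kubernetes, comparable to yum on Linux. GitHub repository: https://github.com/helm/helm

Downloading Helm

# Download Helm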

[root@k8s-m-01 ~]# wget https://get.helm.sh/helm-v3.3.4-linux-amd64.tar.gz

[root@k8s-m-01 ~]# tar -xf helm-v3.3.4-linux-amd64.tar.gz

[root@k8s-m-01 ~]# cd linux-amd64/

[root@k8s-m-01 ~]# for i in m1 m2 m3;do scp helm root@$i:/usr/local/bin/; done

# Verify the installation

[root@k8s-m-01 ~]# helm

The Kubernetes package manager

Common actions for Helm:

- helm search: search for charts

- helm pull: download a chart to your local directory to view

- helm install: upload the chart to Kubernetes

- helm list: list releases of charts

Installing the storage class

## Add a Helm repository

[root@k8s-m-01 ~]# helm repo add ckotzbauer https://ckotzbauer.github.io/helm-charts

"ckotzbauer" has been added to your repositories

[root@k8s-m-01 ~]# helm repo list

NAME URL

ckotzbauer https://ckotzbauer.github.io/helm-charts

## Option 1: deploy the NFS client provisioner and its storage class straight from the chart

[root@k8s-m-01 ~]# helm install nfs-client --set nfs.server=172.16.1.51 --set nfs.path=/nfs/v6 ckotzbauer/nfs-client-provisioner

NAME: nfs-client

LAST DEPLOYED: Fri Apr 9 09:33:23 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

## Check the deployment

[root@k8s-m-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-nfs-client-provisioner-56dddf479f-h9qqb 1/1 Running 0 41s

[root@k8s-m-01 ~]# kubectl get storageclasses.storage.k8s.io

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client cluster.local/nfs-client-nfs-client-provisioner Delete Immediate true 61s
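For orientation, the StorageClass listed in the output above corresponds to an object roughly like the following. This is a sketch reconstructed from the columns of that kubectl output, not the chart's actual template, so any further fields the chart sets are not shown.

```yaml
# Sketch reconstructed from the kubectl output above, not copied from the chart templates.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: cluster.local/nfs-client-nfs-client-provisioner  # must match the name the provisioner Pod registers
reclaimPolicy: Delete            # policy given to dynamically created PVs
volumeBindingMode: Immediate     # provision and bind as soon as the PVC is created
allowVolumeExpansion: true
```

A PVC selects this class through spec.storageClassName: nfs-client, and the provisioner Pod then creates a matching PV backed by a subdirectory of the NFS export.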

## Option 2: pull the chart and install it with an edited values.yaml (recommended); the access mode is set to ReadWriteMany

### Pull the chart
[root@k8s-m-01 /opt]# helm pull ckotzbauer/nfs-client-provisioner

### Unpack it
[root@k8s-m-01 /opt]# tar -xf nfs-client-provisioner-1.0.2.tgz

### Edit values.yaml
[root@k8s-m-01 /opt]# cd nfs-client-provisioner/
[root@k8s-m-01 /opt/nfs-client-provisioner]# vim values.yaml
nfs:
  server: 172.16.1.51
  path: /nfs/v6
storageClass:
  accessModes: ReadWriteMany
  reclaimPolicy: Retain

### Install

[root@k8s-m-01 /opt/nfs-client-provisioner]# helm install nfs-client ./

NAME: nfs-client

LAST DEPLOYED: Fri Apr 9 09:45:47 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

Testing the storage class

## Create a PVC
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-discuz-pvc-sc
spec:
  storageClassName: nfs-client
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "18Gi"

## List the PV and PVC

[root@k8s-m-01 /opt/discuz]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-589b3377-40cf-4f83-ab06-33bbad83013b 18Gi RWX Retain Bound default/pv-discuz-pvc-sc nfs-client 2m35s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pv-discuz-pvc-sc Bound pvc-589b3377-40cf-4f83-ab06-33bbad83013b 18Gi RWX nfs-client 2m35s

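## Deploy Discuz using the storage class

#########################################################################################
# 1. Deploy MySQL
#    1. Create the namespace
#    2. Create a Service for load balancing
#    3. Deploy the MySQL instance with a controller
###
# 2. Deploy the Discuz application
#    1. Create the namespace
#    2. Create a Service for load balancing (headless Service)
#    3. Create the workload and mount the code
#    4. Create an Ingress for host-based routing (HTTPS)
###
# 3. Connecting the services
#    1. Discuz connects to MySQL ---> mysql-svc.mysql.svc.cluster.local
#########################################################################################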

apiVersion: v1
kind: Namespace
metadata:
  name: mysql
---
kind: Service
apiVersion: v1
metadata:
  name: mysql-svc
  namespace: mysql
spec:
  ports:
    - port: 3306
      targetPort: 3306
      name: mysql
      protocol: TCP
  selector:
    app: mysql
    deploy: discuz
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-mysql-pvc
  namespace: mysql
spec:
  storageClassName: nfs-client
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "20Gi"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-deployment
  namespace: mysql
spec:
  selector:
    matchLabels:
      app: mysql
      deploy: discuz
  template:
    metadata:
      labels:
        app: mysql
        deploy: discuz
    spec:
      nodeName: k8s-m-02
      containers:
        - name: mysql
          image: mysql:5.7
          livenessProbe:
            tcpSocket:
              port: 3306
          readinessProbe:
            tcpSocket:
              port: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
            - name: MYSQL_DATABASE
              value: "discuz"
          volumeMounts:
            - mountPath: /var/lib/mysql
              name: mysql-data
      volumes:
        - name: mysql-data
          persistentVolumeClaim:
            claimName: pv-mysql-pvc
---
kind: Namespace
apiVersion: v1
metadata:
  name: discuz
---
kind: Service
apiVersion: v1
metadata:
  name: discuz-svc
  namespace: discuz
spec:
  clusterIP: None
  ports:
    - port: 80
      targetPort: 80
      name: http
  selector:
    app: discuz
    deploy: discuz
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-discuz-pvc
  namespace: discuz
spec:
  storageClassName: nfs-client
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "18Gi"
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: discuz-deployment
  namespace: discuz
spec:
  replicas: 5
  selector:
    matchLabels:
      app: discuz
      deploy: discuz
  template:
    metadata:
      labels:
        app: discuz
        deploy: discuz
    spec:
      nodeName: k8s-m-03
      containers:
        - name: php
          image: alvinos/php:wordpress-v2
          livenessProbe:
            tcpSocket:
              port: 9000
          readinessProbe:
            tcpSocket:
              port: 9000
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: discuz-data
        - name: nginx
          image: alvinos/nginx:wordpress-v2
          livenessProbe:
            httpGet:
              port: 80
              path: /
          readinessProbe:
            httpGet:
              port: 80
              path: /
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: discuz-data
      volumes:
        - name: discuz-data
          persistentVolumeClaim:
            claimName: pv-discuz-pvc
---
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: discuz-ingress
  namespace: discuz
spec:
  tls:
    - hosts:
        - www.discuz.cluster.local.com
      secretName: discuz-secret
  rules:
    - host: www.discuz.cluster.local.com
      http:
        paths:
          - backend:
              serviceName: discuz-svc
              servicePort: 80

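Error: the PVC never binds to a PV; it stays Pending instead of becoming Bound.

Fix:

On Kubernetes 1.20.1 and later, change the kube-apiserver configuration: edit /etc/kubernetes/manifests/kube-apiserver.yaml and add the flag - --feature-gates=RemoveSelfLink=false. This gate can no longer be disabled from Kubernetes 1.24 on, so the workaround applies to 1.20 through 1.23.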

[root@k8s-master-01 opt]# cat /etc/kubernetes/manifests/kube-apiserver.yaml |grep feature-gates=RemoveSelfLink=false

- --feature-gates=RemoveSelfLink=false
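To show where the flag goes, here is a trimmed excerpt of what the static Pod manifest might look like after the edit. It assumes a kubeadm-style layout; all other flags and fields of the real file are omitted and must be left as they are.

```yaml
# Excerpt of /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm-style layout assumed).
# Only the added line matters; every existing flag and field stays unchanged.
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        - --feature-gates=RemoveSelfLink=false   # added line
        # ... the existing flags continue here
```

Because this is a static Pod, the kubelet notices the file change and restarts the API server on its own; no kubectl apply is needed.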

List the PV and PVC:

[root@k8s-master-01 v6]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv1 1Gi RWX Retain Terminating default/pvc 40h

persistentvolume/pv7 5Gi RWX Retain Available 16h

persistentvolume/pvc-24e4250f-cff0-439d-9858-05af6022fd49 1Gi RWX Retain Bound default/pv-discuz-pvc-sc nfs-client 5m33s

persistentvolume/pvc-47dff24b-d636-47d3-8b21-b9e718dc1552 1Gi RWX Retain Bound default/sc nfs-client 5m33s

persistentvolume/pvc-4a4bcb76-d86e-48d1-963d-f5ef20c8fb10 1Gi RWX Retain Bound default/sccccc nfs-client 5m33s

persistentvolume/pvc-dbbe1516-3500-4f2b-b863-ab9de69f74bf 1Gi RWX Retain Bound default/pv-discuz-pvc nfs-client 5m33s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pv-discuz-pvc Bound pvc-dbbe1516-3500-4f2b-b863-ab9de69f74bf 1Gi RWX nfs-client 17h

persistentvolumeclaim/pv-discuz-pvc-sc Bound pvc-24e4250f-cff0-439d-9858-05af6022fd49 1Gi RWX nfs-client 17h

persistentvolumeclaim/pvc Bound pv1 20Gi RWX 39h

persistentvolumeclaim/sc Bound pvc-47dff24b-d636-47d3-8b21-b9e718dc1552 1Gi RWX nfs-client 16h

persistentvolumeclaim/sccccc Bound pvc-4a4bcb76-d86e-48d1-963d-f5ef20c8fb10 1Gi RWX nfs-client 13m

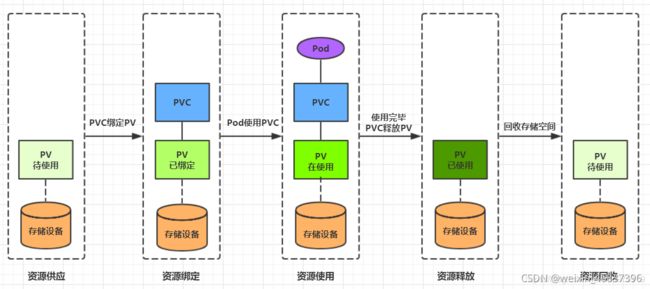

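IV. Lifecycle

PVCs and PVs map one to one, and their interaction follows this lifecycle:

- Provisioning: an administrator manually creates the underlying storage and the PV.
- Binding: the user creates a PVC, and Kubernetes looks for a PV that satisfies the claim and binds the two.
  Once the user has defined a PVC, the system picks, from the existing PVs, one that satisfies the PVC's storage request.
  - If one is found, the PV is bound to the PVC and the user's application can use the PVC.
  - If none is found, the PVC stays Pending indefinitely until an administrator creates a PV that meets its requirements.

  Once a PV is bound to a PVC, it is used exclusively by that PVC and cannot be bound to any other.
- Using: the user uses the PVC in a Pod like any other volume.
  The Pod references the PVC in a volume definition and mounts it at a path inside the container.
- Releasing: the user deletes the PVC to release the PV.
  When the storage is no longer needed, the user can delete the PVC. The PV bound to it is marked Released but cannot be bound to another PVC right away: data written through the previous PVC may still be on the storage device, and the PV can be used again only after that data has been cleaned up.
- Reclaiming: Kubernetes reclaims the resource according to the PV's reclaim policy.
  The administrator sets a reclaim policy on the PV that determines what happens to leftover data after the bound PVC releases it. Only after the PV's storage has been reclaimed can it be bound to and used by a new PVC.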

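V. Configuration storage (the Kubernetes configuration center)

1. ConfigMap

A ConfigMap is a special kind of volume whose main purpose is to store configuration data.

In production, configuration files often have to be changed. Doing this the traditional way disrupts the running service and involves many manual steps. To address this, Kubernetes introduced `ConfigMap` in version 1.2 to separate an application's configuration from its program. This makes the application image reusable and lets different configurations drive different behaviour. The application is packaged as a container image, and its configuration is injected when the container is created, either through environment variables or through mounted files. `ConfigMap and Secret act as the configuration center for applications in Kubernetes: they solve the problem of getting configuration into a container, support hot updates for mounted files, and Secret additionally keeps sensitive values in base64-encoded form.` Both are very handy features of Kubernetes.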
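The environment-variable route mentioned above can be sketched as follows. All names here (app-settings, LOG_LEVEL, env-demo) are hypothetical and chosen only for illustration.

```yaml
# Hypothetical names (app-settings, LOG_LEVEL, env-demo) used only for illustration.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-settings
data:
  LOG_LEVEL: "debug"
---
apiVersion: v1
kind: Pod
metadata:
  name: env-demo
spec:
  containers:
    - name: nginx
      image: nginx
      env:
        - name: LOG_LEVEL              # variable name inside the container
          valueFrom:
            configMapKeyRef:
              name: app-settings       # ConfigMap to read from
              key: LOG_LEVEL           # key whose value is injected
```

Values injected this way are read once when the container starts, so a later change to the ConfigMap only takes effect after the Pod is recreated.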

1. Creating a ConfigMap

# Option 1: from a file

[root@k8s-m-01 ~]# ll

-rw-r--r-- 1 root root 719 Aug 10 18:47 deployment.yaml

[root@k8s-m-01 ~]# kubectl create configmap test --from-file=deployment.yaml # create
[root@k8s-m-01 ~]# kubectl get configmaps # list

NAME DATA AGE

kube-root-ca.crt 1 11d

test 1 73s

# Option 2: from literal values
[root@k8s-m-01 ~]# kubectl create configmap test1 --from-literal=MSSQL_PASSWIRD=123 # create
configmap/test1 created
[root@k8s-m-01 ~]# kubectl get configmaps # list

NAME DATA AGE

kube-root-ca.crt 1 11d

test 1 4m40s

test1 1 5s

[root@k8s-m-01 ~]# kubectl describe configmaps test1 # show details

Name: test1

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

MSSQL_PASSWIRD:

----

123

Events: <none>

# Option 3: from a directory
[root@k8s-m-01 ~]# kubectl create configmap test03 --from-file=k8s/
[root@k8s-m-01 ~]# kubectl get configmaps

NAME DATA AGE

kube-root-ca.crt 1 11d

test 1 14m

test02 1 5m23s

test03 24 20s # the k8s directory contains 24 YAML files

test1 1 9m25s

# Option 4: from a manifest
[root@k8s-m-01 ~]# vim config.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: test02
data:
  linux12: '12'

[root@k8s-m-01 ~]# kubectl apply -f config.yaml

configmap/test02 created

[root@k8s-m-01 ~]# kubectl get configmaps

NAME DATA AGE

kube-root-ca.crt 1 11d

test 1 8m40s

test02 1 3s

test1 1 4m5s

[root@k8s-m-01 ~]# kubectl describe configmaps test02

Name: test02

Namespace: default

Labels: <none>

Annotations: <none>

# Delete ConfigMaps
[root@k8s-m-01 ~]# kubectl get configmaps # list

NAME DATA AGE

kube-root-ca.crt 1 11d

test 1 14m

test02 1 5m23s

test03 24 20s

test1 1 9m25s

[root@k8s-m-01 ~]# kubectl delete configmaps test03 # delete

configmap "test03" deleted

[root@k8s-m-01 ~]# kubectl delete configmaps test02

configmap "test02" deleted

[root@k8s-m-01 ~]# kubectl get configmaps # list again

NAME DATA AGE

kube-root-ca.crt 1 11d

test 1 17m

test1 1 12m

2. Test

Create the ConfigMap together with the Service, PVC, and Deployment used for the test:

[root@k8s-master-01 opt]# cat discuz-cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default.conf: | # | (or |-) starts a multi-line block of text
    server {
        listen 80;
        listen [::]:80;
        server_name _;
        location / {
            root /usr/share/nginx/html;
            index index.html index.php;
        }
        location ~ \.php$ {
            root /usr/share/nginx/html;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html$fastcgi_script_name;
            include fastcgi_params;
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-config
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30089
  selector:
    app: nginx-config
  type: NodePort
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-config
spec:
  storageClassName: nfs-client
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "4Gi"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-config
spec:
  selector:
    matchLabels:
      app: nginx-config
  template:
    metadata:
      labels:
        app: nginx-config
    spec:
      containers:
        - name: php
          image: alvinos/php:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: nginx-config
        - name: nginx
          image: alvinos/nginx:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: nginx-config
      volumes:
        - name: nginx-config
          persistentVolumeClaim:
            claimName: nginx-config

> Syntax reference: kubectl explain deployment.spec.template.spec.volumes.persistentVolumeClaim

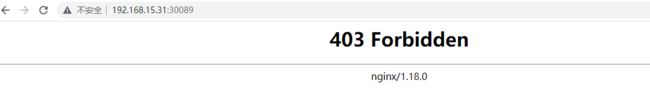

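Accessing the site returns an error because the PVC does not contain any data yet.

To fix this, write some data into the NFS directory that backs the PVC.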

[root@k8s-master-01 opt]# cd /nfs/v6

[root@k8s-master-01 v6]# ll

total 0

drwxrwxrwx 2 root root 6 Aug 29 15:42 default-nginx-config-pvc-201a9956-b89e-4c93-988a-9714064da9f2

[root@k8s-master-01 v6]# cd default-nginx-config-pvc-201a9956-b89e-4c93-988a-9714064da9f2/

[root@k8s-master-01 default-nginx-config-pvc-201a9956-b89e-4c93-988a-9714064da9f2]# ll

total 0

[root@k8s-master-01 default-nginx-config-pvc-201a9956-b89e-4c93-988a-9714064da9f2]# echo "index.php

[root@k8s-master-01 default-nginx-config-pvc-201a9956-b89e-4c93-988a-9714064da9f2]#

3. Using a ConfigMap

Syntax reference: kubectl explain deployment.spec.template.spec.volumes.configMap

4. Create a ConfigMap

[root@k8s-master-01 opt]# cat discuz-cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default.conf: |
    server {
        listen 80;
        listen [::]:80;
        server_name _;
        location / {
            root /usr/share/nginx/html;
            index index.html index.php;
        }
        location ~ \.php$ {
            root /usr/share/nginx/html;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html$fastcgi_script_name;
            include fastcgi_params;
        }
    }
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-config
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30089
  selector:
    app: nginx-config
  type: NodePort
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-config
spec:
  storageClassName: nfs-client
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "4Gi"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-config
spec:
  selector:
    matchLabels:
      app: nginx-config
  template:
    metadata:
      labels:
        app: nginx-config
    spec:
      containers:
        - name: php
          image: alvinos/php:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: nginx-config
        - name: nginx
          image: alvinos/nginx:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: nginx-config
            - mountPath: /etc/nginx/conf.d
              name: nginx-config-configmap
      volumes:
        - name: nginx-config
          persistentVolumeClaim:
            claimName: nginx-config
        - name: nginx-config-configmap  # a ConfigMap is consumed as a volume
          configMap:
            defaultMode: 0600           # default permissions for every projected file
            name: nginx-config
            items:
              - key: default.conf       # which key of the ConfigMap to project
                path: default.conf      # file path relative to the mountPath /etc/nginx/conf.d above
                mode: 0600              # permissions for this file only
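The defaultMode and mode values in this manifest are written in octal, which YAML accepts. JSON has no octal notation, so a manifest written in JSON needs the same permission as a decimal number. The following is a small sketch of that conversion; the helper name octal_to_decimal is made up for illustration.

```shell
#!/bin/sh
# Convert an octal permission (as written in YAML, e.g. 0600) into the
# decimal integer that a JSON manifest would need for mode/defaultMode.
octal_to_decimal() {
  # printf treats a numeric argument with a leading 0 as an octal constant.
  printf '%d\n' "$1"
}

octal_to_decimal 0600   # 384: owner read/write only
octal_to_decimal 0644   # 420: owner read/write, group and others read
```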

Deploy the application

[root@k8s-master-01 opt]# kubectl apply -f discuz-cm.yaml

configmap/nginx-config unchanged

service/nginx-config unchanged

persistentvolumeclaim/nginx-config unchanged

deployment.apps/nginx-config created

Check the pods

[root@k8s-master-01 opt]# kubectl get pods

NAME READY STATUS RE

nfs-58774b94b7-5tl6k 1/1 Running 3

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1

nginx-config-6fd69b8c74-lz8m4 2/2 Running 0

wordpress-0 2/2 Running 8

Check the resources created from the manifest

[root@k8s-master-01 opt]# kubectl get -f discuz-cm.yaml

NAME DATA AGE

configmap/nginx-config 1 4m13s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)

service/nginx-config NodePort 10.108.38.207 <none> 80:30089/

NAME STATUS VOLUME

persistentvolumeclaim/nginx-config Bound pvc-682736cd-9ee6-4168-96a3

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-config 1/1 1 1 41s

[root@k8s-master-01 opt]# kubectl get pvc

NAME STATUS VOLUME CA

nginx-config Bound pvc-682736cd-9ee6-4168-96a3-6d8331176265 4G

pv-discuz-pvc Bound pvc-dbbe1516-3500-4f2b-b863-ab9de69f74bf 1G

pv-discuz-pvc-sc Bound pvc-24e4250f-cff0-439d-9858-05af6022fd49 1G

pvc Bound pv1 20

sc Bound pvc-47dff24b-d636-47d3-8b21-b9e718dc1552 1

331176265]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11d

mysql NodePort 10.110.156.26 <none> 3306:32695/TCP 3d

nginx-config NodePort 10.108.38.207 <none> 80:30089/TCP

Enter the nginx container and check the mounted default.conf

[root@k8s-master-01 opt]# kubectl exec -it nginx-config-6fd69b8c74-lz8m4 -c nginx -- bash

[root@nginx-config-6fd69b8c74-lz8m4 nginx]# cd /etc/nginx/conf.d/

[root@nginx-config-6fd69b8c74-lz8m4 conf.d]# ll

total 0

lrwxrwxrwx 1 root root 19 Aug 29 08:36 default.conf -> ..data/default.conf

[root@nginx-config-6fd69b8c74-lz8m4 conf.d]# cat default.conf

server {

listen 80;

listen [::]:80;

server_name _;

location / {

root /usr/share/nginx/html;

index index.html index.php;

}

location ~ \.php$ {

root /usr/share/nginx/html;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html$fastcgi_script_name;

include fastcgi_params;

}

}

Go to the backing directory on the host

[root@k8s-master-01 opt]# cd /nfs/v6/default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265/

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# ll

total 4

-rw-r--r-- 1 root root 17 Aug 29 16:45 index.php

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# mkdir upload

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# mv index.php upload/

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# ll

total 0

drwxr-xr-x 2 root root 23 Aug 29 16:51 upload # index.php has been moved into upload/, so requests now return 403

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 3 44h

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1 24h

nginx-config-6fd69b8c74-lz8m4 2/2 Running 0 15m

wordpress-0 2/2 Running 8 3d

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# kubectl edit deployments.apps nginx-config

deployment.apps/nginx-config edited

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 3 44h

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1 24h

nginx-config-6fd69b8c74-7g4rs 0/2 ContainerCreating 0 4s

nginx-config-6fd69b8c74-j5tjd 0/2 ContainerCreating 0 4s

nginx-config-6fd69b8c74-lg6qk 0/2 ContainerCreating 0 3s

nginx-config-6fd69b8c74-lz8m4 2/2 Running 0 16m

nginx-config-6fd69b8c74-n8fqr 0/2 ContainerCreating 0 4s

wordpress-0 2/2 Running 8 3d

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 3 44h

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1 24h

nginx-config-6fd69b8c74-7g4rs 2/2 Running 0 15s

nginx-config-6fd69b8c74-j5tjd 2/2 Running 0 15s

nginx-config-6fd69b8c74-lg6qk 2/2 Running 0 14s

nginx-config-6fd69b8c74-lz8m4 2/2 Running 0 16m

nginx-config-6fd69b8c74-n8fqr 2/2 Running 0 15s

wordpress-0 2/2 Running 8 3d

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 3 44h

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1 24h

nginx-config-6fd69b8c74-7g4rs 2/2 Running 0 16s

nginx-config-6fd69b8c74-j5tjd 2/2 Running 0 16s

nginx-config-6fd69b8c74-lg6qk 2/2 Running 0 15s

nginx-config-6fd69b8c74-lz8m4 2/2 Running 0 16m

nginx-config-6fd69b8c74-n8fqr 2/2 Running 0 16s

wordpress-0 2/2 Running 8 3d

[root@k8s-master-01 default-nginx-config-pvc-682736cd-9ee6-4168-96a3-6d8331176265]#

Now edit the ConfigMap so that the site can be reached again

[root@k8s-master-01 opt]# vim discuz-cm.yaml

Change the nginx document root to /usr/share/nginx/html/upload

......

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default.conf: |
    server {
        listen 80;
        listen [::]:80;
        server_name _;
        location / {
            root /usr/share/nginx/html/upload;
            index index.html index.php;
        }
        location ~ \.php$ {
            root /usr/share/nginx/html/upload;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html/upload$fastcgi_script_name;
            include fastcgi_params;
        }
    }

.......

[root@k8s-master-01 opt]# kubectl apply -f discuz-cm.yaml

configmap/nginx-config configured

service/nginx-config unchanged

persistentvolumeclaim/nginx-config unchanged

deployment.apps/nginx-config unchanged

[root@k8s-master-01 opt]# kubectl get configmaps nginx-config

NAME DATA AGE

nginx-config 1 27m

[root@k8s-master-01 opt]# kubectl describe configmaps nginx-config

Name: nginx-config

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

default.conf:

----

server {

listen 80;

listen [::]:80;

server_name _;

location / {

root /usr/share/nginx/html/upload;

index index.html index.php;

}

location ~ \.php$ {

root /usr/share/nginx/html/upload;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html/upload$fastcgi_script_name;

include fastcgi_params;

}

}

Inside the nginx container, default.conf has been updated: every root now points at the upload directory

[root@k8s-master-01 opt]# kubectl exec -it nginx-config-6fd69b8c74-lz8m4 -c nginx -- bash

[root@nginx-config-6fd69b8c74-lz8m4 nginx]# ll

total 0

drwxrwxrwx 3 root root 20 Aug 29 08:51 html

[root@nginx-config-6fd69b8c74-lz8m4 nginx]# cd /etc/nginx/conf.d/

[root@nginx-config-6fd69b8c74-lz8m4 conf.d]# ll

total 0

lrwxrwxrwx 1 root root 19 Aug 29 08:36 default.conf -> ..data/default.conf

[root@nginx-config-6fd69b8c74-lz8m4 conf.d]# cat default.conf

server {

listen 80;

listen [::]:80;

server_name _;

location / {

root /usr/share/nginx/html/upload;

index index.html index.php;

}

location ~ \.php$ {

root /usr/share/nginx/html/upload;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html/upload$fastcgi_script_name;

include fastcgi_params;

}

}

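nginx only reads its configuration when it starts or reloads, so reload or restart nginx before the change takes effect.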

5. Mounting files from a ConfigMap

[root@k8s-master-01 opt]# cat discuz-test.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default.conf: |
    server {
        listen 80;
        listen [::]:80;
        server_name _;
        location / {
            root /usr/share/nginx/html/upload;
            index index.html index.php;
        }
        location ~ \.php$ {
            root /usr/share/nginx/html/upload;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html/upload$fastcgi_script_name;
            include fastcgi_params;
        }
    }
  index.php: |
    <?php
    phpinfo();
    ?>
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-config
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30089
  selector:
    app: nginx-config
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-config
spec:
  selector:
    matchLabels:
      app: nginx-config
  template:
    metadata:
      labels:
        app: nginx-config
    spec:
      containers:
        - name: php
          image: alvinos/php:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: nginx-config-configmap
        - name: nginx
          image: alvinos/nginx:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: nginx-config-configmap
            - mountPath: /etc/nginx/conf.d
              name: nginx-config-configmap
      volumes:
        - name: nginx-config-configmap
          configMap:
            name: nginx-config
            items:
              - key: default.conf
                path: default.conf
              - key: index.php
                path: index.php

[root@k8s-master-01 opt]# kubectl apply -f discuz-test.yaml

configmap/nginx-config created

service/nginx-config created

deployment.apps/nginx-config created

[root@k8s-master-01 opt]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 3 45h

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1 25h

nginx-config-97f5b47c6-cvc25 2/2 Running 0 6s

wordpress-0 2/2 Running 8 3d1h

[root@k8s-master-01 opt]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 3 45h

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1 25h

nginx-config-97f5b47c6-cvc25 2/2 Running 0 23s

wordpress-0 2/2 Running 8 3d1h

[root@k8s-master-01 opt]# kubectl exec -it nginx-config-97f5b47c6-cvc25 -c nginx -- bash

[root@nginx-config-97f5b47c6-cvc25 nginx]# cd /etc/nginx/conf.d/

[root@nginx-config-97f5b47c6-cvc25 conf.d]# ll

total 0

lrwxrwxrwx 1 root root 19 Aug 29 09:51 default.conf -> ..data/default.conf

lrwxrwxrwx 1 root root 16 Aug 29 09:51 index.php -> ..data/index.php

[root@nginx-config-97f5b47c6-cvc25 conf.d]# cd /usr/share/nginx/html/

[root@nginx-config-97f5b47c6-cvc25 html]# ll

total 0

lrwxrwxrwx 1 root root 19 Aug 29 09:51 default.conf -> ..data/default.conf

lrwxrwxrwx 1 root root 16 Aug 29 09:51 index.php -> ..data/index.php

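6. ConfigMap hot updates

When a ConfigMap is modified, the new content is synced to every container that mounts it as a volume. Only the files inside the container are synced; the application still has to reload them. If the mount uses the subPath parameter, hot updates do not work.

Mounting a ConfigMap on a directory hides whatever that directory contained before. To avoid hiding the existing content, use the subPath parameter (used here to mount a single file; it does not support hot updates).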
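The `default.conf -> ..data/default.conf` symlinks seen in the listings earlier hint at how the hot update works: the files live in a versioned directory, and an update repoints the `..data` symlink at a new directory. The sketch below simulates that layout in a temporary directory with plain shell. It is a simplified model, not the kubelet's code: the directory names ..v1 and ..v2 are made up (the kubelet uses timestamped names and an atomic rename), and a plain copy stands in for a subPath mount, which is bound to the file as it was when the container started.

```shell
#!/bin/sh
# Simulate the on-disk layout of a ConfigMap volume and one update.
vol=$(mktemp -d)

publish() {
  # $1 = version directory name (hypothetical), $2 = new file content
  mkdir "$vol/$1"
  printf '%s\n' "$2" > "$vol/$1/default.conf"
  ln -sfn "$1" "$vol/..data"            # repoint ..data at the new version
}

publish ..v1 'root /usr/share/nginx/html;'
ln -s ..data/default.conf "$vol/default.conf"   # what a directory mount exposes
cp "$vol/default.conf" "$vol/subpath-copy"      # stand-in for a subPath mount

publish ..v2 'root /usr/share/nginx/html/upload;'

cat "$vol/default.conf"     # follows ..data, so it shows the new root
cat "$vol/subpath-copy"     # still shows the old root
```

The directory under $vol is left in place for inspection; remove it with rm -rf when done.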

Using a ConfigMap as environment variables

kind: ConfigMap
apiVersion: v1
metadata:
  name: test-mysql
data:
  MYSQL_ROOT_PASSWORD: "123456"
  MYSQL_DATABASE: discuz
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: test-mysql
spec:
  selector:
    matchLabels:
      app: test-mysql
  template:
    metadata:
      labels:
        app: test-mysql
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          envFrom:              # import every key of the ConfigMap as an environment variable
            - configMapRef:
                name: test-mysql

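4. Restrictions when using ConfigMaps

1) A ConfigMap must be created before any Pod that references it.

2) A ConfigMap is namespaced: only Pods in the same namespace can reference it.

3) Quota management for ConfigMaps is not yet implemented.

4) The kubelet only supports ConfigMaps for Pods that are managed through the API server. Static Pods that the kubelet creates on its own node via --manifest-url or --config cannot reference a ConfigMap.

5) When a Pod mounts a ConfigMap as a volume (volumeMount) without subPath, it appears inside the container as a directory, not as a single file. After mounting, the directory contains one file per item defined in the ConfigMap; any files that were already in that directory are hidden by the mounted ConfigMap.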

7. Using the subPath parameter

[root@k8s-master-01 opt]# cat discuz-test.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default.conf: |
    server {
        listen 80;
        listen [::]:80;
        server_name _;
        location / {
            root /usr/share/nginx/html/upload;
            index index.html index.php;
        }
        location ~ \.php$ {
            root /usr/share/nginx/html/upload;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /usr/share/nginx/html/upload$fastcgi_script_name;
            include fastcgi_params;
        }
    }
  index.php: |
    <?php
    phpinfo();
    ?>
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-config
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30089
  selector:
    app: nginx-config
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-config
spec:
  selector:
    matchLabels:
      app: nginx-config
  template:
    metadata:
      labels:
        app: nginx-config
    spec:
      containers:
        - name: php
          image: alvinos/php:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html/index.php  # with subPath, mountPath must be the full path of the target file
              name: nginx-config-configmap
              subPath: index.php                          # path inside the volume to mount
        - name: nginx
          image: alvinos/nginx:wordpress-v2
          volumeMounts:
            - mountPath: /usr/share/nginx/html/index.php
              name: nginx-config-configmap
              subPath: index.php
      volumes:
        - name: nginx-config-configmap
          configMap:
            name: nginx-config
            items:
              - key: index.php    # the file to mount
                path: index.php

[root@k8s-master-01 opt]# kubectl apply -f discuz-test.yaml

configmap/nginx-config created

service/nginx-config created

deployment.apps/nginx-config created

[root@k8s-master-01 opt]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-58774b94b7-5tl6k 1/1 Running 3 45h

nfs-client-nfs-client-provisioner-7bf9895898-hfdzz 1/1 Running 1 25h

nginx-config-7b4879448b-6tzzr 2/2 Running 0 4s

nginx-config-97f5b47c6-cvc25 0/2 Terminating 0 23m

wordpress-0 2/2 Running 8 3d2h

[root@k8s-master-01 opt]# kubectl exec -it nginx-config-7b4879448b-6tzzr -c nginx -- bash

[root@nginx-config-7b4879448b-6tzzr nginx]# cd /usr/share/nginx/html/

[root@nginx-config-7b4879448b-6tzzr html]# ll # the existing files were not hidden

total 216

-rw-r--r-- 1 root root 494 Oct 29 2020 50x.html

-rw-r--r-- 1 root root 612 Oct 29 2020 index.html

-rw-r--r-- 1 root root 20 Aug 29 10:14 index.php

-rw-r--r-- 1 root root 19915 Apr 1 02:52 license.txt

-rw-r--r-- 1 root root 7345 Apr 1 02:52 readme.html

-rw-r--r-- 1 root root 7165 Apr 1 02:52 wp-activate.php

drwxr-xr-x 9 root root 4096 Apr 1 02:52 wp-admin

-rw-r--r-- 1 root root 351 Apr 1 02:52 wp-blog-header.php

-rw-r--r-- 1 root root 2328 Apr 1 02:52 wp-comments-post.php

-rw-r--r-- 1 root root 2913 Apr 1 02:52 wp-config-sample.php

-rw-r--r-- 1 root root 3117 Apr 1 02:52 wp-config.php

drwxr-xr-x 4 root root 52 Apr 1 02:52 wp-content

-rw-r--r-- 1 root root 3939 Apr 1 02:52 wp-cron.php

drwxr-xr-x 25 root root 8192 Apr 1 02:52 wp-includes

-rw-r--r-- 1 root root 2496 Apr 1 02:52 wp-links-opml.php

-rw-r--r-- 1 root root 3313 Apr 1 02:52 wp-load.php

-rw-r--r-- 1 root root 44993 Apr 1 02:52 wp-login.php

-rw-r--r-- 1 root root 8509 Apr 1 02:52 wp-mail.php

-rw-r--r-- 1 root root 21125 Apr 1 02:52 wp-settings.php

-rw-r--r-- 1 root root 31328 Apr 1 02:52 wp-signup.php

-rw-r--r-- 1 root root 4747 Apr 1 02:52 wp-trackback.php

-rw-r--r-- 1 root root 3236 Apr 1 02:52 xmlrpc.php

[root@nginx-config-7b4879448b-6tzzr html]# cat index.php

<?php

phpinfo();

?>

VI. Secret

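Kubernetes also has an object that closely resembles a ConfigMap: the Secret.

It is mainly used to store sensitive information such as passwords, keys, and certificates.

Values under a Secret's data field must be base64-encoded before they are saved; when the Secret is mounted into a Pod they are decoded automatically. Note that base64 is an encoding, not encryption.

A Secret solves the configuration problem for passwords, tokens, keys, and other sensitive data without exposing that data in the image or in the Pod spec.

A Secret can be consumed as a volume or as environment variables.

Common Secret types:

Service Account: used to access the Kubernetes API; created automatically by Kubernetes and mounted automatically into the Pod at /run/secrets/kubernetes.io/serviceaccount.

Opaque: a Secret in base64-encoded form, used to store passwords, keys, and similar data.

kubernetes.io/dockerconfigjson: stores the credentials for a private docker registry.

# Secret types at a glance:

tls: usually used for certificates

Opaque: usually used for passwords

Service Account: Kubernetes API credentials

kubernetes.io/dockerconfigjson: container registry login information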

Method 1: a plain (Opaque) Secret

1. First, base64-encode the data

The data of an Opaque Secret is a map, and every value must be base64-encoded.

# 1. Encode

[root@k8s-m-01 ~]# echo -n 'admin' | base64 # prepare the username

YWRtaW4=

[root@k8s-m-01 ~]# echo -n '123456' | base64 # prepare the password

MTIzNDU2

# 2. Decode

[root@k8s-m-01 ~]# echo -n MTIzNDU2 |base64 -d

123456
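The -n flag in the commands above matters: without it, echo appends a newline and that newline is encoded into the Secret value as well. The sketch below wraps the encoding in a small helper (the name b64 is made up for illustration) that uses printf so no trailing newline can slip in, and shows the difference.

```shell
#!/bin/sh
# Encode a value for a Secret's data field without a trailing newline.
b64() {
  printf '%s' "$1" | base64
}

b64 admin              # YWRtaW4=
b64 123456             # MTIzNDU2
echo admin | base64    # YWRtaW4K  (the newline added by echo got encoded too)
```

A value encoded with the stray newline would decode to "admin" plus a line break, which typically makes a login fail in ways that are hard to spot.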

Method 2: mounting a base64-encoded Secret into a Pod

1. Next, write secret.yaml and create the Secret

[root@k8s-master-01 opt]# echo -n "123456" |base64

MTIzNDU2

[root@k8s-master-01 opt]# cat secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: test
data:
  name: MTIzNDU2

2. Create deploy-secret.yaml and mount the Secret created above into it:

[root@k8s-master-01 opt]# cat deploy-secret.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: secret-test
spec:
  selector:
    matchLabels:
      app: secret
  template:
    metadata:
      labels:
        app: secret
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: secret
      volumes:
        - name: secret
          secret:
            secretName: test
            items:
              - key: name
                path: name

3. Check the result

[root@k8s-master-01 opt]# kubectl get secrets

NAME TYPE DATA AGE

default-token-l8lg8 kubernetes.io/service-account-token 3 11d

ingress-tls kubernetes.io/tls 2 4d9h

nfs-client-nfs-client-provisioner-token-xlnj8 kubernetes.io/service-account-token 3 29h

sh.helm.release.v1.nfs-client.v1 helm.sh/release.v1 1 29h

test Opaque 1 22m

[root@k8s-master-01 opt]# kubectl exec -it secret-test-86679c5d8-lsbvh -- bash

root@secret-test-86679c5d8-lsbvh:/# cd /usr/share/nginx/html/

root@secret-test-86679c5d8-lsbvh:/usr/share/nginx/html# ls

name

root@secret-test-86679c5d8-lsbvh:/usr/share/nginx/html# cat name

123456

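Method 3: a Secret that stores private docker registry credentials

1. kubernetes.io/dockerconfigjson

Used to store the credentials for a private docker registry.

1. Create the Secret # an Alibaba Cloud private registry is used here

export DOCKER_REGISTRY_SERVER="registry URL"

export DOCKER_USER="registry username"

export DOCKER_PASSWORD="password"

export DOCKER_EMAIL="email"

kubectl create secret docker-registry <secret-name> --docker-server=$DOCKER_REGISTRY_SERVER --docker-username=$DOCKER_USER --docker-password=$DOCKER_PASSWORD --docker-email=$DOCKER_EMAIL # the email may be omitted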
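A kubernetes.io/dockerconfigjson Secret holds a single key, .dockerconfigjson, whose value is a Docker-style config document with an "auths" entry per registry. The sketch below assembles such a document by hand to show what the flags above end up as. The helper name docker_config_json and all argument values are placeholders, the optional email field is left out, and the values are assumed to contain no characters that need JSON escaping.

```shell
#!/bin/sh
# Build the JSON document that a kubernetes.io/dockerconfigjson Secret stores
# under the key ".dockerconfigjson". All argument values are placeholders.
docker_config_json() {
  server=$1
  user=$2
  password=$3
  # "auth" is base64("username:password"), the same form docker login stores.
  auth=$(printf '%s:%s' "$user" "$password" | base64 | tr -d '\n')
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$password" "$auth"
}

docker_config_json registry.example.com demo-user demo-pass
```

In practice kubectl create secret docker-registry generates this payload itself; building it manually is mostly useful for understanding or inspecting an existing Secret.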

2、实例

# 1. First create the Secret that holds the Alibaba Cloud registry login

[root@k8s-m-01 ~]# kubectl create secret docker-registry aliyun --docker-server=registry.cn-shanghai.aliyuncs.com --docker-username=明明爷青回 --docker-password=xxx # password

secret/aliyun created

# 2. List

[root@k8s-m-01 ~]# kubectl get secrets

NAME TYPE DATA AGE

aliyun kubernetes.io/dockerconfigjson 1 10s

default-token-tg92f kubernetes.io/service-account-token 3 11d

# 3. Delete a Secret

[root@k8s-m-01 ~]# kubectl delete secrets aliyun

secret "aliyun" deleted

# 4. Write a manifest that pulls the images

[root@k8s-m-01 ~]# vim aliyun.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: test-docker-registry
spec:
  selector:
    matchLabels:
      app: test-docker-registry
  template:
    metadata:
      labels:
        app: test-docker-registry
    spec:
      imagePullSecrets:          # credentials used to pull from the Alibaba Cloud registry
        - name: aliyun           # name of the docker-registry Secret
      containers:
        - name: php
          imagePullPolicy: Always
          image: registry.cn-shanghai.aliyuncs.com/aliyun_mm/discuz:php-v1
        - name: nginx
          imagePullPolicy: Always
          image: registry.cn-shanghai.aliyuncs.com/aliyun_mm/discuz:nginx-v1

# 5. Create the resources from the manifest

[root@k8s-m-01 ~]# kubectl create -f aliyun.yaml

# 6. Check

[root@k8s-m-01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-nfs-client-provisioner-777fbc4cd6-d9gkj 1/1 Running 0 4h43m

test-docker-registry-f9d86c548-p8nll 2/2 Running 0 4s