Hadoop 3.3.1 (latest release): installing and deploying a distributed cluster

Cluster plan:

| Machine | IP | Assigned roles |
| --- | --- | --- |
| node1 | 192.168.56.10 | NameNode, SecondaryNameNode, DataNode, ResourceManager, NodeManager |
| node2 | 192.168.56.11 | DataNode, NodeManager |
| node3 | 192.168.56.12 | DataNode, NodeManager |
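The later steps address the machines by name (node1, node2, node3), so every host has to resolve those names. A minimal sketch, assuming name resolution is done through /etc/hosts (step 7 copies that file to the other nodes). It writes the entries to a scratch file, `hosts.cluster`, a name chosen only for this illustration, so they can be reviewed before being appended to /etc/hosts:

```shell
#!/bin/sh
# Host entries taken from the cluster plan table.
# hosts.cluster is a scratch file name used only for this sketch.
out="${TMPDIR:-/tmp}/hosts.cluster"

cat > "$out" <<'EOF'
192.168.56.10 node1
192.168.56.11 node2
192.168.56.12 node3
EOF

cat "$out"
# After review, append as root:  cat "$out" >> /etc/hosts
```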

1. Download the latest Hadoop release from Apache Hadoop (http://hadoop.apache.org/), then disable the firewall and SELinux on every node
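Disabling the firewall and SELinux could look roughly like the sketch below. It assumes a systemd-based distribution that uses firewalld (for example CentOS 7); the post does not say which distribution is used. The system-wide commands are left as comments because they need root, and `disable_selinux_in` is a helper defined here, not a system tool, so the persistent change can be tried on a copy first:

```shell
#!/bin/sh
# Assumption: systemd + firewalld (e.g. CentOS 7). Run as root on every node:
#
#   systemctl stop firewalld       # stop the firewall now
#   systemctl disable firewalld    # keep it off after a reboot
#   setenforce 0                   # SELinux permissive until the next reboot

# Persist the SELinux setting by rewriting the SELINUX= line of a config file.
# The file is an argument so the edit can be tested on a copy (uses GNU sed -i).
disable_selinux_in() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Demonstration on a scratch file; on a real node the file is /etc/selinux/config.
demo=$(mktemp)
printf '# sample\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
disable_selinux_in "$demo"
cat "$demo"
```

The pattern matches only the `SELINUX=` line, so `SELINUXTYPE=` is left untouched.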

2. Extract the archive on Linux and set the environment variables in /etc/profile.d/hadoop.sh

export JAVA_HOME=/data/soft/jdk1.8.0_201

export FLINK_HOME=/data/soft/flink-1.14.0

export PATH=$FLINK_HOME/bin:$JAVA_HOME/bin:$PATH

#HADOOP_HOME

export HADOOP_HOME=/data/soft/hadoop-3.3.1

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_CONF_DIR=/data/soft/hadoop-3.3.1/etc/hadoop

export ZOOKEEPER_HOME=/data/soft/apache-zookeeper-3.7.0-bin

export PATH=$ZOOKEEPER_HOME/bin:$PATH

3. Go to the installation directory; the configuration files are in its etc/hadoop subdirectory

[root@node1 soft]# cd hadoop-3.3.1

[root@node1 hadoop-3.3.1]# ll

total 88

drwxr-xr-x 2 1000 1000 203 Jun 15 01:52 bin

drwxr-xr-x 3 1000 1000 20 Jun 15 01:15 etc

drwxr-xr-x 2 1000 1000 106 Jun 15 01:52 include

drwxr-xr-x 3 1000 1000 20 Jun 15 01:52 lib

drwxr-xr-x 4 1000 1000 288 Jun 15 01:52 libexec

-rw-rw-r-- 1 1000 1000 23450 Jun 15 01:02 LICENSE-binary

drwxr-xr-x 2 1000 1000 4096 Jun 15 01:52 licenses-binary

-rw-rw-r-- 1 1000 1000 15217 Jun 15 01:02 LICENSE.txt

-rw-rw-r-- 1 1000 1000 29473 Jun 15 01:02 NOTICE-binary

-rw-rw-r-- 1 1000 1000 1541 May 21 12:11 NOTICE.txt

-rw-rw-r-- 1 1000 1000 175 May 21 12:11 README.txt

drwxr-xr-x 3 1000 1000 4096 Jun 15 01:15 sbin

drwxr-xr-x 4 1000 1000 31 Jun 15 02:18 share

[root@node1 hadoop-3.3.1]# pwd

/data/soft/hadoop-3.3.1

4. Edit the following configuration files

(1) hadoop-env.sh: set JAVA_HOME to the actual JDK path

export JAVA_HOME=/data/soft/jdk1.8.0_201

(2) core-site.xml

| Property | Value |
| --- | --- |
| fs.defaultFS | hdfs://node1:9000 |
| hadoop.tmp.dir | /data/soft/hadoop-3.3.1/datas |
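In the file itself, each name/value pair is a `<property>` element inside `<configuration>`. A minimal sketch that writes core-site.xml with the two properties listed above; `CONF_DIR` is a variable introduced for this example and defaults to a scratch directory so that running the sketch does not touch a real installation:

```shell
#!/bin/sh
# CONF_DIR is an assumption of this sketch. Set it to $HADOOP_HOME/etc/hadoop
# to write the real file; by default a scratch directory is used.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Default file system: the NameNode on node1 -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node1:9000</value>
  </property>
  <!-- Base directory for Hadoop's local data -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/data/soft/hadoop-3.3.1/datas</value>
  </property>
</configuration>
EOF

echo "wrote $CONF_DIR/core-site.xml"
```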

(3) hdfs-site.xml

| Property | Value |
| --- | --- |
| dfs.replication | 3 |
| dfs.permissions.enabled | false |

(4) mapred-site.xml

| Property | Value |
| --- | --- |
| mapreduce.framework.name | yarn |
| yarn.app.mapreduce.am.env | HADOOP_MAPRED_HOME=${HADOOP_HOME} |
| mapreduce.map.env | HADOOP_MAPRED_HOME=${HADOOP_HOME} |
| mapreduce.reduce.env | HADOOP_MAPRED_HOME=${HADOOP_HOME} |

(5) yarn-site.xml

| Property | Value |
| --- | --- |
| yarn.resourcemanager.hostname | node1 |
| yarn.nodemanager.aux-services | mapreduce_shuffle |
| yarn.nodemanager.pmem-check-enabled | false |
| yarn.nodemanager.vmem-check-enabled | false |
| yarn.log-aggregation-enable | true |
| yarn.nodemanager.remote-app-log-dir | /data/soft/hadoop-3.3.1/nodemanager-remote-app-logs |
| yarn.log-aggregation.retain-seconds | 604800 |
| yarn.nodemanager.log-dirs | file:///data/soft/hadoop-3.3.1/nodemanager-logs |
| yarn.nodemanager.delete.debug-delay-sec | 604800 |
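With this many properties, typing the XML by hand gets tedious. One possible approach is a small generator that turns "name value" pairs into a site file. `site_xml` and `CONF_DIR` below are names made up for this sketch, not Hadoop tools, and only a subset of the yarn-site.xml properties above is shown; the same function would work for hdfs-site.xml and mapred-site.xml.

```shell
#!/bin/sh
# site_xml reads "name value" pairs from standard input (one pair per line)
# and prints a Hadoop *-site.xml document. Helper written for this sketch.
# Values must not contain &, < or >, which would need XML escaping.
site_xml() {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    while read -r name value; do
        [ -n "$name" ] || continue   # skip blank lines
        printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
            "$name" "$value"
    done
    echo '</configuration>'
}

# Scratch directory by default; set CONF_DIR to write into a real config dir.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

site_xml > "$CONF_DIR/yarn-site.xml" <<'EOF'
yarn.resourcemanager.hostname node1
yarn.nodemanager.aux-services mapreduce_shuffle
yarn.nodemanager.pmem-check-enabled false
yarn.nodemanager.vmem-check-enabled false
yarn.log-aggregation-enable true
EOF

cat "$CONF_DIR/yarn-site.xml"
```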

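5. Format the NameNode

hdfs namenode -format

6. Run hadoop-daemon.sh from the sbin directory to start the NameNode (Hadoop 3 marks this script as deprecated; hdfs --daemon start namenode is the replacement):

hadoop-daemon.sh start namenode

Start a DataNode on each of the other nodes:

hadoop-daemon.sh start datanode

Alternatively, start everything with start-dfs.sh, which requires the workers file in etc/hadoop to list the nodes:

node1

node2

node3

7. Distribute to the other nodes

Deploy the Java and Hadoop environment on node2 and node3: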

rsync -av /etc/hosts root@node2:/etc/

rsync -av /etc/profile.d/hadoop.sh root@node2:/etc/profile.d/

rsync -av /data/soft/jdk1.8.0_201 root@node2:/data/soft

rsync -av /data/soft/hadoop-3.3.1 root@node2:/data/soft

rsync -av /etc/hosts root@node3:/etc/

rsync -av /etc/profile.d/hadoop.sh root@node3:/etc/profile.d/

rsync -av /data/soft/jdk1.8.0_201 root@node3:/data/soft

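rsync -av /data/soft/hadoop-3.3.1 root@node3:/data/soft

If start-dfs.sh reports an error (this happens when the daemons are started as root and the user variables below are not defined)

Fix:

vim /data/soft/hadoop-3.3.1/sbin/start-dfs.sh /data/soft/hadoop-3.3.1/sbin/stop-dfs.sh

Add the following lines near the top of both scripts (exporting the same variables in etc/hadoop/hadoop-env.sh also works):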

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

If start-yarn.sh reports an error

Fix:

vim /data/soft/hadoop-3.3.1/sbin/start-yarn.sh /data/soft/hadoop-3.3.1/sbin/stop-yarn.sh

Add the following lines near the top of both scripts:

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

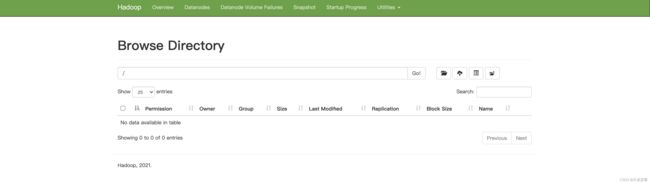

YARN_NODEMANAGER_USER=root

8. Web interface after startup

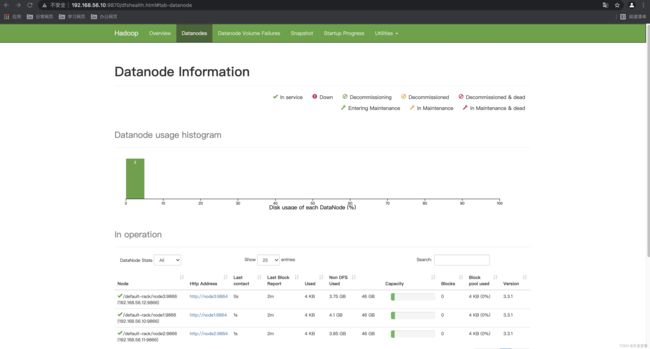

The HDFS management UI at http://192.168.56.10:9870 shows that the NameNode has started.

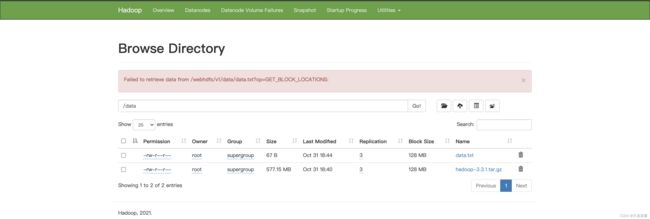

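9. Recovering from a cluster crash

Crash scenario:

(1) The datas directory on node1 has been deleted and the NameNode process has been killed

Steps to reproduce:

data.txt: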

hello world titty

hello are you

are you ok ?

kitty

hello

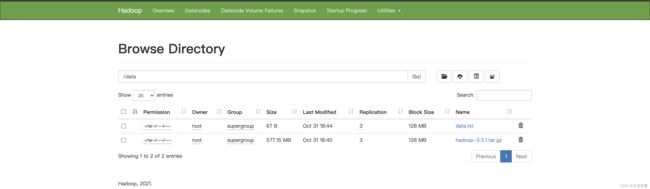

test test

hdfs dfs -mkdir /data

hdfs dfs -put /data/soft/hadoop-3.3.1.tar.gz /data/

hdfs dfs -put /data/soft/datas/data.txt /data/

[root@node1 hadoop-3.3.1]# jps

4578 NodeManager

3540 NameNode

3735 DataNode

4392 ResourceManager

14555 Jps

4045 SecondaryNameNode

[root@node1 hadoop-3.3.1]# kill -kill 3540

[root@node1 hadoop-3.3.1]# jps

4578 NodeManager

3735 DataNode

4392 ResourceManager

14972 Jps

4045 SecondaryNameNode

[root@node1 hadoop-3.3.1]# kill -kill 3735

[root@node1 hadoop-3.3.1]# jps

4578 NodeManager

15269 Jps

4392 ResourceManager

4045 SecondaryNameNode
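The PIDs in the session above were read off the jps output by hand. A small sketch of doing the lookup in a script; `pid_of` is a helper defined here for illustration, and the sample text is the first jps listing from this step:

```shell
#!/bin/sh
# pid_of prints the PID of a daemon, given jps output on standard input.
# jps prints one "<pid> <main class>" pair per line; the match on the class
# name is exact, so NameNode does not also match SecondaryNameNode.
pid_of() {
    awk -v name="$1" '$2 == name { print $1 }'
}

# Sample copied from the jps listing above.
# On a live node use:  jps | pid_of NameNode
sample='4578 NodeManager
3540 NameNode
3735 DataNode
4392 ResourceManager
14555 Jps
4045 SecondaryNameNode'

nn_pid=$(printf '%s\n' "$sample" | pid_of NameNode)
echo "NameNode PID: $nn_pid"   # prints: NameNode PID: 3540
# To reproduce the crash on a live node:  kill -KILL "$nn_pid"
```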

Solution:

stop-dfs.sh

On node1, node2, and node3:

[root@node1 hadoop-3.3.1]# rm -rf datas logs

[root@node1 hadoop-3.3.1]# ll

total 88

drwxr-xr-x 2 1000 1000 203 Jun 15 01:52 bin

drwxr-xr-x 3 1000 1000 20 Jun 15 01:15 etc

drwxr-xr-x 2 1000 1000 106 Jun 15 01:52 include

drwxr-xr-x 3 1000 1000 20 Jun 15 01:52 lib

drwxr-xr-x 4 1000 1000 288 Jun 15 01:52 libexec

-rw-rw-r-- 1 1000 1000 23450 Jun 15 01:02 LICENSE-binary

drwxr-xr-x 2 1000 1000 4096 Jun 15 01:52 licenses-binary

-rw-rw-r-- 1 1000 1000 15217 Jun 15 01:02 LICENSE.txt

drwxr-xr-x 2 root root 6 Nov 20 00:37 nodemanager-logs

-rw-rw-r-- 1 1000 1000 29473 Jun 15 01:02 NOTICE-binary

-rw-rw-r-- 1 1000 1000 1541 May 21 12:11 NOTICE.txt

-rw-rw-r-- 1 1000 1000 175 May 21 12:11 README.txt

drwxr-xr-x 3 1000 1000 4096 Nov 20 00:37 sbin

drwxr-xr-x 4 1000 1000 31 Jun 15 02:18 share

Then format the NameNode on node1 again and sync the installation to the other nodes:

hdfs namenode -format

rsync -av /data/soft/hadoop-3.3.1 root@node2:/data/soft/

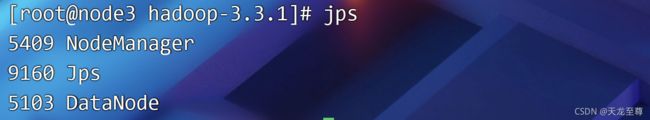

rsync -av /data/soft/hadoop-3.3.1 root@node3:/data/soft/

jps output:

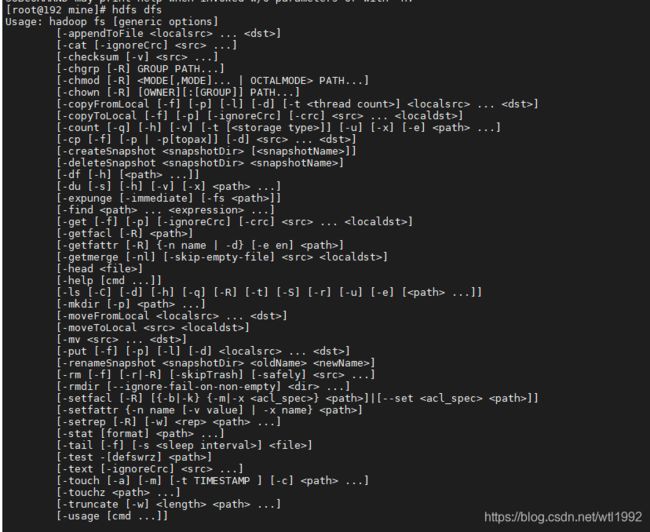
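A quick way to confirm the recovery is to compare the jps output of each node with the daemons it is supposed to run. The sketch below is one way to do that; `missing_daemons` is a helper written for this example, and the sample is the jps listing from step 9 taken after the NameNode and DataNode were killed:

```shell
#!/bin/sh
# missing_daemons prints every expected daemon (given as arguments) that does
# not appear in the jps output read from standard input.
missing_daemons() {
    running=$(awk '{ print $2 }')          # second column = main class name
    for d in "$@"; do
        # -x: whole-line match, -F: fixed string, -q: no output
        printf '%s\n' "$running" | grep -qxF "$d" || echo "$d"
    done
}

# Sample: node1 after its NameNode and DataNode were killed.
sample='4578 NodeManager
15269 Jps
4392 ResourceManager
4045 SecondaryNameNode'

printf '%s\n' "$sample" |
    missing_daemons NameNode SecondaryNameNode DataNode ResourceManager
# prints NameNode and DataNode, one per line
# On a live worker node:  jps | missing_daemons DataNode NodeManager
```

No output means every expected daemon is running.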

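Using the hdfs commands

Upload a local file to HDFS:

hdfs dfs -put /mine/ideaIU-2019.2.4.tar.gz /

Print the contents of a file stored in HDFS:

hdfs dfs -cat /hello.txt

Download a file from HDFS to the local file system:

hdfs dfs -get /test /mine/test

List the files under / (the root) of HDFS:

hdfs dfs -ls -h /

Create a directory in HDFS:

hdfs dfs -mkdir -p /a/b/c

Delete a file or directory in HDFS:

hdfs dfs -rm -r -f /a/b/c

10. Change the web ports of HDFS and YARN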

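In hdfs-site.xml, set the HTTP address of the NameNode (dfs.http.address is the deprecated name of dfs.namenode.http-address):

| Property | Value |
| --- | --- |
| dfs.http.address | hadoop1:9870 |

In yarn-site.xml, set the ResourceManager web address:

| Property | Value |
| --- | --- |
| yarn.resourcemanager.webapp.address | hadoop1:8088 |

Replace hadoop1 with the host that runs the service (node1 in this cluster).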
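After restarting the daemons, the two addresses can be probed from the command line. A minimal sketch, assuming curl is installed; `check_ui` and `UI_HOST` are names introduced here for illustration:

```shell
#!/bin/sh
# check_ui reports whether an HTTP endpoint answers within 3 seconds.
# It relies on curl; if curl is missing the endpoint is reported as DOWN too.
check_ui() {
    if curl -s -o /dev/null --max-time 3 "$1" 2>/dev/null; then
        echo "UP   $1"
    else
        echo "DOWN $1"
    fi
}

# UI_HOST is a variable of this sketch; set it to the host used in the two
# address properties above.
UI_HOST="${UI_HOST:-hadoop1}"

check_ui "http://$UI_HOST:9870/"   # HDFS NameNode web UI
check_ui "http://$UI_HOST:8088/"   # YARN ResourceManager web UI
```

Run it as, for example, `UI_HOST=node1 sh check_ui.sh` (the script name is arbitrary).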