yolov4训练自己的数据集,详细教程!

系列文章目录

yolov4 win10 环境搭建,亲测有效!

yolov4训练自己的数据集,详细教程!

文章目录

- 系列文章目录

- 创建`yolo-obj.cfg` 配置文件

- 制作`obj.names`

- 制作`obj.data`

- 数据集制作

- 创建`make_data.py` 并运行

- 修改`voc_label.py`并运行

- 开始训练

- 测试

创建yolo-obj.cfg 配置文件

将 yolov4-custom.cfg 中的内容复制到 yolo-obj.cfg里面,并做以下修改:

1.修改batch=64,修改subdivisions=32(这里根据自己的显卡设置合适参数,显存不足时可以把batch=调小,或者subdivisions调大)

2.修改max_batches=classes*2000 例如有2个类别人和车 ,那么就设置为4000,N个类就设置为N乘以2000

3.修改steps为80% 到 90% 的max_batches值,比如max_batches=4000,则steps=3200,3600

4.修改classes,先用ctrl+F搜索 [yolo] 可以搜到3次,每次搜到的内容中 修改classes=你自己的类别 比如classes=2

5.修改filters,一样先搜索 [yolo] ,每次搜的yolo上一个[convolution] 中 filters=(classes + 5)x3 ,比如filters=21

6.(可以跳过)如果要用[Gaussian_yolo] ,则搜索[Gaussian_yolo] 将[filters=57] 的filter 修改为 filters=(classes + 9)x3 (这里我没有修改)

制作obj.names

在主目录下创建obj.names文件:

内容为你的类别,比如人和车,那么obj.names 为如下,多个类别依次往下写

person

car

制作obj.data

在主目录下创建obj.data文件,内容如下:

classes= 2

train = ./scripts/2007_train.txt

valid = ./scripts/2007_val.txt

names = Release\obj.names

backup = backup/

其中class为自己的类别个数;

train、valid、names根据自己的路径修改,找不到的话,可以修改为自己的绝对路径;

backup为权重保存的位置(需要提前创建好文件夹)

数据集制作

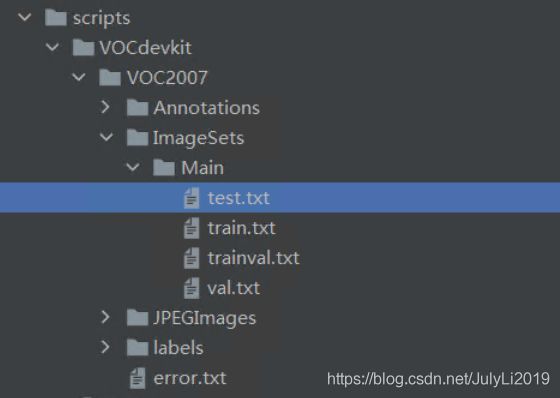

在scripts文件夹下按如下目录创建VOCdevkit 文件夹,放自己的训练数据,层级结构如下所示:

VOCdevkit

--VOC2007

----Annotations #(放XML标签文件)

----ImageSets

------Main

----JPEGImages # (放原始图片)

创建make_data.py 并运行

主目录下创建make_data.py 文件,把如下代码方进去。运行此文件在scripts 文件下生成 3个相应的txt文件,在Main 下生成四个txt文件。

make_data.py 内容为:

import os

import random

import sys

root_path = './scripts/VOCdevkit/VOC2007'

xmlfilepath = root_path + '/Annotations'

txtsavepath = root_path + '/ImageSets/Main'

if not os.path.exists(root_path):

print("cannot find such directory: " + root_path)

os.makedirs(root_path)

exit()

if not os.path.exists(txtsavepath):

os.makedirs(txtsavepath)

trainval_percent = 0.9

train_percent = 0.9

total_xml = os.listdir(xmlfilepath)

num = len(total_xml)

list = range(num)

tv = int(num * trainval_percent)

tr = int(tv * train_percent)

trainval = random.sample(list, tv)

train = random.sample(trainval, tr)

print("train and val size:", tv)

print("train size:", tr)

ftrainval = open(txtsavepath + '/trainval.txt', 'w')

ftest = open(txtsavepath + '/test.txt', 'w')

ftrain = open(txtsavepath + '/train.txt', 'w')

fval = open(txtsavepath + '/val.txt', 'w')

for i in list:

name = total_xml[i][:-4] + '\n'

if i in trainval:

ftrainval.write(name)

if i in train:

ftrain.write(name)

else:

fval.write(name)

else:

ftest.write(name)

ftrainval.close()

ftrain.close()

fval.close()

ftest.close()

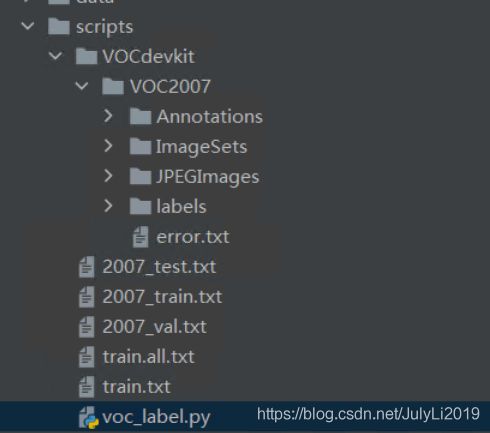

修改voc_label.py并运行

scripts文件夹下有voc_label.py,打开后修改自己的类别信息:

sets=[ (‘2007’, ‘train’), (‘2007’, ‘val’), (‘2007’, ‘test’)]

classes = [“person”,“car” ] 按自己的类别修改,但是顺序要和obj.name 保持一致:

# -*- coding:utf-8 -*-

import xml.etree.ElementTree as ET

import pickle

import os

from os import listdir, getcwd

from os.path import join

sets = [('2007', 'train'), ('2007', 'val'), ('2007', 'test')]

classes = ["person", "car"]

# 设置错误标签的log输出位置

write_path = open(r'D:\error.txt', 'w')

def convert(size, box):

dw = 1. / (size[0])

dh = 1. / (size[1])

x = (box[0] + box[1]) / 2.0 - 1

y = (box[2] + box[3]) / 2.0 - 1

w = box[1] - box[0]

h = box[3] - box[2]

x = x * dw

w = w * dw

y = y * dh

h = h * dh

return (x, y, w, h)

def convert_annotation(year, image_id):

in_file = open('D:/yolov4/mybuild/Release/scripts/VOCdevkit/VOC%s/Annotations/%s.xml' % (year, image_id),

encoding="utf-8")

out_file = open('D:/yolov4/mybuild/Release/scripts/VOCdevkit/VOC%s/labels/%s.txt' % (year, image_id), 'w',

encoding="utf-8")

#这里根据自己的路径修改

tree = ET.parse(in_file)

root = tree.getroot()

size = root.find('size')

w = int(size.find('width').text)

h = int(size.find('height').text)

for obj in root.iter('object'):

# difficult = obj.find('difficult').text

cls = obj.find('name').text

# if cls not in classes or int(difficult) == 1:

# continue

# print(in_file)

# 标签自查

if cls not in classes:

print(in_file, cls)

write_path.write('%s.xml' % image_id +' '+ cls +'\n')

continue

else:

cls_id = classes.index(cls)

xmlbox = obj.find('bndbox')

b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text), float(xmlbox.find('ymin').text),

float(xmlbox.find('ymax').text))

bb = convert((w, h), b)

out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')

wd = getcwd()

for year, image_set in sets:

if not os.path.exists('VOCdevkit/VOC%s/labels/' % (year)):

os.makedirs('VOCdevkit/VOC%s/labels/' % (year))

image_ids = open('VOCdevkit/VOC%s/ImageSets/Main/%s.txt' % (year, image_set)).read().strip().split()

list_file = open('%s_%s.txt' % (year, image_set), 'w')

for image_id in image_ids:

list_file.write('%s/VOCdevkit/VOC%s/JPEGImages/%s.jpg\n' % (wd, year, image_id))

convert_annotation(year, image_id)

list_file.close()

os.system("cat 2007_train.txt 2007_val.txt 2012_train.txt 2012_val.txt > train.txt")

os.system("cat 2007_train.txt 2007_val.txt 2007_test.txt 2012_train.txt 2012_val.txt > train.all.txt")

运行后,在VOC2007文件下生成labels文件,文件夹里包含相应的txt.(现在voc2007文件里多出一个labels 文件夹)

开始训练

首先下载预训练权重yolov4.conv.137,放入主目录下;

之后用下面的命令开始训练:

darknet.exe detector train obj.data yolo-obj.cfg yolov4.conv.137 -map

断点续训操作

darknet.exe detector train obj.data yolo-obj.cfg backup/yolo-obj_2000.weights -map

测试

修改obj.data

valid = ./scripts/2007_test.txt

#valid = ./scripts/2007_val.txt

通过下面命令进行模型性能测试:

darknet detector map obj.data yolo-obj.cfg backup/yolo-obj_final.weights

这里提供一个yolov4批量测试脚本,脚本利用了opencv cuda进行了加速:

import numpy as np

import time

import cv2

import os

class YOLOV4_Detection():

def __init__(self,coco_name_path,weights_path,cfg_path):

self.coco_name_path=coco_name_path

self.weights_path=weights_path

self.cfg_path=cfg_path

def yolov4_test(self,test_images,test_results):

self.LABELS = open(self.coco_name_path,encoding="utf-8",errors="ignore").read().strip().split("\n")

np.random.seed(666)

#COLORS = np.random.randint(0, 255, size=(len(LABELS), 3), dtype="uint8")

# 导入 YOLO 配置和权重文件并加载网络:

print(self.cfg_path,self.weights_path)

self.net = cv2.dnn.readNetFromDarknet(self.cfg_path, self.weights_path)

#如果没有编译cuda版的opencv注释以下两行

self.net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)

self.net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)

# 获取 YOLO 未连接的输出图层

self.layer = self.net.getUnconnectedOutLayersNames()

files=os.listdir(test_images)

for filename in files:

image=cv2.imread(os.path.join(test_images,filename))

# 获取图片尺寸

(H, W) = image.shape[:2]

# 从输入图像构造一个 blob,然后执行 YOLO 对象检测器的前向传递,给我们边界盒和相关概率

blob = cv2.dnn.blobFromImage(image, 1/255.0, (608, 608),

swapRB=True, crop=False)

self.net.setInput(blob)

start = time.time()

# 前向传递,获得信息

layerOutputs = self.net.forward(self.layer)

# 用于得出检测时间

boxes = []

confidences = []

classIDs = []

# 循环提取每个输出层

for output in layerOutputs:

# 循环提取每个框

for detection in output:

# 提取当前目标的类 ID 和置信度

scores = detection[5:]

classID = np.argmax(scores)

confidence = scores[classID]

# 通过确保检测概率大于最小概率来过滤弱预测

if confidence > 0.5:

# 将边界框坐标相对于图像的大小进行缩放,YOLO 返回的是边界框的中心(x, y)坐标,

# 后面是边界框的宽度和高度

box = detection[0:4] * np.array([W, H, W, H])

(centerX, centerY, width, height) = box.astype("int")

# 转换出边框左上角坐标

x = int(centerX - (width / 2))

y = int(centerY - (height / 2))

# 更新边界框坐标、置信度和类 id 的列表

boxes.append([x, y, int(width), int(height)])

confidences.append(float(confidence))

classIDs.append(classID)

# 非最大值抑制,确定唯一边框

idxs = cv2.dnn.NMSBoxes(boxes, confidences, 0.5, 0.3)

# 确定每个对象至少有一个框存在

if len(idxs) > 0:

# 循环画出保存的边框

for i in idxs.flatten():

# 提取坐标和宽度

(x, y) = (boxes[i][0], boxes[i][1])

(w, h) = (boxes[i][2], boxes[i][3])

# 画出边框和标签

#color = [int(c) for c in COLORS[classIDs[i]]]

color_rectangele=[0,255,0]

color_text=[0,0,255]

cv2.rectangle(image, (x, y), (x + w, y + h), color_rectangele, 2, lineType=cv2.LINE_AA)

text = "{}: {:.4f}".format(self.LABELS[classIDs[i]], confidences[i])

cv2.putText(image, text, (x, y - 5), cv2.FONT_HERSHEY_SIMPLEX,

1.5, color_text, 2, lineType=cv2.LINE_AA)

end = time.time()

print("YOLO took {:.6f} seconds".format(end - start))

cv2.imwrite(os.path.join(test_results,filename),image)

coco_name_path=r"D:\yolov4\mybuild\Release\test\obj.names"

weights_path=r"D:\yolov4\mybuild\Release\test\yolo-obj_best.weights"

cfg_path=r"D:\yolov4\mybuild\Release\test\yolo-obj.cfg"

test_images=r"D:\yolov4\mybuild\Release\test\testimg"

test_results=r"D:\yolov4\mybuild\Release\test\output"

#net = cv2.dnn.readNetFromDarknet(cfg_path, weights_path)

#net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)

#net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)

test1=YOLOV4_Detection(coco_name_path,weights_path,cfg_path)

test1.yolov4_test(test_images,test_results)

参考文档:https://blog.csdn.net/weixin_41444791/article/details/107761115

如果阅读本文对你有用,欢迎关注点赞评论收藏呀!!!

2021年3月29日11:49:31