[Most Detailed Explanation] YOLOv3: Principles and Code Analysis

You Only Look Once

The author has translated the full YOLOv1 and YOLOv3 papers; see:

YOLOv1: https://blog.csdn.net/taifengzikai/article/details/81988891

YOLOv3: https://blog.csdn.net/taifengzikai/article/details/100903687

YOLO v3: Principles and Code Analysis

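YOLO is an end-to-end object detection model. It works as follows. A feature extraction network first extracts features from the input image and produces feature maps of a fixed size. The input image is then divided into a grid of cells (13×13 at the coarsest scale). If the center of a ground-truth object falls into a grid cell, that cell is responsible for predicting the object. Each grid cell predicts a fixed number of bounding boxes, three per cell at each scale in YOLO v3. YOLO v3 uses logistic regression (an objectness score per box) to decide which of these boxes is used for the prediction.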
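The responsibility rule can be sketched in a few lines. This minimal sketch assumes pixel coordinates for the object center and a single grid size. The function name `responsible_cell` is hypothetical and does not appear in the keras-yolo3 code. The function floors the center's grid coordinates, which is what `preprocess_true_boxes()` in Appendix A does with normalized coordinates.

```python
def responsible_cell(cx, cy, img_w, img_h, grid=13):
    """Return (row, col) of the grid cell that contains the object centre (cx, cy).

    cx, cy are pixel coordinates; the image is divided into grid x grid cells.
    """
    col = min(int(cx * grid / img_w), grid - 1)  # clamp centres lying on the right border
    row = min(int(cy * grid / img_h), grid - 1)  # clamp centres lying on the bottom border
    return row, col


# On a 416x416 input with a 13x13 grid, each cell covers 32x32 pixels.
print(responsible_cell(200, 100, 416, 416))           # (3, 6)
print(responsible_cell(200, 100, 416, 416, grid=52))  # (12, 25)
```

The same object is assigned to a different cell at each scale. Which scale actually predicts it depends on the anchor matching described in Section 3.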

1. YOLO Structure

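In the YOLO series, the author gave an architecture diagram only in the v1 paper; the v2 and v3 papers contain none. A clear architecture diagram is nevertheless very important for understanding and learning YOLO. I found a complete YOLO v3 architecture diagram on the CSDN blog of "木盏". With that blogger's permission I obtained the high-resolution original, shown in Figure 1.1. The YOLO v3 architecture contains no pooling layers and no fully connected layers. Both the Darknet-53 backbone and the YOLO prediction branches are fully convolutional.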

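DBL in Figure 1.1 is the basic component of YOLO v3. As the DarknetConv2D_BN_Leaky function in yolo3.model defines it, a Darknet convolution layer is followed by BatchNormalization (BN) and LeakyReLU. In YOLO v3, BN and LeakyReLU are inseparable from every convolution layer except the final convolution of each output branch. Together the three layers form the smallest building block.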

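The backbone uses 5 resn structures, the large building blocks of YOLO v3. Here n is a number (res1, res2, …, res8, and so on) giving how many res_units the res_block contains. YOLO v2's darknet-19 has no residual structure. YOLO v3 moves up to darknet-53 and borrows the residual structure of ResNet, which makes a deeper network trainable. The res_block is illustrated in the lower-right corner of Figure 1.1, and its basic component is also the DBL.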
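The number of convolutions in the backbone follows from the res_block configuration in `darknet_body()` (Appendix A). This small counting sketch assumes, as `resblock_body()` does, that each res_block starts with one stride-2 DBL and that each res_unit contributes a 1×1 DBL and a 3×3 DBL.

```python
# (filters, number of res_units) for the five res_blocks, as passed in darknet_body()
RES_BLOCKS = [(64, 1), (128, 2), (256, 8), (512, 8), (1024, 4)]


def count_darknet53_convs(blocks=RES_BLOCKS):
    convs = 1  # the initial 3x3, 32-filter DBL
    for _filters, n_units in blocks:
        convs += 1            # stride-2 downsampling DBL at the start of each res_block
        convs += 2 * n_units  # each res_unit = 1x1 DBL + 3x3 DBL
    return convs


n_units = sum(n for _, n in RES_BLOCKS)
print(n_units, count_darknet53_convs())  # 23 52
```

The 23 res_units match the 23 add layers counted from the code. The 52 convolutions match the "52 Convolution2D layers" in the docstring of `darknet_body()`.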

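The prediction branches use tensor concatenation (concat). The upsampled output of a later layer is concatenated with an intermediate Darknet layer. Note that concatenation differs from the add operation in a res_unit. Concatenation enlarges the channel dimension of the tensor. Add sums element-wise and leaves the tensor shape unchanged.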
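This NumPy sketch shows the shape difference using channels-last tensors, as Keras does by default. The 256 and 512 channel counts mirror the first concatenation in `yolo_body()` (Appendix A) and are for illustration only.

```python
import numpy as np

# Channels-last tensors: (batch, H, W, C)
upsampled = np.zeros((1, 26, 26, 256))  # e.g. the upsampled prediction branch
skip = np.zeros((1, 26, 26, 512))       # e.g. an intermediate Darknet feature map
concat = np.concatenate([upsampled, skip], axis=-1)
print(concat.shape)  # (1, 26, 26, 768): channels are stacked

x = np.ones((1, 52, 52, 256))  # input of a res_unit
y = np.ones((1, 52, 52, 256))  # output of its 1x1 + 3x3 DBLs
added = x + y                  # what Add() does: element-wise sum
print(added.shape)   # (1, 52, 52, 256): shape unchanged
```

Add requires both inputs to have identical shapes. This is why the 3×3 DBL in each res_unit restores the channel count that the 1×1 DBL halved.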

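At the code level, Yolo_body has 252 layers in total:

- 23 add layers, one for each of the 23 res_units.
- 72 BN layers and 72 LeakyReLU layers. Every BN layer is followed by a LeakyReLU layer.
- 2 upsampling and 2 concatenation operations.
- 5 zero-padding layers, one per res_block.
- 75 convolution layers. 72 of them are followed by BN and LeakyReLU to form a DBL.

The remaining three convolution layers produce the three outputs at different scales. Each has 255 kernels for the 80 classes of COCO: 3×(80+4+1)=255. Here 3 is the number of bounding boxes per grid cell, 4 is the four box coordinates, and 1 is the confidence.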

2. Darknet-53 Feature Extraction Network (Backbone)

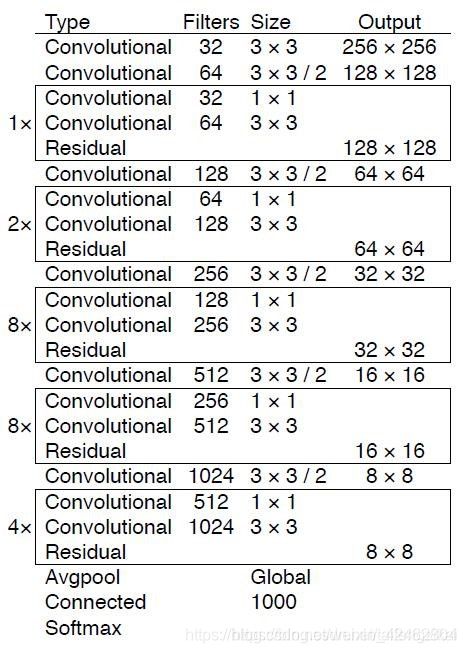

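YOLO v3 uses a 53-layer convolutional network built by stacking residual units. Joseph Redmon's experiments show that Darknet-53 balances classification accuracy and efficiency better than ResNet-101, ResNet-152 and Darknet-19. YOLO v3 does not chase speed for its own sake. Instead, it pursues better performance while staying real-time (fps > 60).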

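First, Darknet-53 is fully convolutional. During the YOLO v3 forward pass, tensor sizes are changed through the stride of the convolutions. Each convolution with stride 2 halves the side length of the feature map. As Figure 2.1 shows, Darknet-53 contains 5 convolutions with stride 2. After these 5 reductions, the feature map is 1/32 of the input size. The input size must therefore be a multiple of 32, and 416×416 is used. YOLO v2 performs its 5 size reductions with max pooling, whereas v3 performs them with 5 stride-2 convolutions.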
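The halving can be checked with the standard convolution output-size formula, floor((n + padding - kernel) / stride) + 1. This sketch assumes each downsampling step is the `ZeroPadding2D(((1,0),(1,0)))` followed by a 'valid' 3×3 stride-2 convolution used in `resblock_body()` (Appendix A). The helper names are illustrative.

```python
def downsample(size, kernel=3, stride=2, pad_before=1, pad_after=0):
    """Output side length of ZeroPadding2D(((1,0),(1,0))) + 'valid' 3x3 stride-2 conv."""
    return (size + pad_before + pad_after - kernel) // stride + 1


def backbone_sizes(input_size=416, n_downsamples=5):
    """Side lengths of the feature maps after each stride-2 convolution."""
    if input_size % 32 != 0:
        raise ValueError("input size must be a multiple of 32")
    sizes = [input_size]
    for _ in range(n_downsamples):
        sizes.append(downsample(sizes[-1]))
    return sizes


print(backbone_sizes(416))  # [416, 208, 104, 52, 26, 13]
print(backbone_sizes(608))  # [608, 304, 152, 76, 38, 19]
```

With one row and column of padding on the top and left, a 3×3 stride-2 'valid' convolution maps any even side length n exactly to n/2. This is why the explicit padding reproduces Darknet's behavior.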


Figure 2.1 Darknet-53 backbone

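Second, Darknet-53 introduces the residual structure. YOLO v2 still used a straight, VGG-like network, and such a network runs into gradient problems during training when it has too many layers. That is why Darknet-19 has only 19 layers. The residual structure of ResNet greatly reduces the difficulty of training deep networks. Darknet-53 can therefore go to 53 layers, with a clear gain in accuracy.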

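Darknet-53 serves only as the feature extractor. The source code uses only the convolution layers in front of its pooling layer to extract features. The multi-scale feature fusion and the prediction branches are therefore not part of this network.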

3. Bounding Box Prediction

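For the initial bounding box sizes, YOLO v3 still uses the k-means clustering of YOLO v2. This prior knowledge helps a great deal with bounding box initialization. Using many more boxes would help guarantee accuracy, but at a significant cost in speed.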

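YOLO v2 borrowed the anchor mechanism of the RPN in Faster R-CNN, but it determines the default box sizes by k-means clustering instead. Joseph Redmon changed the distance used in k-means to an IOU-based distance. YOLO v3 likewise uses 9 default boxes, whose sizes are obtained by running k-means on the dataset. On COCO, the 9 clusters are (10×13), (16×30), (33×23), (30×61), (62×45), (59×119), (116×90), (156×198) and (373×326).

The default boxes are assigned to the feature maps as follows:

- 13×13 feature map: [(116×90), (156×198), (373×326)]
- 26×26 feature map: [(30×61), (62×45), (59×119)]
- 52×52 feature map: [(10×13), (16×30), (33×23)]

The reason is that each cell of a larger feature map has a smaller receptive field and is more sensitive to small objects, so it gets the small anchor boxes. Each cell of a smaller feature map has a larger receptive field and is more sensitive to large objects, so it gets the large anchor boxes.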
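The following example shows why 1 - IOU is used rather than Euclidean distance. A box that is 20% larger than its anchor costs ten times more Euclidean distance at a large scale than at a small one, yet both have the same 1 - IOU. In this sketch the helper `wh_iou` is illustrative. Like the `iou()` method of the k-means script in this section, it compares width/height pairs as if the boxes shared the same center.

```python
import numpy as np


def wh_iou(boxes, anchors):
    """IOU between (w, h) pairs, assuming every box and anchor share one centre.

    boxes: (n, 2), anchors: (k, 2) -> (n, k)
    """
    inter = (np.minimum(boxes[:, None, 0], anchors[None, :, 0]) *
             np.minimum(boxes[:, None, 1], anchors[None, :, 1]))
    area_b = (boxes[:, 0] * boxes[:, 1])[:, None]
    area_a = (anchors[:, 0] * anchors[:, 1])[None, :]
    return inter / (area_b + area_a - inter)


small, small_anchor = np.array([[10.0, 10.0]]), np.array([[12.0, 12.0]])
large, large_anchor = np.array([[100.0, 100.0]]), np.array([[120.0, 120.0]])

# Same relative mismatch, Euclidean distances about 2.83 vs 28.28 ...
print(np.linalg.norm(small - small_anchor), np.linalg.norm(large - large_anchor))
# ... but the same 1 - IOU distance (1 - 100/144) for both
print(1 - wh_iou(small, small_anchor), 1 - wh_iou(large, large_anchor))
```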

import numpy as np


class YOLO_Kmeans:

    def __init__(self, cluster_number, filename):
        self.cluster_number = cluster_number
        self.filename = filename  # the original hard-coded "2012_train.txt" here and ignored the argument

    def iou(self, boxes, clusters):  # 1 box -> k clusters
        n = boxes.shape[0]
        k = self.cluster_number

        box_area = boxes[:, 0] * boxes[:, 1]
        box_area = box_area.repeat(k)
        box_area = np.reshape(box_area, (n, k))

        cluster_area = clusters[:, 0] * clusters[:, 1]
        cluster_area = np.tile(cluster_area, [1, n])
        cluster_area = np.reshape(cluster_area, (n, k))

        box_w_matrix = np.reshape(boxes[:, 0].repeat(k), (n, k))
        cluster_w_matrix = np.reshape(np.tile(clusters[:, 0], (1, n)), (n, k))
        min_w_matrix = np.minimum(cluster_w_matrix, box_w_matrix)

        box_h_matrix = np.reshape(boxes[:, 1].repeat(k), (n, k))
        cluster_h_matrix = np.reshape(np.tile(clusters[:, 1], (1, n)), (n, k))
        min_h_matrix = np.minimum(cluster_h_matrix, box_h_matrix)
        inter_area = np.multiply(min_w_matrix, min_h_matrix)

        # IOU of every box with every cluster (box and cluster share the same centre)
        result = inter_area / (box_area + cluster_area - inter_area)
        return result

    def avg_iou(self, boxes, clusters):
        accuracy = np.mean([np.max(self.iou(boxes, clusters), axis=1)])
        return accuracy

    def kmeans(self, boxes, k, dist=np.median):
        # Clustering
        box_number = boxes.shape[0]
        distances = np.empty((box_number, k))
        last_nearest = np.zeros((box_number,))
        np.random.seed()
        clusters = boxes[np.random.choice(
            box_number, k, replace=False)]  # init k clusters
        while True:
            # Euclidean distance is not used: larger boxes would produce more error than smaller ones.
            # The custom distance is d(box, centroid) = 1 - IOU(box, centroid). A small distance
            # should mean a large IOU, hence 1 - IOU.
            distances = 1 - self.iou(boxes, clusters)

            current_nearest = np.argmin(distances, axis=1)
            if (last_nearest == current_nearest).all():
                break  # clusters won't change
            for cluster in range(k):
                clusters[cluster] = dist(  # update clusters
                    boxes[current_nearest == cluster], axis=0)

            last_nearest = current_nearest

        return clusters

    def result2txt(self, data):
        # Write the anchors as "w,h, w,h, ..." to yolo_anchors.txt
        with open("yolo_anchors.txt", 'w') as f:
            row = np.shape(data)[0]
            for i in range(row):
                if i == 0:
                    x_y = "%d,%d" % (data[i][0], data[i][1])
                else:
                    x_y = ", %d,%d" % (data[i][0], data[i][1])
                f.write(x_y)

    def txt2boxes(self):
        # Each annotation line: image_path x_min,y_min,x_max,y_max,class_id ...
        dataSet = []
        with open(self.filename, 'r') as f:
            for line in f:
                infos = line.split()  # split() also drops the trailing newline
                length = len(infos)
                for i in range(1, length):
                    width = int(infos[i].split(",")[2]) - \
                        int(infos[i].split(",")[0])
                    height = int(infos[i].split(",")[3]) - \
                        int(infos[i].split(",")[1])
                    dataSet.append([width, height])
        result = np.array(dataSet)
        return result

    def txt2clusters(self):
        all_boxes = self.txt2boxes()
        result = self.kmeans(all_boxes, k=self.cluster_number)
        result = result[np.lexsort(result.T[0, None])]  # sort the anchors by width
        self.result2txt(result)
        print("K anchors:\n {}".format(result))
        print("Accuracy: {:.2f}%".format(
            self.avg_iou(all_boxes, result) * 100))


if __name__ == "__main__":
    cluster_number = 9
    filename = "2012_train.txt"
    kmeans = YOLO_Kmeans(cluster_number, filename)
    kmeans.txt2clusters()

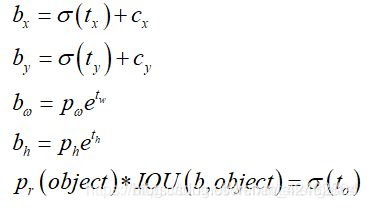

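YOLO v3 predicts locations relative to the grid cell: it predicts the offset of the b-box center from the top-left corner of its grid cell. The network directly predicts (tx, ty, tw, th, to). The position, size and confidence of the b-box are then computed with the coordinate-offset formulas shown in the figure above.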
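Written out, these are the decoding equations from the YOLOv2/YOLOv3 papers. Here σ is the sigmoid function, (c_x, c_y) is the grid cell offset, (p_w, p_h) is the prior (anchor) size, and (b_x, b_y, b_w, b_h) is the predicted box:

```latex
\begin{aligned}
b_x &= \sigma(t_x) + c_x \\
b_y &= \sigma(t_y) + c_y \\
b_w &= p_w \, e^{t_w} \\
b_h &= p_h \, e^{t_h} \\
\Pr(\text{object}) \cdot \mathrm{IOU}(b, \text{object}) &= \sigma(t_o)
\end{aligned}
```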

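tx, ty, tw and th are the model's raw outputs. cx and cy are the coordinates of the grid cell: cx is the column index and cy is the row index. For example, a 13×13 feature map has 13×13 grid cells, and the cell in row 0, column 1 has cx = 1 and cy = 0. pw and ph are the width and height of the prior (anchor) box before prediction. bx, by, bw and bh are the center coordinates and size of the predicted bounding box. These coordinates are trained with a sum of squared error loss, because this error can be computed quickly.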
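Here is a NumPy sketch that decodes one raw prediction with these formulas. The function `decode_cell` is hypothetical. It follows the `box_xy` / `box_wh` lines of `yolo_head()` in Appendix A, which divide by the grid size and the input size so that the results are fractions of the network input.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def decode_cell(t, cx, cy, anchor_wh, grid_wh, input_wh):
    """Decode one raw prediction (tx, ty, tw, th, to) of grid cell (cx, cy).

    Returns (bx, by, bw, bh, objectness) with the box normalised to [0, 1]
    of the network input.
    """
    tx, ty, tw, th, to = t
    bx = (sigmoid(tx) + cx) / grid_wh[0]          # centre x as a fraction of the input width
    by = (sigmoid(ty) + cy) / grid_wh[1]          # centre y as a fraction of the input height
    bw = anchor_wh[0] * np.exp(tw) / input_wh[0]  # pw (pixels) * e^tw -> fraction of input width
    bh = anchor_wh[1] * np.exp(th) / input_wh[1]
    return bx, by, bw, bh, sigmoid(to)


# All-zero logits: the box sits at the centre of cell (cx=6, cy=3) with exactly the anchor's size.
print(decode_cell((0, 0, 0, 0, 0), cx=6, cy=3, anchor_wh=(116, 90),
                  grid_wh=(13, 13), input_wh=(416, 416)))
```

Because σ(tx) stays in (0, 1), the predicted center can never leave its own cell. This is the stability argument the YOLOv2 paper gives for this parameterization.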

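YOLO v3 uses logistic regression to predict an objectness score for each bounding box. The score should be 1 if the bounding box prior overlaps a ground-truth object more than any other prior does. If a prior is not the best but still overlaps a ground-truth object by more than a threshold (0.5 in YOLO v3), its prediction is ignored. YOLO v3 assigns only one prior to each ground-truth object. A prior that is not assigned to a ground-truth object incurs no coordinate or class prediction loss, only objectness loss.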
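The assignment rule can be sketched for a single ground-truth box. This is a simplification. `ious` holds the IOU of each prior with the object, -1 marks "ignored", and the function name is hypothetical. The Keras code in Appendix A splits the rule in two. It picks the best prior by anchor-shape IOU in `preprocess_true_boxes()`, and it applies the ignore test to predicted boxes in `yolo_loss()`.

```python
import numpy as np


def objectness_targets(ious, ignore_thresh=0.5):
    """Objectness targets for one ground-truth object.

    ious: (num_priors,) IOU of each prior with the object.
    Returns 1 for the best prior, -1 (ignored: no objectness loss) for other
    priors above ignore_thresh, and 0 (background) for the rest.
    """
    targets = np.zeros_like(ious, dtype=np.int64)
    targets[ious > ignore_thresh] = -1  # good overlap but not the best: ignored
    targets[np.argmax(ious)] = 1        # only the best prior is responsible for the object
    return targets


print(objectness_targets(np.array([0.30, 0.72, 0.55, 0.10])))  # [ 0  1 -1  0]
```

The best prior is assigned even when its IOU is below the threshold, so every ground-truth object always gets exactly one positive prior.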

4. Class Prediction

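The main change in class prediction is the move from single-label to multi-label classification. The softmax layer, used for single-label multi-class classification, is replaced by logistic classifiers for multi-label classification. The softmax classifier in YOLO v2 assumes that an object belongs to exactly one class and assigns each box to the class with the highest score. In complex scenes, however, an object may belong to several (overlapping) classes. YOLO v3 therefore replaces the softmax layer with independent logistic classifiers, one per class, without losing accuracy.

For example, suppose your classes include both woman and person, and an image contains a woman. The detection result should then carry both the woman and the person labels. This is multi-label classification, and it needs a logistic classifier that makes a binary decision for each class. The logistic classifier is built on the sigmoid function, which squashes its input into the range 0 to 1. If the sigmoid output for a class is greater than 0.5, the object that the box is responsible for is considered to belong to that class.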
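This small NumPy comparison uses the woman/person example above. The logits are hypothetical values for one box. Softmax makes the classes compete, so two equally likely labels each stay below 0.5. Independent sigmoids can accept both.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


classes = ["woman", "person", "car"]
logits = np.array([2.0, 2.0, -3.0])  # hypothetical class logits for one box

p_soft = softmax(logits)  # probabilities compete and sum to 1
p_sig = sigmoid(logits)   # each class gets an independent yes/no probability

print(p_soft.round(3))                                 # [0.498 0.498 0.003]
print([c for c, p in zip(classes, p_sig) if p > 0.5])  # ['woman', 'person']
```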

5. Multi-Scale Prediction

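YOLO v3 makes predictions by fusing several scales. YOLO v2 had a passthrough layer intended to improve the accuracy of small-object detection. YOLO v3 strengthens this idea with FPN-style (feature pyramid networks) upsampling and fusion. It fuses 3 scales in the end (13×13, plus 26×26 and 52×52) and runs detection on the feature maps of all of them. Finer grid cells can detect finer objects, so small-object detection improves clearly.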

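As the architecture diagram in Figure 1.1 shows, YOLO v3 predicts 3 boxes per grid cell. Each box needs five basic parameters: (x, y, w, h, confidence). YOLO v3 outputs feature maps at 3 scales, shown as y1, y2 and y3 in Figure 1.1. All three have a depth of 255, and their side lengths follow the ratio 13:26:52.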

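Each prediction produces a feature tensor of size N × N × [3 × (4 + 1 + 80)]. N is the grid size, 3 is the number of boxes per grid cell, 4 is the number of box coordinates, 1 is the objectness prediction, and 80 is the number of classes. COCO has 80 classes, so each box outputs one probability per class. Hence 3 × (5 + 80) = 255, which is where the 255 comes from.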
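The depth of each output follows directly from the number of classes. In this tiny sketch the function name is illustrative. The 4-class case gives the 27 channels that appear in the comments of Appendix A.

```python
def head_depth(num_classes, boxes_per_cell=3):
    # each box: 4 coordinates + 1 objectness score + one score per class
    return boxes_per_cell * (4 + 1 + num_classes)


print(head_depth(80))  # 255 (COCO)
print(head_depth(4))   # 27, as in the appendix comments for a 4-class dataset
```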

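YOLO v3 produces these multi-scale feature maps by upsampling.

1. On top of the Darknet-53 feature map, six DBL blocks and a final convolution layer produce the first feature map, on which the first prediction is made.
2. In the y1 branch, the output of the third-to-last convolution layer goes through one DBL block and a (2,2) upsampling. The upsampled features are concatenated with the output of the second res8 block. Six DBL blocks plus a final convolution layer then produce the second feature map, on which the second prediction is made.
3. In the y2 branch, the output of the third-to-last convolution layer likewise goes through one DBL block and a (2,2) upsampling. The result is concatenated with the output of the first res8 block. Six DBL blocks plus a final convolution layer produce the third feature map, on which the third prediction is made.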

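For the network as a whole, the multi-scale outputs have sizes y1: (13×13), y2: (26×26) and y3: (52×52).

- The network takes a (416×416) image and downsamples it with 5 stride-2 convolutions (416 / 2^5 = 13), giving the (13×13) output y1.
- The third-to-last convolution layer of the y1 branch is upsampled (×2) and concatenated with the last 26×26 feature map of the backbone, giving the (26×26) output y2.
- The third-to-last convolution layer of the y2 branch is upsampled (×2) and concatenated with the last 52×52 feature map of the backbone, giving the (52×52) output y3.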
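The scale arithmetic of the last two paragraphs can be checked in a few lines. This sketch assumes a square input and the three strides 32, 16 and 8 that `preprocess_true_boxes()` uses (`{0:32, 1:16, 2:8}`). It also counts how many candidate boxes one image produces before filtering.

```python
def yolo_output_shapes(input_size=416, num_classes=80, boxes_per_cell=3):
    """Output shapes (H, W, depth) for y1, y2, y3 and the total number of predicted boxes."""
    if input_size % 32 != 0:
        raise ValueError("input size must be a multiple of 32")
    depth = boxes_per_cell * (5 + num_classes)
    strides = [32, 16, 8]  # y1, y2, y3
    shapes = [(input_size // s, input_size // s, depth) for s in strides]
    total_boxes = sum(h * w * boxes_per_cell for h, w, _ in shapes)
    return shapes, total_boxes


shapes, total = yolo_output_shapes()
print(shapes)  # [(13, 13, 255), (26, 26, 255), (52, 52, 255)]
print(total)   # 10647 candidate boxes per 416x416 image
```

These roughly ten thousand boxes are what the score threshold and per-class non-maximum suppression in `yolo_eval()` (Appendix A) reduce to the final detections.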

6. Loss Function

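The loss function is essential to mastering YOLO. The YOLO v3 paper does not state its loss function explicitly. In fact, among the YOLO papers only YOLO v1 gives an explicit loss formula. YOLO v1 uses a sum-squared error loss, which simply adds up squared differences. An object detector must determine several key pieces of information: (x, y), (w, h), class and confidence. These form the four groups above, and the loss for each group should match its characteristics. Adding the four losses gives the final loss function, so a single loss function drives end-to-end training.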

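The v3 loss function can be read off the code. It covers the same four groups, but with some adjustments compared with the plain sum-squared error of v1. The Keras code for the YOLO v3 loss function is in Appendix A (yolo3.model). Ignoring constant factors, the w, h loss still uses sum-squared error, while all the other terms use binary cross-entropy. The terms are then added together.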

# Excerpt from yolo_loss() in Appendix A; these lines run inside the loop over the output layers.
xy_loss = object_mask * box_loss_scale * K.binary_crossentropy(raw_true_xy, raw_pred[...,0:2], from_logits=True)
wh_loss = object_mask * box_loss_scale * 0.5 * K.square(raw_true_wh-raw_pred[...,2:4])
# Confidence (objectness)
confidence_loss = object_mask * K.binary_crossentropy(object_mask, raw_pred[...,4:5], from_logits=True) + \
    (1-object_mask) * K.binary_crossentropy(object_mask, raw_pred[...,4:5], from_logits=True) * ignore_mask
# Classification
class_loss = object_mask * K.binary_crossentropy(true_class_probs, raw_pred[...,5:], from_logits=True)

xy_loss = K.sum(xy_loss) / mf
wh_loss = K.sum(wh_loss) / mf
confidence_loss = K.sum(confidence_loss) / mf
class_loss = K.sum(class_loss) / mf
loss += xy_loss + wh_loss + confidence_loss + class_loss
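Two details in this excerpt are easy to miss. First, `K.binary_crossentropy(..., from_logits=True)` evaluates the sigmoid cross-entropy directly on raw logits. Second, `box_loss_scale = 2 - w*h` gives small boxes a larger weight. The NumPy sketch below shows both. It assumes the TensorFlow backend, whose logit form is max(x, 0) - x*z + log(1 + e^(-|x|)). The helper name and the example numbers are illustrative.

```python
import numpy as np


def bce_with_logits(target, logit):
    """Numerically stable sigmoid cross-entropy computed on a raw logit."""
    return np.maximum(logit, 0) - logit * target + np.log1p(np.exp(-np.abs(logit)))


# A ground-truth centre at x = 0.5 of the input, seen on the 13x13 grid in cell column 6
true_x, grid_w, cell_x = 0.5, 13, 6
raw_true_x = true_x * grid_w - cell_x  # target for sigmoid(tx): 0.5 of the way across the cell

# Small boxes get a larger weight (w, h normalised to [0, 1])
w, h = 0.05, 0.08
box_loss_scale = 2 - w * h

xy_loss = box_loss_scale * bce_with_logits(raw_true_x, 0.0)  # prediction tx = 0
print(raw_true_x, box_loss_scale, xy_loss)
```

With a soft target such as 0.5, the cross-entropy is minimized, but not zeroed, when σ(tx) equals the target. The xy term therefore does not generally fall to 0 even for a perfect prediction.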

7. Experiments

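This article uses qwe's Keras implementation of YOLO v3. The code is fairly easy to understand and to reproduce. The directory structure is shown in Figure 7.1.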

Appendix A

Both training and detection import yolo3.model:

"""YOLO_v3 Model Defined in Keras."""

from functools import wraps

import numpy as np
import tensorflow as tf
from keras import backend as K
from keras.layers import Conv2D, Add, ZeroPadding2D, UpSampling2D, Concatenate, MaxPooling2D
from keras.layers.advanced_activations import LeakyReLU
from keras.layers.normalization import BatchNormalization
from keras.models import Model
from keras.regularizers import l2

from yolo3.utils import compose

# DarknetConv2D(), DarknetConv2D_BN_Leaky() and resblock_body() together form the convolutional framework of darknet_body()
@wraps(Conv2D)
def DarknetConv2D(*args, **kwargs):
    """Wrapper to set Darknet parameters for Convolution2D."""
    darknet_conv_kwargs = {'kernel_regularizer': l2(5e-4)}
    # Stride-2 convolutions use 'valid' padding; resblock_body() adds the border with ZeroPadding2D
    darknet_conv_kwargs['padding'] = 'valid' if kwargs.get('strides')==(2,2) else 'same'
    darknet_conv_kwargs.update(kwargs)
    return Conv2D(*args, **darknet_conv_kwargs)

# Note: the activation of the Darknet convolution here is LeakyReLU
def DarknetConv2D_BN_Leaky(*args, **kwargs):
    """Darknet Convolution2D followed by BatchNormalization and LeakyReLU."""
    no_bias_kwargs = {'use_bias': False}
    no_bias_kwargs.update(kwargs)
    return compose(
        DarknetConv2D(*args, **no_bias_kwargs),
        BatchNormalization(),
        LeakyReLU(alpha=0.1))

def resblock_body(x, num_filters, num_blocks):
    '''A series of resblocks starting with a downsampling Convolution2D'''
    # Darknet uses left and top padding instead of 'same' mode
    # Each res_block starts with a ((1,0),(1,0)) padding layer: one row on top, one column on the left.
    x = ZeroPadding2D(((1,0),(1,0)))(x)
    x = DarknetConv2D_BN_Leaky(num_filters, (3,3), strides=(2,2))(x)
    for i in range(num_blocks):
        y = compose(
            DarknetConv2D_BN_Leaky(num_filters//2, (1,1)),
            DarknetConv2D_BN_Leaky(num_filters, (3,3)))(x)
        x = Add()([x,y])
    return x

# Build the darknet network: 52 convolution layers in five res_blocks
def darknet_body(x):
    '''Darknet body having 52 Convolution2D layers'''
    x = DarknetConv2D_BN_Leaky(32, (3,3))(x)
    x = resblock_body(x, 64, 1)
    x = resblock_body(x, 128, 2)
    x = resblock_body(x, 256, 8)
    x = resblock_body(x, 512, 8)
    x = resblock_body(x, 1024, 4)
    return x

# The Convs of each prediction branch are implemented by make_last_layers().
def make_last_layers(x, num_filters, out_filters):
    '''6 Conv2D_BN_Leaky layers followed by a Conv2D_linear layer'''
    # x (after five DBLs) also feeds the next, finer scale
    x = compose(
        DarknetConv2D_BN_Leaky(num_filters, (1,1)),
        DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),
        DarknetConv2D_BN_Leaky(num_filters, (1,1)),
        DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),
        DarknetConv2D_BN_Leaky(num_filters, (1,1)))(x)
    # y is the prediction output of this scale
    y = compose(
        DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),
        DarknetConv2D(out_filters, (1,1)))(x)
    return x, y

def yolo_body(inputs, num_anchors, num_classes):
    """Create YOLO_V3 model CNN body in Keras."""
    darknet = Model(inputs, darknet_body(inputs))  # darknet_body(inputs) builds the Darknet network
    # The following statements implement the feature pyramid (FPN).
    x, y1 = make_last_layers(darknet.output, 512, num_anchors*(num_classes+5))

    # compose() evaluates its functions from left to right
    x = compose(
        DarknetConv2D_BN_Leaky(256, (1,1)),
        UpSampling2D(2))(x)
    x = Concatenate()([x,darknet.layers[152].output])  # output of the second res8 block (26x26)
    x, y2 = make_last_layers(x, 256, num_anchors*(num_classes+5))

    x = compose(
        DarknetConv2D_BN_Leaky(128, (1,1)),
        UpSampling2D(2))(x)
    x = Concatenate()([x,darknet.layers[92].output])  # output of the first res8 block (52x52)
    x, y3 = make_last_layers(x, 128, num_anchors*(num_classes+5))

    return Model(inputs, [y1,y2,y3])

def tiny_yolo_body(inputs, num_anchors, num_classes):
    '''Create Tiny YOLO_v3 model CNN body in keras.'''
    x1 = compose(
        DarknetConv2D_BN_Leaky(16, (3,3)),
        MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'),
        DarknetConv2D_BN_Leaky(32, (3,3)),
        MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'),
        DarknetConv2D_BN_Leaky(64, (3,3)),
        MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'),
        DarknetConv2D_BN_Leaky(128, (3,3)),
        MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'),
        DarknetConv2D_BN_Leaky(256, (3,3)))(inputs)
    x2 = compose(
        MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'),
        DarknetConv2D_BN_Leaky(512, (3,3)),
        MaxPooling2D(pool_size=(2,2), strides=(1,1), padding='same'),
        DarknetConv2D_BN_Leaky(1024, (3,3)),
        DarknetConv2D_BN_Leaky(256, (1,1)))(x1)
    y1 = compose(
        DarknetConv2D_BN_Leaky(512, (3,3)),
        DarknetConv2D(num_anchors*(num_classes+5), (1,1)))(x2)

    x2 = compose(
        DarknetConv2D_BN_Leaky(128, (1,1)),
        UpSampling2D(2))(x2)
    y2 = compose(
        Concatenate(),
        DarknetConv2D_BN_Leaky(256, (3,3)),
        DarknetConv2D(num_anchors*(num_classes+5), (1,1)))([x2,x1])

    return Model(inputs, [y1,y2])

def yolo_head(feats, anchors, num_classes, input_shape, calc_loss=False):
    """Convert final layer features to bounding box parameters."""
    num_anchors = len(anchors)  # num_anchors = 3
    # Reshape to batch, height, width, num_anchors, box_params.
    anchors_tensor = K.reshape(K.constant(anchors), [1, 1, 1, num_anchors, 2])  # reshape -> (1,1,1,3,2)

    grid_shape = K.shape(feats)[1:3]  # height, width: (?,13,13,27) -> (13,13)
    # grid_y and grid_x build the grid with arange, reshape and tile: grid_y holds the row indices
    # 0..12 and grid_x the column indices 0..12; concatenating them gives grid.
    grid_y = K.tile(K.reshape(K.arange(0, stop=grid_shape[0]), [-1, 1, 1, 1]),
        [1, grid_shape[1], 1, 1])
    grid_x = K.tile(K.reshape(K.arange(0, stop=grid_shape[1]), [1, -1, 1, 1]),
        [grid_shape[0], 1, 1, 1])
    grid = K.concatenate([grid_x, grid_y])
    grid = K.cast(grid, K.dtype(feats))  # K.cast(): give grid the same dtype as feats

    # Unfold the last dimension of feats, separating the anchors from the other values
    # (4 box values + 1 box confidence + class scores)
    feats = K.reshape(
        feats, [-1, grid_shape[0], grid_shape[1], num_anchors, num_classes + 5])

    # Adjust predictions to each spatial grid point and anchor size.
    # xywh follow the bounding box regression formulas:
    # tx, ty, tw, th are values of feats; bx, by, bw, bh are the outputs.
    box_xy = (K.sigmoid(feats[..., :2]) + grid) / K.cast(grid_shape[::-1], K.dtype(feats))  # sigmoid: σ
    box_wh = K.exp(feats[..., 2:4]) * anchors_tensor / K.cast(input_shape[::-1], K.dtype(feats))
    box_confidence = K.sigmoid(feats[..., 4:5])
    box_class_probs = K.sigmoid(feats[..., 5:])
    # In Python, "..." (Ellipsis) keeps all other dimensions unchanged and indexes only the leading or trailing one.

    if calc_loss == True:
        return grid, feats, box_xy, box_wh
    # box_xy and box_wh are normalised (0~1) to the network input;
    # yolo_correct_boxes() later converts them to image coordinates.
    return box_xy, box_wh, box_confidence, box_class_probs

def yolo_correct_boxes(box_xy, box_wh, input_shape, image_shape):  # get the corrected x, y, w, h
    '''Get corrected boxes'''
    box_yx = box_xy[..., ::-1]  # "::-1" reverses the order along the last axis (xy -> yx)
    box_hw = box_wh[..., ::-1]
    input_shape = K.cast(input_shape, K.dtype(box_yx))
    image_shape = K.cast(image_shape, K.dtype(box_yx))
    # new_shape: size of the image inside the letterboxed input; offset: padding as a fraction of the input
    new_shape = K.round(image_shape * K.min(input_shape/image_shape))
    offset = (input_shape-new_shape)/2./input_shape
    scale = input_shape/new_shape
    box_yx = (box_yx - offset) * scale
    box_hw *= scale

    box_mins = box_yx - (box_hw / 2.)
    box_maxes = box_yx + (box_hw / 2.)
    boxes = K.concatenate([
        box_mins[..., 0:1],   # y_min
        box_mins[..., 1:2],   # x_min
        box_maxes[..., 0:1],  # y_max
        box_maxes[..., 1:2]   # x_max
    ])

    # Scale boxes back to original image shape.
    boxes *= K.concatenate([image_shape, image_shape])
    return boxes

def yolo_boxes_and_scores(feats, anchors, num_classes, input_shape, image_shape):
    # feats: output of one scale, e.g. (?,13,13,27); anchors: the 3 anchor boxes of this scale
    # num_classes: number of classes (4 here); input_shape: (416,416); image_shape: original image size
    '''Process Conv layer output'''
    box_xy, box_wh, box_confidence, box_class_probs = yolo_head(feats,
        anchors, num_classes, input_shape)
    # yolo_head(): box_xy is the box centre, relative (0~1); box_wh is the box width/height, relative (0~1);
    # box_confidence is the objectness; box_class_probs are the class probabilities
    boxes = yolo_correct_boxes(box_xy, box_wh, input_shape, image_shape)
    # Convert the relative (0~1) box_xy and box_wh to real coordinates; boxes holds (y_min, x_min, y_max, x_max)
    boxes = K.reshape(boxes, [-1, 4])
    # Flatten all grid cells into a list of boxes: (?,13,13,3,4) -> (?,4), ?: number of boxes
    box_scores = box_confidence * box_class_probs
    # box score = objectness * class probability
    box_scores = K.reshape(box_scores, [-1, num_classes])
    # Flatten the box scores to (?, num_classes), e.g. (?,4); ?: number of boxes
    return boxes, box_scores

def yolo_eval(yolo_outputs,
              # Model outputs: [(?,13,13,27), (?,26,26,27), (?,52,52,27)]; ?: batch size;
              # 13/26/52: the three prediction scales; 27 = 3*(4+1+4) values for 4 classes
              anchors,
              # [(10,13), (16,30), (33,23), (30,61), (62,45), (59,119), (116,90), (156,198), (373,326)]
              num_classes,  # number of classes; this dataset has 4
              image_shape,  # TF placeholder holding the image size (h, w), e.g. (416, 416)
              max_boxes=20,  # at most 20 boxes per class per image
              # score_threshold: boxes scoring below it are dropped; lower it to keep more boxes,
              # raise it to keep fewer
              score_threshold=.6,
              # iou_threshold: among boxes of the same class, overlapping boxes with IoU above it are
              # suppressed; raise it when objects overlap a lot, lower it when they rarely overlap
              iou_threshold=.5):
    """Evaluate YOLO model on given input and return filtered boxes."""
    num_layers = len(yolo_outputs)  # number of YOLO output layers; num_layers = 3 -> 13/26/52
    # Each output layer (scale) uses a different set of anchor sizes.
    anchor_mask = [[6,7,8], [3,4,5], [0,1,2]] if num_layers==3 else [[3,4,5], [1,2,3]]  # default setting
    # 3 anchor boxes per layer, e.g. 13x13 gets [6,7,8], i.e. [(116,90), (156,198), (373,326)]
    input_shape = K.shape(yolo_outputs[0])[1:3] * 32
    # Output shape (?,13,13,255): multiplying dimensions 1 and 2 by 32 gives the input size (416,416)
    boxes = []
    box_scores = []
    for l in range(num_layers):
        # yolo_boxes_and_scores() is defined above in yolo3.model
        _boxes, _box_scores = yolo_boxes_and_scores(yolo_outputs[l],
            anchors[anchor_mask[l]], num_classes, input_shape, image_shape)
        boxes.append(_boxes)
        box_scores.append(_box_scores)
    boxes = K.concatenate(boxes, axis=0)  # K.concatenate: merge the three scales -> (?,4)
    box_scores = K.concatenate(box_scores, axis=0)  # -> (?, num_classes)

    mask = box_scores >= score_threshold
    # Boolean mask: keep only scores at or above score_threshold
    max_boxes_tensor = K.constant(max_boxes, dtype='int32')  # maximum number of boxes: 20
    boxes_ = []
    scores_ = []
    classes_ = []
    for c in range(num_classes):
        # TODO: use keras backend instead of tf.
        class_boxes = tf.boolean_mask(boxes, mask[:, c])  # boxes of class c that pass the mask
        class_box_scores = tf.boolean_mask(box_scores[:, c], mask[:, c])
        # scores of class c that pass the mask
        nms_index = tf.image.non_max_suppression(  # run non-maximum suppression
            class_boxes, class_box_scores, max_boxes_tensor, iou_threshold=iou_threshold)
        class_boxes = K.gather(class_boxes, nms_index)
        # K.gather: select class_boxes by the indices in nms_index
        class_box_scores = K.gather(class_box_scores, nms_index)
        # select class_box_scores by the indices in nms_index
        classes = K.ones_like(class_box_scores, 'int32') * c  # class label c for every kept box
        boxes_.append(class_boxes)
        scores_.append(class_box_scores)
        classes_.append(classes)
    boxes_ = K.concatenate(boxes_, axis=0)
    # K.concatenate() joins tensors of the same rank; boxes_ becomes (?,4), ?: number of boxes;
    # 4: (y_min, x_min, y_max, x_max)
    scores_ = K.concatenate(scores_, axis=0)
    classes_ = K.concatenate(classes_, axis=0)

    return boxes_, scores_, classes_

# Once the images are resized to a fixed size, the matching training targets are generated
# by preprocess_true_boxes() in model.py
def preprocess_true_boxes(true_boxes, input_shape, anchors, num_classes):
    '''Preprocess true boxes to training input format

    Parameters
    ----------
    true_boxes: array, shape=(m, T, 5)
        Absolute x_min, y_min, x_max, y_max, class_id relative to input_shape.
    input_shape: array-like, hw, multiples of 32
    anchors: array, shape=(N, 2), wh
    num_classes: integer

    Returns
    -------
    y_true: list of array, shape like yolo_outputs, xywh are relative value

    '''
    assert (true_boxes[..., 4]<num_classes).all(), 'class id must be less than num_classes'
    num_layers = len(anchors)//3  # default setting
    # Each output layer (scale) uses a different set of anchor sizes.
    anchor_mask = [[6,7,8], [3,4,5], [0,1,2]] if num_layers==3 else [[3,4,5], [1,2,3]]

    true_boxes = np.array(true_boxes, dtype='float32')
    input_shape = np.array(input_shape, dtype='int32')
    boxes_xy = (true_boxes[..., 0:2] + true_boxes[..., 2:4]) // 2
    boxes_wh = true_boxes[..., 2:4] - true_boxes[..., 0:2]
    # true_boxes are normalised here, so their values are below 1 and box_loss_scale = 2 - w*h is always positive.
    true_boxes[..., 0:2] = boxes_xy/input_shape[::-1]
    true_boxes[..., 2:4] = boxes_wh/input_shape[::-1]

    m = true_boxes.shape[0]
    grid_shapes = [input_shape//{0:32, 1:16, 2:8}[l] for l in range(num_layers)]
    y_true = [np.zeros((m,grid_shapes[l][0],grid_shapes[l][1],len(anchor_mask[l]),5+num_classes),
        dtype='float32') for l in range(num_layers)]

    # Expand dim to apply broadcasting.
    anchors = np.expand_dims(anchors, 0)
    anchor_maxes = anchors / 2.
    anchor_mins = -anchor_maxes
    valid_mask = boxes_wh[..., 0]>0

    # Each image is processed separately.
    for b in range(m):
        # Discard zero rows.
        wh = boxes_wh[b, valid_mask[b]]
        if len(wh)==0: continue
        # Expand dim to apply broadcasting.
        wh = np.expand_dims(wh, -2)
        box_maxes = wh / 2.
        box_mins = -box_maxes

        intersect_mins = np.maximum(box_mins, anchor_mins)
        intersect_maxes = np.minimum(box_maxes, anchor_maxes)
        intersect_wh = np.maximum(intersect_maxes - intersect_mins, 0.)
        intersect_area = intersect_wh[..., 0] * intersect_wh[..., 1]
        box_area = wh[..., 0] * wh[..., 1]
        anchor_area = anchors[..., 0] * anchors[..., 1]
        iou = intersect_area / (box_area + anchor_area - intersect_area)

        # Find best anchor for each true box
        # (every input box is matched against the 9 preset anchors)
        best_anchor = np.argmax(iou, axis=-1)

        for t, n in enumerate(best_anchor):
            for l in range(num_layers):
                if n in anchor_mask[l]:
                    i = np.floor(true_boxes[b,t,0]*grid_shapes[l][1]).astype('int32')
                    j = np.floor(true_boxes[b,t,1]*grid_shapes[l][0]).astype('int32')
                    k = anchor_mask[l].index(n)
                    c = true_boxes[b,t, 4].astype('int32')
                    # Fill in the targets: each of the T boxes is written into y_true[l],
                    # whose shape is (m, H, W, 3, 5+num_classes) for layer l.
                    y_true[l][b, j, i, k, 0:4] = true_boxes[b,t, 0:4]
                    y_true[l][b, j, i, k, 4] = 1
                    y_true[l][b, j, i, k, 5+c] = 1

    return y_true

def box_iou(b1, b2):
    '''Return iou tensor

    Parameters
    ----------
    b1: tensor, shape=(i1,...,iN, 4), xywh
    b2: tensor, shape=(j, 4), xywh

    Returns
    -------
    iou: tensor, shape=(i1,...,iN, j)

    '''

    # Expand dim to apply broadcasting.
    b1 = K.expand_dims(b1, -2)
    b1_xy = b1[..., :2]
    b1_wh = b1[..., 2:4]
    b1_wh_half = b1_wh/2.
    b1_mins = b1_xy - b1_wh_half
    b1_maxes = b1_xy + b1_wh_half

    # Expand dim to apply broadcasting.
    b2 = K.expand_dims(b2, 0)
    b2_xy = b2[..., :2]
    b2_wh = b2[..., 2:4]
    b2_wh_half = b2_wh/2.
    b2_mins = b2_xy - b2_wh_half
    b2_maxes = b2_xy + b2_wh_half

    intersect_mins = K.maximum(b1_mins, b2_mins)
    intersect_maxes = K.minimum(b1_maxes, b2_maxes)
    intersect_wh = K.maximum(intersect_maxes - intersect_mins, 0.)
    intersect_area = intersect_wh[..., 0] * intersect_wh[..., 1]
    b1_area = b1_wh[..., 0] * b1_wh[..., 1]
    b2_area = b2_wh[..., 0] * b2_wh[..., 1]
    iou = intersect_area / (b1_area + b2_area - intersect_area)

    return iou

def yolo_loss(args, anchors, num_classes, ignore_thresh=.5, print_loss=False):
    '''Return yolo_loss tensor

    Parameters
    ----------
    yolo_outputs: list of tensor, the output of yolo_body or tiny_yolo_body
    y_true: list of array, the output of preprocess_true_boxes
    anchors: array, shape=(N, 2), wh
    num_classes: integer
    ignore_thresh: float, the iou threshold whether to ignore object confidence loss

    Returns
    -------
    loss: tensor, shape=(1,)

    '''
    num_layers = len(anchors)//3  # default setting
    yolo_outputs = args[:num_layers]
    y_true = args[num_layers:]
    # Each output layer (scale) uses a different set of anchor sizes.
    anchor_mask = [[6,7,8], [3,4,5], [0,1,2]] if num_layers==3 else [[3,4,5], [1,2,3]]
    # From the model outputs, compute the input image shape and the grid size of each of the 3 layers.
    input_shape = K.cast(K.shape(yolo_outputs[0])[1:3] * 32, K.dtype(y_true[0]))
    grid_shapes = [K.cast(K.shape(yolo_outputs[l])[1:3], K.dtype(y_true[0])) for l in range(num_layers)]
    loss = 0
    m = K.shape(yolo_outputs[0])[0]  # batch size, tensor
    mf = K.cast(m, K.dtype(yolo_outputs[0]))

    # The loss is computed separately for each of the three layers
    for l in range(num_layers):
        # Objectness (confidence) target
        object_mask = y_true[l][..., 4:5]
        # Class targets
        true_class_probs = y_true[l][..., 5:]

        # raw_pred is yolo_outputs[l] reshaped by yolo_head(); its values are unchanged.
        grid, raw_pred, pred_xy, pred_wh = yolo_head(yolo_outputs[l],
             anchors[anchor_mask[l]], num_classes, input_shape, calc_loss=True)
        pred_box = K.concatenate([pred_xy, pred_wh])

        # Darknet raw box to calculate loss.
        # (The ground truth is converted back into raw Darknet offsets for the loss.)
        raw_true_xy = y_true[l][..., :2]*grid_shapes[l][::-1] - grid
        raw_true_wh = K.log(y_true[l][..., 2:4] / anchors[anchor_mask[l]] * input_shape[::-1])
        raw_true_wh = K.switch(object_mask, raw_true_wh, K.zeros_like(raw_true_wh))  # avoid log(0)=-inf
        box_loss_scale = 2 - y_true[l][...,2:3]*y_true[l][...,3:4]

        # Find ignore mask, iterate over each of batch.
        ignore_mask = tf.TensorArray(K.dtype(y_true[0]), size=1, dynamic_size=True)
        object_mask_bool = K.cast(object_mask, 'bool')

        # loop_body computes, for each image in the batch, the best IoU of every predicted box
        def loop_body(b, ignore_mask):
            # tf.boolean_mask applies a boolean mask to a tensor (NumPy equivalent: tensor[mask]);
            # the objectness flag of y_true selects the valid ground-truth boxes.
            true_box = tf.boolean_mask(y_true[l][b,...,0:4], object_mask_bool[b,...,0])
            iou = box_iou(pred_box[b], true_box)
            best_iou = K.max(iou, axis=-1)
            # A predicted box whose best IoU with every ground truth is below ignore_thresh is treated
            # as background (mask = 1); boxes above the threshold get mask = 0 (no no-object loss).
            ignore_mask = ignore_mask.write(b, K.cast(best_iou<ignore_thresh, K.dtype(true_box)))
            return b+1, ignore_mask
        _, ignore_mask = K.control_flow_ops.while_loop(lambda b,*args: b<m, loop_body, [0, ignore_mask])
        ignore_mask = ignore_mask.stack()
        ignore_mask = K.expand_dims(ignore_mask, -1)

        # K.binary_crossentropy is helpful to avoid exp overflow.
        xy_loss = object_mask * box_loss_scale * K.binary_crossentropy(raw_true_xy, raw_pred[...,0:2], from_logits=True)
        wh_loss = object_mask * box_loss_scale * 0.5 * K.square(raw_true_wh-raw_pred[...,2:4])
        # Confidence (objectness)
        confidence_loss = object_mask * K.binary_crossentropy(object_mask, raw_pred[...,4:5], from_logits=True) + \
            (1-object_mask) * K.binary_crossentropy(object_mask, raw_pred[...,4:5], from_logits=True) * ignore_mask
        # Classification
        class_loss = object_mask * K.binary_crossentropy(true_class_probs, raw_pred[...,5:], from_logits=True)

        xy_loss = K.sum(xy_loss) / mf
        wh_loss = K.sum(wh_loss) / mf
        confidence_loss = K.sum(confidence_loss) / mf
        class_loss = K.sum(class_loss) / mf
        loss += xy_loss + wh_loss + confidence_loss + class_loss
        if print_loss:
            loss = tf.Print(loss, [loss, xy_loss, wh_loss, confidence_loss, class_loss, K.sum(ignore_mask)], message='loss: ')
    return loss

Appendix B

Both training and detection import yolo3.utils:

# yolo3.utils holds the other helper functions, mainly the data-augmentation methods of keras-yolo.
"""Miscellaneous utility functions."""

from functools import reduce

from PIL import Image
import numpy as np
from matplotlib.colors import rgb_to_hsv, hsv_to_rgb


def compose(*funcs):
    """Compose arbitrarily many functions, evaluated left to right.

    Reference: https://mathieularose.com/function-composition-in-python/
    """
    # return lambda x: reduce(lambda v, f: f(v), funcs, x)
    if funcs:
        return reduce(lambda f, g: lambda *a, **kw: g(f(*a, **kw)), funcs)
    else:
        raise ValueError('Composition of empty sequence not supported.')

def letterbox_image(image, size):
    '''resize image with unchanged aspect ratio using padding'''
    iw, ih = image.size
    w, h = size
    scale = min(w/iw, h/ih)
    nw = int(iw*scale)
    nh = int(ih*scale)

    image = image.resize((nw,nh), Image.BICUBIC)
    new_image = Image.new('RGB', size, (128,128,128))
    new_image.paste(image, ((w-nw)//2, (h-nh)//2))
    return new_image

def rand(a=0, b=1):
    return np.random.rand()*(b-a) + a

# Data processing is implemented in get_random_data() in utils.py
def get_random_data(annotation_line, input_shape, random=True, max_boxes=20, jitter=.3, hue=.1, sat=1.5, val=1.5, proc_img=True):
    '''random preprocessing for real-time data augmentation'''
    line = annotation_line.split()
    image = Image.open(line[0])
    iw, ih = image.size
    h, w = input_shape
    box = np.array([np.array(list(map(int,box.split(',')))) for box in line[1:]])

    # Non-random branch (no augmentation)
    if not random:
        # resize image
        # Scale factor that keeps the aspect ratio
        scale = min(w/iw, h/ih)
        nw = int(iw*scale)
        nh = int(ih*scale)
        # Offsets that centre the resized image
        dx = (w-nw)//2
        dy = (h-nh)//2
        image_data=0
        if proc_img:
            image = image.resize((nw,nh), Image.BICUBIC)
            # Gray background
            new_image = Image.new('RGB', (w,h), (128,128,128))
            # Paste the resized image onto the background
            new_image.paste(image, (dx, dy))
            # Normalise to [0, 1]
            image_data = np.array(new_image)/255.

        # correct boxes
        box_data = np.zeros((max_boxes,5))
        if len(box)>0:
            np.random.shuffle(box)
            # At most max_boxes (20) boxes.
            if len(box)>max_boxes: box = box[:max_boxes]
            # Map the boxes into the resized image with the same scale and offsets
            box[:, [0,2]] = box[:, [0,2]]*scale + dx
            box[:, [1,3]] = box[:, [1,3]]*scale + dy
            box_data[:len(box)] = box

        return image_data, box_data

    # resize image
    # Random aspect ratio
    new_ar = w/h * rand(1-jitter,1+jitter)/rand(1-jitter,1+jitter)
    # Random scale factor
    scale = rand(.25, 2)
    # New width and height, possibly up to 2x larger
    if new_ar < 1:
        nh = int(scale*h)
        nw = int(nh*new_ar)
    else:
        nw = int(scale*w)
        nh = int(nw/new_ar)
    image = image.resize((nw,nh), Image.BICUBIC)

    # place image
    # Random translation
    dx = int(rand(0, w-nw))
    dy = int(rand(0, h-nh))
    new_image = Image.new('RGB', (w,h), (128,128,128))
    new_image.paste(image, (dx, dy))
    image = new_image

    # flip image or not
    flip = rand()<.5
    if flip: image = image.transpose(Image.FLIP_LEFT_RIGHT)

    # distort image
    # HSV jitter
    hue = rand(-hue, hue)
    sat = rand(1, sat) if rand()<.5 else 1/rand(1, sat)
    val = rand(1, val) if rand()<.5 else 1/rand(1, val)
    # Normalise and convert with matplotlib's rgb_to_hsv / hsv_to_rgb helpers
    x = rgb_to_hsv(np.array(image)/255.)
    x[..., 0] += hue
    x[..., 0][x[..., 0]>1] -= 1
    x[..., 0][x[..., 0]<0] += 1
    x[..., 1] *= sat
    x[..., 2] *= val
    # Keep the S/V channels within [0, 1]
    x[x>1] = 1
    x[x<0] = 0
    image_data = hsv_to_rgb(x)  # numpy array, 0 to 1

    # correct boxes
    box_data = np.zeros((max_boxes,5))
    if len(box)>0:
        np.random.shuffle(box)
        box[:, [0,2]] = box[:, [0,2]]*nw/iw + dx
        box[:, [1,3]] = box[:, [1,3]]*nh/ih + dy
        # Horizontal flip
        if flip: box[:, [0,2]] = w - box[:, [2,0]]
        # Clip to the image boundary
        box[:, 0:2][box[:, 0:2]<0] = 0
        box[:, 2][box[:, 2]>w] = w
        box[:, 3][box[:, 3]>h] = h
        # Recompute width and height
        box_w = box[:, 2] - box[:, 0]
        box_h = box[:, 3] - box[:, 1]
        box = box[np.logical_and(box_w>1, box_h>1)]  # discard invalid box
        if len(box)>max_boxes: box = box[:max_boxes]
        box_data[:len(box)] = box

    return image_data, box_data
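The box arithmetic used by `letterbox_image()`, by the non-random branch of `get_random_data()` and, in normalized form, by `yolo_correct_boxes()` in Appendix A can be checked in isolation. In this minimal pixel-space sketch the helper names are hypothetical. Unlike `yolo_correct_boxes()`, it neither rounds the resized shape nor works in 0 to 1 units.

```python
def letterbox_params(image_wh, input_wh=(416, 416)):
    """Scale and padding offsets of an aspect-preserving resize padded to input_wh."""
    iw, ih = image_wh
    w, h = input_wh
    scale = min(w / iw, h / ih)
    nw, nh = int(iw * scale), int(ih * scale)
    return scale, (w - nw) // 2, (h - nh) // 2


def to_input(box, image_wh, input_wh=(416, 416)):
    """Map (x_min, y_min, x_max, y_max) from the original image into the letterboxed input."""
    scale, dx, dy = letterbox_params(image_wh, input_wh)
    x0, y0, x1, y1 = box
    return (x0 * scale + dx, y0 * scale + dy, x1 * scale + dx, y1 * scale + dy)


def to_image(box, image_wh, input_wh=(416, 416)):
    """Inverse mapping: remove the padding offset, then undo the scaling."""
    scale, dx, dy = letterbox_params(image_wh, input_wh)
    x0, y0, x1, y1 = box
    return ((x0 - dx) / scale, (y0 - dy) / scale, (x1 - dx) / scale, (y1 - dy) / scale)


box = (100, 50, 300, 200)
inp = to_input(box, image_wh=(832, 416))  # scale 0.5, 104 px of padding top and bottom
print(inp)                                # (50.0, 129.0, 150.0, 204.0)
print(to_image(inp, image_wh=(832, 416)))  # (100.0, 50.0, 300.0, 200.0)
```

Training maps ground-truth boxes forward into the padded input, and detection maps predictions back. The two steps must use the same scale and offsets, or the boxes drift by the padding amount.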