ELK+Filebeat+Kafka日志采集

技术笔记

欢迎大家进群,一起探讨学习

![]()

微信公众号,每天给大家提供技术干货

博主技术平台地址

博主开源微服架构前后端分离技术博客项目源码地址,欢迎各位star

1.ELK

2. ELFK

3. 架构演进

ELK缺点:ELK架构,并且Spring Boot应用使用 logstash-logback-encoder 直接发送给 Logstash,缺点就是Logstash是重量级日志收集server,占用cpu资源高且内存占用比较高ELFK缺点:一定程度上解决了ELK中Logstash的不足,但是由于Beats 收集的每秒数据量越来越大,Logstash 可能无法承载这么大量日志的处理

4. 日志新贵ELK + Filebeat + Kafka

随着 Beats 收集的每秒数据量越来越大,Logstash 可能无法承载这么大量日志的处理。虽然说,可以增加 Logstash 节点数量,提高每秒数据的处理速度,但是仍需考虑可能 Elasticsearch 无法承载这么大量的日志的写入。此时,我们可以考虑 引入消息队列 ,进行缓存:Beats 收集数据,写入数据到消息队列中。

5.搭建

5.1需要的组件

1、elasticsearch-7.9.3 |

5.2修改elasticsearch-7.9.3/config/elasticsearch.yml 文件

修改配置:#ubuntu需要加上node.name sentos7可以不需要,尽量还是加上吧

node.name: node-1

network.host: 0.0.0.0

http.port: 9200

http.cors.enabled: true

http.cors.allow-origin: "*"

#这里可以换未cluster.initial_master_nodes: ["node-1"]

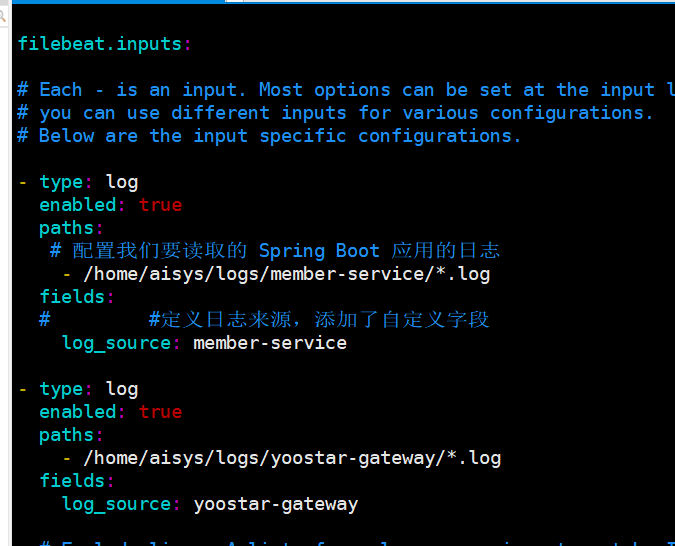

cluster.initial_master_nodes: ["192.168.28.129:9300"]5.3编辑 filebeat-7.9.3/filebeat.yml 文件 (不同的服务配置不同的日志路径)

filebeat.inputs:

- type: log

enabled: true

paths:

# 配置我们要读取的 Spring Boot 应用的日志

- /home/aisys/logs/member-service/*.log

fields:

# #定义日志来源,添加了自定义字段

log_topic: member-service

- type: log

enabled: true

paths:

- /home/aisys/logs/yoostar-gateway/*.log

fields:

log_topic: yoostar-gateway

#----------------------------- kafka output --------------------------------

output.kafka:

enabled: true

hosts: [“192.168.28.128:9092”]

topic: ‘tv-%{[fields][log_topic]}’

partition.round_robin:

reachable_only: false

required_acks: 1

compression: gzip5.4编辑 kibana-7.9.3/config/kibana.yml 文件

server.host: “0.0.0.0”

elasticsearch.hosts: [“http://10.20.22.30:9200”]

英文很6的可以忽略这个

i18n.locale: “zh-CN"

5.5编辑 logstash-7.9.3/config/logstash.conf 文件(该文件需要手动创建)

input {

kafka {

bootstrap_servers =>“192.168.28.128:9092”

topics_pattern =>“tv-.*”

consumer_threads =>5

decorate_events =>true

codec =>“json”

auto_offset_reset =>“earliest”

#集群需要相同

group_id =>“logstash1”

}

}

filter{

json{

source =>“message”

target =>“doc”

}

}

output{

elasticsearch{

action =>“index”

hosts =>[“192.168.28.128:9200”]

#索引里面如果有大写字母就无法根据topic动态生成索引,topic也不能有大写字母

index =>”%{[fields][log_topic]}-%{+YYYY-MM-dd}"

}

stdout{

codec =>rubydebug

}

}

5.6启动elasticsearch-head

// 下载

wget https://nodejs.org/dist/v10.9.0/node-v10.9.0-linux-x64.tar.xz

tar xf node-v10.9.0-linux-x64.tar.xz // 解压

cd node-v10.9.0-linux-x64/ // 进入解压目录

./bin/node -v // 执行node命令 查看版本

v10.9.0

配置环境变量

vim /etc/profile

export PATH=$PATH:/usr/local/node-v10.9.0-linux-x64/bin

刷新配置

source /etc/profile执行npm install -g grunt-cli 编译源码

执行npm install 安装服务

如果查询install.js错误执行npm -g install phantomjs-prebuilt@2.1.16 –ignore-script

执行grunt server启动服务。或者 nohup grunt server >output 2>&1 &

启动服务之后访问http/10.20.22.30:9100/5.7启动kafka

1.下载kafka安装包

(未安装wget请先安装)yum -y install wget

wget https://mirror.bit.edu.cn/apache/kafka/2.5.0/kafka_2.13-2.5.0.tgz

2.解压kafka

tar -zxvf kafka_2.13-2.5.0.tgz3.进入配置目录

cd kafka_2.13-2.5.0/config/4.修改配置文件server.properties,添加下面内容

vim server.propertiesbroker.id=0

port=9092 #端口号

host.name=172.30.0.9 #服务器IP地址,修改为自己的服务器IP

log.dirs=/usr/local/logs/kafka #日志存放路径,上面创建的目录

zookeeper.connect=localhost:2181 #zookeeper地址和端口,单机配置部署,localhost:21815.编写脚本

vim zookeeper_start.sh

启动zookeeper

/usr/local/kafka_2.13-2.5.0/bin/zookeeper-server-start.sh /usr/local/kafka_2.13-2.5.0/config/zookeeper.properties &

编写kafka启动脚本

vim kafka_start.sh

启动kafaka

/usr/local/kafka_2.13-2.5.0/bin/kafka-server-start.sh /usr/local/kafka_2.13-2.5.0/config/server.properties &

编写zookeeper停止脚本

vim zookeeper_stop.sh

停止zookeeper

/usr/local/kafka_2.13-2.5.0/bin/zookeeper-server-stop.sh /usr/local/kafka_2.13-2.5.0/config/zookeeper.properties &

编写kafka停止脚本

vim kafka_stop.sh

停止kafka

/usr/local/kafka_2.13-2.5.0/bin/kafka-server-stop.sh /usr/local/kafka_2.13-2.5.0/config/server.properties &

启动关闭脚本赋予权限

chmod 777 kafka_start.sh

chmod 777 kafka_stop.sh

chmod 777 zookeeper_start.sh

chmod 777 zookeeper_stop.sh7.先启动zookeeper在启动kafka

./zookeeper_start.sh---------------------------------------------启动zookeeper

./kafka_start.sh----------------------------------------------------启动kafka

ps -ef | grep zookeeper------------------------------------------查看zookeeper进程状态

ps -ef | grep kafka-------------------------------------------------查看kafka进程状态

若出现kafka.common.InconsistentClusterIdException: The Cluster ID MoJxXReIRgeVz8GaoglyXw doesn’t match stored clusterId Some(t4eUcr1HTVC_VjB6h-vjyA) in meta.properties异常解决方法 意思是集群id跟元数据meta.properties中存储的不一致,导致启动失败。因此去查看meta.properties文件中的元数据信息。这个文件的存储路径是通过/config/server.properties配置文件中的log.dirs属性配置的。所以通过配置文件找到meta.properties,修改里面的cluster.id即可。 将异常信息中的Cluster ID MoJxXReIRgeVz8GaoglyXw写入

5.8启动elasticsearch

启动es出现以下错误是不能用root用户进行启动es

groupadd es

-g 指定组 -p 指定密码

useradd es -g es -p es

-R : 处理指定目录下的所有文件

chown -R es:es /usr/local/elasticsearch-7.9.3/

chown -R es:es /usr/local/kibana-7.9.3-linux-x86_64

su es

./elasticsearch -d

5.9启动kibana(也是只能用es用户启动)

nohup bin/kibana >output 2>&1 &

访问 http://10.20.22.30:5601/ ,即可访问 kibana 5.10、启动filebeat

su root #切换成root用户

nohup ./filebeat -e -c filebeat.yml >output 2>&1 &5.11、启动logstash

nohup ./bin/logstash -f ./config/logstash.conf >output 2>&1 &5.12、启动zipkin

nohup java -jar zipkin-server-2.19.0-exec.jar --KAFKA_BOOTSTRAP_SERVERS=10.20.22.30:9092 --STORAGE_TYPE=elasticsearch --ES_HOSTS=http://10.20.22.30:9200 >output 2>&1 &

访问 http://10.20.22.30:9411/zipkin/ 即可查看zipkin5.13测试

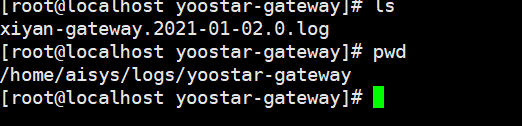

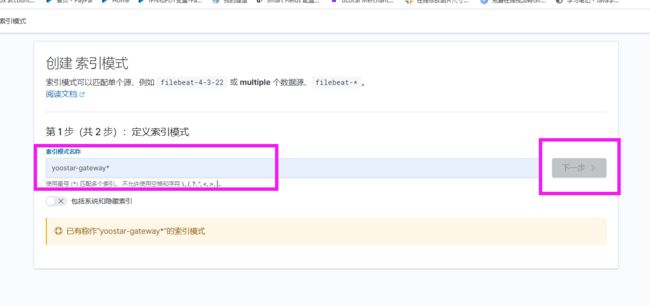

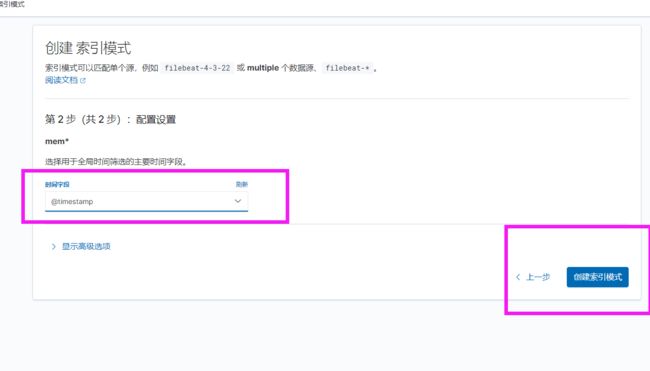

在/home/aisys/logs/yoostar-gateway放入日志文件

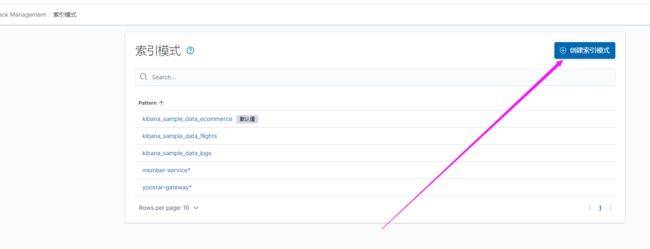

Elasticsearch查询索引数据

kinaba查看数据

或者

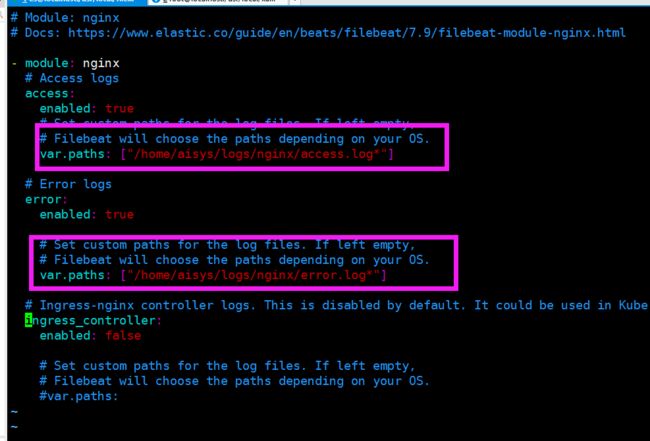

5.14采用filebeat自带的module收集日志 我这里就举例收集nginx日志

./filebeat modules enable nginx修改配置文件

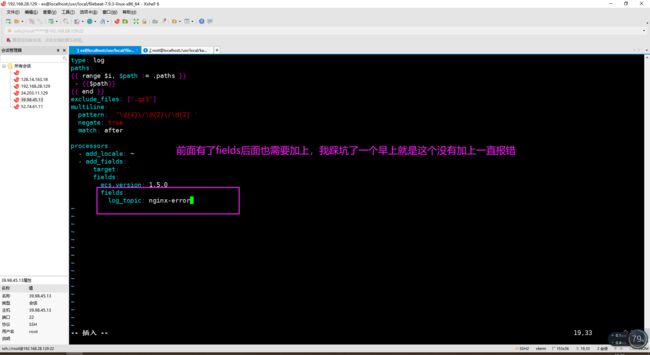

vim modules.d/nginx.yml由于我filebeat配置是一个日志文件对应一个topic所以还需要修改nginx对应的数据topic

vim module/nginx/error/config/nginx-error.ymlvim module/nginx/access/config/nginx-access.yml再次重启filebeat大功告成