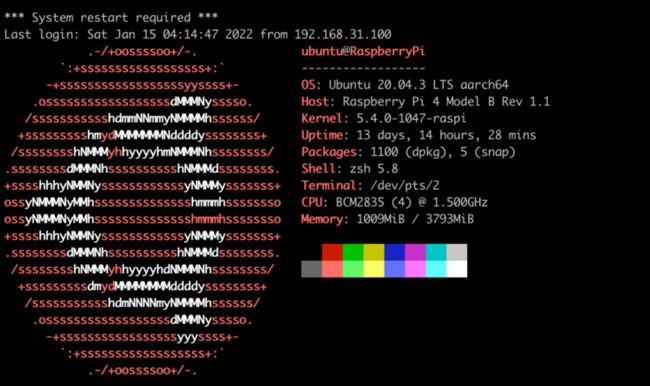

The test machine is a Raspberry Pi 4B (4 GB version).

redis-benchmark is used as the load-testing tool.

Usage: type the command directly in a terminal.
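For repeatable runs, the same invocation can be scripted. The sketch below is only an illustration: `build_benchmark_command` and `run_benchmark` are hypothetical helper names, not part of Redis. It relies on the standard `redis-benchmark` options `-n` (total requests), `-c` (parallel clients), `-t` (comma-separated list of tests) and `-q` (quiet, one line per test), and assumes a Redis server is reachable on the default host and port.

```python
import shutil
import subprocess


def build_benchmark_command(tests=None, requests=100_000, clients=50, quiet=True):
    """Build an argv list for redis-benchmark (hypothetical helper).

    The default values mirror the 100000 requests and 50 parallel clients
    reported in the benchmark output of this post.
    """
    cmd = ["redis-benchmark", "-n", str(requests), "-c", str(clients)]
    if tests:
        # -t restricts the run to the named tests, e.g. set,get
        cmd += ["-t", ",".join(tests)]
    if quiet:
        # -q prints only the requests-per-second line for each test
        cmd.append("-q")
    return cmd


def run_benchmark(cmd):
    """Run the command and return its stdout.

    Assumes redis-benchmark is installed and a Redis server is running.
    """
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError(f"{cmd[0]} not found on PATH")
    completed = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return completed.stdout
```

Calling `run_benchmark(build_benchmark_command(["set", "get"]))` would benchmark only SET and GET; dropping `-q` gives the full per-test latency breakdown shown in this post.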

Partial redis-benchmark results:

╭─ubuntu@RaspberryPi ~

╰─➤ redis-benchmark

====== PING_INLINE ======

100000 requests completed in 6.72 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

93.56% <= 2 milliseconds

99.98% <= 3 milliseconds

100.00% <= 3 milliseconds

14872.10 requests per second

====== PING_BULK ======

100000 requests completed in 6.82 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

93.04% <= 2 milliseconds

99.99% <= 3 milliseconds

100.00% <= 4 milliseconds

14652.01 requests per second

====== SET ======

100000 requests completed in 6.99 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

88.83% <= 2 milliseconds

99.75% <= 3 milliseconds

99.85% <= 4 milliseconds

99.95% <= 5 milliseconds

99.98% <= 6 milliseconds

99.99% <= 7 milliseconds

100.00% <= 7 milliseconds

14300.01 requests per second

====== GET ======

100000 requests completed in 6.75 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

93.66% <= 2 milliseconds

100.00% <= 2 milliseconds

14806.04 requests per second

====== INCR ======

100000 requests completed in 6.73 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

94.82% <= 2 milliseconds

99.98% <= 3 milliseconds

100.00% <= 3 milliseconds

14858.84 requests per second

====== LPUSH ======

100000 requests completed in 6.70 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

94.51% <= 2 milliseconds

100.00% <= 3 milliseconds

100.00% <= 3 milliseconds

14923.15 requests per second

====== RPUSH ======

100000 requests completed in 6.71 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

94.91% <= 2 milliseconds

99.97% <= 3 milliseconds

100.00% <= 3 milliseconds

14909.80 requests per second

====== LPOP ======

100000 requests completed in 6.69 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

95.04% <= 2 milliseconds

100.00% <= 2 milliseconds

14938.75 requests per second

====== RPOP ======

100000 requests completed in 6.71 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

94.07% <= 2 milliseconds

99.94% <= 3 milliseconds

99.97% <= 4 milliseconds

99.98% <= 5 milliseconds

99.99% <= 6 milliseconds

100.00% <= 7 milliseconds

14903.13 requests per second

====== SADD ======

100000 requests completed in 6.70 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

95.02% <= 2 milliseconds

100.00% <= 3 milliseconds

100.00% <= 3 milliseconds

14914.24 requests per second

====== HSET ======

100000 requests completed in 6.73 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

92.32% <= 2 milliseconds

99.97% <= 5 milliseconds

100.00% <= 6 milliseconds

14865.47 requests per second

====== SPOP ======

100000 requests completed in 6.73 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

95.41% <= 2 milliseconds

99.98% <= 3 milliseconds

100.00% <= 3 milliseconds

14861.05 requests per second

====== LPUSH (needed to benchmark LRANGE) ======

100000 requests completed in 6.66 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

96.09% <= 2 milliseconds

99.98% <= 3 milliseconds

100.00% <= 3 milliseconds

15008.25 requests per second

====== LRANGE_100 (first 100 elements) ======

100000 requests completed in 8.77 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

0.01% <= 2 milliseconds

99.96% <= 3 milliseconds

99.99% <= 4 milliseconds

100.00% <= 4 milliseconds

11401.21 requests per second

====== LRANGE_300 (first 300 elements) ======

100000 requests completed in 15.59 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

0.00% <= 2 milliseconds

0.01% <= 3 milliseconds

76.04% <= 4 milliseconds

99.65% <= 5 milliseconds

99.92% <= 6 milliseconds

99.96% <= 7 milliseconds

99.99% <= 8 milliseconds

100.00% <= 9 milliseconds

6413.55 requests per second

====== LRANGE_500 (first 450 elements) ======

100000 requests completed in 19.95 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 2 milliseconds

0.01% <= 3 milliseconds

0.01% <= 4 milliseconds

62.01% <= 5 milliseconds

99.31% <= 6 milliseconds

99.80% <= 7 milliseconds

99.98% <= 8 milliseconds

99.99% <= 9 milliseconds

100.00% <= 10 milliseconds

100.00% <= 12 milliseconds

100.00% <= 12 milliseconds

5011.53 requests per second

====== LRANGE_600 (first 600 elements) ======

100000 requests completed in 24.36 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 2 milliseconds

0.00% <= 3 milliseconds

0.01% <= 4 milliseconds

0.02% <= 5 milliseconds

38.54% <= 6 milliseconds

99.88% <= 7 milliseconds

99.96% <= 8 milliseconds

99.97% <= 9 milliseconds

99.98% <= 10 milliseconds

99.99% <= 11 milliseconds

100.00% <= 12 milliseconds

100.00% <= 13 milliseconds

4104.92 requests per second

====== MSET (10 keys) ======

100000 requests completed in 6.67 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

95.90% <= 2 milliseconds

99.97% <= 3 milliseconds

100.00% <= 3 milliseconds

14994.75 requests per second

╭─ubuntu@RaspberryPi ~

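╰─➤

As the output shows, QPS and TPS on the Raspberry Pi range from about 4,100 requests per second (LRANGE_600) to about 15,000 requests per second.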
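Rather than reading the range off the log by eye, the throughput lines can be extracted programmatically. The following is a minimal sketch, assuming the output has been saved as text; `parse_throughput` is a hypothetical helper, and the regular expressions are written against the two line shapes that appear in this post (`14872.10 requests per second` and `throughput summary: 176366.86 requests per second`), not against any documented output grammar.

```python
import re

# "====== PING_INLINE ======" style section headers
HEADER = re.compile(r"^=+\s*(.+?)\s*=+$")
# Throughput line, with or without the "throughput summary:" prefix
RATE = re.compile(r"^(?:throughput summary:\s*)?([\d.]+) requests per second$")


def parse_throughput(output):
    """Map each test name to its requests-per-second figure."""
    results = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        header = HEADER.match(line)
        if header:
            current = header.group(1)
            continue
        rate = RATE.match(line)
        if rate and current is not None:
            results[current] = float(rate.group(1))
    return results


# A short excerpt copied from the Raspberry Pi run
sample = """
====== PING_INLINE ======
100000 requests completed in 6.72 seconds
14872.10 requests per second

====== LRANGE_600 (first 600 elements) ======
100000 requests completed in 24.36 seconds
4104.92 requests per second
"""

rates = parse_throughput(sample)
slowest = min(rates, key=rates.get)
fastest = max(rates, key=rates.get)
print(f"slowest: {slowest} at {rates[slowest]:.2f} req/s")
print(f"fastest: {fastest} at {rates[fastest]:.2f} req/s")
```

Feeding the complete log to `parse_throughput` instead of the excerpt gives the full per-command table.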

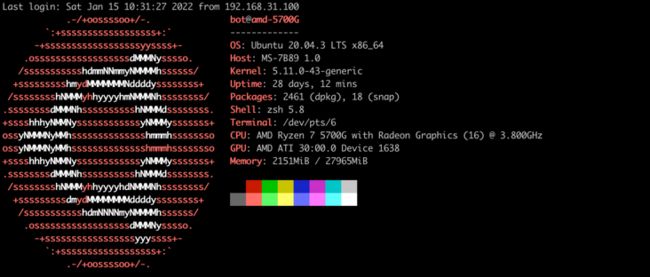

By comparison, the same test on an AMD 5700G gives QPS and TPS that are 10 to 20 times higher, easily passing 100,000 requests per second.
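To put numbers on that comparison, the per-command ratio can be computed directly. This is a small sketch using throughput figures copied from the two runs in this post; the dictionary names and the `speedups` helper are illustrative only. For the five commands listed here the ratio works out to roughly 11 to 13 times.

```python
# Requests per second, copied from the two benchmark runs in this post
PI_4B = {
    "PING_INLINE": 14872.10,
    "SET": 14300.01,
    "GET": 14806.04,
    "INCR": 14858.84,
    "LPUSH": 14923.15,
}
AMD_5700G = {
    "PING_INLINE": 176366.86,
    "SET": 191204.59,
    "GET": 183150.19,
    "INCR": 172413.80,
    "LPUSH": 182149.36,
}


def speedups(slow, fast):
    """Return fast/slow throughput ratios for the commands present in both runs."""
    return {name: fast[name] / slow[name] for name in slow if name in fast}


for name, ratio in speedups(PI_4B, AMD_5700G).items():
    print(f"{name:<12} {ratio:5.1f}x")
```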

╭─bot@amd-5700G ~/Desktop/python

╰─➤ redis-benchmark

====== PING_INLINE ======

100000 requests completed in 0.57 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.039 milliseconds (cumulative count 2)

50.000% <= 0.135 milliseconds (cumulative count 59347)

75.000% <= 0.151 milliseconds (cumulative count 76345)

87.500% <= 0.175 milliseconds (cumulative count 88058)

93.750% <= 0.199 milliseconds (cumulative count 94218)

96.875% <= 0.239 milliseconds (cumulative count 97089)

98.438% <= 0.279 milliseconds (cumulative count 98615)

99.219% <= 0.359 milliseconds (cumulative count 99223)

99.609% <= 0.575 milliseconds (cumulative count 99614)

99.805% <= 0.735 milliseconds (cumulative count 99821)

99.902% <= 0.791 milliseconds (cumulative count 99908)

99.951% <= 0.903 milliseconds (cumulative count 99953)

99.976% <= 0.943 milliseconds (cumulative count 99978)

99.988% <= 0.959 milliseconds (cumulative count 99990)

99.994% <= 0.967 milliseconds (cumulative count 100000)

100.000% <= 0.967 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.081% <= 0.103 milliseconds (cumulative count 81)

95.131% <= 0.207 milliseconds (cumulative count 95131)

98.976% <= 0.303 milliseconds (cumulative count 98976)

99.331% <= 0.407 milliseconds (cumulative count 99331)

99.446% <= 0.503 milliseconds (cumulative count 99446)

99.684% <= 0.607 milliseconds (cumulative count 99684)

99.767% <= 0.703 milliseconds (cumulative count 99767)

99.913% <= 0.807 milliseconds (cumulative count 99913)

99.953% <= 0.903 milliseconds (cumulative count 99953)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 176366.86 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.145 0.032 0.135 0.207 0.311 0.967

====== PING_MBULK ======

100000 requests completed in 0.58 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.063 milliseconds (cumulative count 1)

50.000% <= 0.143 milliseconds (cumulative count 70232)

75.000% <= 0.151 milliseconds (cumulative count 75071)

87.500% <= 0.183 milliseconds (cumulative count 90255)

93.750% <= 0.199 milliseconds (cumulative count 94609)

96.875% <= 0.231 milliseconds (cumulative count 97069)

98.438% <= 0.287 milliseconds (cumulative count 98528)

99.219% <= 0.383 milliseconds (cumulative count 99220)

99.609% <= 0.535 milliseconds (cumulative count 99629)

99.805% <= 0.687 milliseconds (cumulative count 99806)

99.902% <= 0.767 milliseconds (cumulative count 99908)

99.951% <= 0.879 milliseconds (cumulative count 99960)

99.976% <= 0.895 milliseconds (cumulative count 99977)

99.988% <= 1.143 milliseconds (cumulative count 99989)

99.994% <= 1.183 milliseconds (cumulative count 99994)

99.997% <= 1.199 milliseconds (cumulative count 99997)

99.998% <= 1.215 milliseconds (cumulative count 100000)

100.000% <= 1.215 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.030% <= 0.103 milliseconds (cumulative count 30)

95.513% <= 0.207 milliseconds (cumulative count 95513)

98.747% <= 0.303 milliseconds (cumulative count 98747)

99.303% <= 0.407 milliseconds (cumulative count 99303)

99.534% <= 0.503 milliseconds (cumulative count 99534)

99.749% <= 0.607 milliseconds (cumulative count 99749)

99.820% <= 0.703 milliseconds (cumulative count 99820)

99.924% <= 0.807 milliseconds (cumulative count 99924)

99.980% <= 0.903 milliseconds (cumulative count 99980)

99.983% <= 1.103 milliseconds (cumulative count 99983)

99.998% <= 1.207 milliseconds (cumulative count 99998)

100.000% <= 1.303 milliseconds (cumulative count 100000)

Summary:

throughput summary: 172117.05 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.148 0.056 0.143 0.207 0.335 1.215

====== SET ======

100000 requests completed in 0.52 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.063 milliseconds (cumulative count 1)

50.000% <= 0.127 milliseconds (cumulative count 52318)

75.000% <= 0.143 milliseconds (cumulative count 76574)

87.500% <= 0.167 milliseconds (cumulative count 87660)

93.750% <= 0.191 milliseconds (cumulative count 94698)

96.875% <= 0.215 milliseconds (cumulative count 96877)

98.438% <= 0.303 milliseconds (cumulative count 98453)

99.219% <= 0.471 milliseconds (cumulative count 99233)

99.609% <= 0.591 milliseconds (cumulative count 99621)

99.805% <= 0.687 milliseconds (cumulative count 99807)

99.902% <= 0.847 milliseconds (cumulative count 99903)

99.951% <= 0.935 milliseconds (cumulative count 99957)

99.976% <= 1.071 milliseconds (cumulative count 99978)

99.988% <= 1.095 milliseconds (cumulative count 99991)

99.994% <= 1.119 milliseconds (cumulative count 99994)

99.997% <= 1.127 milliseconds (cumulative count 99997)

99.998% <= 1.135 milliseconds (cumulative count 100000)

100.000% <= 1.135 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

8.896% <= 0.103 milliseconds (cumulative count 8896)

96.387% <= 0.207 milliseconds (cumulative count 96387)

98.453% <= 0.303 milliseconds (cumulative count 98453)

98.953% <= 0.407 milliseconds (cumulative count 98953)

99.331% <= 0.503 milliseconds (cumulative count 99331)

99.674% <= 0.607 milliseconds (cumulative count 99674)

99.825% <= 0.703 milliseconds (cumulative count 99825)

99.899% <= 0.807 milliseconds (cumulative count 99899)

99.923% <= 0.903 milliseconds (cumulative count 99923)

99.971% <= 1.007 milliseconds (cumulative count 99971)

99.991% <= 1.103 milliseconds (cumulative count 99991)

100.000% <= 1.207 milliseconds (cumulative count 100000)

Summary:

throughput summary: 191204.59 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.138 0.056 0.127 0.199 0.423 1.135

====== GET ======

100000 requests completed in 0.55 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.063 milliseconds (cumulative count 2)

50.000% <= 0.135 milliseconds (cumulative count 67996)

75.000% <= 0.143 milliseconds (cumulative count 75770)

87.500% <= 0.175 milliseconds (cumulative count 89447)

93.750% <= 0.199 milliseconds (cumulative count 94491)

96.875% <= 0.239 milliseconds (cumulative count 96943)

98.438% <= 0.295 milliseconds (cumulative count 98514)

99.219% <= 0.415 milliseconds (cumulative count 99248)

99.609% <= 0.615 milliseconds (cumulative count 99611)

99.805% <= 0.703 milliseconds (cumulative count 99812)

99.902% <= 0.807 milliseconds (cumulative count 99903)

99.951% <= 0.887 milliseconds (cumulative count 99956)

99.976% <= 0.951 milliseconds (cumulative count 99976)

99.988% <= 1.039 milliseconds (cumulative count 99988)

99.994% <= 1.071 milliseconds (cumulative count 99995)

99.997% <= 1.079 milliseconds (cumulative count 99998)

99.998% <= 1.087 milliseconds (cumulative count 100000)

100.000% <= 1.087 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

1.716% <= 0.103 milliseconds (cumulative count 1716)

95.269% <= 0.207 milliseconds (cumulative count 95269)

98.623% <= 0.303 milliseconds (cumulative count 98623)

99.215% <= 0.407 milliseconds (cumulative count 99215)

99.422% <= 0.503 milliseconds (cumulative count 99422)

99.596% <= 0.607 milliseconds (cumulative count 99596)

99.812% <= 0.703 milliseconds (cumulative count 99812)

99.903% <= 0.807 milliseconds (cumulative count 99903)

99.960% <= 0.903 milliseconds (cumulative count 99960)

99.982% <= 1.007 milliseconds (cumulative count 99982)

100.000% <= 1.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 183150.19 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.141 0.056 0.135 0.207 0.359 1.087

====== INCR ======

100000 requests completed in 0.58 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.047 milliseconds (cumulative count 2)

50.000% <= 0.143 milliseconds (cumulative count 68051)

75.000% <= 0.159 milliseconds (cumulative count 77162)

87.500% <= 0.183 milliseconds (cumulative count 88919)

93.750% <= 0.207 milliseconds (cumulative count 94423)

96.875% <= 0.247 milliseconds (cumulative count 97073)

98.438% <= 0.287 milliseconds (cumulative count 98547)

99.219% <= 0.335 milliseconds (cumulative count 99230)

99.609% <= 0.559 milliseconds (cumulative count 99621)

99.805% <= 0.655 milliseconds (cumulative count 99814)

99.902% <= 0.759 milliseconds (cumulative count 99903)

99.951% <= 0.823 milliseconds (cumulative count 99960)

99.976% <= 0.879 milliseconds (cumulative count 99976)

99.988% <= 0.927 milliseconds (cumulative count 99992)

99.994% <= 0.935 milliseconds (cumulative count 100000)

100.000% <= 0.935 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.166% <= 0.103 milliseconds (cumulative count 166)

94.423% <= 0.207 milliseconds (cumulative count 94423)

98.936% <= 0.303 milliseconds (cumulative count 98936)

99.387% <= 0.407 milliseconds (cumulative count 99387)

99.519% <= 0.503 milliseconds (cumulative count 99519)

99.699% <= 0.607 milliseconds (cumulative count 99699)

99.879% <= 0.703 milliseconds (cumulative count 99879)

99.942% <= 0.807 milliseconds (cumulative count 99942)

99.985% <= 0.903 milliseconds (cumulative count 99985)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 172413.80 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.149 0.040 0.143 0.215 0.311 0.935

====== LPUSH ======

100000 requests completed in 0.55 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.039 milliseconds (cumulative count 2)

50.000% <= 0.135 milliseconds (cumulative count 60644)

75.000% <= 0.151 milliseconds (cumulative count 77368)

87.500% <= 0.175 milliseconds (cumulative count 87986)

93.750% <= 0.199 milliseconds (cumulative count 94060)

96.875% <= 0.231 milliseconds (cumulative count 97053)

98.438% <= 0.271 milliseconds (cumulative count 98444)

99.219% <= 0.343 milliseconds (cumulative count 99234)

99.609% <= 0.567 milliseconds (cumulative count 99619)

99.805% <= 0.759 milliseconds (cumulative count 99808)

99.902% <= 0.855 milliseconds (cumulative count 99913)

99.951% <= 0.903 milliseconds (cumulative count 99963)

99.976% <= 0.975 milliseconds (cumulative count 99977)

99.988% <= 0.999 milliseconds (cumulative count 99988)

99.994% <= 1.047 milliseconds (cumulative count 99997)

99.998% <= 1.055 milliseconds (cumulative count 100000)

100.000% <= 1.055 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

2.639% <= 0.103 milliseconds (cumulative count 2639)

95.028% <= 0.207 milliseconds (cumulative count 95028)

99.059% <= 0.303 milliseconds (cumulative count 99059)

99.365% <= 0.407 milliseconds (cumulative count 99365)

99.512% <= 0.503 milliseconds (cumulative count 99512)

99.653% <= 0.607 milliseconds (cumulative count 99653)

99.762% <= 0.703 milliseconds (cumulative count 99762)

99.857% <= 0.807 milliseconds (cumulative count 99857)

99.963% <= 0.903 milliseconds (cumulative count 99963)

99.988% <= 1.007 milliseconds (cumulative count 99988)

100.000% <= 1.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 182149.36 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.142 0.032 0.135 0.207 0.303 1.055

====== RPUSH ======

100000 requests completed in 0.57 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.047 milliseconds (cumulative count 2)

50.000% <= 0.135 milliseconds (cumulative count 66248)

75.000% <= 0.151 milliseconds (cumulative count 75181)

87.500% <= 0.191 milliseconds (cumulative count 87591)

93.750% <= 0.247 milliseconds (cumulative count 94165)

96.875% <= 0.287 milliseconds (cumulative count 96953)

98.438% <= 0.335 milliseconds (cumulative count 98541)

99.219% <= 0.455 milliseconds (cumulative count 99225)

99.609% <= 0.631 milliseconds (cumulative count 99619)

99.805% <= 0.815 milliseconds (cumulative count 99807)

99.902% <= 0.919 milliseconds (cumulative count 99907)

99.951% <= 1.015 milliseconds (cumulative count 99955)

99.976% <= 1.255 milliseconds (cumulative count 99978)

99.988% <= 1.295 milliseconds (cumulative count 99990)

99.994% <= 1.303 milliseconds (cumulative count 99994)

99.997% <= 1.311 milliseconds (cumulative count 100000)

100.000% <= 1.311 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

3.805% <= 0.103 milliseconds (cumulative count 3805)

90.162% <= 0.207 milliseconds (cumulative count 90162)

97.587% <= 0.303 milliseconds (cumulative count 97587)

99.154% <= 0.407 milliseconds (cumulative count 99154)

99.302% <= 0.503 milliseconds (cumulative count 99302)

99.572% <= 0.607 milliseconds (cumulative count 99572)

99.741% <= 0.703 milliseconds (cumulative count 99741)

99.802% <= 0.807 milliseconds (cumulative count 99802)

99.897% <= 0.903 milliseconds (cumulative count 99897)

99.950% <= 1.007 milliseconds (cumulative count 99950)

99.974% <= 1.103 milliseconds (cumulative count 99974)

99.994% <= 1.303 milliseconds (cumulative count 99994)

100.000% <= 1.407 milliseconds (cumulative count 100000)

Summary:

throughput summary: 175131.36 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.148 0.040 0.135 0.255 0.375 1.311

====== LPOP ======

100000 requests completed in 0.57 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.039 milliseconds (cumulative count 1)

50.000% <= 0.135 milliseconds (cumulative count 57478)

75.000% <= 0.159 milliseconds (cumulative count 76183)

87.500% <= 0.183 milliseconds (cumulative count 88761)

93.750% <= 0.215 milliseconds (cumulative count 93885)

96.875% <= 0.255 milliseconds (cumulative count 97277)

98.438% <= 0.279 milliseconds (cumulative count 98447)

99.219% <= 0.327 milliseconds (cumulative count 99246)

99.609% <= 0.479 milliseconds (cumulative count 99615)

99.805% <= 0.623 milliseconds (cumulative count 99805)

99.902% <= 0.735 milliseconds (cumulative count 99904)

99.951% <= 0.791 milliseconds (cumulative count 99953)

99.976% <= 0.847 milliseconds (cumulative count 99977)

99.988% <= 0.879 milliseconds (cumulative count 99990)

99.994% <= 0.887 milliseconds (cumulative count 99995)

99.997% <= 0.895 milliseconds (cumulative count 99997)

99.998% <= 0.903 milliseconds (cumulative count 100000)

100.000% <= 0.903 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.412% <= 0.103 milliseconds (cumulative count 412)

93.070% <= 0.207 milliseconds (cumulative count 93070)

99.022% <= 0.303 milliseconds (cumulative count 99022)

99.465% <= 0.407 milliseconds (cumulative count 99465)

99.654% <= 0.503 milliseconds (cumulative count 99654)

99.777% <= 0.607 milliseconds (cumulative count 99777)

99.882% <= 0.703 milliseconds (cumulative count 99882)

99.958% <= 0.807 milliseconds (cumulative count 99958)

100.000% <= 0.903 milliseconds (cumulative count 100000)

Summary:

throughput summary: 175131.36 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.147 0.032 0.135 0.231 0.303 0.903

====== RPOP ======

100000 requests completed in 0.57 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.063 milliseconds (cumulative count 2)

50.000% <= 0.135 milliseconds (cumulative count 57341)

75.000% <= 0.159 milliseconds (cumulative count 78943)

87.500% <= 0.183 milliseconds (cumulative count 89854)

93.750% <= 0.207 milliseconds (cumulative count 94610)

96.875% <= 0.247 milliseconds (cumulative count 97218)

98.438% <= 0.287 milliseconds (cumulative count 98562)

99.219% <= 0.399 milliseconds (cumulative count 99219)

99.609% <= 0.591 milliseconds (cumulative count 99617)

99.805% <= 0.703 milliseconds (cumulative count 99809)

99.902% <= 0.775 milliseconds (cumulative count 99915)

99.951% <= 0.815 milliseconds (cumulative count 99958)

99.976% <= 0.847 milliseconds (cumulative count 99979)

99.988% <= 0.879 milliseconds (cumulative count 99990)

99.994% <= 0.903 milliseconds (cumulative count 99995)

99.997% <= 0.911 milliseconds (cumulative count 99999)

99.999% <= 0.919 milliseconds (cumulative count 100000)

100.000% <= 0.919 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.047% <= 0.103 milliseconds (cumulative count 47)

94.610% <= 0.207 milliseconds (cumulative count 94610)

98.819% <= 0.303 milliseconds (cumulative count 98819)

99.229% <= 0.407 milliseconds (cumulative count 99229)

99.332% <= 0.503 milliseconds (cumulative count 99332)

99.672% <= 0.607 milliseconds (cumulative count 99672)

99.809% <= 0.703 milliseconds (cumulative count 99809)

99.948% <= 0.807 milliseconds (cumulative count 99948)

99.995% <= 0.903 milliseconds (cumulative count 99995)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 174520.06 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.147 0.056 0.135 0.215 0.327 0.919

====== SADD ======

100000 requests completed in 0.57 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.047 milliseconds (cumulative count 2)

50.000% <= 0.135 milliseconds (cumulative count 57363)

75.000% <= 0.151 milliseconds (cumulative count 75129)

87.500% <= 0.175 milliseconds (cumulative count 88183)

93.750% <= 0.199 milliseconds (cumulative count 94968)

96.875% <= 0.231 milliseconds (cumulative count 97161)

98.438% <= 0.279 milliseconds (cumulative count 98589)

99.219% <= 0.391 milliseconds (cumulative count 99225)

99.609% <= 0.583 milliseconds (cumulative count 99623)

99.805% <= 0.727 milliseconds (cumulative count 99809)

99.902% <= 0.823 milliseconds (cumulative count 99907)

99.951% <= 0.879 milliseconds (cumulative count 99952)

99.976% <= 0.927 milliseconds (cumulative count 99977)

99.988% <= 0.951 milliseconds (cumulative count 99989)

99.994% <= 0.967 milliseconds (cumulative count 99999)

99.999% <= 0.975 milliseconds (cumulative count 100000)

100.000% <= 0.975 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.562% <= 0.103 milliseconds (cumulative count 562)

95.818% <= 0.207 milliseconds (cumulative count 95818)

98.939% <= 0.303 milliseconds (cumulative count 98939)

99.249% <= 0.407 milliseconds (cumulative count 99249)

99.387% <= 0.503 milliseconds (cumulative count 99387)

99.674% <= 0.607 milliseconds (cumulative count 99674)

99.780% <= 0.703 milliseconds (cumulative count 99780)

99.889% <= 0.807 milliseconds (cumulative count 99889)

99.964% <= 0.903 milliseconds (cumulative count 99964)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 176056.33 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.146 0.040 0.135 0.207 0.311 0.975

====== HSET ======

100000 requests completed in 0.59 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.063 milliseconds (cumulative count 1)

50.000% <= 0.135 milliseconds (cumulative count 52022)

75.000% <= 0.159 milliseconds (cumulative count 76364)

87.500% <= 0.191 milliseconds (cumulative count 89232)

93.750% <= 0.223 milliseconds (cumulative count 94892)

96.875% <= 0.255 milliseconds (cumulative count 97171)

98.438% <= 0.319 milliseconds (cumulative count 98457)

99.219% <= 0.503 milliseconds (cumulative count 99236)

99.609% <= 0.615 milliseconds (cumulative count 99614)

99.805% <= 0.719 milliseconds (cumulative count 99817)

99.902% <= 0.775 milliseconds (cumulative count 99904)

99.951% <= 0.823 milliseconds (cumulative count 99952)

99.976% <= 0.871 milliseconds (cumulative count 99976)

99.988% <= 1.039 milliseconds (cumulative count 99988)

99.994% <= 1.079 milliseconds (cumulative count 99995)

99.997% <= 1.087 milliseconds (cumulative count 99998)

99.998% <= 1.095 milliseconds (cumulative count 100000)

100.000% <= 1.095 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.043% <= 0.103 milliseconds (cumulative count 43)

91.823% <= 0.207 milliseconds (cumulative count 91823)

98.290% <= 0.303 milliseconds (cumulative count 98290)

99.037% <= 0.407 milliseconds (cumulative count 99037)

99.236% <= 0.503 milliseconds (cumulative count 99236)

99.604% <= 0.607 milliseconds (cumulative count 99604)

99.786% <= 0.703 milliseconds (cumulative count 99786)

99.937% <= 0.807 milliseconds (cumulative count 99937)

99.979% <= 0.903 milliseconds (cumulative count 99979)

99.980% <= 1.007 milliseconds (cumulative count 99980)

100.000% <= 1.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 168918.92 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.152 0.056 0.135 0.231 0.407 1.095

====== SPOP ======

100000 requests completed in 0.59 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.055 milliseconds (cumulative count 1)

50.000% <= 0.135 milliseconds (cumulative count 51371)

75.000% <= 0.167 milliseconds (cumulative count 80840)

87.500% <= 0.183 milliseconds (cumulative count 89375)

93.750% <= 0.207 milliseconds (cumulative count 94285)

96.875% <= 0.247 milliseconds (cumulative count 97177)

98.438% <= 0.287 milliseconds (cumulative count 98631)

99.219% <= 0.327 milliseconds (cumulative count 99258)

99.609% <= 0.447 milliseconds (cumulative count 99614)

99.805% <= 0.623 milliseconds (cumulative count 99805)

99.902% <= 0.799 milliseconds (cumulative count 99907)

99.951% <= 0.887 milliseconds (cumulative count 99952)

99.976% <= 0.919 milliseconds (cumulative count 99980)

99.988% <= 0.943 milliseconds (cumulative count 99988)

99.994% <= 0.959 milliseconds (cumulative count 99997)

99.998% <= 0.967 milliseconds (cumulative count 100000)

100.000% <= 0.967 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.041% <= 0.103 milliseconds (cumulative count 41)

94.285% <= 0.207 milliseconds (cumulative count 94285)

98.940% <= 0.303 milliseconds (cumulative count 98940)

99.528% <= 0.407 milliseconds (cumulative count 99528)

99.665% <= 0.503 milliseconds (cumulative count 99665)

99.799% <= 0.607 milliseconds (cumulative count 99799)

99.852% <= 0.703 milliseconds (cumulative count 99852)

99.908% <= 0.807 milliseconds (cumulative count 99908)

99.969% <= 0.903 milliseconds (cumulative count 99969)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 169204.73 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.150 0.048 0.135 0.215 0.311 0.967

====== ZADD ======

100000 requests completed in 0.56 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.047 milliseconds (cumulative count 4)

50.000% <= 0.135 milliseconds (cumulative count 58400)

75.000% <= 0.151 milliseconds (cumulative count 76612)

87.500% <= 0.183 milliseconds (cumulative count 89978)

93.750% <= 0.207 milliseconds (cumulative count 94675)

96.875% <= 0.239 milliseconds (cumulative count 97046)

98.438% <= 0.279 milliseconds (cumulative count 98695)

99.219% <= 0.311 milliseconds (cumulative count 99264)

99.609% <= 0.535 milliseconds (cumulative count 99612)

99.805% <= 0.719 milliseconds (cumulative count 99812)

99.902% <= 0.791 milliseconds (cumulative count 99916)

99.951% <= 0.807 milliseconds (cumulative count 99953)

99.976% <= 0.887 milliseconds (cumulative count 99977)

99.988% <= 0.943 milliseconds (cumulative count 99989)

99.994% <= 0.967 milliseconds (cumulative count 99997)

99.998% <= 0.975 milliseconds (cumulative count 100000)

100.000% <= 0.975 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.118% <= 0.103 milliseconds (cumulative count 118)

94.675% <= 0.207 milliseconds (cumulative count 94675)

99.183% <= 0.303 milliseconds (cumulative count 99183)

99.517% <= 0.407 milliseconds (cumulative count 99517)

99.576% <= 0.503 milliseconds (cumulative count 99576)

99.705% <= 0.607 milliseconds (cumulative count 99705)

99.792% <= 0.703 milliseconds (cumulative count 99792)

99.953% <= 0.807 milliseconds (cumulative count 99953)

99.979% <= 0.903 milliseconds (cumulative count 99979)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 178253.12 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.145 0.040 0.135 0.215 0.295 0.975

====== ZPOPMIN ======

100000 requests completed in 0.60 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.079 milliseconds (cumulative count 1)

50.000% <= 0.135 milliseconds (cumulative count 52822)

75.000% <= 0.167 milliseconds (cumulative count 78683)

87.500% <= 0.199 milliseconds (cumulative count 88653)

93.750% <= 0.247 milliseconds (cumulative count 94200)

96.875% <= 0.287 milliseconds (cumulative count 96951)

98.438% <= 0.319 milliseconds (cumulative count 98457)

99.219% <= 0.359 milliseconds (cumulative count 99228)

99.609% <= 0.511 milliseconds (cumulative count 99617)

99.805% <= 0.647 milliseconds (cumulative count 99808)

99.902% <= 0.767 milliseconds (cumulative count 99907)

99.951% <= 0.847 milliseconds (cumulative count 99962)

99.976% <= 0.871 milliseconds (cumulative count 99980)

99.988% <= 0.895 milliseconds (cumulative count 99993)

99.994% <= 0.903 milliseconds (cumulative count 99996)

99.997% <= 0.911 milliseconds (cumulative count 99998)

99.998% <= 0.927 milliseconds (cumulative count 100000)

100.000% <= 0.927 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.030% <= 0.103 milliseconds (cumulative count 30)

89.709% <= 0.207 milliseconds (cumulative count 89709)

97.815% <= 0.303 milliseconds (cumulative count 97815)

99.368% <= 0.407 milliseconds (cumulative count 99368)

99.608% <= 0.503 milliseconds (cumulative count 99608)

99.778% <= 0.607 milliseconds (cumulative count 99778)

99.855% <= 0.703 milliseconds (cumulative count 99855)

99.927% <= 0.807 milliseconds (cumulative count 99927)

99.996% <= 0.903 milliseconds (cumulative count 99996)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 165562.92 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.154 0.072 0.135 0.263 0.343 0.927

====== LPUSH (needed to benchmark LRANGE) ======

100000 requests completed in 0.52 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.039 milliseconds (cumulative count 2)

50.000% <= 0.127 milliseconds (cumulative count 57330)

75.000% <= 0.143 milliseconds (cumulative count 78341)

87.500% <= 0.167 milliseconds (cumulative count 88472)

93.750% <= 0.191 milliseconds (cumulative count 94001)

96.875% <= 0.239 milliseconds (cumulative count 97076)

98.438% <= 0.279 milliseconds (cumulative count 98472)

99.219% <= 0.407 milliseconds (cumulative count 99223)

99.609% <= 0.591 milliseconds (cumulative count 99630)

99.805% <= 0.687 milliseconds (cumulative count 99809)

99.902% <= 0.831 milliseconds (cumulative count 99908)

99.951% <= 0.887 milliseconds (cumulative count 99953)

99.976% <= 0.927 milliseconds (cumulative count 99978)

99.988% <= 0.967 milliseconds (cumulative count 99988)

99.994% <= 1.007 milliseconds (cumulative count 99996)

99.997% <= 1.015 milliseconds (cumulative count 100000)

100.000% <= 1.015 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

4.325% <= 0.103 milliseconds (cumulative count 4325)

95.503% <= 0.207 milliseconds (cumulative count 95503)

98.807% <= 0.303 milliseconds (cumulative count 98807)

99.223% <= 0.407 milliseconds (cumulative count 99223)

99.422% <= 0.503 milliseconds (cumulative count 99422)

99.662% <= 0.607 milliseconds (cumulative count 99662)

99.815% <= 0.703 milliseconds (cumulative count 99815)

99.892% <= 0.807 milliseconds (cumulative count 99892)

99.962% <= 0.903 milliseconds (cumulative count 99962)

99.996% <= 1.007 milliseconds (cumulative count 99996)

100.000% <= 1.103 milliseconds (cumulative count 100000)

Summary:

throughput summary: 191938.56 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.136 0.032 0.127 0.207 0.343 1.015

====== LRANGE_100 (first 100 elements) ======

100000 requests completed in 0.92 seconds

50 parallel clients

3 bytes payload

keep alive: 1

host configuration "save": 3600 1 300 100 60 10000

host configuration "appendonly": no

multi-thread: no

Latency by percentile distribution:

0.000% <= 0.143 milliseconds (cumulative count 1)

50.000% <= 0.223 milliseconds (cumulative count 51105)

75.000% <= 0.263 milliseconds (cumulative count 77241)

87.500% <= 0.295 milliseconds (cumulative count 87596)

93.750% <= 0.375 milliseconds (cumulative count 94099)

96.875% <= 0.447 milliseconds (cumulative count 96895)

98.438% <= 0.527 milliseconds (cumulative count 98539)

99.219% <= 0.591 milliseconds (cumulative count 99249)

99.609% <= 0.647 milliseconds (cumulative count 99640)

99.805% <= 0.687 milliseconds (cumulative count 99806)

99.902% <= 0.735 milliseconds (cumulative count 99909)

99.951% <= 0.791 milliseconds (cumulative count 99954)

99.976% <= 0.839 milliseconds (cumulative count 99978)

99.988% <= 0.879 milliseconds (cumulative count 99989)

99.994% <= 0.903 milliseconds (cumulative count 99994)

99.997% <= 0.943 milliseconds (cumulative count 99998)

99.998% <= 0.967 milliseconds (cumulative count 99999)

99.999% <= 0.975 milliseconds (cumulative count 100000)

100.000% <= 0.975 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

0.000% <= 0.103 milliseconds (cumulative count 0)

38.497% <= 0.207 milliseconds (cumulative count 38497)

88.558% <= 0.303 milliseconds (cumulative count 88558)

95.506% <= 0.407 milliseconds (cumulative count 95506)

98.180% <= 0.503 milliseconds (cumulative count 98180)

99.394% <= 0.607 milliseconds (cumulative count 99394)

99.848% <= 0.703 milliseconds (cumulative count 99848)

99.962% <= 0.807 milliseconds (cumulative count 99962)

99.994% <= 0.903 milliseconds (cumulative count 99994)

100.000% <= 1.007 milliseconds (cumulative count 100000)

Summary:

throughput summary: 109051.26 requests per second

latency summary (msec):

avg min p50 p95 p99 max

0.247 0.136 0.223 0.399 0.567 0.975

LRANGE_300 (first 300 elements): rps=40920.0 (overall: 43920.2) avg_msec=0.611 (overall: 0.574)

^C

╭─bot@amd-5700G ~/Desktop/python

╰─➤
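To compare the per-command numbers without scrolling through the full latency distributions, the `throughput summary` lines can be pulled out with a small script. The following is a minimal sketch in Python, assuming the output format shown in this run (a `====== NAME ======` header followed later by a `throughput summary: ... requests per second` line). The function name `parse_throughput` and the `SAMPLE` text are illustrative and not part of redis-benchmark. The sketch does not handle the older format, which prints the requests-per-second figure without the `throughput summary:` prefix, and it skips a test that was interrupted before its summary was printed.

```python
import re

# Section header such as "====== ZPOPMIN ======".
HEADER = re.compile(r"^=+\s*(.+?)\s*=+$")
# Summary line such as "throughput summary: 165562.92 requests per second".
THROUGHPUT = re.compile(r"^throughput summary:\s*([\d.]+)\s+requests per second$")


def parse_throughput(text):
    """Map each test name to its reported requests per second."""
    results = {}
    current = None  # name of the test section currently being read
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        header = HEADER.match(line)
        if header:
            current = header.group(1)
            continue
        summary = THROUGHPUT.match(line)
        if summary and current is not None:
            results[current] = float(summary.group(1))
    return results


# Excerpt built from the summary lines of the run above.
SAMPLE = """
====== ZPOPMIN ======
throughput summary: 165562.92 requests per second
====== LPUSH (needed to benchmark LRANGE) ======
throughput summary: 191938.56 requests per second
====== LRANGE_100 (first 100 elements) ======
throughput summary: 109051.26 requests per second
"""

if __name__ == "__main__":
    for name, rps in parse_throughput(SAMPLE).items():
        print(f"{name}: {rps:.2f} requests per second")
```

In practice the output of `redis-benchmark` could be saved to a file and its contents passed to `parse_throughput` in place of `SAMPLE`.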