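After a week of tinkering, I finally got Kubernetes up and running, so here is a brief record.

Without the GFW, following the official tutorial would be so easy. But, as you know, things rarely go smoothly for developers in China when it comes to software.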

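First, the core Kubernetes components:

kubelet: must be installed on every node; it drives Docker and communicates with the master node.

kube-apiserver: the interface the master node exposes to users, through which the nodes are also operated.

kube-controller-manager: the control center, responsible for managing the state of the nodes, among other things.

kube-scheduler: the scheduling center, which decides which node each Pod runs on.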

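The main Kubernetes concepts:

- namespace: we divide projects by their nature. For example, showdoc goes into the support namespace, and the services under development go into dev.

- Deployment: every project needs a Deployment, which can be thought of as a supervising daemon. If a particular container dies, the Deployment automatically starts a new one.

- Pod: the concrete running unit of a project (one or more containers). You can use a Pod to view logs and run simple commands inside the container.

- Service: binds a Kubernetes cluster IP to the containers, so that the containers can be reached through the cluster IP. (We can have nginx proxy a port to the Kubernetes cluster IP, so that the containers are directly reachable from the external LAN.)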
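To make these four concepts a bit more concrete, here is a minimal sketch of a shell function that prints a manifest containing one Namespace, one Deployment and one Service. The function name, the default names `dev` and `demo-app`, the image `nginx:1.15` and the port numbers are all assumptions chosen for illustration; they are not part of the setup described in this post.

```shell
#!/bin/bash
# Print an illustrative manifest: Namespace + Deployment + Service.
# All names, the image, and the ports are hypothetical placeholders.
print_demo_manifest() {
  local ns="${1:-dev}" app="${2:-demo-app}"
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ${ns}
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${app}
  namespace: ${ns}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ${app}
  template:
    metadata:
      labels:
        app: ${app}
    spec:
      containers:
      - name: ${app}
        image: nginx:1.15
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: ${app}
  namespace: ${ns}
spec:
  selector:
    app: ${app}
  ports:
  - port: 80
    targetPort: 80
EOF
}
```

Once the cluster is running, something like `print_demo_manifest support showdoc | kubectl apply -f -` would create the three objects. The Service finds its Pods through the `app` label, which is why the same label appears in the Deployment template and in the Service selector.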

Installing Docker

The Docker version installed on this machine is 18.06.0-ce.

Install the dependencies

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Aliyun mirror repository

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install Docker

sudo yum install docker-ce

Start Docker

sudo systemctl enable docker

sudo systemctl start docker

Configure the Aliyun registry mirror (accelerator)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["<your accelerator address>"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

Preparing to install Kubernetes

Configure the Aliyun Kubernetes repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

EOF

Rebuild the Yum cache

yum -y install epel-release

yum clean all

yum makecache

Install the core Kubernetes components

yum -y install kubelet kubeadm kubectl kubernetes-cni

Start the service

systemctl enable kubelet && systemctl start kubelet

Prepare the images kubeadm needs by creating a file named kubeadm-config.sh

#!/bin/bash

images=(kube-proxy-amd64:v1.11.0 kube-scheduler-amd64:v1.11.0 kube-controller-manager-amd64:v1.11.0 kube-apiserver-amd64:v1.11.0

etcd-amd64:3.2.18 coredns:1.1.3 pause-amd64:3.1 kubernetes-dashboard-amd64:v1.8.3 k8s-dns-sidecar-amd64:1.14.9 k8s-dns-kube-dns-amd64:1.14.9

k8s-dns-dnsmasq-nanny-amd64:1.14.9 )

for imageName in "${images[@]}" ; do

docker pull "keveon/$imageName"

docker tag "keveon/$imageName" "k8s.gcr.io/$imageName"

docker rmi "keveon/$imageName"

done

docker tag k8s.gcr.io/pause-amd64:3.1 k8s.gcr.io/pause:3.1

Run the script

chmod +x ./kubeadm-config.sh

./kubeadm-config.sh
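If you would like to preview what the script does for a single image before running it, a dry-run helper along these lines may be handy. It only prints the three docker commands and does not execute anything. The function name is made up for this sketch; the default mirror `keveon` is simply the one the script above uses.

```shell
#!/bin/bash
# Print (without running) the docker commands needed to fetch one image
# from a mirror repository and retag it under k8s.gcr.io.
# Hypothetical helper; the default mirror "keveon" matches the script above.
print_retag_commands() {
  local image="$1" mirror="${2:-keveon}"
  echo "docker pull ${mirror}/${image}"
  echo "docker tag ${mirror}/${image} k8s.gcr.io/${image}"
  echo "docker rmi ${mirror}/${image}"
}
```

For example, `print_retag_commands pause-amd64:3.1` lists the pull, tag and cleanup steps for the pause image.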

Disable swap

sudo swapoff -a

# To disable the swap partition permanently, open the following file and comment out the swap line

sudo vi /etc/fstab
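If you prefer not to edit the file by hand, the same change can be sketched with sed. The helper below is an illustration under the assumption of GNU sed and a conventional fstab layout; the function name is made up. It writes the modified content to stdout and leaves the input file untouched, so the result can be reviewed first.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# Writes the result to stdout; the input file itself is not modified.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix "#" to lines that
  # contain the word "swap" surrounded by whitespace.
  sed -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

A cautious way to use it is `comment_out_swap /etc/fstab > /tmp/fstab.new`, compare the two files, and only then copy the new one into place.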

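Disable SELinux

# Disable permanently by editing /etc/selinux/config (the file that /etc/sysconfig/selinux links to); this works whether the current value is enforcing or permissive

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Disable SELinux temporarily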

setenforce 0

Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

Configure forwarding parameters

# Configure the forwarding-related kernel parameters, otherwise errors may occur

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

EOF

# Press Enter here; what follows is a second, separate command

sysctl --system
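As a quick sanity check, the small helper below verifies that a sysctl file really sets both bridge keys to 1. It is only a sketch with a made-up function name, and it inspects the file, not the live kernel values. If `sysctl --system` complains that the net.bridge keys do not exist, loading the `br_netfilter` kernel module first (`modprobe br_netfilter`) is a common remedy.

```shell
#!/bin/bash
# Return 0 if the given sysctl-style file sets both bridge netfilter keys to 1.
# Hypothetical helper; it checks the file content only, not the running kernel.
check_bridge_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}" key
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    # Accept optional whitespace around "=".
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf" || {
      echo "missing or not 1: ${key}" >&2
      return 1
    }
  done
}
```

Running `check_bridge_sysctl && echo ok` after writing the file gives a simple yes/no answer.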

Installing Kubernetes (master)

Run the command

kubeadm init --kubernetes-version=v1.11.0 --pod-network-cidr=10.244.0.0/16

If you see output like the following, the installation succeeded

[init] using Kubernetes version: v1.11.0

[preflight] running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

I0822 17:20:10.828319 14125 kernel_validator.go:81] Validating kernel version

I0822 17:20:10.828402 14125 kernel_validator.go:96] Validating kernel config

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 18.06.0-ce. Max validated version: 17.03

[WARNING Hostname]: hostname "test121" could not be reached

[WARNING Hostname]: hostname "test121" lookup test121 on 192.168.192.1:53: no such host

[preflight/images] Pulling images required for setting up a Kubernetes cluster

[preflight/images] This might take a minute or two, depending on the speed of your internet connection

[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[preflight] Activating the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [test121 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.192.121]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [test121 localhost] and IPs [127.0.0.1 ::1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [test121 localhost] and IPs [192.168.192.121 127.0.0.1 ::1]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] this might take a minute or longer if the control plane images have to be pulled

[apiclient] All control plane components are healthy after 36.501252 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.11" in namespace kube-system with the configuration for the kubelets in the cluster

[markmaster] Marking the node test121 as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node test121 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "test121" as an annotation

[bootstraptoken] using token: zw54ho.7rcy6bzcxjlxo0j6

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.192.121:6443 --token zw54ho.7rcy6bzcxjlxo0j6 --discovery-token-ca-cert-hash sha256:b9162792dd5ad6719c87bbc3938240f6dc23de4be6f0fefff5069f11b3710f79

Configure kubectl credentials

export KUBECONFIG=/etc/kubernetes/admin.conf

# To make this persistent, run the following command (recommended)

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

Install the Flannel network

mkdir -p /etc/cni/net.d/

cat <<EOF > /etc/cni/net.d/10-flannel.conf

{

"name": "cbr0",

"type": "flannel",

"delegate": {

"isDefaultGateway": true

}

}

EOF

mkdir /usr/share/oci-umount/oci-umount.d -p

mkdir -p /run/flannel/

cat <<EOF > /run/flannel/subnet.env

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.1.0/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true

EOF

Finally, create a file named flannel.yml with the following content:

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "type": "flannel",
      "delegate": {
        "isDefaultGateway": true
      }
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.9.1-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conf
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.9.1-amd64
        command: [ "/opt/bin/flanneld", "--ip-masq", "--kube-subnet-mgr" ]
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

Run the command

kubectl create -f ./flannel.yml

Once that completes, we can run the following command to check the current node information:

kubectl get nodes

# Prints the following

NAME      STATUS    ROLES     AGE       VERSION
test121   Ready     master    6m        v1.11.0

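To allow the master to run Pods, run the following command (test121 is the master's node name in this setup)

kubectl taint nodes test121 node-role.kubernetes.io/master-

# If the node has also been marked unschedulable (cordoned), additionally run

kubectl uncordon test121

That completes the configuration of the master.

Installing Kubernetes (worker nodes)

Everything a worker node needs is covered in the preparation steps above (Docker, the Kubernetes packages, the images, and the swap, SELinux, firewall and sysctl settings). Once those are done, run the join command that the master printed earlier: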

kubeadm join 192.168.192.121:6443 --token zw54ho.7rcy6bzcxjlxo0j6 --discovery-token-ca-cert-hash sha256:b9162792dd5ad6719c87bbc3938240f6dc23de4be6f0fefff5069f11b3710f79
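One thing worth knowing: the bootstrap token printed by kubeadm init is only valid for a limited time (24 hours by default), so a node added days later needs a fresh one. The sketch below shows how the join command is assembled from its three parts; the helper name is made up and it only prints the command. The comment at the end names the kubeadm subcommand that generates a new token together with the complete join command on the master.

```shell
#!/bin/bash
# Assemble a "kubeadm join" command line from its three parts.
# Hypothetical helper for illustration; it prints the command and runs nothing.
build_join_command() {
  local endpoint="$1" token="$2" ca_hash="$3"
  echo "kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${ca_hash}"
}

# On the master, a fresh token and the full join command can be printed with:
#   kubeadm token create --print-join-command
```

The endpoint is the master's IP and API server port (192.168.192.121:6443 in this post), and the hash identifies the cluster CA so that the node can verify it is talking to the right master.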

Once that finishes, you are done. Go back to the master node and check the status

kubectl get nodes

# Output

NAME      STATUS    ROLES     AGE       VERSION
test119   Ready     <none>    4d        v1.11.2
test121   Ready     master    4d        v1.11.2

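Dashboard configuration (test environment)

Setting up the Dashboard on Kubernetes is not trivial either. You can deploy it with the official dashboard YAML file, or use the modified version provided by the blogger Mr.Devin to avoid the pitfalls.

It is available at https://github.com/gh-Devin/kubernetes-dashboard. Download the YAML files and, from the directory that contains them, run the following command:

kubectl -n kube-system create -f .

Next, create the file dashboard-admin.yaml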

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

kubectl -f ./dashboard-admin.yaml create

Then open port 30090 on your master host's IP.

Dashboard configuration (production environment)

- Configure the Dashboard

The Dashboard needs the k8s.gcr.io/kubernetes-dashboard image. Because of network restrictions, you can either pull it in advance and retag it, or change the image address in the YAML file. This post uses the latter:

kubectl apply -f http://mirror.faasx.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

The YAML used above is the same as https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml except that k8s.gcr.io is replaced with reg.qiniu.com/k8s.

Check whether the Dashboard has started

kubectl get pods --all-namespaces

# Output

NAMESPACE     NAME                                    READY     STATUS    RESTARTS   AGE
kube-system   kubernetes-dashboard-7d5dcdb6d9-mf6l2   1/1       Running   0          9m

Create a service account by creating the file admin-user.yaml

# admin-user.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system

Run kubectl create:

kubectl create -f admin-user.yaml

Bind the role by creating admin-user-role-binding.yaml

# admin-user-role-binding.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

Run kubectl create:

kubectl create -f admin-user-role-binding.yaml

Get the token. We now need to find the token of the newly created user so that we can log in to the dashboard:

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

# Output:

Name: admin-user-token-qrj82

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name=admin-user

kubernetes.io/service-account.uid=6cd60673-4d13-11e8-a548-00155d000529

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXFyajgyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2Y2Q2MDY3My00ZDEzLTExZTgtYTU0OC0wMDE1NWQwMDA1MjkiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.C5mjsa2uqJwjscWQ9x4mEsWALUTJu3OSfLYecqpS1niYXxp328mgx0t-QY8A7GQvAr5fWoIhhC_NOHkSkn2ubn0U22VGh2msU6zAbz9sZZ7BMXG4DLMq3AaXTXY8LzS3PQyEOCaLieyEDe-tuTZz4pbqoZQJ6V6zaKJtE9u6-zMBC2_iFujBwhBViaAP9KBbE5WfREEc0SQR9siN8W8gLSc8ZL4snndv527Pe9SxojpDGw6qP_8R-i51bP2nZGlpPadEPXj-lQqz4g5pgGziQqnsInSMpctJmHbfAh7s9lIMoBFW7GVE8AQNSoLHuuevbLArJ7sHriQtDB76_j4fmA

ca.crt: 1025 bytes

namespace: 11 bytes

Keep the token somewhere safe.
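If you only want the token itself and not the whole description, a small filter like the following can pull it out of the describe output. This is a sketch with a made-up function name; it simply prints the second field of the line that starts with `token:`.

```shell
#!/bin/bash
# Read "kubectl describe secret" output on stdin and print only the token value.
# Hypothetical helper: prints field 2 of the line whose first field is "token:".
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

It is meant to be used at the end of the describe pipeline above, for example `kubectl -n kube-system describe secret <secret-name> | extract_token`, where `<secret-name>` stands for the admin-user secret found by the grep.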

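- Integrate Heapster

Heapster is a monitoring and performance analysis tool for container clusters, with native support for Kubernetes and CoreOS.

Heapster supports several storage backends. This example uses InfluxDB; just run the following commands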

kubectl create -f http://mirror.faasx.com/kubernetes/heapster/deploy/kube-config/influxdb/influxdb.yaml

kubectl create -f http://mirror.faasx.com/kubernetes/heapster/deploy/kube-config/influxdb/grafana.yaml

kubectl create -f http://mirror.faasx.com/kubernetes/heapster/deploy/kube-config/influxdb/heapster.yaml

kubectl create -f http://mirror.faasx.com/kubernetes/heapster/deploy/kube-config/rbac/heapster-rbac.yaml

The YAML files used in the commands above were copied from https://github.com/kubernetes/heapster/tree/master/deploy/kube-config/influxdb, with k8s.gcr.io replaced by a mirror inside China.

Then check the status of the Pods:

kubectl get pods --namespace=kube-system

# Output

NAME                                   READY     STATUS    RESTARTS   AGE
...
heapster-5869b599bd-kxltn              1/1       Running   0          5m
monitoring-grafana-679f6b46cb-xxsr4    1/1       Running   0          5m
monitoring-influxdb-6f875dc468-7s4xz   1/1       Running   0          6m
...

- Access

Through the API Server

The Dashboard address is: https://<master-ip>:<apiserver-port>/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

However, the response may look like this:

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "services \"https:kubernetes-dashboard:\" is forbidden: User \"system:anonymous\" cannot get services/proxy in the namespace \"kube-system\"",

"reason": "Forbidden",

"details": {

"name": "https:kubernetes-dashboard:",

"kind": "services"

},

"code": 403

}

This is because recent versions of k8s enable RBAC by default and give unauthenticated users a default identity: anonymous.

The API Server authenticates clients with certificates, so we first need to create one:

1. First locate the kubectl configuration file. By default it is /etc/kubernetes/admin.conf; copy it to $HOME/.kube/config.

mkdir -p $HOME/.kube

cp /etc/kubernetes/admin.conf $HOME/.kube/config

2. Then use client-certificate-data and client-key-data to generate a p12 file with the following commands:

# Extract client-certificate-data

grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.crt

# Extract client-key-data

grep 'client-key-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.key

# Generate the p12 file

openssl pkcs12 -export -clcerts -inkey kubecfg.key -in kubecfg.crt -out kubecfg.p12 -name "kubernetes-client"

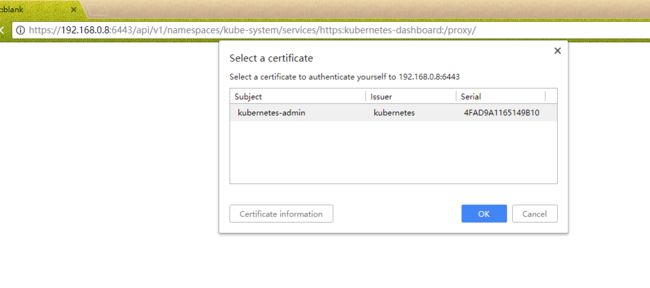

3.最后导入上面生成的p12文件,重新打开浏览器,显示如下:

然后输入Token,进入控制台。

我们可以使用一开始创建的admin-user用户的token进行登录,一切OK。
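The two grep pipelines in step 2 do the same thing for different fields, so they can be folded into one helper. The sketch below uses a made-up function name and assumes GNU `base64`; like the original pipelines it takes the first occurrence of the field in the kubeconfig and decodes it.

```shell
#!/bin/bash
# Decode one base64-encoded field (e.g. client-certificate-data) from a kubeconfig.
# Hypothetical helper; takes the first occurrence, like the grep | head pipeline.
decode_kubeconfig_field() {
  local field="$1" kubeconfig="${2:-$HOME/.kube/config}"
  awk -v key="${field}:" '$1 == key { print $2; exit }' "$kubeconfig" | base64 -d
}
```

With it, the two extraction steps become `decode_kubeconfig_field client-certificate-data > kubecfg.crt` and `decode_kubeconfig_field client-key-data > kubecfg.key`. Redirecting with `>` overwrites any earlier file, which avoids ending up with two certificates concatenated in one file after a second run.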

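For a production system, we should generate a separate certificate for each user, because different users have different namespace access permissions.

That is the whole process of configuring Kubernetes.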