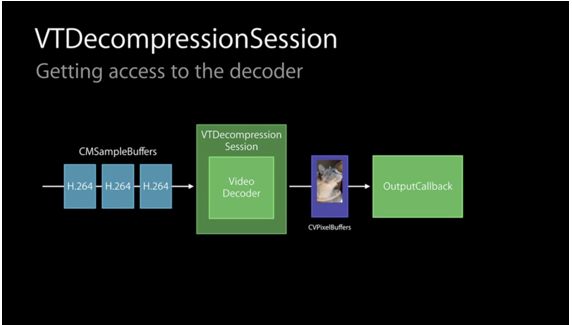

The decoder: VTDecompressionSession

Decodes compressed CMSampleBuffers into uncompressed CVPixelBuffers and delivers the result through a callback.

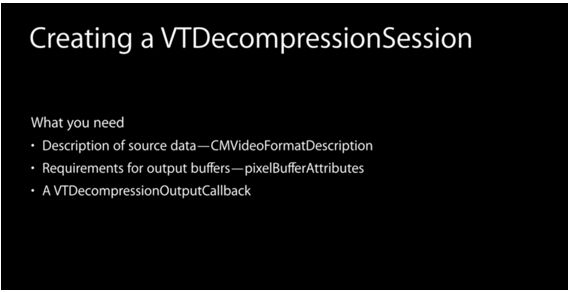

To create a decoder, you need to prepare the following data:

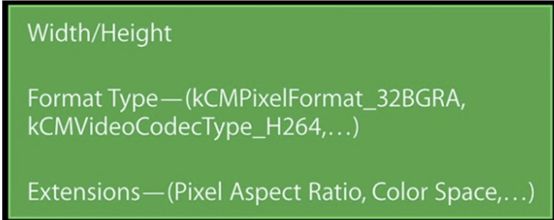

CMFormatDescription

Describes the basic properties of the video. It is sometimes written as CMVideoFormatDescriptionRef, which is simply an alias:

typedef CMFormatDescriptionRef CMVideoFormatDescriptionRef;

There are three APIs for creating a video format description object (CMFormatDescriptionRef).

Video decoding code generally uses the following approach:

const uint8_t* const parameterSetPointers[2] = { (const uint8_t*)[spsData bytes], (const uint8_t*)[ppsData bytes] };

const size_t parameterSetSizes[2] = { [spsData length], [ppsData length] };

status = CMVideoFormatDescriptionCreateFromH264ParameterSets(kCFAllocatorDefault, 2, parameterSetPointers, parameterSetSizes, 4, &videoFormatDescr);

The function is declared as follows:

OSStatus CMVideoFormatDescriptionCreateFromH264ParameterSets(

CFAllocatorRef CM_NULLABLE allocator, /*! @param allocator CFAllocator to be used when creating the CMFormatDescription. Pass NULL to use the default allocator. */

size_t parameterSetCount, /*! @param parameterSetCount The number of parameter sets to include in the format description. This parameter must be at least 2. */

const uint8_t * CM_NONNULL const * CM_NONNULL parameterSetPointers, /*! @param parameterSetPointers Points to a C array containing parameterSetCount pointers to parameter sets. */

const size_t * CM_NONNULL parameterSetSizes, /*! @param parameterSetSizes Points to a C array containing the size, in bytes, of each of the parameter sets. */

int NALUnitHeaderLength, /*! @param NALUnitHeaderLength Size, in bytes, of the NALUnitLength field in an AVC video sample or AVC parameter set sample. Pass 1, 2 or 4. */

CM_RETURNS_RETAINED_PARAMETER CMFormatDescriptionRef CM_NULLABLE * CM_NONNULL formatDescriptionOut ) /*! @param formatDescriptionOut Returned newly-created video CMFormatDescription */
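The SPS and PPS passed in parameterSetPointers usually have to be pulled out of an Annex B byte stream first. The following is a minimal sketch in plain C of how that split might look, assuming the stream uses 3- or 4-byte start codes; the names NalUnit, start_code_length and split_annexb are hypothetical helpers introduced here for illustration and are not part of VideoToolbox or Core Media.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One NAL unit located inside an Annex B buffer (start code excluded). */
typedef struct {
    const uint8_t *data;
    size_t size;
} NalUnit;

/* Returns the length of the start code at p (3 or 4), or 0 if there is none. */
static size_t start_code_length(const uint8_t *p, size_t remaining) {
    if (remaining >= 4 && p[0] == 0 && p[1] == 0 && p[2] == 0 && p[3] == 1) return 4;
    if (remaining >= 3 && p[0] == 0 && p[1] == 0 && p[2] == 1) return 3;
    return 0;
}

/* Fills out[] with up to max_units NAL units found in buf; returns how many were stored. */
static size_t split_annexb(const uint8_t *buf, size_t len, NalUnit *out, size_t max_units) {
    assert(buf != NULL && out != NULL);
    size_t count = 0;
    size_t i = 0;
    size_t payload_start = 0;
    int in_unit = 0; /* becomes 1 once the first start code has been seen */

    while (i < len) {
        size_t sc = start_code_length(buf + i, len - i);
        if (sc > 0) {
            /* A new start code closes the unit that was open, if any. */
            if (in_unit && count < max_units) {
                out[count].data = buf + payload_start;
                out[count].size = i - payload_start;
                count++;
            }
            i += sc;
            payload_start = i;
            in_unit = 1;
        } else {
            i++;
        }
    }
    /* The last unit runs to the end of the buffer. */
    if (in_unit && payload_start < len && count < max_units) {
        out[count].data = buf + payload_start;
        out[count].size = len - payload_start;
        count++;
    }
    return count;
}
```

With the units split this way, the ones whose first byte masked with 0x1F equals 7 and 8 would be the SPS and PPS to hand to CMVideoFormatDescriptionCreateFromH264ParameterSets.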

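The second approach builds the description from avcC extradata. CFMutableDictionarySetData and CFMutableDictionarySetObject are not CoreFoundation functions, so the standard CFDataCreate and CFDictionarySetValue calls are used here:

CFMutableDictionaryRef atoms = CFDictionaryCreateMutable(NULL, 0, &kCFTypeDictionaryKeyCallBacks,&kCFTypeDictionaryValueCallBacks);

CFDataRef avcCData = CFDataCreate(NULL, (const UInt8 *)extradata, extradata_size);

CFDictionarySetValue(atoms, CFSTR ("avcC"), avcCData);

CFRelease(avcCData);

CFMutableDictionaryRef extensions = CFDictionaryCreateMutable(NULL, 0, &kCFTypeDictionaryKeyCallBacks, &kCFTypeDictionaryValueCallBacks);

CFDictionarySetValue(extensions, CFSTR ("SampleDescriptionExtensionAtoms"), atoms);

CFRelease(atoms);

CMVideoFormatDescriptionCreate(NULL, format_id, width, height, extensions, &videoFormatDescr);

CFRelease(extensions);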

The third approach creates it from a CVImageBufferRef:

OSStatus CMVideoFormatDescriptionCreateForImageBuffer(

CFAllocatorRef CM_NULLABLE allocator, /*! @param allocator CFAllocator to be used when creating the CMFormatDescription. NULL will cause the default allocator to be used */

CVImageBufferRef CM_NONNULL imageBuffer, /*! @param imageBuffer Image buffer for which we are creating the format description. */

CM_RETURNS_RETAINED_PARAMETER CMVideoFormatDescriptionRef CM_NULLABLE * CM_NONNULL formatDescriptionOut) /*! @param formatDescriptionOut Returned newly-created video CMFormatDescription */

pixelBufferAttributes

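When creating the decode session you also provide some hints for the decoder, such as whether the decoded output should be OpenGL ES compatible and whether YUV should be converted to RGB. The conversion is usually left unset: converting in OpenGL is more efficient, whereas converting in VideoToolbox uses more memory and also costs CPU. For example: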

NSDictionary *destinationImageBufferAttributes =[NSDictionary dictionaryWithObjectsAndKeys:[NSNumber numberWithBool:NO],(id)kCVPixelBufferOpenGLESCompatibilityKey,[NSNumber numberWithInt:kCVPixelFormatType_32BGRA],(id)kCVPixelBufferPixelFormatTypeKey,nil];

VTDecompressionOutputCallbackRecord

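Creating a decode session requires a callback so that the system can return the decoded data, status, and other information to the caller when decoding completes.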

VTDecompressionOutputCallbackRecord callback;

callback.decompressionOutputCallback = didDecompress;

callback.decompressionOutputRefCon = (__bridge void *)self;

The callback prototype is shown below. It is called once for every decoded video frame.

void didDecompress( void *decompressionOutputRefCon, void *sourceFrameRefCon, OSStatus status, VTDecodeInfoFlags infoFlags, CVImageBufferRef imageBuffer, CMTime presentationTimeStamp, CMTime presentationDuration )

VTDecompressionSessionRef

With the preparation done, the decode session can now be created with VTDecompressionSessionCreate.

Declaration:

OSStatus

VTDecompressionSessionCreate(

CM_NULLABLE CFAllocatorRef allocator,

CM_NONNULL CMVideoFormatDescriptionRef videoFormatDescription,

CM_NULLABLE CFDictionaryRef videoDecoderSpecification,

CM_NULLABLE CFDictionaryRef destinationImageBufferAttributes,

const VTDecompressionOutputCallbackRecord * CM_NULLABLE outputCallback,

CM_RETURNS_RETAINED_PARAMETER CM_NULLABLE VTDecompressionSessionRef * CM_NONNULL decompressionSessionOut)

Creating the session:

OSStatus status = VTDecompressionSessionCreate(kCFAllocatorDefault,

_decoderFormatDescription,

NULL,

(__bridge CFDictionaryRef)destinationPixelBufferAttributes,

&callBackRecord,

&_deocderSession);

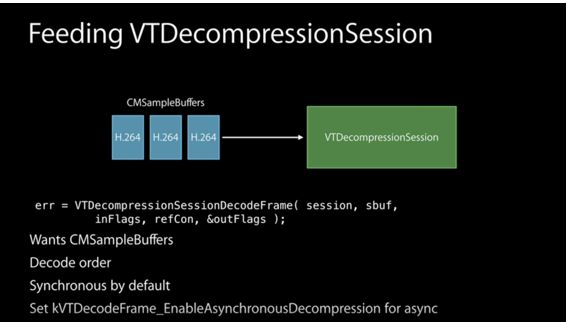

Decoding with VTDecompressionSessionDecodeFrame

To feed the decoder you need the compressed data wrapped in a CMSampleBufferRef.
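The data inside that sample buffer is expected to be length-prefixed: each NAL unit is preceded by a big-endian length field (4 bytes here, matching the NALUnitHeaderLength of 4 used above). As a rough sketch in plain C, a buffer can be sanity-checked before it is handed to the decoder; read_be32 and count_length_prefixed_nalus are hypothetical helper names, not framework APIs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Reads a 4-byte big-endian value, independent of the host byte order. */
static uint32_t read_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* Counts the NAL units in a length-prefixed sample.
   Returns -1 if a length field is truncated or runs past the end of the buffer. */
static int count_length_prefixed_nalus(const uint8_t *sample, size_t size) {
    assert(sample != NULL);
    size_t offset = 0;
    int count = 0;
    while (offset < size) {
        if (size - offset < 4) return -1;          /* truncated length field */
        uint32_t nal_size = read_be32(sample + offset);
        offset += 4;
        if (nal_size > size - offset) return -1;   /* payload exceeds buffer */
        offset += nal_size;
        count++;
    }
    return count;
}
```

A negative result suggests the start code was not replaced by a length, or that the frame was cut short in transit.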

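1. CMBlockBufferCreateWithMemoryBlock

Wrap the NALU data in a CMBlockBuffer. Because kCFAllocatorNull is passed as the block allocator, the block buffer references the existing memory rather than copying it, so frame must stay valid for as long as the buffer is in use.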

CMBlockBufferRef blockBuffer = NULL;

OSStatus status = CMBlockBufferCreateWithMemoryBlock(NULL,

(void *)frame,

frameSize,

kCFAllocatorNull,

NULL,

0,

frameSize,

FALSE,

&blockBuffer);

2. CMSampleBufferCreateReady

Create the CMSampleBufferRef

CMSampleBufferRef sampleBuffer = NULL;

const size_t sampleSizeArray[] = {frameSize};

status = CMSampleBufferCreateReady(kCFAllocatorDefault,

blockBuffer,

_decoderFormatDescription ,

1, 0, NULL, 1, sampleSizeArray,

&sampleBuffer);

3. VTDecompressionSessionDecodeFrame

CVPixelBufferRef outputPixelBuffer = NULL;

VTDecodeFrameFlags flags = 0;

VTDecodeInfoFlags flagOut = 0;

OSStatus decodeStatus = VTDecompressionSessionDecodeFrame(_deocderSession,

sampleBuffer,

flags,

&outputPixelBuffer,

&flagOut);

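The CMSampleBuffer data structure before and after decoding

The decoder code is attached below. It can be copied into a project and used directly.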

#import <Foundation/Foundation.h>

#import <CoreVideo/CoreVideo.h>

NS_ASSUME_NONNULL_BEGIN

@protocol H264DecoderDelegate <NSObject>

- (void)didDecodedFrame:(CVImageBufferRef )imageBuffer;

@end

@interface H264Decoder : NSObject

@property (weak, nonatomic) id<H264DecoderDelegate> delegate;

- (BOOL)initH264Decoder;

- (void)decodeNalu:(uint8_t *)frame withSize:(uint32_t)frameSize;

@end

NS_ASSUME_NONNULL_END

#import "H264Decoder.h"

#import <VideoToolbox/VideoToolbox.h>

#define h264outputWidth 800

#define h264outputHeight 600

@interface H264Decoder ()

@property (nonatomic, assign) uint8_t *sps;

@property (nonatomic, assign) NSInteger spsSize;

@property (nonatomic, assign) uint8_t *pps;

@property (nonatomic, assign) NSInteger ppsSize;

@property (nonatomic, assign) VTDecompressionSessionRef deocderSession;

@property (nonatomic, assign) CMVideoFormatDescriptionRef decoderFormatDescription;

@end

@implementation H264Decoder

#pragma mark - public

- (BOOL)initH264Decoder {

if(_deocderSession) {

return YES;

}

const uint8_t* const parameterSetPointers[2] = { _sps, _pps };

const size_t parameterSetSizes[2] = { _spsSize, _ppsSize };

OSStatus status = CMVideoFormatDescriptionCreateFromH264ParameterSets(kCFAllocatorDefault,

2, //param count

parameterSetPointers,

parameterSetSizes,

4, // size in bytes of the NAL unit length prefix (not the start code)

&_decoderFormatDescription);

if(status == noErr) {

NSDictionary* destinationPixelBufferAttributes = @{

(id)kCVPixelBufferPixelFormatTypeKey : [NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange],

// Hardware decoding must use kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange

// or kCVPixelFormatType_420YpCbCr8Planar,

// because iOS uses NV12 while other platforms use NV21

(id)kCVPixelBufferWidthKey : [NSNumber numberWithInt:h264outputHeight*2],

(id)kCVPixelBufferHeightKey : [NSNumber numberWithInt:h264outputWidth*2],

// Width and height here are swapped relative to the encoder

(id)kCVPixelBufferOpenGLCompatibilityKey : [NSNumber numberWithBool:YES]

};

VTDecompressionOutputCallbackRecord callBackRecord;

callBackRecord.decompressionOutputCallback = didDecompress;

callBackRecord.decompressionOutputRefCon = (__bridge void *)self;

status = VTDecompressionSessionCreate(kCFAllocatorDefault,

_decoderFormatDescription,

NULL,

(__bridge CFDictionaryRef)destinationPixelBufferAttributes,

&callBackRecord,

&_deocderSession);

VTSessionSetProperty(_deocderSession, kVTDecompressionPropertyKey_ThreadCount, (__bridge CFTypeRef)[NSNumber numberWithInt:1]);

VTSessionSetProperty(_deocderSession, kVTDecompressionPropertyKey_RealTime, kCFBooleanTrue);

} else {

NSLog(@"IOS8VT: reset decoder session failed status=%d", (int)status);

}

return _deocderSession != NULL; // report failure when the session could not be created

}

- (void)decodeNalu:(uint8_t *)frame withSize:(uint32_t)frameSize {

// NSLog(@">>>>>>>>>>start decoding");

int nalu_type = (frame[4] & 0x1F); // frame[4] is the first byte after the 4-byte start code. 0x1F is 31 in decimal and 11111 in binary, so the AND keeps the low 5 bits of that byte, which hold the NALU type: 5 is an IDR frame, 7 is SPS, 8 is PPS

CVPixelBufferRef pixelBuffer = NULL;

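// Replace the NALU start code with the NALU length as a 4-byte big-endian value

/**

Byte order is either big-endian or little-endian, defined as follows

Big endian: the most significant byte is stored at the lowest address

Little endian: the least significant byte is stored at the lowest address

Among mainstream CPUs, the Intel family stores data in little-endian format, Motorola CPUs use big-endian, and ARM supports both. In network programming, TCP/IP always transmits data in big-endian order, so big-endian is sometimes called network byte order.

https://www.cnblogs.com/Romi/archive/2012/01/10/2318551.html

1. Big-endian and little-endian layouts and how to tell them apart

An example: my machine runs 32-bit Windows on an AMD processor. Take the int value 0x12345678, written in hexadecimal for convenience. On CPUs with different byte orders it is stored as follows:

0x12345678 is hexadecimal; every two digits are one byte

Most significant byte -> least significant byte: 12 34 56 78

Low address -> high address

Big endian: 12 34 56 78

Little endian: 78 56 34 12

The code below converts little-endian to big-endian

At work I ran into a case where the data was stored big-endian while the machine was little-endian, so it had to be converted or using it would cause problems

Someone handles it like this: https://www.cnblogs.com/sunminmin/p/4976418.html

B. Replace the separator code with a 4-byte length code (the length of the NalUnit including the unit code)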

int reomveHeaderSize = packet.size - 4;

const uint8_t sourceBytes[] = {(uint8_t)(reomveHeaderSize >> 24), (uint8_t)(reomveHeaderSize >> 16), (uint8_t)(reomveHeaderSize >> 8), (uint8_t)reomveHeaderSize};

status = CMBlockBufferReplaceDataBytes(sourceBytes, videoBlock, 0, 4);

*/

uint32_t nalSize = (uint32_t)(frameSize - 4);

uint8_t *pNalSize = (uint8_t*)(&nalSize);

frame[0] = *(pNalSize + 3);

frame[1] = *(pNalSize + 2);

frame[2] = *(pNalSize + 1);

frame[3] = *(pNalSize);

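// During transmission, key frames must not lose data or the picture turns green; B/P frames can be dropped, but playback will stutter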

switch (nalu_type)

{

case 0x05:

// NSLog(@"nalu_type:%d Nal type is IDR frame",nalu_type); // key frame

if([self initH264Decoder])

{

pixelBuffer = [self decode:frame withSize:frameSize];

}

break;

case 0x07:

// NSLog(@"nalu_type:%d Nal type is SPS",nalu_type); //sps

_spsSize = frameSize - 4;

free(_sps); // release any previously stored SPS; free(NULL) is a no-op

_sps = malloc(_spsSize);

memcpy(_sps, &frame[4], _spsSize);

break;

case 0x08:

{

// NSLog(@"nalu_type:%d Nal type is PPS",nalu_type); //pps

_ppsSize = frameSize - 4;

free(_pps); // release any previously stored PPS; free(NULL) is a no-op

_pps = malloc(_ppsSize);

memcpy(_pps, &frame[4], _ppsSize);

break;

}

default:

{

// NSLog(@"Nal type is B/P frame"); // other frames

if([self initH264Decoder])

{

pixelBuffer = [self decode:frame withSize:frameSize];

}

break;

}

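}

// The callback retained the decoded buffer and nothing below uses it, so release it here (CVPixelBufferRelease is NULL-safe)

CVPixelBufferRelease(pixelBuffer);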

}

#pragma mark - private

// Decode callback

static void didDecompress(void *decompressionOutputRefCon, void *sourceFrameRefCon, OSStatus status, VTDecodeInfoFlags infoFlags, CVImageBufferRef pixelBuffer, CMTime presentationTimeStamp, CMTime presentationDuration) {

CVPixelBufferRef *outputPixelBuffer = (CVPixelBufferRef *)sourceFrameRefCon;

*outputPixelBuffer = CVPixelBufferRetain(pixelBuffer);

H264Decoder *decoder = (__bridge H264Decoder *)decompressionOutputRefCon;

if (decoder.delegate!=nil)

{

[decoder.delegate didDecodedFrame:pixelBuffer];

}

}

-(CVPixelBufferRef)decode:(uint8_t *)frame withSize:(uint32_t)frameSize {

CVPixelBufferRef outputPixelBuffer = NULL;

CMBlockBufferRef blockBuffer = NULL;

OSStatus status = CMBlockBufferCreateWithMemoryBlock(NULL,

(void *)frame,

frameSize,

kCFAllocatorNull,

NULL,

0,

frameSize,

FALSE,

&blockBuffer);

if(status == kCMBlockBufferNoErr) {

CMSampleBufferRef sampleBuffer = NULL;

const size_t sampleSizeArray[] = {frameSize};

status = CMSampleBufferCreateReady(kCFAllocatorDefault,

blockBuffer,

_decoderFormatDescription ,

1, 0, NULL, 1, sampleSizeArray,

&sampleBuffer);

if (status == noErr && sampleBuffer) {

VTDecodeFrameFlags flags = 0;

VTDecodeInfoFlags flagOut = 0;

OSStatus decodeStatus = VTDecompressionSessionDecodeFrame(_deocderSession,

sampleBuffer,

flags,

&outputPixelBuffer,

&flagOut);

if(decodeStatus == kVTInvalidSessionErr) {

NSLog(@"IOS8VT: Invalid session, reset decoder session");

} else if(decodeStatus == kVTVideoDecoderBadDataErr) {

NSLog(@"IOS8VT: decode failed status=%d(Bad data)", (int)decodeStatus);

} else if(decodeStatus != noErr) {

NSLog(@"IOS8VT: decode failed status=%d", (int)decodeStatus);

}

CFRelease(sampleBuffer);

}

CFRelease(blockBuffer);

}

return outputPixelBuffer;

}

@end
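The NALU type check at the top of decodeNalu can be tried out on its own. Below is a small plain-C sketch of the same masking step, assuming the frame starts with a 4-byte start code as in the decoder above; the enum constants and the function names nalu_type_after_start_code and nalu_is_parameter_set are made up for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* NAL unit types handled by the decoder above. */
enum {
    NALU_TYPE_IDR = 5, /* key frame */
    NALU_TYPE_SPS = 7, /* sequence parameter set */
    NALU_TYPE_PPS = 8  /* picture parameter set */
};

/* Returns the NAL unit type: the low 5 bits of the byte that follows
   the 4-byte start code (00 00 00 01). */
static int nalu_type_after_start_code(const uint8_t *frame, size_t frame_size) {
    assert(frame != NULL && frame_size > 4);
    return frame[4] & 0x1F;
}

/* Returns 1 for SPS/PPS, which are stored rather than sent to the decoder. */
static int nalu_is_parameter_set(int type) {
    return type == NALU_TYPE_SPS || type == NALU_TYPE_PPS;
}
```

The upper three bits of that byte carry the forbidden zero bit and nal_ref_idc, which is why bytes such as 0x67 and 0x27 both map to type 7.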

Question

What is the purpose of this code from the listing above?

uint32_t nalSize = (uint32_t)(frameSize - 4);

uint8_t *pNalSize = (uint8_t*)(&nalSize);

frame[0] = *(pNalSize + 3);

frame[1] = *(pNalSize + 2);

frame[2] = *(pNalSize + 1);

frame[3] = *(pNalSize);

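The VideoToolbox decoder only accepts MPEG-4 style streams, in which every NAL unit is prefixed with its length (the AVCC layout). When the input is H.264 in Elementary Stream form (Annex B), the Start Code (3- or 4-byte header) has to be replaced with a Length (4-byte header) written in big-endian order. The pointer arithmetic above reverses the bytes of nalSize, which produces big-endian output on a little-endian CPU.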
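The pointer-based swap in the listing only gives the right result on a little-endian host. As a hedged alternative, the same replacement can be written with shifts so that it does not depend on the host byte order. This is a sketch in plain C that assumes a 4-byte start code; annexb_to_length_prefixed is a hypothetical name, not a VideoToolbox function.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Overwrites a leading 4-byte start code (00 00 00 01) with the big-endian
   length of the NAL unit that follows it.
   Returns 0 on success, -1 if the buffer does not begin with that start code. */
static int annexb_to_length_prefixed(uint8_t *frame, size_t frame_size) {
    if (frame == NULL || frame_size < 4) return -1;
    if (frame[0] != 0 || frame[1] != 0 || frame[2] != 0 || frame[3] != 1) return -1;

    assert(frame_size - 4 <= UINT32_MAX); /* the length must fit in 4 bytes */
    uint32_t nal_size = (uint32_t)(frame_size - 4);

    /* Shifts extract bytes by significance, so the output is big-endian
       on both little-endian and big-endian hosts. */
    frame[0] = (uint8_t)(nal_size >> 24);
    frame[1] = (uint8_t)(nal_size >> 16);
    frame[2] = (uint8_t)(nal_size >> 8);
    frame[3] = (uint8_t)(nal_size);
    return 0;
}
```

A stream that uses 3-byte start codes cannot be patched in place this way, because the length field needs one more byte than the start code occupies; such input would have to be copied into a new buffer.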