编程实战(1)——爬取牛客ACM编程题信息

编程实战(1)——爬取牛客ACM编程题信息

文章目录

- 编程实战(1)——爬取牛客ACM编程题信息

-

-

- 简述

- 网页分析

- 代码解释

-

- 信息头和存储结构

- 获取题号列表

- 题目号、限制信息、题目标题

- 题目描述

- 奇怪字符串的处理

- 输入输出样例

- 标签、难度

- 转json文件输出

- 源码

-

简述

最近做项目需要把ACM牛客网的编程题里面的信息爬出来然后进行分析。需要爬取的数据有:题目号(NC开头)、标题名、难度、知识点、题目描述信息、限制信息和输入输出样例等;

-

运行环境:Anaconda python 3.8;

-

爬虫库是BeautifulSoup+requests,安装教程可以自行参考相关博客;

-

爬取的基网址:https://ac.nowcoder.com/acm/problem/list(不是牛客首页,因为首页里面的编程题比较少,所以当时为了追求题目量选择直接爬acm里面的题库)

网页分析

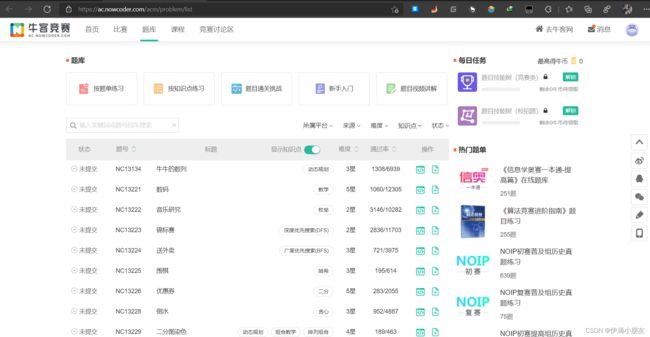

首先登录基网址(没有账号的一定要注册一个账号,因为查看题目详细信息需要用户登录的状态),可以看到题目列表。

往下翻会有分页,一共有两万多页,咱们也要不了那么多题目,所以只爬大概三十页这也就可以了。

点击不同的页面,他的url会变成这样的格式:https://ac.nowcoder.com/acm/problem/list?keyword=&tagId=&platformTagId=0&sourceTagId=0&difficulty=0&status=all&order=id&asc=true&pageSize=50&page= + 页号,因此我们可以用这个页号的变化来遍历不同的页。

题目列表中有每一题的题号、标题、知识点、难度信息,如果还要获取输入输出、限制和题目描述信息的话得点进去才行。我们以第一题牛牛的数列为例来讲一下原理。

这里一定要有账号登录,不然爬虫程序执行的时候网页会不给你爬!

点进去的页面是这样的,里面我们想要的各种信息(但是没有知识点标签)。然后观察一下网页的url,发现格式是:https://ac.nowcoder.com/acm/problem/ + 题目数字编号。

因此,我们可以利用这两种url的固定格式来完成大量的遍历爬取,具体思路是:

- 遍历每一页,爬取每一页所有题目的题号、难度、标签(因为详情页面没有标签信息和难度),将题号存成一个列表numlist;

- 利用这一页爬好的题号列表numlist,遍历之,把题号跟https://ac.nowcoder.com/acm/problem/ 拼接,进入每个详情页面,爬取其他详细信息;

- 把所有的信息整理输出;

for i in 页面范围:

#爬取每一页的所有题号列表numlist

for j in numlist:

#根据每个题号进入对应详情页面,爬取信息

#存储

接下来是代码部分。

代码解释

这一部分涉及到的元素属性名选择器的地方请对照网页F12的元素栏来参考;

信息头和存储结构

url = "https://ac.nowcoder.com/acm/problem/list? keyword=&tagId=&platformTagId=0&sourceTagId=0&difficulty=0&status=all&order=id&asc=true&pageSize=50&page=" + str(page)

headers = {

"User-Agent": '你的user agent',

"Cookie": '你的cookie'

}

这两个参数的获取方式简单提一下步骤:

- 在已经登录的页面上右键->检查、在菜单栏种找到“网络”,然后按ctrl+R,选择列表中第一个(一般名字是题号);

- 在右边那一栏中找到cookie和user agent,copy下来即可;

图例(user agent同理):

存储结构方面,我是用了简单粗暴的方法:先每个属性搞了一个列表,遍历的时候一个个添加,也可以用其他的方法。

QNum = []

QTitle = []

QDifficulty = []

QContent = []

QTag = []

QTimeLimit = []

QSpaceLimit = []

QInput = []

QOutput = []

获取题号列表

response = requests.get(url, headers=headers) #获取请求

BasicSoup = BeautifulSoup(response.content, 'lxml') #用bs4爬取list全部的页面元素

tablelist = BasicSoup.find_all(name="table", attrs={"class": "no-border"})[0].find_all(name="tr")[1:] #根据属性class、属性名为no-border来寻找符合条件的table元素,然后获取除去表头之外所有的tr元素

numlist = [] #存储题号的列表

for i in tablelist:

item = i.attrs['data-problemid'] #获取所有tr元素的data-problemid属性值

numlist.append(item) #添加属性

题目号、限制信息、题目标题

urls = 'https://ac.nowcoder.com/acm/problem/' + i #每个详情页面的url

responses = requests.get(urls, headers=headers)

content = responses.content

soup = BeautifulSoup(content, 'lxml') #得到所有元素的html文档

QTitle.append(soup.find_all(name="div", attrs={"class": "question-title"})[0].text.strip("\n"))#获取标题

mainContent = soup.find_all(name="div", attrs={"class": "terminal-topic"})[0]#找到主体部分

div = mainContent.find_all(name="div", attrs={"class": "subject-item-wrap"})[0].find_all("span")#找到题号、限制信息所在的div

num = div[0].text.strip("题号:") #其实前面已经爬过了一次了。。

QNum.append(num)

QTimeLimit.append(div[1].text.strip("时间限制:"))

QSpaceLimit.append(div[2].text.strip("空间限制:"))#获取信息

题目描述

div1 = mainContent.find_all(name="div", attrs={"class": "subject-question"})[0]#题目描述

div2 = mainContent.find_all(name="pre")[0]#输入描述

div3 = mainContent.find_all(name="pre")[1]#输出描述

descriptDict = {"题目描述:": divTextProcess(div1), "输入描述:": divTextProcess(div2), "输出描述:": divTextProcess(div3)}#这三个信息都属于题目描述,弄成一个字典

QContent.append(descriptDict)#把字典存到题目描述列表中

奇怪字符串的处理

def divTextProcess(div):

strBuffer = div.get_text() #获取文本

strBuffer = strBuffer.replace("{", " $").replace("}", "$ ") #替换公式标记

strBuffer = strBuffer.replace(" ", "") #去除多个空格

strBuffer = strBuffer.replace("\n\n\n", "\n") #去除多个换行符

strBuffer = strBuffer.replace("\xa0", "") #去除内容中用\xa0表示的空格

strBuffer = strBuffer.strip() #去除首位空格

return strBuffer

输入输出样例

div4 = mainContent.find_all(name="div", attrs={"class": "question-oi-cont"})[0]

div5 = mainContent.find_all(name="div", attrs={"class": "question-oi-cont"})[1]

QInput.append(divTextProcess(div4))

QOutput.append(divTextProcess(div5))

标签、难度

这里因为是开始爬了才发现标签不在详细页面里面,所以必须返回到对应的list页面中爬,逻辑有点点乱。。。。

response = requests.get(url, headers=headers)

BasicSoup = BeautifulSoup(response.content, 'lxml')#返回list页面

diff = BasicSoup.find_all(name="tr", attrs={"data-problemid": i})[0].find_all(name="td")[3].text.strip("\n")

QDifficulty.append(diff)#获取难度信息

problem = BasicSoup.find_all(name="tr", attrs={"data-problemid": i})[0].find_all(name="a", attrs={

"class": "tag-label js-tag"})

tag = "" #由于一道题目的标签可能不止一个,因此为了后续的格式化,做一下字符串处理

count = 0 #指针,如果是第一个标签就不要在前面加逗号

for i in problem:

if count == 0:

tag = tag + i.text

else:

tag = tag + "," + i.text

count = count + 1

QTag.append(tag)#获取标签信息

转json文件输出

result = {}#python里面的json库必须要字典型数据

for i in range(len(QNum)):#存储

message = {}

message.update({"questionNum": QNum[i]})

message.update({"questionTitle": QTitle[i]})

message.update({"difficulty": QDifficulty[i]})

message.update({"content": QContent[i]})

message.update({"PositiveTags": QTag[i]})

message.update({"TimeLimit": QTimeLimit[i]})

message.update({"SpaceLimit": QSpaceLimit[i]})

message.update({"Input": QInput[i]})

message.update({"Output": QOutput[i]})

result.update({str(i+1): message})

with open("文件名.json","w",encoding="UTF-8") as f:

json.dump(result, f, ensure_ascii=False) #输出文件

源码

import json

import requests

from bs4 import BeautifulSoup

def divTextProcess(div):

strBuffer = div.get_text() #获取文本

strBuffer = strBuffer.replace("{", " $").replace("}", "$ ") #替换公式标记

strBuffer = strBuffer.replace(" ", "") #去除多个空格

strBuffer = strBuffer.replace("\n\n\n", "\n") #去除多个换行符

strBuffer = strBuffer.replace("\xa0", "") #去除内容中用\xa0表示的空格

strBuffer = strBuffer.strip() #去除首位空格

return strBuffer

QNum = []

QTitle = []

QDifficulty = []

QContent = []

QTag = []

QTimeLimit = []

QSpaceLimit = []

QInput = []

QOutput = []

for page in range(1, 36):

print("page " + str(page) + " begin----------------------------")

url = "https://ac.nowcoder.com/acm/problem/list?keyword=&tagId=&platformTagId=0&sourceTagId=0&difficulty=0&status=all&order=id&asc=true&pageSize=50&page=" + str(

page)

headers = {

"User-Agent": '你的user agent',

"Cookie": '你的cookie'

}

response = requests.get(url, headers=headers)

BasicSoup = BeautifulSoup(response.content, 'lxml')

tablelist = BasicSoup.find_all(name="table", attrs={"class": "no-border"})[0].find_all(name="tr")[1:]

numlist = []

for i in tablelist:

item = i.attrs['data-problemid']

numlist.append(item)

for i in numlist:

urls = 'https://ac.nowcoder.com/acm/problem/' + i

headers = {

"User-Agent": '你的user agent',

"Cookie": '你的cookie'

}

responses = requests.get(urls, headers=headers)

content = responses.content

soup = BeautifulSoup(content, 'lxml')

QTitle.append(soup.find_all(name="div", attrs={"class": "question-title"})[0].text.strip("\n"))

mainContent = soup.find_all(name="div", attrs={"class": "terminal-topic"})[0]

div = mainContent.find_all(name="div", attrs={"class": "subject-item-wrap"})[0].find_all("span")

num = div[0].text.strip("题号:")

QNum.append(num)

QTimeLimit.append(div[1].text.strip("时间限制:"))

QSpaceLimit.append(div[2].text.strip("空间限制:"))

div1 = mainContent.find_all(name="div", attrs={"class": "subject-question"})[0]

div2 = mainContent.find_all(name="pre")[0]

div3 = mainContent.find_all(name="pre")[1]

descriptDict = {"题目描述:": divTextProcess(div1), "输入描述:": divTextProcess(div2), "输出描述:": divTextProcess(div3)}

QContent.append(descriptDict)

div4 = mainContent.find_all(name="div", attrs={"class": "question-oi-cont"})[0]

div5 = mainContent.find_all(name="div", attrs={"class": "question-oi-cont"})[1]

QInput.append(divTextProcess(div4))

QOutput.append(divTextProcess(div5))

response = requests.get(url, headers=headers)

BasicSoup = BeautifulSoup(response.content, 'lxml')

diff = BasicSoup.find_all(name="tr", attrs={"data-problemid": i})[0].find_all(name="td")[3].text.strip("\n")

QDifficulty.append(diff)

problem = BasicSoup.find_all(name="tr", attrs={"data-problemid": i})[0].find_all(name="a", attrs={

"class": "tag-label js-tag"})

tag = ""

count = 0

for i in problem:

if count == 0:

tag = tag + i.text

else:

tag = tag + "," + i.text

count = count + 1

QTag.append(tag)

print("-----------------" + str(num) + " finished-----------------")

print("page " + str(page) + " finished----------------------------\n")

# print(QNum)

# print(QTitle)

# print(QTag)

# print(QContent)

# print(QDifficulty)

# print(QInput)

# print(QOutput)

# print(QSpaceLimit)

# print(QTimeLimit)

result = {}

for i in range(len(QNum)):

message = {}

message.update({"questionNum": QNum[i]})

message.update({"questionTitle": QTitle[i]})

message.update({"difficulty": QDifficulty[i]})

message.update({"content": QContent[i]})

message.update({"PositiveTags": QTag[i]})

message.update({"TimeLimit": QTimeLimit[i]})

message.update({"SpaceLimit": QSpaceLimit[i]})

message.update({"Input": QInput[i]})

message.update({"Output": QOutput[i]})

result.update({str(i+1): message})

# for item in result.items():

# print(item)

with open("NiuKeACM.json","w",encoding="UTF-8") as f:

json.dump(result, f, ensure_ascii=False)