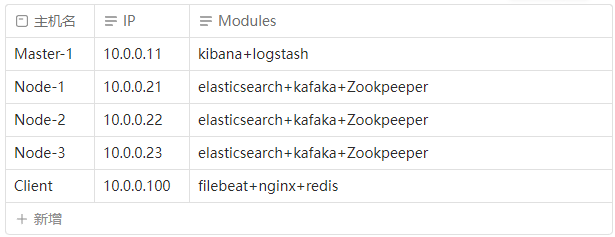

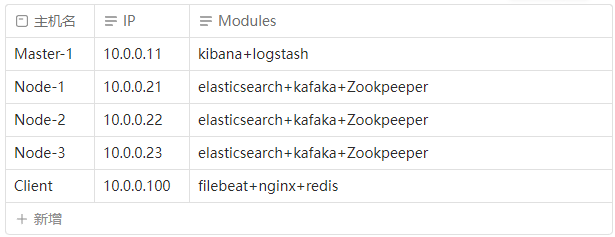

Elasticsearch

Node-1

# Install Docker

sudo yum update -y

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo yum install docker-ce -y;

docker -v;

mkdir -p /etc/docker;

cd /etc/docker;

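# daemon.json: contents not preserved in the source

mkdir -p /data/modules/es/config /data/modules/es/data /data/modules/es/logs /data/modules/es/certs

cd /data/modules/es/config

cat > elasticsearch.yml << EOF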

cluster.name: es-cluster

node.name: node-1

network.host: 0.0.0.0

network.publish_host: 10.0.0.21

http.port: 9200

transport.port: 9300

bootstrap.memory_lock: true

discovery.seed_hosts: ["10.0.0.21:9300","10.0.0.22:9300","10.0.0.23:9300"]

cluster.initial_master_nodes: ["10.0.0.21","10.0.0.22","10.0.0.23"]

http.cors.enabled: true

http.cors.allow-origin: "*"

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: "certs/elastic-certificates.p12"

xpack.security.transport.ssl.truststore.path: "certs/elastic-certificates.p12"

xpack.monitoring.collection.enabled: true

xpack.monitoring.exporters.my_local.type: local

xpack.monitoring.exporters.my_local.use_ingest: false

EOF
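The three elasticsearch.yml files in this guide differ only in node.name and network.publish_host. As a sketch, the file could be generated per node instead of copied by hand. The helper name render_es_config is hypothetical, and it assumes the 10.0.0.2N addressing used throughout this guide.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the elasticsearch.yml used in this guide for node N (1-3).
# Only node.name and network.publish_host change; peers are assumed to be 10.0.0.21-23.
render_es_config() {
  local n="$1"
  case "$n" in
    1|2|3) ;;
    *) echo "node index must be 1, 2 or 3" >&2; return 1 ;;
  esac
  cat << EOF
cluster.name: es-cluster
node.name: node-${n}
network.host: 0.0.0.0
network.publish_host: 10.0.0.2${n}
http.port: 9200
transport.port: 9300
bootstrap.memory_lock: true
discovery.seed_hosts: ["10.0.0.21:9300","10.0.0.22:9300","10.0.0.23:9300"]
cluster.initial_master_nodes: ["10.0.0.21","10.0.0.22","10.0.0.23"]
http.cors.enabled: true
http.cors.allow-origin: "*"
xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
xpack.security.transport.ssl.keystore.path: "certs/elastic-certificates.p12"
xpack.security.transport.ssl.truststore.path: "certs/elastic-certificates.p12"
xpack.monitoring.collection.enabled: true
xpack.monitoring.exporters.my_local.type: local
xpack.monitoring.exporters.my_local.use_ingest: false
EOF
}
```

On Node-2, for example: `render_es_config 2 > /data/modules/es/config/elasticsearch.yml`.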

# Set ownership and open ports

chown es:es /data/modules -R

firewall-cmd --zone=public --add-port=9100/tcp --permanent;

firewall-cmd --zone=public --add-port=9200/tcp --permanent;

firewall-cmd --zone=public --add-port=9300/tcp --permanent;

firewall-cmd --zone=public --add-service=http --permanent;

firewall-cmd --zone=public --add-service=https --permanent;

firewall-cmd --reload;

firewall-cmd --list-all;
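The same firewall-cmd pattern is repeated for every port. A minimal wrapper could be used instead. It assumes firewalld is running, and open_ports is a hypothetical name. Setting DRY_RUN=1 prints the commands without running them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: opens each given TCP port in the public zone, then reloads firewalld.
# With DRY_RUN=1 the firewall-cmd commands are only printed.
open_ports() {
  local run=""
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  local port
  for port in "$@"; do
    $run firewall-cmd --zone=public --add-port="${port}/tcp" --permanent
  done
  $run firewall-cmd --reload
}
```

Usage on an Elasticsearch node: `open_ports 9100 9200 9300`.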

# Container network

systemctl restart docker

docker network create \
--driver=bridge \
--subnet=10.10.10.0/24 \
--ip-range=10.10.10.0/24 \
--gateway=10.10.10.254 \
es-net

## Create the container

docker run --name es \
-d --network=es-net \
--ip=10.10.10.21 \
--restart=always \
--publish 9200:9200 \
--publish 9300:9300 \
--privileged=true \
--ulimit nofile=655350 \
--ulimit memlock=-1 \
--memory=2G \
--memory-swap=-1 \
--volume /data/modules/es/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
--volume /data/modules/es/data:/usr/share/elasticsearch/data \
--volume /data/modules/es/logs:/usr/share/elasticsearch/logs \
--volume /data/modules/es/certs:/usr/share/elasticsearch/config/certs \
--volume /etc/localtime:/etc/localtime \
-e TERM=dumb \
-e ELASTIC_PASSWORD='elastic' \
-e ES_JAVA_OPTS="-Xms256m -Xmx256m" \
-e path.data=data \
-e path.logs=logs \
-e node.master=true \
-e node.data=true \
-e node.ingest=false \
-e node.attr.rack="0402-K03" \
-e gateway.recover_after_nodes=1 \
-e bootstrap.memory_lock=true \
-e bootstrap.system_call_filter=false \
-e indices.fielddata.cache.size="25%" \
elasticsearch:7.17.0
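Once all three nodes are up, the `_cluster/health` API reports whether the cluster has formed. The sketch below assumes curl is installed and that the elastic/elastic credentials set above are in use. The helper names cluster_status and wait_for_green are hypothetical. The status is extracted with sed, so jq is not required.

```bash
#!/usr/bin/env bash
# Reads a _cluster/health JSON response on stdin and prints its "status" value.
cluster_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Polls <url>/_cluster/health every 5 s until it reports green, up to <tries> times.
wait_for_green() {
  local url="$1" tries="${2:-30}" status i
  for ((i = 0; i < tries; i++)); do
    status="$(curl -s -u elastic:elastic "${url}/_cluster/health" | cluster_status)"
    [ "$status" = "green" ] && return 0
    sleep 5
  done
  return 1
}
```

Usage: `wait_for_green http://10.0.0.21:9200 && echo "cluster is green"`. A yellow status usually means replicas are not yet allocated, so it can be worth waiting a little longer before concluding that something is wrong.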

# Login username / password

elastic

elastic

Node-2

sudo yum update -y

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo yum install docker-ce -y;

docker -v;

mkdir -p /etc/docker;

cd /etc/docker;

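# daemon.json: contents not preserved in the source

mkdir -p /data/modules/es/config /data/modules/es/data /data/modules/es/logs /data/modules/es/certs

cd /data/modules/es/config

cat > elasticsearch.yml << EOF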

cluster.name: es-cluster

node.name: node-2

network.host: 0.0.0.0

network.publish_host: 10.0.0.22

http.port: 9200

transport.port: 9300

bootstrap.memory_lock: true

discovery.seed_hosts: ["10.0.0.21:9300","10.0.0.22:9300","10.0.0.23:9300"]

cluster.initial_master_nodes: ["10.0.0.21","10.0.0.22","10.0.0.23"]

http.cors.enabled: true

http.cors.allow-origin: "*"

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: "certs/elastic-certificates.p12"

xpack.security.transport.ssl.truststore.path: "certs/elastic-certificates.p12"

xpack.monitoring.collection.enabled: true

xpack.monitoring.exporters.my_local.type: local

xpack.monitoring.exporters.my_local.use_ingest: false

EOF

# Set ownership and open ports

chown es:es /data/modules -R

firewall-cmd --zone=public --add-port=9100/tcp --permanent;

firewall-cmd --zone=public --add-port=9200/tcp --permanent;

firewall-cmd --zone=public --add-port=9300/tcp --permanent;

firewall-cmd --zone=public --add-service=http --permanent;

firewall-cmd --zone=public --add-service=https --permanent;

firewall-cmd --reload;

firewall-cmd --list-all;

# Container network

systemctl restart docker

docker network create \
--driver=bridge \
--subnet=10.10.10.0/24 \
--ip-range=10.10.10.0/24 \
--gateway=10.10.10.254 \
es-net

## Create the container

docker run --name es \
-d --network=es-net \
--ip=10.10.10.22 \
--restart=always \
--publish 9200:9200 \
--publish 9300:9300 \
--privileged=true \
--ulimit nofile=655350 \
--ulimit memlock=-1 \
--memory=2G \
--memory-swap=-1 \
--volume /data/modules/es/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
--volume /data/modules/es/data:/usr/share/elasticsearch/data \
--volume /data/modules/es/logs:/usr/share/elasticsearch/logs \
--volume /data/modules/es/certs:/usr/share/elasticsearch/config/certs \
--volume /etc/localtime:/etc/localtime \
-e TERM=dumb \
-e ELASTIC_PASSWORD='elastic' \
-e ES_JAVA_OPTS="-Xms256m -Xmx256m" \
-e path.data=data \
-e path.logs=logs \
-e node.master=true \
-e node.data=true \
-e node.ingest=false \
-e node.attr.rack="0402-K03" \
-e gateway.recover_after_nodes=1 \
-e bootstrap.memory_lock=true \
-e bootstrap.system_call_filter=false \
-e indices.fielddata.cache.size="25%" \
elasticsearch:7.17.0

# Login username / password

elastic

elastic

Node-3

sudo yum update -y

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo yum install docker-ce -y;

docker -v;

mkdir -p /etc/docker;

cd /etc/docker;

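# daemon.json: contents not preserved in the source

mkdir -p /data/modules/es/config /data/modules/es/data /data/modules/es/logs /data/modules/es/certs

cd /data/modules/es/config

cat > elasticsearch.yml << EOF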

cluster.name: es-cluster

node.name: node-3

network.host: 0.0.0.0

network.publish_host: 10.0.0.23

http.port: 9200

transport.port: 9300

bootstrap.memory_lock: true

discovery.seed_hosts: ["10.0.0.21:9300","10.0.0.22:9300","10.0.0.23:9300"]

cluster.initial_master_nodes: ["10.0.0.21","10.0.0.22","10.0.0.23"]

http.cors.enabled: true

http.cors.allow-origin: "*"

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: "certs/elastic-certificates.p12"

xpack.security.transport.ssl.truststore.path: "certs/elastic-certificates.p12"

xpack.monitoring.collection.enabled: true

xpack.monitoring.exporters.my_local.type: local

xpack.monitoring.exporters.my_local.use_ingest: false

EOF

# Set ownership and open ports

chown es:es /data/modules -R

firewall-cmd --zone=public --add-port=9100/tcp --permanent;

firewall-cmd --zone=public --add-port=9200/tcp --permanent;

firewall-cmd --zone=public --add-port=9300/tcp --permanent;

firewall-cmd --zone=public --add-service=http --permanent;

firewall-cmd --zone=public --add-service=https --permanent;

firewall-cmd --reload;

firewall-cmd --list-all;

# Container network

systemctl restart docker

docker network create \
--driver=bridge \
--subnet=10.10.10.0/24 \
--ip-range=10.10.10.0/24 \
--gateway=10.10.10.254 \
es-net

## Create the container

docker run --name es \
-d --network=es-net \
--ip=10.10.10.23 \
--restart=always \
--publish 9200:9200 \
--publish 9300:9300 \
--privileged=true \
--ulimit nofile=655350 \
--ulimit memlock=-1 \
--memory=2G \
--memory-swap=-1 \
--volume /data/modules/es/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
--volume /data/modules/es/data:/usr/share/elasticsearch/data \
--volume /data/modules/es/logs:/usr/share/elasticsearch/logs \
--volume /data/modules/es/certs:/usr/share/elasticsearch/config/certs \
--volume /etc/localtime:/etc/localtime \
-e TERM=dumb \
-e ELASTIC_PASSWORD='elastic' \
-e ES_JAVA_OPTS="-Xms256m -Xmx256m" \
-e path.data=data \
-e path.logs=logs \
-e node.master=true \
-e node.data=true \
-e node.ingest=false \
-e node.attr.rack="0402-K03" \
-e gateway.recover_after_nodes=1 \
-e bootstrap.memory_lock=true \
-e bootstrap.system_call_filter=false \
-e indices.fielddata.cache.size="25%" \
elasticsearch:7.17.0

# Login username / password

elastic

elastic

Kibana

Master

# Install Docker

sudo yum update -y

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo yum install docker-ce -y;

docker -v;

mkdir -p /etc/docker;

cd /etc/docker;

# daemon.json contents and the Kibana container creation steps are not preserved in the source

cat > kibana.yml << EOF

server.name: kibana

server.port: 5601

i18n.locale: "zh-CN"

server.host: "0.0.0.0"

kibana.index: ".kibana"

server.shutdownTimeout: "5s"

server.publicBaseUrl: "http://10.0.0.11:5601"

monitoring.ui.container.elasticsearch.enabled: true

elasticsearch.hosts: ["http://10.0.0.21:9200","http://10.0.0.22:9200","http://10.0.0.23:9200"]

elasticsearch.username: "elastic"

elasticsearch.password: "elastic"

EOF

exit

docker restart kibana

Zookeeper+Kafka

Node-1

#Zookeeper-1

# Same Docker network as ELK (skip this if es-net already exists on this host)

docker network create \
--driver=bridge \
--subnet=10.10.10.0/24 \
--ip-range=10.10.10.0/24 \
--gateway=10.10.10.254 \
es-net

# Create the host directories to mount

mkdir -p /data/modules/zookeeper/data

mkdir -p /data/modules/zookeeper/logs

mkdir -p /data/modules/zookeeper/conf

# The value in myid must match this node's server.(id) in zoo.cfg

cd /data/modules/zookeeper/data

cat > myid << EOF

1

EOF
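Because the id in myid has to match a server.N entry, a quick check after zoo.cfg has been written in the next step can catch a mismatch before the container starts. Here is a sketch. The helper name check_myid is an assumption, not part of ZooKeeper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verifies that the id stored in a myid file has a
# matching "server.<id>=" line in the given zoo.cfg.
check_myid() {
  local myid_file="$1" cfg="$2" id
  # Strip blank lines and whitespace around the number
  id="$(tr -d '[:space:]' < "$myid_file")"
  if ! [[ "$id" =~ ^[0-9]+$ ]]; then
    echo "myid is not a number: '$id'" >&2
    return 1
  fi
  if grep -q "^server\.${id}=" "$cfg"; then
    echo "ok: myid ${id} matches $(grep "^server\.${id}=" "$cfg")"
  else
    echo "no server.${id} entry in ${cfg}" >&2
    return 1
  fi
}
```

Usage: `check_myid /data/modules/zookeeper/data/myid /data/modules/zookeeper/conf/zoo.cfg`.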

# Write the config file

cd /data/modules/zookeeper/conf/

cat > zoo.cfg << EOF

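#Heartbeat interval between cluster nodes, in milliseconds. All later time-based settings are integer multiples of this value (e.g. 4 means 8000 ms)

tickTime=2000

#Time limit for followers to connect to and complete the initial sync with the leader; here 10*2000 ms

initLimit=10

#If the leader gets no response from a follower within syncLimit*tickTime, that follower is considered down

syncLimit=5

#Data (snapshot) directory

dataDir=/data

#Transaction log directory

dataLogDir=/logs

#Client port

clientPort=2181

#Maximum concurrent connections from a single client IP; 0 means unlimited

maxClientCnxns=60

#Number of snapshots to retain

autopurge.snapRetainCount=3

#Interval in hours between purges of old snapshots and transaction logs; the default 0 disables purging

autopurge.purgeInterval=1

#server.A=B:C:D cluster settings:

#A is the server number (must match myid);

#B is the IP address;

#C is the port this server uses to communicate with the leader;

#D is the port used to elect a new leader if the leader goes down; both ports can be any free port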

server.1=0.0.0.0:2888:3888

server.2=10.0.0.22:2888:3888

server.3=10.0.0.23:2888:3888

EOF
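Each node's server list is identical except that the node's own entry uses 0.0.0.0, so that the container listens on all of its interfaces. A hypothetical helper that prints the three lines for a given node id could look like this. It assumes the 10.0.0.2N peer addresses and the 2888/3888 ports used in this guide.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the server.N lines of zoo.cfg as seen from node "self".
# The local entry uses 0.0.0.0; peers are assumed to be 10.0.0.21-23.
zk_server_lines() {
  local self="$1" n host
  for n in 1 2 3; do
    if [ "$n" -eq "$self" ]; then
      host="0.0.0.0"
    else
      host="10.0.0.2${n}"
    fi
    # Format: server.<id>=<host>:<leader/follower port>:<election port>
    echo "server.${n}=${host}:2888:3888"
  done
}
```

For Node-3, `zk_server_lines 3` reproduces the three server lines used in that node's zoo.cfg.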

# Open ports

firewall-cmd --permanent --zone=public --add-port=2181/tcp;

firewall-cmd --permanent --zone=public --add-port=2888/tcp;

firewall-cmd --permanent --zone=public --add-port=3888/tcp;

firewall-cmd --reload

# Pull the image

docker pull zookeeper:3.7.0

# Create the container

docker run -d \
-p 2181:2181 \
-p 2888:2888 \
-p 3888:3888 \
--network=es-net \
--name zookeeper \
--ip=10.10.10.31 \
--privileged=true \
--restart always \
-v /data/modules/zookeeper/data:/data \
-v /data/modules/zookeeper/logs:/logs \
-v /data/modules/zookeeper/data/myid:/data/myid \
-v /data/modules/zookeeper/conf/zoo.cfg:/conf/zoo.cfg \
zookeeper:3.7.0

# Check the containers

docker ps

# Install Kafka

docker pull wurstmeister/kafka:2.13-2.8.1

# Create the log directory

mkdir -p /data/modules/kafka/logs

# Create the container

docker run -d --name kafka \
--publish 9092:9092 \
--network=es-net \
--ip=10.10.10.41 \
--privileged=true \
--restart always \
--link zookeeper \
--env KAFKA_ZOOKEEPER_CONNECT=10.0.0.21:2181,10.0.0.22:2181,10.0.0.23:2181 \
--env KAFKA_ADVERTISED_HOST_NAME=10.0.0.21 \
--env KAFKA_ADVERTISED_PORT=9092 \
--env KAFKA_LOG_DIRS=/kafka/kafka-logs-1 \
-v /data/modules/kafka/logs:/kafka/kafka-logs-1 \
wurstmeister/kafka:2.13-2.8.1
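To confirm that all three brokers have registered with ZooKeeper, one option is to list /brokers/ids from inside the zookeeper container, for example with `docker exec zookeeper zkCli.sh -server localhost:2181 ls /brokers/ids`. This assumes zkCli.sh is on the image's PATH. The helper below, with the hypothetical name count_brokers, further assumes that the id list (such as `[1001, 1002, 1003]`) is the last line of that output, and counts its entries.

```bash
#!/usr/bin/env bash
# Hypothetical helper: counts the broker ids in the last line of
# `zkCli.sh ls /brokers/ids` output, e.g. "[1001, 1002, 1003]".
count_brokers() {
  # Keep the last line, drop brackets and spaces, then count comma-separated ids
  tail -n 1 | sed 's/[][ ]//g' | tr ',' '\n' | grep -c .
}
```

Usage: `docker exec zookeeper zkCli.sh -server localhost:2181 ls /brokers/ids | count_brokers`. With all three brokers up, the count should be 3.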

# Pull the image

docker pull sheepkiller/kafka-manager:alpine

#kafka-manager

docker run -itd --restart=always \
--name=kafka-manager \
-p 9000:9000 \
--network=es-net \
--ip=10.10.10.51 \
--privileged=true \
-e ZK_HOSTS="10.0.0.21:2181,10.0.0.22:2181,10.0.0.23:2181" \
sheepkiller/kafka-manager:alpine

Node-2

#Zookeeper-2

# Same Docker network as ELK (skip this if es-net already exists on this host)

docker network create \
--driver=bridge \
--subnet=10.10.10.0/24 \
--ip-range=10.10.10.0/24 \
--gateway=10.10.10.254 \
es-net

# Create the host directories to mount

mkdir -p /data/modules/zookeeper/data

mkdir -p /data/modules/zookeeper/logs

mkdir -p /data/modules/zookeeper/conf

# The value in myid must match this node's server.(id) in zoo.cfg

cd /data/modules/zookeeper/data

cat > myid << EOF

2

EOF

# Write the config file

cd /data/modules/zookeeper/conf/

cat > zoo.cfg << EOF

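#Heartbeat interval between cluster nodes, in milliseconds. All later time-based settings are integer multiples of this value (e.g. 4 means 8000 ms)

tickTime=2000

#Time limit for followers to connect to and complete the initial sync with the leader; here 10*2000 ms

initLimit=10

#If the leader gets no response from a follower within syncLimit*tickTime, that follower is considered down

syncLimit=5

#Data (snapshot) directory

dataDir=/data

#Transaction log directory

dataLogDir=/logs

#Client port

clientPort=2181

#Maximum concurrent connections from a single client IP; 0 means unlimited

maxClientCnxns=60

#Number of snapshots to retain

autopurge.snapRetainCount=3

#Interval in hours between purges of old snapshots and transaction logs; the default 0 disables purging

autopurge.purgeInterval=1

#server.A=B:C:D cluster settings:

#A is the server number (must match myid);

#B is the IP address;

#C is the port this server uses to communicate with the leader;

#D is the port used to elect a new leader if the leader goes down; both ports can be any free port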

server.1=10.0.0.21:2888:3888

server.2=0.0.0.0:2888:3888

server.3=10.0.0.23:2888:3888

EOF
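The timeouts in zoo.cfg are multiples of tickTime. With tickTime=2000, initLimit=10 gives 20000 ms and syncLimit=5 gives 10000 ms. The small sketch below reads those three keys from a zoo.cfg and prints the derived values. The helper name zk_timeouts is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the effective initLimit/syncLimit timeouts (ms)
# derived from tickTime in the given zoo.cfg.
zk_timeouts() {
  local cfg="$1" tick init sync
  tick="$(sed -n 's/^tickTime=//p' "$cfg")"
  init="$(sed -n 's/^initLimit=//p' "$cfg")"
  sync="$(sed -n 's/^syncLimit=//p' "$cfg")"
  echo "initLimit timeout: $((init * tick)) ms"
  echo "syncLimit timeout: $((sync * tick)) ms"
}
```

Usage: `zk_timeouts /data/modules/zookeeper/conf/zoo.cfg`.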

# Open ports

firewall-cmd --permanent --zone=public --add-port=2181/tcp;

firewall-cmd --permanent --zone=public --add-port=2888/tcp;

firewall-cmd --permanent --zone=public --add-port=3888/tcp;

firewall-cmd --reload

# Pull the image

docker pull zookeeper:3.7.0

# Create the container

docker run -d \
-p 2181:2181 \
-p 2888:2888 \
-p 3888:3888 \
--network=es-net \
--name zookeeper \
--ip=10.10.10.32 \
--privileged=true \
--restart always \
-v /data/modules/zookeeper/data:/data \
-v /data/modules/zookeeper/logs:/logs \
-v /data/modules/zookeeper/data/myid:/data/myid \
-v /data/modules/zookeeper/conf/zoo.cfg:/conf/zoo.cfg \
zookeeper:3.7.0

# Check the containers

docker ps

# Install Kafka

docker pull wurstmeister/kafka:2.13-2.8.1

# Create the log directory

mkdir -p /data/modules/kafka/logs

# Create the container

docker run -d --name kafka \
--publish 9092:9092 \
--network=es-net \
--ip=10.10.10.42 \
--privileged=true \
--restart always \
--link zookeeper \
--env KAFKA_ZOOKEEPER_CONNECT=10.0.0.21:2181,10.0.0.22:2181,10.0.0.23:2181 \
--env KAFKA_ADVERTISED_HOST_NAME=10.0.0.22 \
--env KAFKA_ADVERTISED_PORT=9092 \
--env KAFKA_LOG_DIRS=/kafka/kafka-logs-1 \
-v /data/modules/kafka/logs:/kafka/kafka-logs-1 \
wurstmeister/kafka:2.13-2.8.1

# Pull the image

docker pull sheepkiller/kafka-manager:alpine

#kafka-manager

docker run -itd --restart=always \
--name=kafka-manager \
-p 9000:9000 \
--network=es-net \
--ip=10.10.10.52 \
--privileged=true \
-e ZK_HOSTS="10.0.0.21:2181,10.0.0.22:2181,10.0.0.23:2181" \
sheepkiller/kafka-manager:alpine

Node-3

#Zookeeper-3

# Same Docker network as ELK (skip this if es-net already exists on this host)

docker network create \
--driver=bridge \
--subnet=10.10.10.0/24 \
--ip-range=10.10.10.0/24 \
--gateway=10.10.10.254 \
es-net

# Create the host directories to mount

mkdir -p /data/modules/zookeeper/data

mkdir -p /data/modules/zookeeper/logs

mkdir -p /data/modules/zookeeper/conf

# The value in myid must match this node's server.(id) in zoo.cfg

cd /data/modules/zookeeper/data

cat > myid << EOF

3

EOF

# Write the config file

cd /data/modules/zookeeper/conf/

cat > zoo.cfg << EOF

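#Heartbeat interval between cluster nodes, in milliseconds. All later time-based settings are integer multiples of this value (e.g. 4 means 8000 ms)

tickTime=2000

#Time limit for followers to connect to and complete the initial sync with the leader; here 10*2000 ms

initLimit=10

#If the leader gets no response from a follower within syncLimit*tickTime, that follower is considered down

syncLimit=5

#Data (snapshot) directory

dataDir=/data

#Transaction log directory

dataLogDir=/logs

#Client port

clientPort=2181

#Maximum concurrent connections from a single client IP; 0 means unlimited

maxClientCnxns=60

#Number of snapshots to retain

autopurge.snapRetainCount=3

#Interval in hours between purges of old snapshots and transaction logs; the default 0 disables purging

autopurge.purgeInterval=1

#server.A=B:C:D cluster settings:

#A is the server number (must match myid);

#B is the IP address;

#C is the port this server uses to communicate with the leader;

#D is the port used to elect a new leader if the leader goes down; both ports can be any free port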

server.1=10.0.0.21:2888:3888

server.2=10.0.0.22:2888:3888

server.3=0.0.0.0:2888:3888

EOF

# Open ports

firewall-cmd --permanent --zone=public --add-port=2181/tcp;

firewall-cmd --permanent --zone=public --add-port=2888/tcp;

firewall-cmd --permanent --zone=public --add-port=3888/tcp;

firewall-cmd --reload

# Pull the image

docker pull zookeeper:3.7.0

# Create the container

docker run -d \
-p 2181:2181 \
-p 2888:2888 \
-p 3888:3888 \
--network=es-net \
--name zookeeper \
--ip=10.10.10.33 \
--privileged=true \
--restart always \
-v /data/modules/zookeeper/data:/data \
-v /data/modules/zookeeper/logs:/logs \
-v /data/modules/zookeeper/data/myid:/data/myid \
-v /data/modules/zookeeper/conf/zoo.cfg:/conf/zoo.cfg \
zookeeper:3.7.0

# Check the containers

docker ps

# Install Kafka

docker pull wurstmeister/kafka:2.13-2.8.1

# Create the log directory

mkdir -p /data/modules/kafka/logs

# Create the container

docker run -d --name kafka \
--publish 9092:9092 \
--network=es-net \
--ip=10.10.10.43 \
--privileged=true \
--restart always \
--link zookeeper \
--env KAFKA_ZOOKEEPER_CONNECT=10.0.0.21:2181,10.0.0.22:2181,10.0.0.23:2181 \
--env KAFKA_ADVERTISED_HOST_NAME=10.0.0.23 \
--env KAFKA_ADVERTISED_PORT=9092 \
--env KAFKA_LOG_DIRS=/kafka/kafka-logs-1 \
-v /data/modules/kafka/logs:/kafka/kafka-logs-1 \
wurstmeister/kafka:2.13-2.8.1

# Pull the image

docker pull sheepkiller/kafka-manager:alpine

#kafka-manager

docker run -itd --restart=always \
--name=kafka-manager \
-p 9000:9000 \
--network=es-net \
--ip=10.10.10.53 \
--privileged=true \
-e ZK_HOSTS="10.0.0.21:2181,10.0.0.22:2181,10.0.0.23:2181" \
sheepkiller/kafka-manager:alpine

Logstash

Master

# Install Docker

sudo yum update -y

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo yum install docker-ce -y;

docker -v;

mkdir -p /etc/docker;

cd /etc/docker;

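# daemon.json: contents not preserved in the source

mkdir -p /data/modules/logstash/conf/conf.d

cd /data/modules/logstash/conf

cat > logstash.yml << EOF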

http.host: "0.0.0.0"

path.config: /usr/share/logstash/config/conf.d/*.conf

path.logs: /usr/share/logstash/logs

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: elastic

xpack.monitoring.elasticsearch.password: elastic

xpack.monitoring.elasticsearch.hosts: [ "http://10.0.0.21:9200","http://10.0.0.22:9200","http://10.0.0.23:9200" ]

EOF

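# conf.d is mounted as a directory; the pipeline file name only needs to end in .conf (logstash.conf is an arbitrary choice)

mkdir -p conf.d

cat > conf.d/logstash.conf << 'EOF'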

input {

beats {

port => 5044

}

file {

#Nginx access log path

path => "/usr/local/nginx/logs/access.log"

start_position => "beginning"

}

}

filter {

if [path] =~ "access" {

mutate { replace => { "type" => "nginx_access" } }

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

date {

#Timestamp (nginx $time_local format)

match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]

}

}

output {

elasticsearch {

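#Target Elasticsearch nodes (security is enabled, so credentials are required)

hosts => ["10.0.0.21:9200","10.0.0.22:9200","10.0.0.23:9200"]

user => "elastic"

password => "elastic"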

}

stdout { codec => rubydebug }

}

EOF
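The date filter pattern `dd/MMM/yyyy:HH:mm:ss Z` matches nginx's default `$time_local` format, for example `10/Oct/2000:13:55:36 -0700`, and the filter stores the parsed value in `@timestamp` as UTC. To check what a given log timestamp should become, here is a sketch using GNU date. The helper name nginx_time_to_utc is hypothetical, and the sed rewrite simply turns the nginx format into one GNU date can parse.

```bash
#!/usr/bin/env bash
# Hypothetical helper: converts an nginx $time_local value such as
# "10/Oct/2000:13:55:36 -0700" into a UTC ISO-8601 timestamp. Requires GNU date.
nginx_time_to_utc() {
  local normalized
  # "10/Oct/2000:13:55:36 -0700" -> "10 Oct 2000 13:55:36 -0700"
  normalized="$(printf '%s' "$1" | sed 's#/# #g; s/:/ /')"
  date -u -d "$normalized" +%Y-%m-%dT%H:%M:%SZ
}
```

Usage: `nginx_time_to_utc '10/Oct/2000:13:55:36 -0700'`. The -0700 offset means the UTC result is seven hours later than the local time shown.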

# Create the container network

docker network create \
--driver=bridge \
--subnet=10.10.10.0/24 \
--ip-range=10.10.10.0/24 \
--gateway=10.10.10.254 \
elk-net

# Pull the image

docker pull elastic/logstash:7.17.0

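# Start the container (Logstash reads its heap size from LS_JAVA_OPTS; the nginx log mount lets the file input read access.log and assumes Nginx runs on this host)

docker run -dit --name=logstash \
--network=elk-net \
--ip=10.10.10.12 \
--publish 5044:5044 \
--restart=always --privileged=true \
-e LS_JAVA_OPTS="-Xms512m -Xmx512m" \
-v /data/modules/logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml \
-v /data/modules/logstash/conf/conf.d/:/usr/share/logstash/config/conf.d/ \
-v /usr/local/nginx/logs:/usr/local/nginx/logs:ro \
elastic/logstash:7.17.0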

Filebeat

client

Filebeat

mysql

#!/bin/bash

MYSQL_V=5.7.37

TMP_DIR=/tmp

INSTALL_DIR=/usr/local

function install_mysql(){

MYSQL_BASE=/usr/local/mysql

cd $TMP_DIR

file="mysql-5.7.37-linux-glibc2.12-x86_64.tar.gz"

if [ ! -f "$file" ]; then

echo "File not found, downloading..."

yum install -y wget && wget -c "https://cdn.mysql.com//Downloads/MySQL-5.7/$file"

#exit 0

fi

echo "Download complete, extracting...";

tar -zxvf "$file"

mv mysql-5.7.37-linux-glibc2.12-x86_64 /usr/local/mysql

cd /usr/local/mysql

echo "Creating the mysql user and group"

userdel mysql;

groupadd mysql;

useradd -r -g mysql mysql;

mkdir -p /data/mysql;

chown mysql:mysql -R /data/mysql;

cd /etc

echo "Writing config files"

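# my.cnf contents are not preserved in the source

cd /usr/lib/systemd/system

# Quoted 'EOF' keeps $MAINPID literal for systemd instead of letting the shell expand it

cat > mysql.service << 'EOF'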

[Unit]

Description=Mysql

After=syslog.target network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=/data/mysql/mysql.pid

ExecStart=/usr/local/mysql/support-files/mysql.server start

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=false

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl start mysql

systemctl enable mysql

systemctl status mysql

#cp /usr/local/mysql/support-files/mysql.server /etc/rc.d/init.d/mysqld;

#chmod +x /etc/init.d/mysqld;

#chkconfig --add mysqld;

#chkconfig --list;

firewall-cmd --zone=public --add-port=3306/tcp --permanent;

firewall-cmd --reload;

firewall-cmd --list-all;

echo "=========> MySQL info <========="

echo " Version : 5.7.37 "

echo " Password : [email protected] "

echo " Port : 3306 "

echo " BASEDIR : /usr/local/mysql "

echo " DATADIR : /data/mysql "

}

install_mysql
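The systemd unit above contains `$MAINPID`, which systemd has to receive literally. With an unquoted heredoc delimiter (`<< EOF`), the shell expands such variables while it writes the file, and because the variable is normally unset, the result is an empty string. Quoting the delimiter (`<< 'EOF'`) keeps the text verbatim. The same applies to the `$PATH` and `$REDIS_HOME` lines appended to /etc/profile in the Redis script below. Here is a minimal demonstration:

```bash
#!/usr/bin/env bash
# Make sure the variable is unset, as it is in a normal install shell
unset MAINPID

# Unquoted delimiter: $MAINPID is expanded (to nothing) while the text is produced
unquoted="$(cat << EOF
ExecReload=/bin/kill -s HUP $MAINPID
EOF
)"

# Quoted delimiter: the text is kept verbatim, so systemd would see $MAINPID
quoted="$(cat << 'EOF'
ExecReload=/bin/kill -s HUP $MAINPID
EOF
)"

printf 'unquoted: %s\nquoted:   %s\n' "$unquoted" "$quoted"
```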

nginx

#!/bin/bash

# Nginx version

NGINX_V=1.20.0

# Nginx download directory

TMP_DIR=/tmp

# Nginx install directory

INSTALL_DIR=/usr/local

function install_nginx() {

# Install build dependencies

yum -y install gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel

# Download Nginx

cd ${TMP_DIR}

yum install -y wget && wget -c http://nginx.org/download/nginx-${NGINX_V}.tar.gz

# Extract the source

tar -zxvf ${TMP_DIR}/nginx-${NGINX_V}.tar.gz

mv nginx-${NGINX_V} nginx;cd nginx;

# Configure the build

./configure --prefix=/usr/local/nginx --with-http_ssl_module --with-http_stub_status_module

sleep 2s

# Compile and install

make && make install

# Run as a systemd service

cd /usr/lib/systemd/system;

cat > nginx.service <

redis

#!/bin/bash

sudo yum install net-tools -y

IP=`ifconfig -a|grep inet|grep -v 127.0.0.1|grep -v inet6|awk '{print $2}'|tr -d "addr:"|grep "10."`

REDIS_PASSWD=123456

# Build toolchain

yum -y install centos-release-scl devtoolset-9-gcc

yum -y install devtoolset-9-gcc-c++ devtoolset-9-binutils

source /opt/rh/devtoolset-9/enable

echo "source /opt/rh/devtoolset-9/enable" >> /etc/profile

gcc -v

install_redis(){

# Common download directory

if [ ! -d "/data/software" ]; then

mkdir -p /data/software/

fi

# Download the source

cd /data/software/

file="redis-6.2.6.tar.gz"

if [ ! -f $file ]; then

yum install -y wget && wget http://download.redis.io/releases/redis-6.2.6.tar.gz

#exit 0

fi

# Extract and build

cd /data/software

tar -zxvf redis-6.2.6.tar.gz -C /usr/local/

cd /usr/local/

mv redis-6.2.6 redis

cd redis

sudo make

sleep 2s

sudo make PREFIX=/usr/local/redis install

mkdir /usr/local/redis/etc/

cp /usr/local/redis/redis.conf /usr/local/redis/redis.conf.bak

# Write the config

cd /usr/local/redis

cat > redis.conf << EOF

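# Run in the background: no or yes

daemonize no

# Port (default 6379)

port 6379

# Log file path

logfile "/var/log/redis.log"

# To allow external access, change bind below (e.g. to 0.0.0.0)

bind 127.0.0.1

# Password (taken from REDIS_PASSWD at the top of the script)

requirepass ${REDIS_PASSWD}

protected-mode no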

EOF

# Environment variables

cd /etc/

cat >> profile << 'EOF'

export REDIS_HOME=/usr/local/redis

export PATH=$PATH:$REDIS_HOME/bin/

EOF

source /etc/profile

# Open the port

firewall-cmd --permanent --zone=public --add-port=6379/tcp

firewall-cmd --reload

# Start Redis

cd /usr/local/redis/bin

ln -s /usr/local/redis/bin/redis-server /usr/bin/redis-server

ln -s /usr/local/redis/bin/redis-cli /usr/bin/redis-cli

redis-server /usr/local/redis/redis.conf

}

install_redis
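Redis reads redis.conf from top to bottom and uses the last processed line as the value of a directive. So when a single-valued directive such as protected-mode appears more than once, the last occurrence is the one that takes effect. The hypothetical helper below, effective_setting, shows which value will be used. It reports only the first argument of the directive, so it is not suited to multi-address bind lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the value Redis will use for a single-valued
# directive, i.e. the first argument of its last occurrence in the file.
effective_setting() {
  local file="$1" key="$2"
  awk -v k="$key" '$1 == k { v = $2 } END { print v }' "$file"
}
```

Usage: `effective_setting /usr/local/redis/redis.conf protected-mode`.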