业务数据采集平台搭建

业务数据采集平台搭建

- 业务数据采集模块

-

- 业务数据同步概述

-

- 数据同步策略概述

- 数据同步策略选择

- 数据同步工具概述

- 数据同步工具部署

- 全量表数据同步

-

- 数据通道

- DataX 配置文件

- DataX 配置文件生成脚本

- 测试生成的 DataX 配置文件

- 全量表数据同步脚本

- 全量表同步总结

- 增量表数据同步

-

- 数据通道

- Maxwell 配置

- Flume 配置

- 增量表首日全量同步

- 增量表同步总结

- 数仓环境准备

-

- Hive安装部署

业务数据采集模块

业务数据同步概述

数据同步策略概述

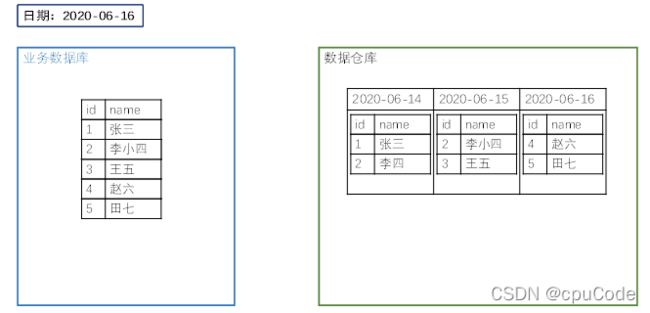

每日定时从业务数据库中抽取数据,传输到数据仓库中,之后再对数据进行分析统计

为保证统计结果的正确性,需要保证数据仓库中的数据与业务数据库是同步,离线数仓的计算周期通常为天,所以数据同步周期为天 ( 每天同步一次 )

数据的同步策略 :

- 全量同步

- 增量同步

全量同步 : 每天都将业务数据库中的全部数据同步一份到数据仓库,保证两侧数据同步的最简单的方式

增量同步 : 每天只将业务数据中的新增及变化数据同步到数据仓库。采用每日增量同步的表 ( 首日一次全量同步 )

数据同步策略选择

两种策略对比 :

| 同步策略 | 优点 | 缺点 |

|---|---|---|

| 全量同步 | 逻辑简单 | 在某些情况下效率较低。例如某张表数据量较大,但是每天数据的变化比例很低,若对其采用每日全量同步,则会重复同步和存储大量相同的数据。 |

| 增量同步 | 效率高,无需同步和存储重复数据 | 逻辑复杂,需要将每日的新增及变化数据同原来的数据进行整合,才能使用 |

结论:业务表数据量大,且每天数据变化低 ( 增量同步 ) ,否则 全量同步

各表同步策略:

数据同步工具概述

数据同步工具 :

- 离线、批量同步 : 基于Select查询 , DataX、Sqoop

- 实时流式同步 : 基于数据库数据变更日志 , Maxwell、Canal

| 增量同步方案 | DataX/Sqoop | Maxwell/Canal |

|---|---|---|

| 对数据库的要求 | 数据表中存在create_time、update_time等字段,然后根据这些字段获取变更数据 | 要求数据库记录变更操作,如 : MySQL开启 binlog |

| 数据的中间状态 | 获取最后一个状态,中间状态无法获取 | 获取变更数据的所有中间状态 |

全量同步 : DataX

增量同步 : Maxwell

数据同步工具部署

DataX

Maxwell

全量表数据同步

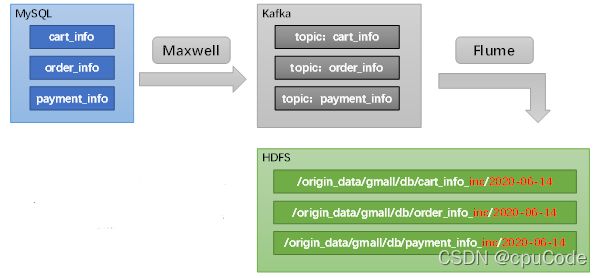

数据通道

全量表数据由 DataX 从 MySQL 业务数据库直接同步到 HDFS

目标路径中表名须包含后缀 full , 表示该表为全量同步

目标路径中包含一层日期 , 用以对不同天的数据进行区分

DataX 配置文件

每张全量表编写一个 DataX 的 json 配置文件

栗子 : activity_info

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": [

"id",

"activity_name",

"activity_type",

"activity_desc",

"start_time",

"end_time",

"create_time"

],

"connection": [

{

"jdbcUrl": [

"jdbc:mysql://cpucode101:3306/gmall"

],

"table": [

"activity_info"

]

}

],

"password": "123456",

"splitPk": "",

"username": "root"

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"column": [

{

"name": "id",

"type": "bigint"

},

{

"name": "activity_name",

"type": "string"

},

{

"name": "activity_type",

"type": "string"

},

{

"name": "activity_desc",

"type": "string"

},

{

"name": "start_time",

"type": "string"

},

{

"name": "end_time",

"type": "string"

},

{

"name": "create_time",

"type": "string"

}

],

"compress": "gzip",

"defaultFS": "hdfs://cpucode101:8020",

"fieldDelimiter": "\t",

"fileName": "activity_info",

"fileType": "text",

"path": "${targetdir}",

"writeMode": "append"

}

}

}

],

"setting": {

"speed": {

"channel": 1

}

}

}

}

由于目标路径包含一层日期,用于对不同天的数据加以区分,故 path 参数并未写死,需在提交任务时通过参数动态传入,参数名称为 targetdir

DataX 配置文件生成脚本

DataX配置文件批量生成脚本

gen_import_config.py 脚本

vim gen_import_config.py

# coding=utf-8

import json

import getopt

import os

import sys

import MySQLdb

#MySQL相关配置,需根据实际情况作出修改

mysql_host = "cpucode101"

mysql_port = "3306"

mysql_user = "root"

mysql_passwd = "123456"

#HDFS NameNode相关配置,需根据实际情况作出修改

hdfs_nn_host = "cpucode101"

hdfs_nn_port = "8020"

#生成配置文件的目标路径,可根据实际情况作出修改

output_path = "/opt/module/datax/job/import"

#获取mysql连接

def get_connection():

return MySQLdb.connect(host=mysql_host, port=int(mysql_port), user=mysql_user, passwd=mysql_passwd)

#获取表格的元数据 包含列名和数据类型

def get_mysql_meta(database, table):

connection = get_connection()

cursor = connection.cursor()

sql = "SELECT COLUMN_NAME,DATA_TYPE from information_schema.COLUMNS WHERE TABLE_SCHEMA=%s AND TABLE_NAME=%s ORDER BY ORDINAL_POSITION"

cursor.execute(sql, [database, table])

fetchall = cursor.fetchall()

cursor.close()

connection.close()

return fetchall

#获取mysql表的列名

def get_mysql_columns(database, table):

return map(lambda x: x[0], get_mysql_meta(database, table))

#将获取的元数据中 mysql 的数据类型转换为 hive 的数据类型 写入到 hdfswriter 中

def get_hive_columns(database, table):

def type_mapping(mysql_type):

mappings = {

"bigint": "bigint",

"int": "bigint",

"smallint": "bigint",

"tinyint": "bigint",

"decimal": "string",

"double": "double",

"float": "float",

"binary": "string",

"char": "string",

"varchar": "string",

"datetime": "string",

"time": "string",

"timestamp": "string",

"date": "string",

"text": "string"

}

return mappings[mysql_type]

meta = get_mysql_meta(database, table)

return map(lambda x: {"name": x[0], "type": type_mapping(x[1].lower())}, meta)

#生成json文件

def generate_json(source_database, source_table):

job = {

"job": {

"setting": {

"speed": {

"channel": 3

},

"errorLimit": {

"record": 0,

"percentage": 0.02

}

},

"content": [{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": mysql_user,

"password": mysql_passwd,

"column": get_mysql_columns(source_database, source_table),

"splitPk": "",

"connection": [{

"table": [source_table],

"jdbcUrl": ["jdbc:mysql://" + mysql_host + ":" + mysql_port + "/" + source_database]

}]

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"defaultFS": "hdfs://" + hdfs_nn_host + ":" + hdfs_nn_port,

"fileType": "text",

"path": "${targetdir}",

"fileName": source_table,

"column": get_hive_columns(source_database, source_table),

"writeMode": "append",

"fieldDelimiter": "\t",

"compress": "gzip"

}

}

}]

}

}

if not os.path.exists(output_path):

os.makedirs(output_path)

with open(os.path.join(output_path, ".".join([source_database, source_table, "json"])), "w") as f:

json.dump(job, f)

def main(args):

source_database = ""

source_table = ""

options, arguments = getopt.getopt(args, '-d:-t:', ['sourcedb=', 'sourcetbl='])

for opt_name, opt_value in options:

if opt_name in ('-d', '--sourcedb'):

source_database = opt_value

if opt_name in ('-t', '--sourcetbl'):

source_table = opt_value

generate_json(source_database, source_table)

if __name__ == '__main__':

main(sys.argv[1:])

安装 Python Mysql 驱动

sudo yum install -y MySQL-python

脚本使用说明

python gen_import_config.py -d database -t table

- -d : 数据库名

- -t : 表名

创建 gen_import_config.sh 脚本

vim gen_import_config.sh

#!/bin/bash

python ~/bin/gen_import_config.py -d gmall -t activity_info

python ~/bin/gen_import_config.py -d gmall -t activity_rule

python ~/bin/gen_import_config.py -d gmall -t base_category1

python ~/bin/gen_import_config.py -d gmall -t base_category2

python ~/bin/gen_import_config.py -d gmall -t base_category3

python ~/bin/gen_import_config.py -d gmall -t base_dic

python ~/bin/gen_import_config.py -d gmall -t base_province

python ~/bin/gen_import_config.py -d gmall -t base_region

python ~/bin/gen_import_config.py -d gmall -t base_trademark

python ~/bin/gen_import_config.py -d gmall -t cart_info

python ~/bin/gen_import_config.py -d gmall -t coupon_info

python ~/bin/gen_import_config.py -d gmall -t sku_attr_value

python ~/bin/gen_import_config.py -d gmall -t sku_info

python ~/bin/gen_import_config.py -d gmall -t sku_sale_attr_value

python ~/bin/gen_import_config.py -d gmall -t spu_info

gen_import_config.sh 脚本增加执行权限

chmod 777 gen_import_config.sh

执行 gen_import_config.sh 脚本,生成配置文件

gen_import_config.sh

配置文件 :

ll /opt/module/datax/job/import/

测试生成的 DataX 配置文件

全量表数据同步脚本

全量表数据同步脚本 mysql_to_hdfs_full.sh

vim mysql_to_hdfs_full.sh

#!/bin/bash

DATAX_HOME=/opt/module/datax

# 如果传入日期则do_date等于传入的日期,否则等于前一天日期

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

#处理目标路径,此处的处理逻辑是,

#如果目标路径不存在,则创建;

#若存在,则清空,目的是保证同步任务可重复执行

handle_targetdir() {

hadoop fs -test -e $1

if [[ $? -eq 1 ]]; then

echo "路径$1不存在,正在创建......"

hadoop fs -mkdir -p $1

else

echo "路径$1已经存在"

fs_count=$(hadoop fs -count $1)

content_size=$(echo $fs_count | awk '{print $3}')

if [[ $content_size -eq 0 ]]; then

echo "路径$1为空"

else

echo "路径$1不为空,正在清空......"

hadoop fs -rm -r -f $1/*

fi

fi

}

#数据同步

import_data() {

datax_config=$1

target_dir=$2

handle_targetdir $target_dir

python $DATAX_HOME/bin/datax.py -p"-Dtargetdir=$target_dir" $datax_config

}

case $1 in

"activity_info")

import_data /opt/module/datax/job/import/gmall.activity_info.json /origin_data/gmall/db/activity_info_full/$do_date

;;

"activity_rule")

import_data /opt/module/datax/job/import/gmall.activity_rule.json /origin_data/gmall/db/activity_rule_full/$do_date

;;

"base_category1")

import_data /opt/module/datax/job/import/gmall.base_category1.json /origin_data/gmall/db/base_category1_full/$do_date

;;

"base_category2")

import_data /opt/module/datax/job/import/gmall.base_category2.json /origin_data/gmall/db/base_category2_full/$do_date

;;

"base_category3")

import_data /opt/module/datax/job/import/gmall.base_category3.json /origin_data/gmall/db/base_category3_full/$do_date

;;

"base_dic")

import_data /opt/module/datax/job/import/gmall.base_dic.json /origin_data/gmall/db/base_dic_full/$do_date

;;

"base_province")

import_data /opt/module/datax/job/import/gmall.base_province.json /origin_data/gmall/db/base_province_full/$do_date

;;

"base_region")

import_data /opt/module/datax/job/import/gmall.base_region.json /origin_data/gmall/db/base_region_full/$do_date

;;

"base_trademark")

import_data /opt/module/datax/job/import/gmall.base_trademark.json /origin_data/gmall/db/base_trademark_full/$do_date

;;

"cart_info")

import_data /opt/module/datax/job/import/gmall.cart_info.json /origin_data/gmall/db/cart_info_full/$do_date

;;

"coupon_info")

import_data /opt/module/datax/job/import/gmall.coupon_info.json /origin_data/gmall/db/coupon_info_full/$do_date

;;

"sku_attr_value")

import_data /opt/module/datax/job/import/gmall.sku_attr_value.json /origin_data/gmall/db/sku_attr_value_full/$do_date

;;

"sku_info")

import_data /opt/module/datax/job/import/gmall.sku_info.json /origin_data/gmall/db/sku_info_full/$do_date

;;

"sku_sale_attr_value")

import_data /opt/module/datax/job/import/gmall.sku_sale_attr_value.json /origin_data/gmall/db/sku_sale_attr_value_full/$do_date

;;

"spu_info")

import_data /opt/module/datax/job/import/gmall.spu_info.json /origin_data/gmall/db/spu_info_full/$do_date

;;

"all")

import_data /opt/module/datax/job/import/gmall.activity_info.json /origin_data/gmall/db/activity_info_full/$do_date

import_data /opt/module/datax/job/import/gmall.activity_rule.json /origin_data/gmall/db/activity_rule_full/$do_date

import_data /opt/module/datax/job/import/gmall.base_category1.json /origin_data/gmall/db/base_category1_full/$do_date

import_data /opt/module/datax/job/import/gmall.base_category2.json /origin_data/gmall/db/base_category2_full/$do_date

import_data /opt/module/datax/job/import/gmall.base_category3.json /origin_data/gmall/db/base_category3_full/$do_date

import_data /opt/module/datax/job/import/gmall.base_dic.json /origin_data/gmall/db/base_dic_full/$do_date

import_data /opt/module/datax/job/import/gmall.base_province.json /origin_data/gmall/db/base_province_full/$do_date

import_data /opt/module/datax/job/import/gmall.base_region.json /origin_data/gmall/db/base_region_full/$do_date

import_data /opt/module/datax/job/import/gmall.base_trademark.json /origin_data/gmall/db/base_trademark_full/$do_date

import_data /opt/module/datax/job/import/gmall.cart_info.json /origin_data/gmall/db/cart_info_full/$do_date

import_data /opt/module/datax/job/import/gmall.coupon_info.json /origin_data/gmall/db/coupon_info_full/$do_date

import_data /opt/module/datax/job/import/gmall.sku_attr_value.json /origin_data/gmall/db/sku_attr_value_full/$do_date

import_data /opt/module/datax/job/import/gmall.sku_info.json /origin_data/gmall/db/sku_info_full/$do_date

import_data /opt/module/datax/job/import/gmall.sku_sale_attr_value.json /origin_data/gmall/db/sku_sale_attr_value_full/$do_date

import_data /opt/module/datax/job/import/gmall.spu_info.json /origin_data/gmall/db/spu_info_full/$do_date

;;

esac

mysql_to_hdfs_full.sh 增加执行权限

chmod 777 mysql_to_hdfs_full.sh

测试同步脚本

mysql_to_hdfs_full.sh all 2020-06-14

检查同步结果

查看HDFS目表路径是否出现全量表数据,全量表共15张

全量表同步总结

全量表同步逻辑比较简单,只需每日执行全量表数据同步脚本 mysql_to_hdfs_full.sh

增量表数据同步

数据通道

目标路径中表名须包含后缀 inc,为增量同步

目标路径中包含一层日期,用以对不同天的数据进行区分

Maxwell 配置

有 cart_info 、comment_info 等共计13张表需进行增量同步,Maxwell 同步 binlog 中的所有表的数据变更记录

为方便下游使用数据, Maxwell 将不同表的数据发往不同的 Kafka Topic

修改 Maxwell 配置文件 config.properties

vim /opt/module/maxwell/config.properties

log_level=info

producer=kafka

kafka.bootstrap.servers=cpucode101:9092,cpucode102:9092

#kafka topic动态配置

kafka_topic=%{table}

# mysql login info

host=cpucode101

user=maxwell

password=maxwell

jdbc_options=useSSL=false&serverTimezone=Asia/Shanghai

#表过滤,只同步特定的13张表

filter= include:gmall.cart_info,include:gmall.comment_info,include:gmall.coupon_use,include:gmall.favor_info,include:gmall.order_detail,include:gmall.order_detail_activity,include:gmall.order_detail_coupon,include:gmall.order_info,include:gmall.order_refund_info,include:gmall.order_status_log,include:gmall.payment_info,include:gmall.refund_payment,include:gmall.user_info

重新启动 Maxwell

mxw.sh restart

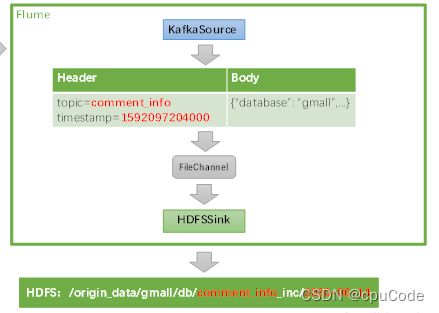

Flume 配置

Flume 需要将 Kafka 中各 topic 的数据传输到 HDFS,故其需选用 KafkaSource 以及 HDFSSink ,Channe 选用 FileChanne

KafkaSource 需订阅 Kafka 中的 13 个 topic,HDFSSink 需要将不同 topic 的数据写到不同的路径,并且路径中应当包含一层日期,用于区分每天的数据

a1.sources = r1

a1.channels = c1

a1.sinks = k1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092

a1.sources.r1.kafka.topics = cart_info,comment_info,coupon_use,favor_info,order_detail_activity,order_detail_coupon,order_detail,order_info,order_refund_info,order_status_log,payment_info,refund_payment,user_info

a1.sources.r1.kafka.consumer.group.id = flume

a1.sources.r1.setTopicHeader = true

a1.sources.r1.topicHeader = topic

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.atguigu.flume.interceptor.db.TimestampInterceptor$Builder

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /opt/module/flume/checkpoint/behavior2

a1.channels.c1.dataDirs = /opt/module/flume/data/behavior2/

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1123456

a1.channels.c1.keep-alive = 6

## sink1

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /origin_data/gmall/db/%{topic}_inc/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = db

a1.sinks.k1.hdfs.round = false

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = gzip

## 拼装

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1

<dependencies>

<dependency>

<groupId>org.apache.flumegroupId>

<artifactId>flume-ng-coreartifactId>

<version>1.9.0version>

<scope>providedscope>

dependency>

<dependency>

<groupId>com.alibabagroupId>

<artifactId>fastjsonartifactId>

<version>1.2.62version>

dependency>

dependencies>

<build>

<plugins>

<plugin>

<artifactId>maven-compiler-pluginartifactId>

<version>2.3.2version>

<configuration>

<source>1.8source>

<target>1.8target>

configuration>

plugin>

<plugin>

<artifactId>maven-assembly-pluginartifactId>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependenciesdescriptorRef>

descriptorRefs>

configuration>

<executions>

<execution>

<id>make-assemblyid>

<phase>packagephase>

<goals>

<goal>singlegoal>

goals>

execution>

executions>

plugin>

plugins>

build>

增量表首日全量同步

增量表需要在首日进行一次全量同步,后续每日再进行增量同步,首日全量同步可以使用 Maxwell 的 bootstrap 功能

mysql_to_kafka_inc_init.sh

vim mysql_to_kafka_inc_init.sh

#!/bin/bash

# 该脚本的作用是初始化所有的增量表,只需执行一次

MAXWELL_HOME=/opt/module/maxwell

import_data() {

$MAXWELL_HOME/bin/maxwell-bootstrap --database gmall --table $1 --config $MAXWELL_HOME/config.properties

}

case $1 in

"cart_info")

import_data cart_info

;;

"comment_info")

import_data comment_info

;;

"coupon_use")

import_data coupon_use

;;

"favor_info")

import_data favor_info

;;

"order_detail")

import_data order_detail

;;

"order_detail_activity")

import_data order_detail_activity

;;

"order_detail_coupon")

import_data order_detail_coupon

;;

"order_info")

import_data order_info

;;

"order_refund_info")

import_data order_refund_info

;;

"order_status_log")

import_data order_status_log

;;

"payment_info")

import_data payment_info

;;

"refund_payment")

import_data refund_payment

;;

"user_info")

import_data user_info

;;

"all")

import_data cart_info

import_data comment_info

import_data coupon_use

import_data favor_info

import_data order_detail

import_data order_detail_activity

import_data order_detail_coupon

import_data order_info

import_data order_refund_info

import_data order_status_log

import_data payment_info

import_data refund_payment

import_data user_info

;;

esac

mysql_to_kafka_inc_init.sh 增加执行权限

chmod 777 mysql_to_kafka_inc_init.sh

清理历史数据

hadoop fs -ls /origin_data/gmall/db | grep _inc | awk '{print $8}' | xargs hadoop fs -rm -r -f

执行同步脚本

mysql_to_kafka_inc_init.sh all

观察HDFS上是否重新出现增量表数据

增量表同步总结

增量表同步,需要在首日进行一次全量同步,后续每日才是增量同步。首日进行全量同步时,需先启动数据通道,包括 Maxwell、Kafka、Flume,然后执行增量表首日同步脚本 mysql_to_kafka_inc_init.sh 进行同步。后续每日只需保证采集通道正常运行即可,Maxwell 便会实时将变动数据发往 Kafka