零基础班第十八课 - Hive项目实战

第一章:上次课回顾

第二章:离线处理过程中的大数据处理

- 2.1 MySQL数据准备

- 2.2 Hive数据准备

- 2.3 从sqoop导数据到Hive中去

第三章:开始进行需求分析

- 第一步获取商品基本信息

- 第二步:统计各区域下各个商品的访问次数

- 第三步:获取完整的商品信息的各区域的访问次数(根据product_id关联产品名称)

- 第四步:拿到每一个区域下最受欢迎的Top3

- 第五步:统计结果输出到Sqoop

第四章:shell脚本的方式来执行

第五章:本次课程作业

本次课程所需环境CentOS6.X,各位有需要购买云服务器的可以通过我的链接:点击进行购买,享9折优惠!

阿里云9折优惠券,点击领取

第一章:上次课回顾

零基础班第十七课 - hive进阶:

https://blog.csdn.net/zhikanjiani/article/details/89416079

回顾:

1、上次课主要讲了建表语句中更为复杂的函数:array_type、map_type、struct_type;

2、除了直接启动Hive以外,还提供了Hiveserver2+beeline的方式连接Hive、或者Java\Scala\Python通过JDBC的方式连接;

3、每一个分区都是HDFS上的一个目录,这个目录要和元数据对应上;如果对接不上,在Hive中是查询不到数据的;分区还分为静态分区(包括单级分区、多级分区)、多级分区,工作中动态分区用的多(分区的key不用写值,只需要把这个字段和select后的最后一个字段对应上即可),把hive的模式设置为非严格模式。

第二章:离线过程中的数据处理

大数据处理:离线、实时

-

有一个输入路径:(HDFS、MySQL) --> 进行分布式处理(MapReduce、Hive、Spark、Flink),处理完的结果输出.

-

数据只要能存储的地方,都有可能是输入路径

需求:统计各个城市下最受欢迎的TopN产品

分析:每个城市肯定需要分组,limit10

第一步:

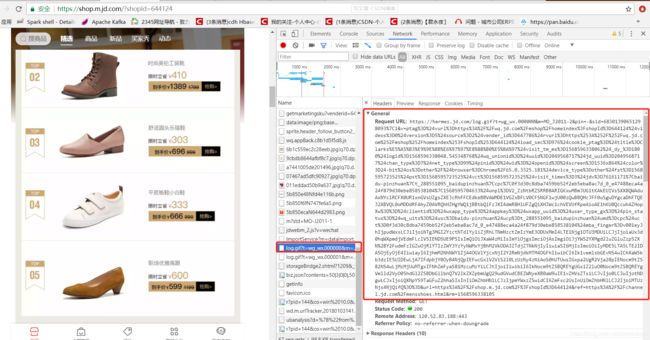

- 数据源:理解为电商的数据,这里拿京东举例:网址如下:https://shop.m.jd.com/?shopId=644124,右键检查 --> 选择network,再重新加载网页,找到log.gif开头的网页,复制request URL:

log.gif中的request URL如下所示,这个就是前端的埋点:

https://hermes.jd.com/log.gif?t=wg_wx.000000&m=MO_J2011-2&pin=-&sid=68301390651298093%7C1&v=ptag%3D%24vurl%3Dhttps%3A%2F%2Fwq.jd.com%2Fmshop%2Fhomeindex%3FshopId%3D644124%24videos%3D0%24version%3D5%24source%3D2%24vender_id%3D647786%24rvurl%3Dhttps%253A%252F%252Fwq.jd.com%252Fmshop%252Fhomeindex%253FshopId%253D644124%24load_sec%3D976%24cookie_ptag%3D%24title%3DClarks%E5%A5%B3%E9%9E%8B%E6%97%97%E8%88%B0%E5%BA%97%24visit_tm_ms%3D1568596338062%24_dy_%3D1800%24logid%3D1568596338048.545348768%24wq_unionid%3D%24uuid%3D2049568717%24jd_uuid%3D2049568717%24chan_type%3D7%24net_type%3D99%24pinid%3D%24wid%3D%24openid%3D%24screen%3D1536x864%24color%3D24-bit%24os%3Dother%2F%24browser%3DChrome%2F65.0.3325.181%24device_type%3Dother%24fst%3D1568595723525%24pst%3D1568595723525%24vct%3D1568595723525%24visit_times%3D3%24jdv%3D76161171%7Cbaidu-pinzhuan%7Ct_288551095_baidupinzhuan%7Ccpc%7C0f3d30c8dba7459bb52f2eb5eba8ac7d_0_e47488eca4a24f879d30ebe858538104%7C1568595704633%24unpl%3DV2_ZzNtbRZSRRB8ARIGKxwMBmJUG1tKAkEUIVsSXX8QWAduAxNYclRCFX0UR1xnGVsUZgsZXEJcRhFFCEdkeBBVAWMDE1VGZxBFLV0CFSNGF1wjU00zQwBBQHcJFF0uSgwDYgcaDhFTQEJ2XBVQL0oMDDdRFAhyZ0AVRQhHZHgYWQ1jBBtbQlFzJXI4dmR8HlUFZgQiXHJWc1chVEVUfRpeAioAE1hKU0QccwhAZHopXw%3D%3D%24clientid%3D%24wxapp_type%3D%24appkey%3D%24wxapp_uuid%3D%24user_type_gx%3D%24pin_status%3D%24wq_uits%3D%24usc%3Dbaidu-pinzhuan%24ucp%3Dt_288551095_baidupinzhuan%24umd%3Dcpc%24uct%3D0f3d30c8dba7459bb52f2eb5eba8ac7d_0_e47488eca4a24f879d30ebe858538104%24mba_finger%3Dv001eyJhIjpudWxsLCJiIjoiNTg3MGI2YzctNTdlYy1iZjRhLTNmNzctZmIzYmE3ODUxMmI4LTE1Njg1OTU3MDUiLCJjIjoiaUx3d0hqWXpmdjVEdmFLclV5IENDSUE9PSIsImQiOiJXaW4zMiIsImYiOjgsImciOjAsImgiOiJjYW52YXMgd2luZGluZzp5ZXN%2BY2FudmFzIGZwOjRlYTIzZWY3YzYyNWMxYjBhM2VkODA3ZTdjZTNkNjIyIiwiaSI6MjIsImoiOiIyMDE5LTA5LTE2IDA5OjEyOjE4IiwiayI6IjhmM2NhNWY1ZjA4OGVlYjcxNjI2Y2RmNjdkMTM4OGFhIiwibCI6Ik1vemlsbGEvNS4wIChXaW5kb3dzIE5UIDEwLjA7IFdpbjY0OyB4NjQpIEFwcGxlV2ViS2l0LzUzNy4zNiAoS0hUTUwsIGxpa2UgR2Vja28pIENocm9tZS82NS4wLjMzMjUuMTgxIFNhZmFyaS81MzcuMzYiLCJtIjoiIiwibiI6IkNocm9tZSBQREYgUGx1Z2luO0Nocm9tZSBQREYgVmlld2VyO05hdGl2ZSBDbGllbnQ7V2lkZXZpbmUgQ29udGVudCBEZWNyeXB0aW9uIE1vZHVsZTsiLCJvIjo0LCJwIjotNDgwLCJxIjoiQXNpYS9TaGFuZ2hhaSIsInIiOmZhbHNlLCJzIjpmYWxzZSwidCI6ZmFsc2UsInUiOmZhbHNlLCJ2IjoiMTUzNjs4NjQifQ%3D%3D&url=https%3A%2F%2Fshop.m.jd.com%2F%3FshopId%3D644124&ref=https%3A%2F%2Fchannel.jd.com%2Fmensshoes.html&rm=1568596338105

使用Urldecode解码器:进行解码

可以在解码中获取如下信息:

1、访问的品类

2、ref,访问的来源渠道:

==> 可以去求转化率

https://shop.m.jd.com/?shopId=644124&ref=https://channel.jd.com/mensshoes.html&rm=1568596338105

3、os = 0,当前访问网页的操作系统

我们要从日志里面去找到对应商品以及对应地址的信息

日志信息是存放在hdfs上的,城市信息在日志中是没有的,日志中只有的是城市的id,我们要求的是区域,比如:上海属于华东

- 区域信息是存放在MySQL中的,城市id是满足不了我们的需求;

MySQL:

1、存放城市区域对应信息

2、存放产品信息

Hive

1、用户点击行为日志

2.1 MySQL数据准备

首先city_info和product_info是存储在ruoze_g6数据库下面的。

第一份数据city_info数据:

1、在ruoze_g6数据库下新建city_info表,SQL如下:

CREATE TABLE city_info (

city_id int(11) DEFAULT NULL,

city_name varchar(255) DEFAULT NULL,

area varchar(255) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

2、把数据insert进city_info表:

insert into city_info (city_id,city_name,area) values

(1,'BEIJING','NC'),(2,'SHANGHAI','EC'),(3,'NANJING','EC'),(4,'GUANGZHOU','SC'),(5,'SANYA','SC'),(6,'WUHAN','CC'),(7,'CHANGSHA','CC'),(8,'XIAN','NW'),(9,'CHENGDU','SW'),(10,'HAERBIN','NE');

3、检验数据是否被加载进去了

- select * from city_info

第二份数据product_info:

1、创建表:

CREATE TABLE product_info (

product_id int(11) DEFAULT NULL,

product_name varchar(255) DEFAULT NULL,

extend_info varchar(255) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

2、此处选择的是dbeaver连接MySQL

- 使用准备好的数据,alt + x,直接批量插入数据库中

2.2 Hive数据准备

第一张表:本来就在Hive中加载好的

用户id,session_id,访问日志时间,城市id,产品id

1、创建表user_click:

create table user_click (

user_id int,

session_id string,

action_time string,

city_id int,

product_id int

) partitioned by (day string)

row format delimited fields terminated by ',';

2、把数据加载至user_click表:

hive (ruozeg6)> load data local inpath '/home/hadoop/data/topN/user_click.txt' overwrite into table user_click partition(day='2019-09-16');

Loading data to table ruozeg6.user_click partition (day=2019-09-16)

Partition ruozeg6.user_click{day=2019-09-16} stats: [numFiles=1, numRows=0, totalSize=725264, rawDataSize=0]

OK

Time taken: 1.962 seconds

3、测试查询数据:

- select * from user_click where day = ‘2019-09-16’ limit 10;

需要Hive中创建好表结构(product_info)

1.1、创建product_info表:

create table product_info (

product_id int,

product_name string,

extend_info string

) row format delimited fields terminated by "\t";

在Hive中创建好表结构(city_info)

1.2、创建city_info表:

create table city_info (

city_id int,

city_name string,

area string

) row format delimited fields terminated by "\t";

2.2 Sqoop导数据到Hive中去

sqoop import \

--connect jdbc:mysql://localhost:3306/ruoze_g6 \

--username root --password root \

--delete-target-dir \

--table city_info \

Hive arguments:

--hive-import \

--hive-table city_info \

--hive-overwrite \

--fields-terminated-by '\t' \

--lines-terminated-by '\n'

- 上述语句放到命令行中执行是失败的,报错信息如下:Error during import: No primary key could be found for table city_info. Please specify one with --split-by or perform a sequential import.

为什么需要使用–split -by来指定主键?

- 在Sqoop中,默认的mapper数量是4,假设一个表中主键是id,有40条记录,那么就是每个mapper处理10条数据;4个mapper来并行,那就是一个mapper运行10条记录。

前提:

1、有主键的情况下,sqoop以主键作为数据的分片,指定以id作主键。

此处我们以city_id作为主键,那我们如何指定mapper的数量?

使用如下命令将MySQL的数据转到Hive中

sqoop import \

--connect jdbc:mysql://localhost:3306/ruoze_g6 \

--username root --password 960210 \

--delete-target-dir \

--table city_info \

--hive-import \

--hive-table city_info \

--hive-overwrite \

--fields-terminated-by '\t' \

--lines-terminated-by '\n' \

--split-by city_id \

-m 2

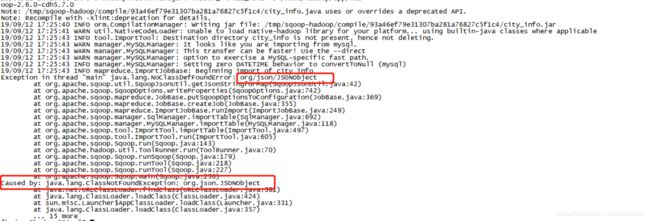

运行后报错,报错信息如下所示(缺少java-json.jar包):

1、可以直接到这个网站上去下载:

http://www.java2s.com/Code/Jar/j/Downloadjavajsonjar.htm

2、云盘上的链接:

链接:https://pan.baidu.com/s/1Cbmu4KVJCuRT3ag1Oj7YWQ

提取码:e85y

复制这段内容后打开百度网盘手机App,操作更方便哦

3、上传这个jar包到$SQOOP_HOME/lib目录下即可。

jar包上传完后重新使用这个命令,等待MapReduce作业跑完后去到Hive中查看是否有数据?

- sleect * from city_info

注意:

--split-by city_id \

-m 2

- 这个地方指定的主键是让它到关系型数据库中去,根据这个键作为分片原则;如果这个键没有,它不知道以哪个作为分片,如果有的话,就以city_id作为分片。

hdfs上去验证:

1、指定了map是2,所以输出是2个文件;如果不指定map,那么它的输出就是4

- hdfs dfs -ls /user/hive/warehouse/city_info

- 大数据中Hive是没有主键的,像Hbase中的row、key相当于是主键。

sqoop import \

--connect jdbc:mysql://localhost:3306/ruoze_g6 \

--username root --password 960210 \

--delete-target-dir \

--table product_info \

--hive-import \

--hive-table product_info \

--hive-overwrite \

--fields-terminated-by '\t' \

--lines-terminated-by '\n' \

--split-by product_id \

-m 2

第三章:开始进行需求分析

-

此时Hive中已经有三张表了,各个表之间的join操作:

-

select * from city_info where day=‘2019-05-05’ limit 10;

-

select * from user_click where day=‘2019-05-05’ limit 10;

需求一:每个区域取top3

- 在我们的用户点击表中,日志中只有城市编号和产品编号,需要根据id,把数据join出来。

华东 product1 30

华东 product2 20

华东 product3 10

第一步:获得商品的基本信息

SQL解析:

- 两张表中有不同信息,但是两张表有一列city_id是相同的,所以使用user_click表和city_info表进行join操作,条件是u.city_id = c.city_id.

- as c:给这个表取一个别名

select u.product_id,u.city_id,c.city_name,c.area

from

(select product_id,city_id from user_click where day = '20190919') as u

join

(select city_id,city_name,area from city_info) as c

on u.city_id = c.city_id

limit 10;

输出结果:(产品id,城市id,城市名称,城市区域)

p_id c_id city_name city_area

72 1 beijing1 NC

68 1 beijing1 NC

40 1 beijing1 NC

21 1 beijing1 NC

63 1 beijing1 NC

60 1 beijing1 NC

30 1 beijing1 NC

96 1 beijing1 NC

71 1 beijing1 NC

8 1 beijing1 NC

1.1、创建临时表(tmp_product_click_basic_info)

- (产品点击的基本信息表),接下来的所有操作都要基于临时表来完成:

create table tmp_product_click_basic_info

as

select u.product_id,u.city_id,c.city_name,c.area

from

(select product_id,city_id from user_click where day = '2019-09-19') as u

join

(select city_id,city_name,area from city_info) as c

on u.city_id = c.city_id

limit 10;

第二步:统计各区域下各个商品的访问次数:

- 基于临时表进行访问:

select

product_id,area,count(1) click_count

from

tmp_product_click_basic_info

group by

product_id,area

limit 10;

2.1、再创建一个临时表(各区域下商品点击的临时表):

create table tmp_area_product_click_count

as

select

product_id,area,count(1) click_count

from

tmp_product_click_basic_info

group by

product_id,area;

问题:

- 产品0是什么东西?

第三步:获取完整的商品信息的各区域的访问次数(根据product_id关联产品名称)

SQL解析:

-

区域下商品点击的临时表取别名为a,原始表取别名为b;join操作是a表的product_id关联b表的product_id.

-

其实就是在上面的基础上加了一个product_name.

select

a.product_id,b.product_name,a.area,a.click_count

from

tmp_area_product_click_count as a join product product_info as b

on a.product_id = b.product_id

limit 10;

3.1、继续创建临时表(tmp_area_product_click_count_full_info)

create table tmp_area_product_click_count_full_info

as

select

a.product_id,b.product_name,a.area,a.click_count

from

tmp_area_product_click_count as a join product product_info as b

on a.product_id = b.product_id;

分析:

- 此时的结果已经非常接近我们要的结果了。

- 为什么此时的结果没有0了,而第二步的时候还是有0的,因为我们的产品数据中压根就没有0;内连接的原因;产品0是一个脏数据的概念。

- 现在统计的是每一个产品在每一个地方出现的次数

第四步:拿到每一个区域下最受欢迎的Top3

第四步:拿到每一个区域下最受欢迎的Top3

引出概念:窗口函数

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+WindowingAndAnalytics

select * from (

select

product_id,product_name,area,click_count,

row_number() over(partition by area order by click_count desc) rank

from

tmp_area_product_click_count_full_info ) t where t.rank <=3;

- row_number会根据区域进行分组,分组以后在区域内部以点击次数的一个降序进行排列,最终只要知道区域<=3就行了。

统计结果写到Hive表中去:

create table area_product_click_count_top3

select *

from (

select product_id,product_name,area,click_count,

row_number() over(partition by area order by click_count desc) rank

from

tmp_area_product_click_count_full_info ) t where t.rank <=3;

第五步:统计结果输出到Sqoop

现在我们的结果已经计算出来了,我们要把数据存储到MySQL表中,最后会呈现在网页上。

在生产中,以脚本的方式来执行:

第四章:以shell脚本的方式来执行

-

在脚本中,使用Linux脚本来获取到时间

-

在离线处理中:今天凌晨来执行昨天的数据,当前时间减去一天就是昨天的时间。

-

脚本写好后,crontable启动一个定时任务就行

4.1 扩展

- 有个问题,就是会生成tmp表,在shell脚本中写在执行之前删除表就行了。

- 留下tmp表的意义就是检查昨天的数据是否准确,历史数据的验证。

到现在为止,整个的流程跑完了,对基础班不做要求,注意user_click.txt数据;

进来的日志是非常繁琐的,需要经过ETL清洗;一条日志有100、200个字段,现在的作法是使用Hive关联上去(采用行存储)

举例:

行存储:

100个字段

select a,b,c from XXX //全量数据查询,IO是一个大问题

要改为列存储

小结:

- 在数据仓库中,能使用列存储就尽量使用列存储;MySQL的数据导入到Hive中也建议使用列存储。

因为真实产品有很多很多的信息,类别比较多

reducer数量控制的好与坏也决定了落到hdfs上小文件的个数。

问题:

group by数据量大会产生数据倾斜

第五章:本次课程作业

作业1:使用Sqoop export存储数据到MySQL中

product_id product_name area click_count rank 在Hive中是以时间day为分区,MySQL中要添加个字段day.