计算机视觉-局部图像描述子

计算机视觉-局部图像描述子

- Harris角点检测

-

- Harris角点检测实现

-

- 实现代码

- 原图及检测结果

- Harris角点匹配

-

- Harris角点匹配实现

-

- 实现代码

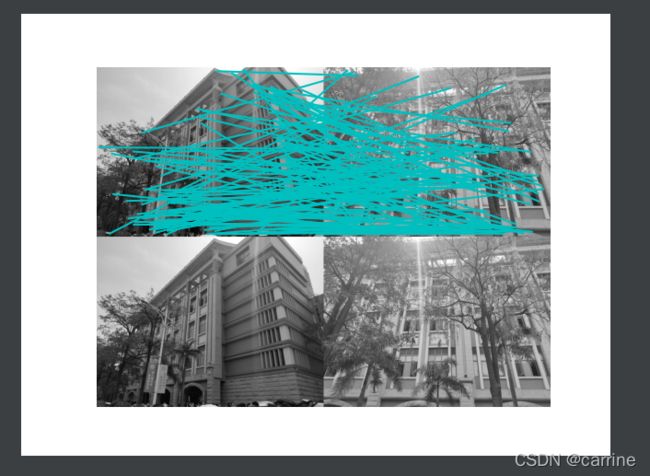

- 原图及匹配结果

- SIFT(尺度不变特征转换)

-

- 主要步骤

-

- 尺度空间极值检测

- 关键点的定位

- 关键点方向匹配

- 关键点特征描述

- 关键点匹配

- 实现代码

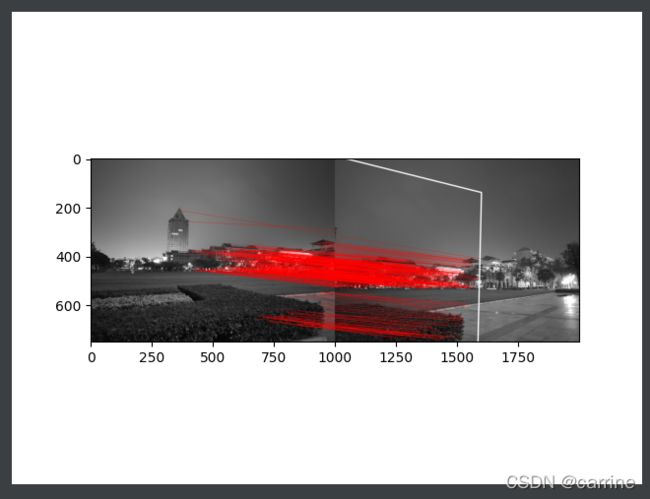

- 原图及匹配结果

Harris角点检测

Harris角点检测算法是一个极其简单的角点检测算法,其主要思想是,如果像素周围显示存在多于一个方向的边,我们认为该点为兴趣点,又称为角点。

我们把图像域中点x上的对称半正定矩阵 M I M_{I} MI定义为:

M I = [ I x 2 I x I y I y I x I y 2 ] M_{I}= \begin{bmatrix} I_{x}^{2}&I_{x}I_{y} \\ I_{y}I_{x}&I_{y}^{2} \end{bmatrix} MI=[Ix2IyIxIxIyIy2]

对于图像的每一个像素,我们可以计算出该矩阵,再将其乘上权重矩阵W,我们可以得到卷积。这样计算出的矩阵又称作Harris矩阵。

Harris角点检测实现

实现代码

def Harris(img):

from pylab import array, figure, gray, subplot, imshow, axis, plot, show

from PIL import Image

from PCV.localdescriptors import harris

# 读入图像

im = array(Image.open(img).convert('L'))

# 检测harris角点

harrisim = harris.compute_harris_response(im)

# Harris响应函数

harrisim1 = 255 - harrisim

figure()

gray()

# 画出Harris响应图

subplot(141)

imshow(harrisim1)

print(harrisim1.shape)

axis('off')

axis('equal')

threshold = [0.01, 0.05, 0.1]

for i, thres in enumerate(threshold):

filtered_coords = harris.get_harris_points(harrisim, 6, thres)

subplot(1, 4, i + 2)

imshow(im)

print(im.shape)

plot([p[1] for p in filtered_coords], [p[0] for p in filtered_coords], '*')

axis('off')

show()

原图及检测结果

Harris角点匹配

Harris角点检测器只能检测处图像中的兴趣点,但是没有通过比较兴趣点从而实现匹配角点的功能。为此,我们需要在每个点上添加描述符并给出比较描述子的方法。

Harris角点的描述符通常由周围图像像素块的灰度值,以及用于比较的归一化互相关矩阵构成。图像的像素块由以该像素点为中心的周围矩形部分构成,通常,两个大小相同的像素块 I 1 ( x ) I_{1}(x) I1(x)和 I 2 ( x ) I_{2}(x) I2(x)的相关矩阵定义为 c ( I 1 , I 2 ) c(I_{1},I_{2}) c(I1,I2)。

对于互相关矩阵, c ( I 1 , I 2 ) = I 1 ∗ I 2 ) c(I_{1},I_{2})=I_{1}*I_{2}) c(I1,I2)=I1∗I2),由两矩阵的乘积结果决定两像素块的相似度,结果越大,相似度越高。

而对于归一化的互相关矩阵,其定义为:

n c c ( I 1 , I 2 ) = 1 n − 1 ∑ I 1 ( x ) − μ 1 σ 1 ∗ I 2 ( x ) − μ 2 σ 2 ncc(I_{1},I_{2})=\frac{1}{n-1}\sum \frac{I_{1}(x)-\mu _{1}}{\sigma_{1}}*\frac{I_{2}(x)-\mu _{2}}{\sigma_{2}} ncc(I1,I2)=n−11∑σ1I1(x)−μ1∗σ2I2(x)−μ2

其中,n为像素的数目, μ 1 \mu _{1} μ1和 μ 2 \mu _{2} μ2代表每个像素块的平均像素值, σ 1 \sigma _{1} σ1和 σ 2 \sigma _{2} σ2表示每个像素块的标准差,这样构造的式子对图像亮度变化具有稳健性。

Harris角点匹配实现

实现代码

def Harris_match(img1, img2):

from pylab import array, figure, gray, show

from PIL import Image

from PCV.localdescriptors import harris

from PCV.tools.imtools import imresize

im1 = array(Image.open(img1).convert("L"))

im2 = array(Image.open(img2).convert("L"))

# resize加快匹配速度

im1 = imresize(im1, (int(im1.shape[1] / 2), int(im1.shape[0] / 2)))

im2 = imresize(im2, (int(im2.shape[1] / 2), int(im2.shape[0] / 2)))

wid = 5

harrisim = harris.compute_harris_response(im1, 5)

filtered_coords1 = harris.get_harris_points(harrisim, wid + 1)

d1 = harris.get_descriptors(im1, filtered_coords1, wid)

harrisim = harris.compute_harris_response(im2, 5)

filtered_coords2 = harris.get_harris_points(harrisim, wid + 1)

d2 = harris.get_descriptors(im2, filtered_coords2, wid)

print('starting matching')

matches = harris.match_twosided(d1, d2)

figure()

gray()

harris.plot_matches(im1, im2, filtered_coords1, filtered_coords2, matches)

show()

原图及匹配结果

SIFT(尺度不变特征转换)

SIFT(Scale Invariant Feature Transform),尺度不变特征变换。关于物体匹配的核心是将目标在不同环境和时间下所成的像相对应。SIFT不同于传统的匹配算法将边缘和角点作为判别依据,而是将图像映射为一个局部特征向量集,解决了图像中物体缩放、移动、旋转后的匹配问题。

主要步骤

SIFT特征检测主要步骤:

尺度空间极值检测

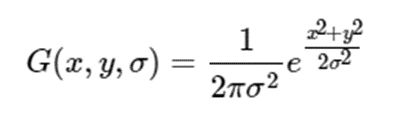

搜索所有尺度上的图像位置。通过高斯微分函数来识别潜在的对于尺度和旋转不变的关键点。

- 构建尺度空间:

高斯核卷积,通过二维高斯函数(图1.1)与像素的卷积,因为在实际应用中,计算3σ以外的像素可以看作不起作用,所以通常只计算 ( 6 σ + 1 ) ∗ ( 6 σ + 1 ) (6σ+1)*(6σ+1) (6σ+1)∗(6σ+1)的区域。 图 1.1 图1.1 图1.1

图 1.1 图1.1 图1.1

一个图像的尺度空间L(x,y,σ)定义为原始图像I(x,y)与上述二维高斯函数的卷积(图1.2)。

图 1.2 图1.2 图1.2

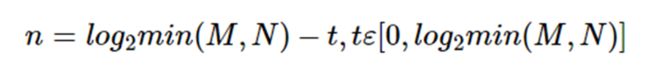

构建的高斯金字塔构成的空间称为尺度空间。高斯金字塔的由n个组构成,组的个数由原始图像取对数决定(图1.3)。每个组内由S张图像,组内的每张图像都是由初始图像与不同σ的高斯函数卷积而成,同一组内,第n+1层的模糊系数是第n层的k倍(图1.4),这样使得组内图像的大小相同,但是从下至上逐渐模糊。上一个组的倒数第三张图片按2倍向下采样(俗称降采样,也就是宽高都缩小一半),就得到了下一个组的第一张图像,这使得下一组的模糊系数是上一组的两倍,如此反复得到高斯金字塔。

图 1.3 图1.3 图1.3

图 1.4 图1.4 图1.4

PS:高斯核是唯一一个可以做到近处清晰远处模糊的线性核函数 - 构建高斯差分金字塔DoG:

组数和高斯金字塔相同,组内每张图片都是高斯金字塔组内的图片两两相减得到(图1.5)。 - 空间极值点检测:

求关键点也就是求一张图像的某个点与其邻域在组内和其上下两张图像的相同区域的极值点(图1.6),也就是说一个点要与其余26个点比较。在这里,我们若想得到S个尺度的极值点,一个组就需要S+2张图像,反推回去,高斯金字塔每组就需要进行S+3次高斯卷积。

图 1.5 图1.5 图1.5

图 1.6 图1.6 图1.6

关键点的定位

在每个候选的位置上,通过一个拟合精细的模型来确定位置和尺度。关键点的选择依据于它们的稳定程度。

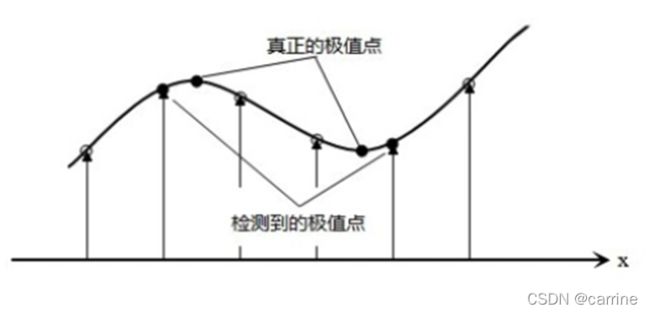

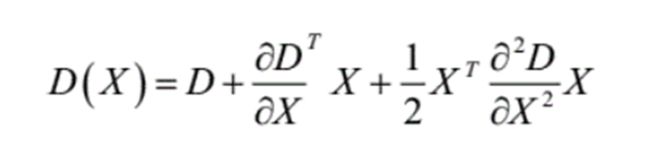

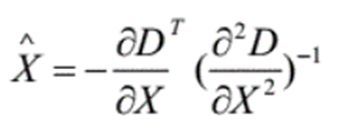

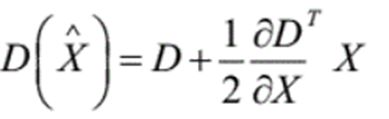

- 提高精确性:通过比较得出的极值点是在离散空间中得到,由于离散空间是对连续空间采样得到的结果,因此,为了提高关键点的精确性,需要对尺度空间DoG函数进行曲线拟合,利用其在尺度空间的三元二次泰勒展开式(图1.8)。通过对其求导为零可以得到极值点的偏移量(图1.9)。对左边进行循环修正,将修正后的结果代回泰勒展开式,得到新式(图1.10),新式去除小于灰度值为0.04(经验阈值)的极值点以去除低对比度的点。

图 1.7 图1.7 图1.7

图 1.8 图1.8 图1.8

图 1.9 图1.9 图1.9

图 1.10 图1.10 图1.10 - 消除边缘响应:在边缘梯度的方向上主曲率比较大,而沿着边缘方向的主曲率值比较小。DoG算子会产生较强的边缘效应,需要剔除不稳定的边缘响应点。主曲率通过特征点处的海森矩阵求出。如果一个点不在边缘,那么该点在x,y方向上的曲率差不多。海森矩阵的特征值和D(x)曲率是成正比的,但是这样太麻烦了,我们就通过矩阵的迹和行列式(图1.11)来代替,α>β且α=γβ(建议γ取10.0)。首先,当行列式小于零时舍去该点,再者当不满足下式(图1.12)时,也舍去该点。

图 1.11 图1.11 图1.11

图 1.12 图1.12 图1.12

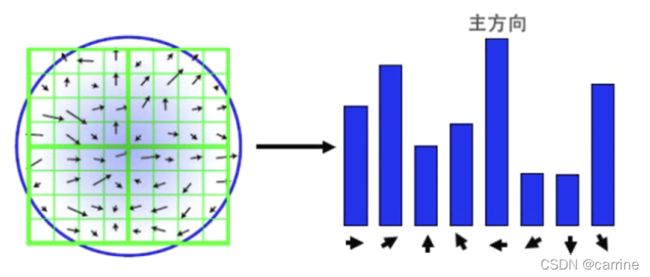

关键点方向匹配

基于图像局部的梯度方向,分配给每个关键点一个或多个方向。所有后面的对图像数据的操作都相对于关键点的方向、尺度和位置进行变换,从而提供对于这些变换的不变性。

- 对于在DoG金字塔中检测出的关键点,采集其所在高斯金字塔图像邻域内像素的梯度和方向(图1.13)。先对幅值进行高斯加权处理模糊系数1.5σ,使得靠近关键点的像素影响更大,减小突变的影响。

- 然后采用梯度直方统计图,将360°分为36柱,以关键点为圆心,在半径为r=3*1.5σ的区域内,将梯度方向在某个柱内的像素找出来,然后将幅值相加作为柱高。以直方图中最大值作为关键点的主方向,为了增强鲁棒性,保留大于主方向峰值80%的方向作为辅方向。(图1.14)

图 1.13 图1.13 图1.13

图 1.14 图1.14 图1.14

关键点特征描述

在每个关键点周围的邻域内,在选定的尺度上测量图像局部的梯度。这些梯度被变换成一种表示,这种表示允许比较大的局部形状的变形和光照变化。

- 先对图像进行旋转,让关键点的梯度方向和坐标轴x重合,这个基准的设定也解释了为什么SFIT最后得出的描述符具有旋转不变性。

- 对于每一个关键点,都拥有位置(x,y)、尺度(σ)、方向(θ)三个信息,为每个关键点构建一个描述符,即用一组向量把该关键点描述出来,用来作为关键点匹配的依据。以关键点为圆心,框定一个1616的格子,然后将其分为44的子区域,将每个子区域的所有梯度高斯加权,将8个方向上的梯度相加,得到448=128的向量(图1.15),即描述符。

图 1.15 图1.15 图1.15

关键点匹配

- 对于两张图片,分别计算其关键点及描述符

- 通过KNN匹配描述符

- 通过设定关键点匹配的偏差阈值,剔除不匹配的点

- 如果匹配出的点大于10个,则将图片拼接并画出匹配点及其连线

实现代码

pysift.py

from functools import cmp_to_key

from cv2 import resize, GaussianBlur, subtract, KeyPoint, INTER_LINEAR, INTER_NEAREST

from numpy import all, array, arctan2, cos, sin, exp, dot, log, logical_and, roll, sqrt, stack, trace, deg2rad, rad2deg, \

where, zeros, floor, round, float32

from numpy.linalg import det, lstsq, norm

float_tolerance = 1e-7

def computeKeypointsAndDescriptors(image, sigma=1.6, num_intervals=3, assumed_blur=0.5, image_border_width=5):

"""生成关键点、描述符

"""

image = image.astype('float32') # 从uint8转换成float32

base_image = generateBaseImage(image, sigma, assumed_blur)

num_octaves = computeNumberOfOctaves(base_image.shape)

gaussian_kernels = generateGaussianKernels(sigma, num_intervals)

gaussian_images = generateGaussianImages(base_image, num_octaves, gaussian_kernels)

# 生成高斯差分金字塔

dog_images = generateDoGImages(gaussian_images)

# 从离散空间的极值点寻找连续空间的极值点

keypoints = findScaleSpaceExtrema(gaussian_images, dog_images, num_intervals, sigma, image_border_width)

# 移除重复的关键点

keypoints = removeDuplicateKeypoints(keypoints)

keypoints = convertKeypointsToInputImageSize(keypoints)

descriptors = generateDescriptors(keypoints, gaussian_images)

return keypoints, descriptors

def generateBaseImage(image, sigma, assumed_blur):

"""生成基础图像,先放大两倍(线性插值),再进行高斯模糊(类似勾股定理)

image:图像的灰度图

sigma:高斯滤波标准差

assumed_blur:原图的默认模糊

"""

image = resize(image, (0, 0), fx=2, fy=2, interpolation=INTER_LINEAR) # (0,0)相当于None,用于设定输出图像大小

sigma_diff = sqrt(max((sigma ** 2) - ((2 * assumed_blur) ** 2), 0.01)) # 计算高斯标准差σ

return GaussianBlur(image, (0, 0), sigmaX=sigma_diff, sigmaY=sigma_diff) # 调用cv2的高斯模糊

def computeNumberOfOctaves(image_shape):

"""通过图像最短的边来计算高斯金字塔的层数,因为之后要对比尺度空间上的极值点,所以保证顶层的组尺寸大于3

"""

return int(round(log(min(image_shape)) / log(2) - 1))

def generateGaussianKernels(sigma, num_intervals):

"""构建高斯金字塔的滤波器

层数=想进行极值点检测的层数+3

k是构造不同高斯模糊的系数

"""

num_images_per_octave = num_intervals + 3

k = 2 ** (1. / num_intervals)

gaussian_kernels = zeros(

num_images_per_octave)

gaussian_kernels[0] = sigma

for image_index in range(1, num_images_per_octave):

sigma_previous = (k ** (image_index - 1)) * sigma

sigma_total = k * sigma_previous

gaussian_kernels[image_index] = sqrt(sigma_total ** 2 - sigma_previous ** 2)

return gaussian_kernels

def generateGaussianImages(image, num_octaves, gaussian_kernels):

"""生成高斯金字塔

image:原图像经过函数生成的基础图像

num_octaves:总组数

gaussian_kernels:高斯滤波器

"""

gaussian_images = []

for octave_index in range(num_octaves):

gaussian_images_in_octave = [image]

for gaussian_kernel in gaussian_kernels[1:]:

image = GaussianBlur(image, (0, 0), sigmaX=gaussian_kernel, sigmaY=gaussian_kernel)

gaussian_images_in_octave.append(image)

gaussian_images.append(gaussian_images_in_octave)

octave_base = gaussian_images_in_octave[-3]

image = resize(octave_base, (int(octave_base.shape[1] / 2), int(octave_base.shape[0] / 2)),

interpolation=INTER_NEAREST)

return array(gaussian_images, dtype=object)

def generateDoGImages(gaussian_images):

"""生成高斯差分金字塔,上下两层相减(模糊的减去清晰的),得到的是边缘信息

gaussian_images:高斯金字塔

"""

dog_images = []

for gaussian_images_in_octave in gaussian_images:

dog_images_in_octave = []

for first_image, second_image in zip(gaussian_images_in_octave, gaussian_images_in_octave[1:]):

dog_images_in_octave.append(subtract(second_image,

first_image)) # 这里用cv2的减法是因为不能用普通的减法

dog_images.append(dog_images_in_octave)

return array(dog_images, dtype=object)

def findScaleSpaceExtrema(gaussian_images, dog_images, num_intervals, sigma, image_border_width,

contrast_threshold=0.04):

"""找出图像金字塔中所有尺度空间中极值的像素位置

gaussian_images:高斯金字塔

dog_images:高斯差分金字塔

num_intervals:进行极值点检测的层数

sigma:高斯函数标准差

image_border_width:检测区域远离图像边缘5个像素

"""

threshold = floor(0.5 * contrast_threshold / num_intervals * 255) # 去除低于阈值的点

keypoints = []

for octave_index, dog_images_in_octave in enumerate(dog_images):

# 这里用zip是为了构造(0,1,2)、(1,2,3)、(2,3,4)的组合

for image_index, (first_image, second_image, third_image) in enumerate(

zip(dog_images_in_octave, dog_images_in_octave[1:], dog_images_in_octave[2:])):

# i,j为九宫格的中心

for i in range(image_border_width, first_image.shape[0] - image_border_width):

for j in range(image_border_width, first_image.shape[1] - image_border_width):

# 函数判断是否为在当前层相邻和尺度上相邻的极值点

if isPixelAnExtremum(first_image[i - 1:i + 2, j - 1:j + 2], second_image[i - 1:i + 2, j - 1:j + 2],

third_image[i - 1:i + 2, j - 1:j + 2], threshold):

# 如果是则细化这个点到亚像素级别

localization_result = localizeExtremumViaQuadraticFit(i, j, image_index + 1, octave_index,

num_intervals, dog_images_in_octave,

sigma, contrast_threshold,

image_border_width)

if localization_result is not None:

keypoint, localized_image_index = localization_result

# 计算关键点方向

keypoints_with_orientations = computeKeypointsWithOrientations(keypoint, octave_index,

gaussian_images[octave_index]

[localized_image_index])

for keypoint_with_orientation in keypoints_with_orientations:

keypoints.append(keypoint_with_orientation)

return keypoints

def isPixelAnExtremum(first_subimage, second_subimage, third_subimage, threshold):

"""判断当前点在当前层和尺度上相邻的点中是否为极值点,是则True,否则为False

参数依次为第x张图片

threshold:阈值

"""

center_pixel_value = second_subimage[1, 1]

# 小于阈值的点删去

if abs(center_pixel_value) > threshold:

if center_pixel_value > 0:

return all(center_pixel_value >= first_subimage) and \

all(center_pixel_value >= third_subimage) and \

all(center_pixel_value >= second_subimage[0, :]) and \

all(center_pixel_value >= second_subimage[2, :]) and \

center_pixel_value >= second_subimage[1, 0] and \

center_pixel_value >= second_subimage[1, 2]

elif center_pixel_value < 0:

return all(center_pixel_value <= first_subimage) and \

all(center_pixel_value <= third_subimage) and \

all(center_pixel_value <= second_subimage[0, :]) and \

all(center_pixel_value <= second_subimage[2, :]) and \

center_pixel_value <= second_subimage[1, 0] and \

center_pixel_value <= second_subimage[1, 2]

return False

def localizeExtremumViaQuadraticFit(i, j, image_index, octave_index, num_intervals, dog_images_in_octave, sigma,

contrast_threshold, image_border_width, eigenvalue_ratio=10,

num_attempts_until_convergence=5):

"""通过对极值点的邻域进行二次拟合,细化极值点到亚像素级别

i,j:极值点在离散空间的坐标

image_index:高斯差分金字塔中每组的图像索引

octave_index:高斯差分金字塔的组索引

num_intervals:每组极值点检测层数

dog_images_in_octave:高斯差分金字塔

sigma:高斯标准差

contrast_threshold:对比度阈值

image_border_width:检测区域远离图像边缘5个像素图像

eigenvalue_ratio:主曲率阈值

num_attempts_until_convergence:最大尝试次数

"""

extremum_is_outside_image = False

image_shape = dog_images_in_octave[0].shape

for attempt_index in range(num_attempts_until_convergence):

# 需要从uint8转换到float32来计算导数,需要缩放像素值到[0,1]

first_image, second_image, third_image = dog_images_in_octave[image_index - 1:image_index + 2]

pixel_cube = stack([first_image[i - 1:i + 2, j - 1:j + 2],

second_image[i - 1:i + 2, j - 1:j + 2],

third_image[i - 1:i + 2, j - 1:j + 2]]).astype('float32') / 255.

# 计算梯度

gradient = computeGradientAtCenterPixel(pixel_cube)

# 计算海森矩阵

hessian = computeHessianAtCenterPixel(pixel_cube)

# 最小二乘拟合求偏移量

extremum_update = -lstsq(hessian, gradient, rcond=None)[0]

# 如果偏移量的每个值的绝对值均小于0.5,放弃迭代

if abs(extremum_update[0]) < 0.5 and abs(extremum_update[1]) < 0.5 and abs(extremum_update[2]) < 0.5:

break

j += int(round(extremum_update[0]))

i += int(round(extremum_update[1]))

image_index += int(round(extremum_update[2]))

# 确保在尺度上的正方体在图像之中

if i < image_border_width or i >= image_shape[0] - image_border_width or j < image_border_width or j >= \

image_shape[1] - image_border_width or image_index < 1 or image_index > num_intervals:

extremum_is_outside_image = True

break

if extremum_is_outside_image:

return None

if attempt_index >= num_attempts_until_convergence - 1:

return None

functionValueAtUpdatedExtremum = pixel_cube[1, 1, 1] + 0.5 * dot(gradient, extremum_update)

if abs(functionValueAtUpdatedExtremum) * num_intervals >= contrast_threshold:

xy_hessian = hessian[:2, :2]

# 海森矩阵的迹

xy_hessian_trace = trace(xy_hessian)

# 海森矩阵的行列式

xy_hessian_det = det(xy_hessian)

# 判断主曲率阈值是否在预设阈值之下

if xy_hessian_det > 0 and eigenvalue_ratio * (xy_hessian_trace ** 2) < (

(eigenvalue_ratio + 1) ** 2) * xy_hessian_det:

# 对比度检查,返回keypoint对象

keypoint = KeyPoint()

# 往keypoints里添加坐标、组数、邻域直径大小、关键点响应程度

keypoint.pt = (

(j + extremum_update[0]) * (2 ** octave_index), (i + extremum_update[1]) * (2 ** octave_index))

keypoint.octave = octave_index + image_index * (2 ** 8) + int(round((extremum_update[2] + 0.5) * 255)) * (

2 ** 16)

keypoint.size = sigma * (2 ** ((image_index + extremum_update[2]) / float32(num_intervals))) * (

2 ** (octave_index + 1))

keypoint.response = abs(functionValueAtUpdatedExtremum)

return keypoint, image_index

return None

def computeGradientAtCenterPixel(pixel_array):

"""利用中心差分公式计算中心像素的近似梯度

"""

dx = 0.5 * (pixel_array[1, 1, 2] - pixel_array[1, 1, 0])

dy = 0.5 * (pixel_array[1, 2, 1] - pixel_array[1, 0, 1])

ds = 0.5 * (pixel_array[2, 1, 1] - pixel_array[0, 1, 1])

return array([dx, dy, ds])

def computeHessianAtCenterPixel(pixel_array):

"""利用中心差分公式计算中心像素的近似海森

"""

center_pixel_value = pixel_array[1, 1, 1]

dxx = pixel_array[1, 1, 2] - 2 * center_pixel_value + pixel_array[1, 1, 0]

dyy = pixel_array[1, 2, 1] - 2 * center_pixel_value + pixel_array[1, 0, 1]

dss = pixel_array[2, 1, 1] - 2 * center_pixel_value + pixel_array[0, 1, 1]

dxy = 0.25 * (pixel_array[1, 2, 2] - pixel_array[1, 2, 0] - pixel_array[1, 0, 2] + pixel_array[1, 0, 0])

dxs = 0.25 * (pixel_array[2, 1, 2] - pixel_array[2, 1, 0] - pixel_array[0, 1, 2] + pixel_array[0, 1, 0])

dys = 0.25 * (pixel_array[2, 2, 1] - pixel_array[2, 0, 1] - pixel_array[0, 2, 1] + pixel_array[0, 0, 1])

return array([[dxx, dxy, dxs],

[dxy, dyy, dys],

[dxs, dys, dss]])

def computeKeypointsWithOrientations(keypoint, octave_index, gaussian_image, radius_factor=3, num_bins=36,

peak_ratio=0.8, scale_factor=1.5):

"""计算每个关键点的方向

keypoint:检测到的精确关键点

octave_index:高斯差分金字塔的组索引

gaussian_image:高斯金字塔

radius_factor:半径因子

num_bins:直方图柱的数目

peak_ratio:保留辅方向的百分比

scale_factor:尺度因子

"""

keypoints_with_orientations = []

image_shape = gaussian_image.shape

# 特征点所在高斯图像的尺度

scale = scale_factor * keypoint.size / float32(2 ** (octave_index + 1))

# 统计半径

radius = int(round(radius_factor * scale))

# 权重

weight_factor = -0.5 / (scale ** 2)

raw_histogram = zeros(num_bins)

smooth_histogram = zeros(num_bins)

# 采集其所在高斯金字塔图像3σ领域窗口内像素的梯度和方向分布特征

for i in range(-radius, radius + 1):

region_y = int(round(keypoint.pt[1] / float32(2 ** octave_index))) + i

if region_y > 0 and region_y < image_shape[0] - 1:

for j in range(-radius, radius + 1):

region_x = int(round(keypoint.pt[0] / float32(2 ** octave_index))) + j

if region_x > 0 and region_x < image_shape[1] - 1:

# 中心差分求偏导

dx = gaussian_image[region_y, region_x + 1] - gaussian_image[region_y, region_x - 1]

dy = gaussian_image[region_y - 1, region_x] - gaussian_image[region_y + 1, region_x]

# 梯度幅值

gradient_magnitude = sqrt(dx * dx + dy * dy)

# 梯度方向

gradient_orientation = rad2deg(arctan2(dy, dx))

weight = exp(weight_factor * (

i ** 2 + j ** 2))

histogram_index = int(round(gradient_orientation * num_bins / 360.))

raw_histogram[histogram_index % num_bins] += weight * gradient_magnitude

for n in range(num_bins):

# 这里用了平滑公式

smooth_histogram[n] = (6 * raw_histogram[n] + 4 * (raw_histogram[n - 1] + raw_histogram[(n + 1) % num_bins]) +

raw_histogram[n - 2] + raw_histogram[(n + 2) % num_bins]) / 16.

orientation_max = max(smooth_histogram)

orientation_peaks = \

where(logical_and(smooth_histogram > roll(smooth_histogram, 1), smooth_histogram > roll(smooth_histogram, -1)))[

0]

for peak_index in orientation_peaks:

peak_value = smooth_histogram[peak_index]

if peak_value >= peak_ratio * orientation_max:

left_value = smooth_histogram[(peak_index - 1) % num_bins]

right_value = smooth_histogram[(peak_index + 1) % num_bins]

# 梯度直方图抛物线插值

interpolated_peak_index = (peak_index + 0.5 * (left_value - right_value) / (

left_value - 2 * peak_value + right_value)) % num_bins

orientation = 360. - interpolated_peak_index * 360. / num_bins

if abs(orientation - 360.) < float_tolerance:

orientation = 0

new_keypoint = KeyPoint(*keypoint.pt, keypoint.size, orientation, keypoint.response, keypoint.octave)

keypoints_with_orientations.append(new_keypoint)

return keypoints_with_orientations

def compareKeypoints(keypoint1, keypoint2):

"""对关键点进行比较

"""

if keypoint1.pt[0] != keypoint2.pt[0]:

return keypoint1.pt[0] - keypoint2.pt[0]

if keypoint1.pt[1] != keypoint2.pt[1]:

return keypoint1.pt[1] - keypoint2.pt[1]

if keypoint1.size != keypoint2.size:

return keypoint2.size - keypoint1.size

if keypoint1.angle != keypoint2.angle:

return keypoint1.angle - keypoint2.angle

if keypoint1.response != keypoint2.response:

return keypoint2.response - keypoint1.response

if keypoint1.octave != keypoint2.octave:

return keypoint2.octave - keypoint1.octave

return keypoint2.class_id - keypoint1.class_id

def removeDuplicateKeypoints(keypoints):

"""对关键点进行排序,删除重复关键点

"""

if len(keypoints) < 2:

return keypoints

keypoints.sort(key=cmp_to_key(compareKeypoints))

unique_keypoints = [keypoints[0]]

for next_keypoint in keypoints[1:]:

last_unique_keypoint = unique_keypoints[-1]

if last_unique_keypoint.pt[0] != next_keypoint.pt[0] or \

last_unique_keypoint.pt[1] != next_keypoint.pt[1] or \

last_unique_keypoint.size != next_keypoint.size or \

last_unique_keypoint.angle != next_keypoint.angle:

unique_keypoints.append(next_keypoint)

return unique_keypoints

def convertKeypointsToInputImageSize(keypoints):

"""将关键点位置转换到原图的位置

"""

converted_keypoints = []

for keypoint in keypoints:

keypoint.pt = tuple(0.5 * array(keypoint.pt))

keypoint.size *= 0.5

keypoint.octave = (keypoint.octave & ~255) | ((keypoint.octave - 1) & 255)

converted_keypoints.append(keypoint)

return converted_keypoints

def unpackOctave(keypoint):

"""从关键点中计算组、层和尺度

"""

octave = keypoint.octave & 255

layer = (keypoint.octave >> 8) & 255

if octave >= 128:

octave = octave | -128

scale = 1 / float32(1 << octave) if octave >= 0 else float32(1 << -octave)

return octave, layer, scale

def generateDescriptors(keypoints, gaussian_images, window_width=4, num_bins=8, scale_multiplier=3,

descriptor_max_value=0.2):

"""为每个关键点生成描述符

keypoints:关键点

gaussian_images:高斯金字塔图像

window_width:关键点附近区域长度

num_bins:8个方向的梯度直方图

scale_multiplier:求取极值的尺度多少

descriptor_max_value:描述符最大值

"""

descriptors = []

for keypoint in keypoints:

octave, layer, scale = unpackOctave(keypoint)

gaussian_image = gaussian_images[octave + 1, layer]

num_rows, num_cols = gaussian_image.shape

point = round(scale * array(keypoint.pt)).astype('int')

bins_per_degree = num_bins / 360.

angle = 360. - keypoint.angle

cos_angle = cos(deg2rad(angle))

sin_angle = sin(deg2rad(angle))

weight_multiplier = -0.5 / ((0.5 * window_width) ** 2)

row_bin_list = []

col_bin_list = []

magnitude_list = []

orientation_bin_list = []

# 前两个维度增加了2,以考虑边界效应

histogram_tensor = zeros((window_width + 2, window_width + 2,

num_bins))

hist_width = scale_multiplier * 0.5 * scale * keypoint.size

half_width = int(

round(hist_width * sqrt(2) * (window_width + 1) * 0.5)) # sqrt(2) corresponds to diagonal length of a pixel

half_width = int(min(half_width, sqrt(num_rows ** 2 + num_cols ** 2))) # ensure half_width lies within image

# 坐标轴旋转至主方向

for row in range(-half_width, half_width + 1):

for col in range(-half_width, half_width + 1):

row_rot = col * sin_angle + row * cos_angle

col_rot = col * cos_angle - row * sin_angle

row_bin = (row_rot / hist_width) + 0.5 * window_width - 0.5

col_bin = (col_rot / hist_width) + 0.5 * window_width - 0.5

if row_bin > -1 and row_bin < window_width and col_bin > -1 and col_bin < window_width:

window_row = int(round(point[1] + row))

window_col = int(round(point[0] + col))

if window_row > 0 and window_row < num_rows - 1 and window_col > 0 and window_col < num_cols - 1:

dx = gaussian_image[window_row, window_col + 1] - gaussian_image[window_row, window_col - 1]

dy = gaussian_image[window_row - 1, window_col] - gaussian_image[window_row + 1, window_col]

gradient_magnitude = sqrt(dx * dx + dy * dy)

gradient_orientation = rad2deg(arctan2(dy, dx)) % 360

weight = exp(weight_multiplier * ((row_rot / hist_width) ** 2 + (col_rot / hist_width) ** 2))

row_bin_list.append(row_bin)

col_bin_list.append(col_bin)

magnitude_list.append(weight * gradient_magnitude)

orientation_bin_list.append((gradient_orientation - angle) * bins_per_degree)

for row_bin, col_bin, magnitude, orientation_bin in zip(row_bin_list, col_bin_list, magnitude_list,

orientation_bin_list):

# 三线性差值平滑

row_bin_floor, col_bin_floor, orientation_bin_floor = floor([row_bin, col_bin, orientation_bin]).astype(int)

row_fraction, col_fraction, orientation_fraction = row_bin - row_bin_floor, col_bin - col_bin_floor, orientation_bin - orientation_bin_floor

if orientation_bin_floor < 0:

orientation_bin_floor += num_bins

if orientation_bin_floor >= num_bins:

orientation_bin_floor -= num_bins

c1 = magnitude * row_fraction

c0 = magnitude * (1 - row_fraction)

c11 = c1 * col_fraction

c10 = c1 * (1 - col_fraction)

c01 = c0 * col_fraction

c00 = c0 * (1 - col_fraction)

c111 = c11 * orientation_fraction

c110 = c11 * (1 - orientation_fraction)

c101 = c10 * orientation_fraction

c100 = c10 * (1 - orientation_fraction)

c011 = c01 * orientation_fraction

c010 = c01 * (1 - orientation_fraction)

c001 = c00 * orientation_fraction

c000 = c00 * (1 - orientation_fraction)

histogram_tensor[row_bin_floor + 1, col_bin_floor + 1, orientation_bin_floor] += c000

histogram_tensor[row_bin_floor + 1, col_bin_floor + 1, (orientation_bin_floor + 1) % num_bins] += c001

histogram_tensor[row_bin_floor + 1, col_bin_floor + 2, orientation_bin_floor] += c010

histogram_tensor[row_bin_floor + 1, col_bin_floor + 2, (orientation_bin_floor + 1) % num_bins] += c011

histogram_tensor[row_bin_floor + 2, col_bin_floor + 1, orientation_bin_floor] += c100

histogram_tensor[row_bin_floor + 2, col_bin_floor + 1, (orientation_bin_floor + 1) % num_bins] += c101

histogram_tensor[row_bin_floor + 2, col_bin_floor + 2, orientation_bin_floor] += c110

histogram_tensor[row_bin_floor + 2, col_bin_floor + 2, (orientation_bin_floor + 1) % num_bins] += c111

descriptor_vector = histogram_tensor[1:-1, 1:-1, :].flatten() # Remove histogram borders

# 设定阈值,归一化描述符

threshold = norm(descriptor_vector) * descriptor_max_value

descriptor_vector[descriptor_vector > threshold] = threshold

descriptor_vector /= max(norm(descriptor_vector), float_tolerance)

descriptor_vector = round(512 * descriptor_vector)

descriptor_vector[descriptor_vector < 0] = 0

descriptor_vector[descriptor_vector > 255] = 255

descriptors.append(descriptor_vector)

return array(descriptors, dtype='float32')

sift_match.py

def sift_match(img1, img2):

MIN_MATCH_COUNT = 10 # 最少匹配10处

# 计算特征点和描述符

# kp1, des1 = pysift.computeKeypointsAndDescriptors(img1)

# kp2, des2 = pysift.computeKeypointsAndDescriptors(img2)

# 利用cv2自带SIFT方法计算特征点和描述符,速度较快

sift = cv2.xfeatures2d.SIFT_create()

kp1, des1 = sift.detectAndCompute(img1, None)

kp2, des2 = sift.detectAndCompute(img2, None)

FLANN_INDEX_KDTREE = 0

index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)

search_params = dict(checks=50)

flann = cv2.FlannBasedMatcher(index_params, search_params)

matches = flann.knnMatch(des1, des2, k=2)

good = []

for m, n in matches:

if m.distance < 0.7 * n.distance:

good.append(m)

if len(good) > MIN_MATCH_COUNT:

src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)

dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)

M = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)[0]

h, w = img1.shape

pts = np.float32([[0, 0],

[0, h - 1],

[w - 1, h - 1],

[w - 1, 0]]).reshape(-1, 1, 2)

dst = cv2.perspectiveTransform(pts, M)

img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)

h1, w1 = img1.shape

h2, w2 = img2.shape

nWidth = w1 + w2

nHeight = max(h1, h2)

hdif = int((h2 - h1) / 2)

newimg = np.zeros((nHeight, nWidth, 3), np.uint8)

for i in range(3):

newimg[hdif:hdif + h1, :w1, i] = img1

newimg[:h2, w1:w1 + w2, i] = img2

for m in good:

pt1 = (int(kp1[m.queryIdx].pt[0]), int(kp1[m.queryIdx].pt[1] + hdif))

pt2 = (int(kp2[m.trainIdx].pt[0] + w1), int(kp2[m.trainIdx].pt[1]))

cv2.line(newimg, pt1, pt2, (255, 0, 0))

plt.imshow(newimg)

plt.show()

else:

print("Not enough matches are found - %d/%d" % (len(good), MIN_MATCH_COUNT))