Data Mining: Supervised Learning (Regression)


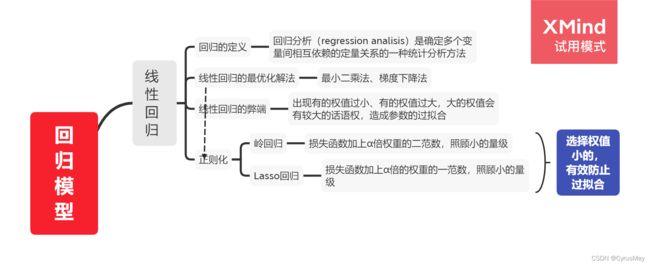

- 1 Linear Regression Models
  - 1.1 Linear Regression
  - 1.2 Ridge Regression
  - 1.3 Lasso Regression
- 2 Logistic Regression
- 3 Regression Trees and Boosted Trees
- 4 Summary

1 Linear Regression Models

1.1 Linear Regression

```python
from sklearn.linear_model import LinearRegression

LR_model = LinearRegression()
```

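- `LR_model.intercept_`: the intercept (bias) term, available after calling `LR_model.fit(X, y)`

- `LR_model.coef_`: the weights (one coefficient per feature), available after fitting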
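As a quick check of what these two attributes hold, here is a minimal sketch that fits `LinearRegression` on synthetic, noise-free data. The data are an illustrative assumption generated from y = 3·x1 - 2·x2 + 5. Because there is no noise, `coef_` and `intercept_` recover those numbers.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data (illustrative assumption): y = 3*x1 - 2*x2 + 5, no noise
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = 3 * X[:, 0] - 2 * X[:, 1] + 5

LR_model = LinearRegression()
LR_model.fit(X, y)

print("coef:", LR_model.coef_)            # approximately [ 3. -2.]
print("intercept:", LR_model.intercept_)  # approximately 5.0
```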

1.2 Ridge Regression

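```python
from sklearn.linear_model import Ridge

# Default values shown for the parameters described below
# (tol defaults to 1e-4 in recent releases, 1e-3 in older ones)
Ridge_model = Ridge(alpha=1.0, max_iter=None, tol=1e-4, solver="auto")
```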

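- alpha: regularization strength (default 1.0). It must be non-negative, and larger values mean stronger regularization. Ridge minimizes ||y - Xw||² + alpha·||w||².

- max_iter: maximum number of iterations for the iterative solvers. The default is None, which lets each solver use its own limit (for example 1000 for 'sag').

- tol: precision of the solution (convergence tolerance). The default is 1e-4 in recent scikit-learn releases and 1e-3 in older ones.

- solver: solving algorithm, one of {'auto', 'svd', 'cholesky', 'lsqr', 'sparse_cg', 'sag', 'saga'}. The default is 'auto', which chooses a solver based on the type of data.

- random_state: int, RandomState instance, default=None. This is the seed used to shuffle the data when solver is 'sag' or 'saga'.

- Ridge_model.n_iter_: a fitted attribute holding the actual number of iterations per target. It is only set by some iterative solvers, such as 'sag' and 'lsqr'. Other solvers leave it as None.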
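To make the role of alpha concrete, the following sketch checks `Ridge` against the closed-form ridge solution on synthetic data. The data and the alpha values are illustrative assumptions. With the default `fit_intercept=True`, the data are centered first, so `coef_` should equal (XcᵀXc + alpha·I)⁻¹Xcᵀyc. The loop at the end shows the coefficient norm shrinking as alpha grows.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic regression data (illustrative assumption)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=50)

alpha = 10.0
Ridge_model = Ridge(alpha=alpha).fit(X, y)

# Closed-form ridge solution on centered data: w = (Xc^T Xc + alpha*I)^-1 Xc^T yc
Xc = X - X.mean(axis=0)
yc = y - y.mean()
w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
b = y.mean() - X.mean(axis=0) @ w
print("sklearn:", Ridge_model.coef_, Ridge_model.intercept_)
print("closed form:", w, b)

# Larger alpha -> coefficients shrink towards zero
norms = [np.linalg.norm(Ridge(alpha=a).fit(X, y).coef_) for a in (0.1, 10.0, 1000.0)]
print("coefficient norms:", norms)
```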

1.3 Lasso Regression

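```python
from sklearn.linear_model import Lasso

# Lasso is fitted by coordinate descent, so unlike Ridge it has no `solver` parameter;
# the values shown are the defaults
Lasso_model = Lasso(alpha=1.0, max_iter=1000, tol=1e-4)
```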
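Lasso's documented objective is (1/(2·n_samples))·||y - Xw||² + alpha·||w||₁. The L1 term can drive coefficients exactly to zero, whereas Ridge only shrinks them. The sketch below illustrates this on synthetic data where, by assumption, only the first two of five features influence y. The alpha value of 0.5 is also illustrative.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

# Synthetic data (illustrative assumption): only features 0 and 1 affect y
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 4 * X[:, 0] - 3 * X[:, 1] + rng.normal(scale=0.1, size=200)

Lasso_model = Lasso(alpha=0.5).fit(X, y)
Ridge_model = Ridge(alpha=0.5).fit(X, y)

print("lasso coef:", Lasso_model.coef_)  # irrelevant features end up exactly 0
print("ridge coef:", Ridge_model.coef_)  # irrelevant features small but non-zero
```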

2 Logistic Regression

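```python
from sklearn.linear_model import LogisticRegression

# Default values shown for the parameters described below
logistic_model = LogisticRegression(penalty="l2", tol=1e-4, C=1.0, max_iter=100, solver="lbfgs")
```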

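- penalty: the regularization penalty. "l1" uses the L1-norm penalty and "l2" uses the L2-norm penalty (default "l2"). "l1" is only supported by the 'liblinear' and 'saga' solvers.

- C: inverse of the regularization strength, default 1.0. Smaller values mean stronger regularization.

- solver: {'newton-cg', 'lbfgs', 'liblinear', 'sag', 'saga'}, default='lbfgs'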
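Despite its name, logistic regression is a classifier. The sketch below, on synthetic binary data (an illustrative assumption), shows three things. First, a small C produces smaller coefficients than a large C. Second, `penalty="l1"` needs a compatible solver such as 'liblinear'. Third, `predict_proba` returns class probabilities. The C values are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Synthetic binary classification data (illustrative assumption)
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 3))
logits = 2 * X[:, 0] - X[:, 1]
y = (rng.random(300) < 1 / (1 + np.exp(-logits))).astype(int)

# Smaller C = stronger regularization = smaller coefficients
weak = LogisticRegression(C=100.0).fit(X, y)
strong = LogisticRegression(C=0.01).fit(X, y)
print("||coef|| with C=100 :", np.linalg.norm(weak.coef_))
print("||coef|| with C=0.01:", np.linalg.norm(strong.coef_))

# "l1" requires a compatible solver such as "liblinear" or "saga"
l1_model = LogisticRegression(penalty="l1", C=0.1, solver="liblinear").fit(X, y)
print("l1 coef:", l1_model.coef_)

# Class probabilities for the first five samples; each row sums to 1
proba = strong.predict_proba(X[:5])
print(proba)
```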

3 Regression Trees and Boosted Trees

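```python
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.ensemble import GradientBoostingRegressor

# Classifier; the values shown are the defaults
GradientBoostingClassifier(max_depth=3, n_estimators=100, learning_rate=0.1, criterion="friedman_mse")

# Regressor; accepts the same parameters
GradientBoostingRegressor()
```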

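- max_depth: default 3. This is the maximum depth of each individual regression tree, which limits the number of nodes in the tree.

- n_estimators: the number of boosting stages (trees), default 100.

- learning_rate: the learning rate, default 0.1. It shrinks the contribution of each tree, and a smaller value usually needs a larger n_estimators.

- criterion: the function used to measure the quality of a split, not an optimization algorithm. The default is "friedman_mse", and "squared_error" is the alternative.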
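The core idea behind the boosted-tree model can be sketched in a few lines for squared-error loss. Start from the mean of y. Fit each shallow tree to the current residuals, which are the negative gradient of the squared error. Then add the tree's output scaled by learning_rate. This is a simplified illustration, not scikit-learn's exact implementation; the real estimator, for instance, uses the "friedman_mse" split criterion. `boost_fit` and `boost_predict` are hypothetical helpers, and the data are synthetic.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def boost_fit(X, y, n_estimators=50, learning_rate=0.1, max_depth=3):
    """Hypothetical helper: gradient boosting for squared-error loss."""
    f0 = float(np.mean(y))                 # initial prediction: the mean of y
    pred = np.full(len(y), f0)
    trees = []
    losses = [float(np.mean((y - pred) ** 2))]
    for _ in range(n_estimators):
        residual = y - pred                # negative gradient of 0.5 * squared error
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residual)              # each tree models what is still unexplained
        pred = pred + learning_rate * tree.predict(X)
        trees.append(tree)
        losses.append(float(np.mean((y - pred) ** 2)))
    return f0, trees, losses

def boost_predict(X, f0, trees, learning_rate=0.1):
    """Hypothetical helper: initial value plus the scaled output of every tree."""
    pred = np.full(X.shape[0], f0)
    for tree in trees:
        pred = pred + learning_rate * tree.predict(X)
    return pred

# Synthetic 1-D regression data (illustrative assumption)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

f0, trees, losses = boost_fit(X, y)
print("training MSE: start", losses[0], "end", losses[-1])
```

Because each tree's leaf values are residual means, every stage with 0 < learning_rate < 2 can only lower the training MSE. The real estimator records the analogous per-stage training loss in `train_score_`.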

4 Summary

```python
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression, Ridge, Lasso, LogisticRegression
from sklearn.metrics import mean_squared_error, accuracy_score, f1_score, precision_score, recall_score
from sklearn.ensemble import GradientBoostingRegressor

# Load the data (reading .xlsx files requires the openpyxl package)
features = pd.read_excel("./data.xlsx", sheet_name="features")
label = pd.read_excel("./data.xlsx", sheet_name="label")

# -------- Add the linear regression models

# Split into training, validation and test sets: 20% is held out for validation,
# then 25% of the remaining 80% for test, giving a 60/20/20 split
def data_split(x, y):
    X_tt, X_validation, Y_tt, Y_validation = train_test_split(x, y, test_size=0.2)
    X_train, X_test, Y_train, Y_test = train_test_split(X_tt, Y_tt, test_size=0.25)
    return X_train, X_validation, X_test, Y_train, Y_validation, Y_test

# Custom regression metrics: mean squared error on each split
def regression_metrics(model, X_train, X_validation, X_test, Y_train, Y_validation, Y_test):
    print("train:")
    print("\tMSE:", mean_squared_error(Y_train, model.predict(X_train)))
    print("validation:")
    print("\tMSE:", mean_squared_error(Y_validation, model.predict(X_validation)))
    print("test:")
    print("\tMSE:", mean_squared_error(Y_test, model.predict(X_test)))

# Custom classification metrics (f1/recall/precision assume binary labels)
def classifier_metrics(model, X_train, X_validation, X_test, Y_train, Y_validation, Y_test):
    def self_metrics(y_true, y_pred, name):
        print(name)
        print("\taccuracy score:", accuracy_score(y_true, y_pred))
        print("\tf1 score:", f1_score(y_true, y_pred))
        print("\trecall score:", recall_score(y_true, y_pred))
        print("\tprecision score:", precision_score(y_true, y_pred))

    self_metrics(Y_train, model.predict(X_train), "train:")
    self_metrics(Y_validation, model.predict(X_validation), "validation:")
    self_metrics(Y_test, model.predict(X_test), "test:")
    # Also print the raw predictions on the validation set
    print(model.predict(X_validation))
```

```python
# -------- Build the linear regression model
# Columns 2 and 3 of the "features" sheet are the inputs, column 1 is the target
X = features.iloc[:, [2, 3]].values
Y = features.iloc[:, 1].values
X_train, X_validation, X_test, Y_train, Y_validation, Y_test = data_split(X, Y)

LR_model = LinearRegression()
LR_model.fit(X_train, Y_train)
print("*" * 20, "LinearRegression", "*" * 20)
print("coef:", LR_model.coef_)
print("intercept:", LR_model.intercept_)
regression_metrics(LR_model, X_train, X_validation, X_test, Y_train, Y_validation, Y_test)
```

```python
# -------- Build the ridge regression model
X = features.iloc[:, [2, 3]].values
Y = features.iloc[:, 1].values
X_train, X_validation, X_test, Y_train, Y_validation, Y_test = data_split(X, Y)

# max_iter is passed as an integer: newer scikit-learn versions reject a float such as 1e6
Ridge_model = Ridge(alpha=10, max_iter=1_000_000, tol=1e-6)
Ridge_model.fit(X_train, Y_train)
print("*" * 20, "RidgeRegression", "*" * 20)
print("coef:", Ridge_model.coef_)
print("intercept:", Ridge_model.intercept_)
regression_metrics(Ridge_model, X_train, X_validation, X_test, Y_train, Y_validation, Y_test)
```

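```python
# -------- Build the Lasso regression model
X = features.iloc[:, [2, 3]].values
Y = features.iloc[:, 1].values
X_train, X_validation, X_test, Y_train, Y_validation, Y_test = data_split(X, Y)

# alpha=0 would reduce Lasso to ordinary least squares, which scikit-learn warns against
# (use LinearRegression for that); 0.1 is an illustrative positive value, not a tuned one
lasso_model = Lasso(alpha=0.1, max_iter=1_000_000, tol=1e-6)
lasso_model.fit(X_train, Y_train)
print("*" * 20, "LassoRegression", "*" * 20)
print("coef:", lasso_model.coef_)
print("intercept:", lasso_model.intercept_)
regression_metrics(lasso_model, X_train, X_validation, X_test, Y_train, Y_validation, Y_test)
```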

```python
# -------- Build the logistic regression model (a classifier)
X = features.values
# ravel() turns the single-column label DataFrame into the 1-D array that fit() expects
Y = label.values.ravel()
X_train, X_validation, X_test, Y_train, Y_validation, Y_test = data_split(X, Y)

logistic_model = LogisticRegression(penalty="l2", C=0.51)
logistic_model.fit(X_train, Y_train)
print("*" * 20, "LogisticRegression", "*" * 20)
print("coef:", logistic_model.coef_)
print("intercept:", logistic_model.intercept_)
classifier_metrics(logistic_model, X_train, X_validation, X_test, Y_train, Y_validation, Y_test)
```

```python
# -------- Build the gradient boosted regression tree model
X = features.iloc[:, [2, 3]].values
Y = features.iloc[:, 1].values
X_train, X_validation, X_test, Y_train, Y_validation, Y_test = data_split(X, Y)

GBDT = GradientBoostingRegressor(n_estimators=1000, learning_rate=0.5)
GBDT.fit(X_train, Y_train)
# train_score_[i] is the training loss after boosting stage i (one value per tree)
print("train score:", GBDT.train_score_)
regression_metrics(GBDT, X_train, X_validation, X_test, Y_train, Y_validation, Y_test)
```
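The script sets the Ridge `alpha` to 10 by hand. Since `data_split` already produces a validation set, one option is to choose alpha by validation MSE and report the test MSE only for the chosen model. Here is a minimal sketch of that idea. Because data.xlsx is not available, it uses synthetic data instead (an assumption). The candidate alphas are also assumptions, and so is the extra `random_state` argument, which was added to `data_split` for reproducibility.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Same 60/20/20 split as data_split, plus an assumed random_state argument
def data_split(x, y, random_state=None):
    X_tt, X_validation, Y_tt, Y_validation = train_test_split(
        x, y, test_size=0.2, random_state=random_state)
    X_train, X_test, Y_train, Y_test = train_test_split(
        X_tt, Y_tt, test_size=0.25, random_state=random_state)
    return X_train, X_validation, X_test, Y_train, Y_validation, Y_test

# Synthetic data standing in for data.xlsx (illustrative assumption)
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
Y = X @ np.array([2.0, -1.0]) + rng.normal(scale=0.5, size=200)
X_train, X_validation, X_test, Y_train, Y_validation, Y_test = data_split(X, Y, random_state=0)

# Score each candidate alpha on the validation set only
alphas = [0.01, 0.1, 1.0, 10.0, 100.0]
val_mse = {}
for alpha in alphas:
    model = Ridge(alpha=alpha).fit(X_train, Y_train)
    val_mse[alpha] = mean_squared_error(Y_validation, model.predict(X_validation))

# The test set is touched once, for the selected model
best_alpha = min(val_mse, key=val_mse.get)
best_model = Ridge(alpha=best_alpha).fit(X_train, Y_train)
test_mse = mean_squared_error(Y_test, best_model.predict(X_test))
print("best alpha:", best_alpha, "test MSE:", test_mse)
```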

by CyrusMay 2022 04 05