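Deploying PaddleDetection in C++: Building a DLL and Calling It

Contents

- Preface

- 1. Prerequisites

- 2. Packaging Steps

  - 2.1 Modify CMakeLists.txt

  - 2.2 Re-run the CMake build

  - 2.3 Build the DLL

- 3. Calling from C++

- 4. Calling from Python

- 5. Possible Problems

  - Changing the threshold has no effect on the results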

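Preface

The previous article covered how to deploy PaddleDetection in C++ and how to resolve some common errors. Building on that, this article explains how to package the C++ inference code as a DLL and call it from both Python and C++.

Previous article: PaddleDetection部署c++测试图片视频 (Deploying PaddleDetection in C++ to test images and videos)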

1. Prerequisites

This article uses CUDA 10.1, cuDNN 7.6.5, Visual Studio 2017, and CMake 3.17.0.

2、封装流程

2.1 修改CMakeLists.txt

- 修改文件夹 PaddleDetection/deploy/cpp下的CMakeLists.txt如下:

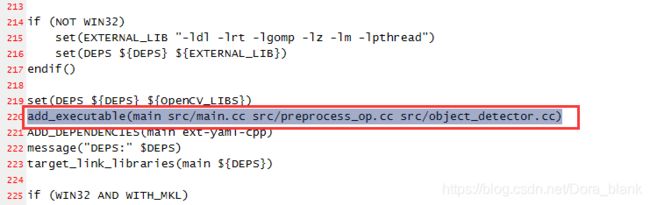

将add_executable(main src/main.cc src/preprocess_op.cc src/object_detector.cc)

改为ADD_library(main SHARED src/main.cc src/preprocess_op.cc src/object_detector.cc)

2.2 重新进行cmake编译

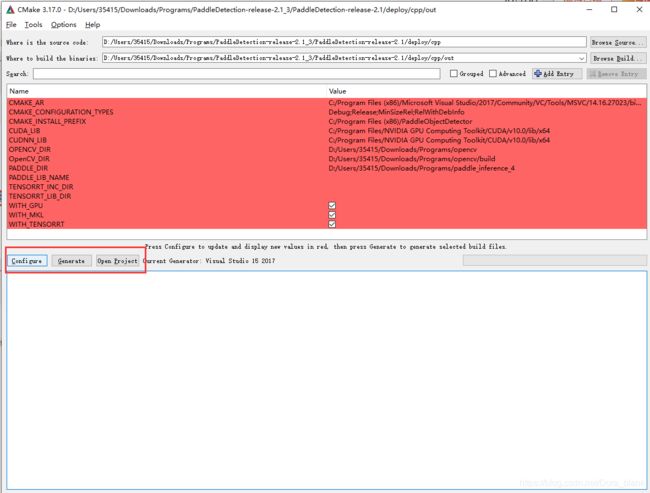

依次点击configure、Generate、Open Project

2.3 生成dll

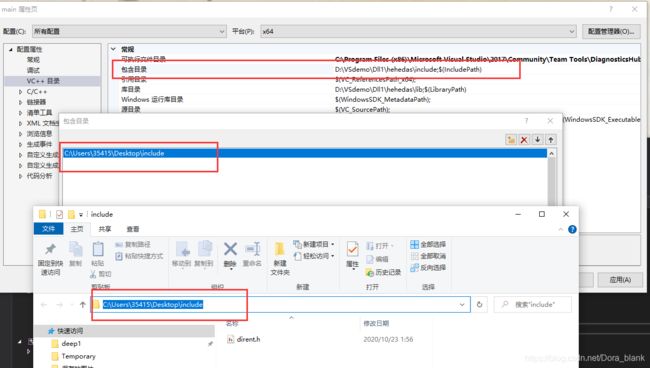

1.包含目录中添加dirent.h的路径

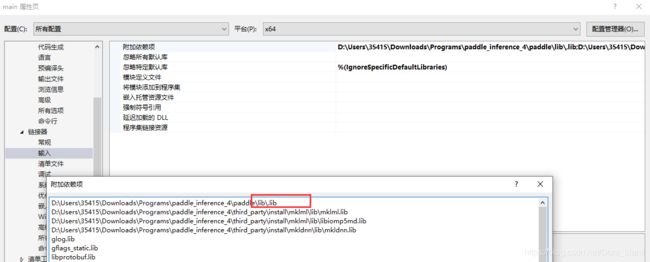

2.将附加依赖项的.lib改为paddle_inference.lib

3.生成后事件->在生成中使用改为 否

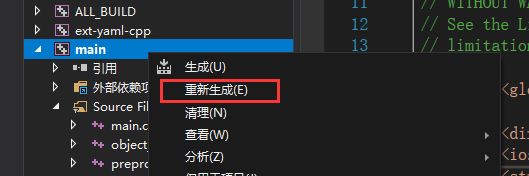

4.右击main重新生成

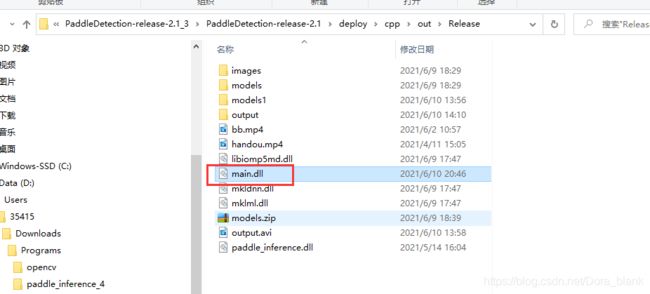

这时我们会发现在release目录下成功生成main.dll

不急,现在还不能用,继续往下看

5.将main.cc代码修改成如下形式

main.cc

// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

#include

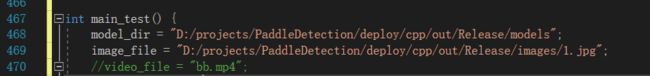

Remember to change these to your own paths: model_dir is the path to the folder containing the model, and image_file is the path to the image file.

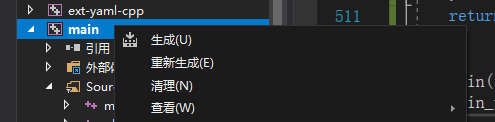

6.右键main,再次重新生成

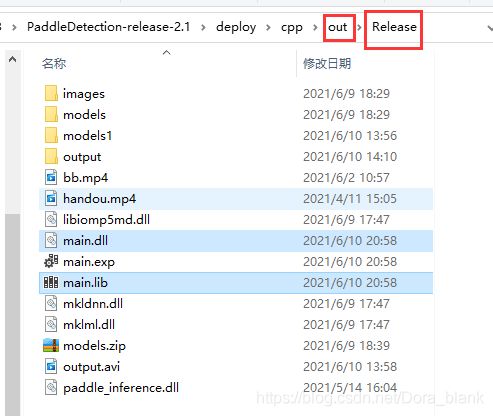

这时我们发现在out/Release目录下生成了.dll和.lib文件
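The Python call dll.main_test() in section 4 implies that the modified main.cc exposes a function named main_test with C linkage. A minimal sketch of such an export follows. The DLL_EXPORT macro and the placeholder body are assumptions made for illustration, not the author's actual main.cc; the real function would run the detector on model_dir and image_file rather than return a constant.

```cpp
#include <cstdio>

// Hypothetical export macro: with MSVC, a function has to be marked
// __declspec(dllexport) (or listed in a .def file) to be exported from the DLL.
#if defined(_WIN32)
#define DLL_EXPORT __declspec(dllexport)
#else
#define DLL_EXPORT __attribute__((visibility("default")))
#endif

// extern "C" turns off C++ name mangling, so callers can look the function
// up under the plain name "main_test".
extern "C" DLL_EXPORT int main_test() {
    // Placeholder body (assumption): the real implementation would load the
    // model from model_dir and run detection on image_file here.
    std::printf("main_test called\n");
    return 0;  // 0 signals success in this sketch
}
```

An export like this would also be consistent with the .lib file only showing up after step 6: the MSVC linker produces an import library only when the DLL exports at least one symbol.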

3、在C++中进行调用

1.新建一个项目

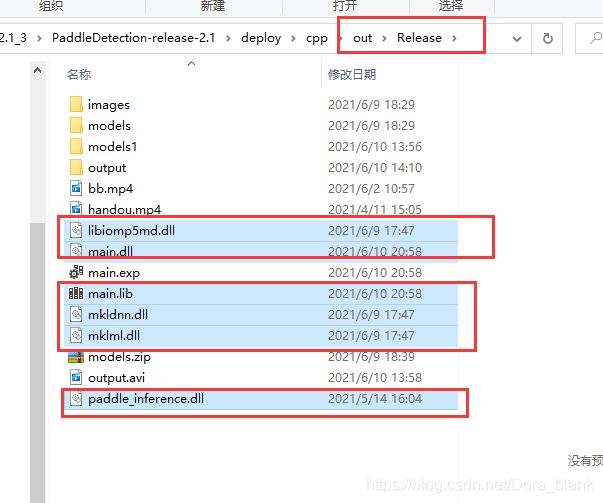

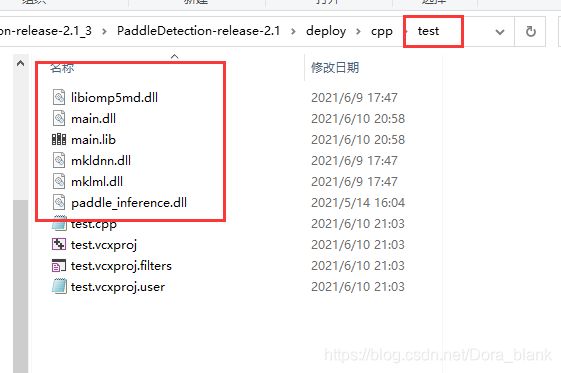

2.将out/Release目录下的如下六个文件 复制到新建的项目目录下

3.输入如下代码进行测试

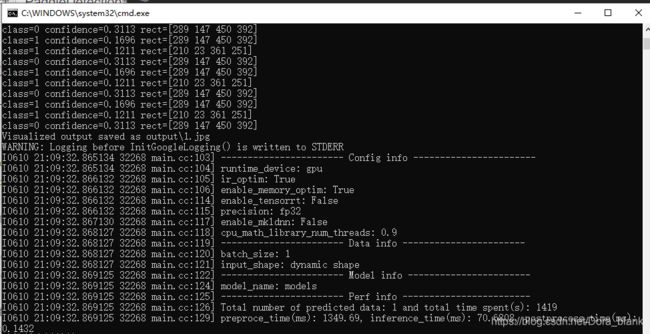

#include 如出现如下界面,则证明测试成功!

效果图

因为这里是自己随便训练的模型,所以准确率有些低 ~
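As an illustration of what a C++ caller can look like, here is a minimal sketch that loads the DLL at run time with the Win32 functions LoadLibraryA and GetProcAddress. This is a different technique from linking against the generated .lib file, which is what the copied files suggest the author did. The helper names call_entry and run_from_dll are hypothetical, and the signature of main_test (no arguments, int result) is assumed from the Python call in section 4.

```cpp
#include <cstdio>

#if defined(_WIN32)
#include <windows.h>
#endif

// Signature assumed for the exported function: no arguments, int result.
using MainTestFn = int (*)();

// Calls the entry point if it was resolved; returns -1 when it is missing.
int call_entry(MainTestFn fn) {
    if (fn == nullptr) {
        std::fprintf(stderr, "main_test could not be resolved\n");
        return -1;
    }
    return fn();
}

#if defined(_WIN32)
// Loads the DLL, resolves main_test by name, calls it, and unloads the DLL.
int run_from_dll(const char* dll_path) {
    HMODULE lib = LoadLibraryA(dll_path);
    if (lib == nullptr) {
        std::fprintf(stderr, "failed to load %s (error %lu)\n",
                     dll_path, GetLastError());
        return -1;
    }
    auto fn = reinterpret_cast<MainTestFn>(GetProcAddress(lib, "main_test"));
    int result = call_entry(fn);
    FreeLibrary(lib);
    return result;
}
#endif
```

On Windows, a test program would call run_from_dll("main.dll") from its own main function, with main.dll and the DLLs it depends on placed next to the executable. Loading at run time avoids needing the .lib at link time, at the cost of resolving the symbol by name.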

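4. Calling from Python

1. Create a new Python file and enter the following code.

from ctypes import CDLL

dll = CDLL(r"main.dll")

print(dll.main_test())

2. As before, copy the same six files into the directory containing the Python file.

3. Run the .py file.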

5、可能出现的问题

修改threshold阈值对结果没有影响

本人的解决方案:

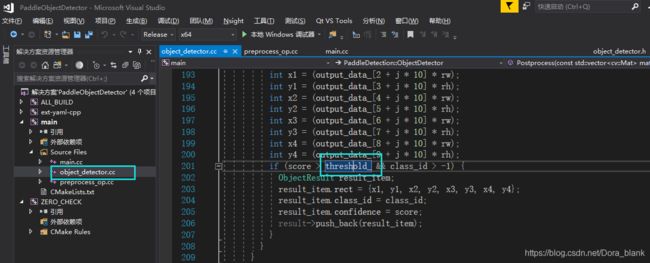

1.找到object_detector.cc文件的201行,按住ctrl键点击threshold_ 进入object_detector.h

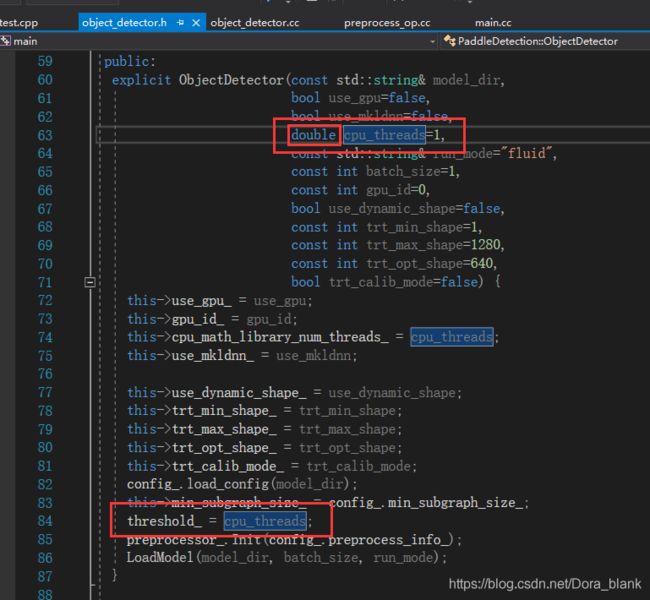

2.将object_detector.h文件的63行和84行修改成如下

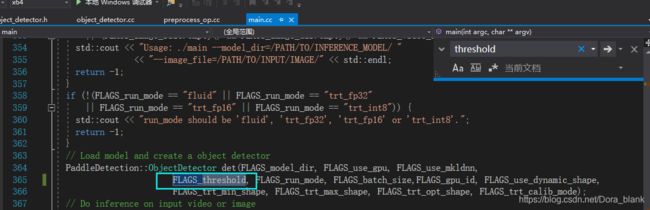

3.将main.cc修改成如下,或者直接使用我上面提供的代码。

修改之后就可以随意调整threshold设置阈值啦
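What the threshold is expected to do can be sketched independently of PaddleDetection: detections whose confidence falls below it are dropped. The Detection struct and filter_by_threshold function below are hypothetical names for this illustration and are not taken from object_detector.h.

```cpp
#include <vector>

// Hypothetical result type for this sketch; not PaddleDetection's own struct.
struct Detection {
    int class_id;
    float confidence;
};

// Returns only the detections whose confidence is at least `threshold`.
std::vector<Detection> filter_by_threshold(const std::vector<Detection>& all,
                                           float threshold) {
    std::vector<Detection> kept;
    for (const Detection& d : all) {
        if (d.confidence >= threshold) {
            kept.push_back(d);
        }
    }
    return kept;
}
```

If the value set by the caller never reaches a comparison like this one, for example because the detector keeps using a fixed member value, changing it has no visible effect, which matches the symptom described in this section.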